GPU Accelerated Approach to Numerical Linear Algebra and Matrix Analysis with CFD Applications

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Adjacency and Incidence Matrices

Adjacency and Incidence Matrices 1 / 10 The Incidence Matrix of a Graph Definition Let G = (V ; E) be a graph where V = f1; 2;:::; ng and E = fe1; e2;:::; emg. The incidence matrix of G is an n × m matrix B = (bik ), where each row corresponds to a vertex and each column corresponds to an edge such that if ek is an edge between i and j, then all elements of column k are 0 except bik = bjk = 1. 1 2 e 21 1 13 f 61 0 07 3 B = 6 7 g 40 1 05 4 0 0 1 2 / 10 The First Theorem of Graph Theory Theorem If G is a multigraph with no loops and m edges, the sum of the degrees of all the vertices of G is 2m. Corollary The number of odd vertices in a loopless multigraph is even. 3 / 10 Linear Algebra and Incidence Matrices of Graphs Recall that the rank of a matrix is the dimension of its row space. Proposition Let G be a connected graph with n vertices and let B be the incidence matrix of G. Then the rank of B is n − 1 if G is bipartite and n otherwise. Example 1 2 e 21 1 13 f 61 0 07 3 B = 6 7 g 40 1 05 4 0 0 1 4 / 10 Linear Algebra and Incidence Matrices of Graphs Recall that the rank of a matrix is the dimension of its row space. Proposition Let G be a connected graph with n vertices and let B be the incidence matrix of G. -

Parallel Rendering on Hybrid Multi-GPU Clusters

Eurographics Symposium on Parallel Graphics and Visualization (2012) H. Childs and T. Kuhlen (Editors) Parallel Rendering on Hybrid Multi-GPU Clusters S. Eilemann†1, A. Bilgili1, M. Abdellah1, J. Hernando2, M. Makhinya3, R. Pajarola3, F. Schürmann1 1Blue Brain Project, EPFL; 2CeSViMa, UPM; 3Visualization and MultiMedia Lab, University of Zürich Abstract Achieving efficient scalable parallel rendering for interactive visualization applications on medium-sized graphics clusters remains a challenging problem. Framerates of up to 60hz require a carefully designed and fine-tuned parallel rendering implementation that fits all required operations into the 16ms time budget available for each rendered frame. Furthermore, modern commodity hardware embraces more and more a NUMA architecture, where multiple processor sockets each have their locally attached memory and where auxiliary devices such as GPUs and network interfaces are directly attached to one of the processors. Such so called fat NUMA processing and graphics nodes are increasingly used to build cost-effective hybrid shared/distributed memory visualization clusters. In this paper we present a thorough analysis of the asynchronous parallelization of the rendering stages and we derive and implement important optimizations to achieve highly interactive framerates on such hybrid multi-GPU clusters. We use both a benchmark program and a real-world scientific application used to visualize, navigate and interact with simulations of cortical neuron circuit models. Categories and Subject Descriptors (according to ACM CCS): I.3.2 [Computer Graphics]: Graphics Systems— Distributed Graphics; I.3.m [Computer Graphics]: Miscellaneous—Parallel Rendering 1. Introduction Hybrid GPU clusters are often build from so called fat render nodes using a NUMA architecture with multiple The decomposition of parallel rendering systems across mul- CPUs and GPUs per machine. -

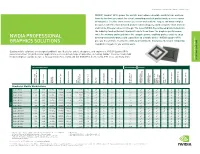

NVIDIA Professional Graphics Solutions | Line Card

PROFEssional GRAPHICS Solutions | MOBILE | LINE Card | JUN17 NVIDIA® Quadro® GPUs power the world’s most advanced mobile workstations and new form-factor devices to meet the visual computing needs of professionals across a range of industries. Creative and technical users can work with the largest and most complex designs, render the most detailed photo-realistic imagery, and develop the most intricate and lifelike VR experiences on-the-go. The latest NVIDIA Pascal based products build on the industry-leading Maxwell lineup with up to three times the graphics performance, twice the memory and nearly twice the compute power, enabling professionals to enjoy NVIDIA PROFESSIONAL desktop-level performance and capabilities on a mobile device. NVIDIA Quadro GPUs GRAPHICS SOLUTIONS give you the ultimate creative freedom, by providing the most powerful visual computing capabilities anywhere you want to work. Quadro mobile solutions are designed and built specifically for artists, designers, and engineers, NVIDIA Quadro GPUs power more than 100 professional applications across a broad range of industries, including Adobe® Creative Cloud, Avid Media Composer, Autodesk Suites, Dassault Systemes, CATIA and SOLIDWORKS, Siemens NX, PTC Creo, and many more. PROFESSIONAL GRAPHICS PROFESSIONAL CARD LINE SOLUTIONS GPU SPECIFICATIONS PERFORMANCE OPTIONS 1 2 NVIDIA CUDA NVIDIA CUDA Cores Processing GPU Memory Memory Bandwidth Memory Type Memory Interface TGP Max Power Consumption Display Port OpenGL Shader Model DirectX PCIe Generation Floating-Point Performance -

Graph Equivalence Classes for Spectral Projector-Based Graph Fourier Transforms Joya A

1 Graph Equivalence Classes for Spectral Projector-Based Graph Fourier Transforms Joya A. Deri, Member, IEEE, and José M. F. Moura, Fellow, IEEE Abstract—We define and discuss the utility of two equiv- Consider a graph G = G(A) with adjacency matrix alence graph classes over which a spectral projector-based A 2 CN×N with k ≤ N distinct eigenvalues and Jordan graph Fourier transform is equivalent: isomorphic equiv- decomposition A = VJV −1. The associated Jordan alence classes and Jordan equivalence classes. Isomorphic equivalence classes show that the transform is equivalent subspaces of A are Jij, i = 1; : : : k, j = 1; : : : ; gi, up to a permutation on the node labels. Jordan equivalence where gi is the geometric multiplicity of eigenvalue 휆i, classes permit identical transforms over graphs of noniden- or the dimension of the kernel of A − 휆iI. The signal tical topologies and allow a basis-invariant characterization space S can be uniquely decomposed by the Jordan of total variation orderings of the spectral components. subspaces (see [13], [14] and Section II). For a graph Methods to exploit these classes to reduce computation time of the transform as well as limitations are discussed. signal s 2 S, the graph Fourier transform (GFT) of [12] is defined as Index Terms—Jordan decomposition, generalized k gi eigenspaces, directed graphs, graph equivalence classes, M M graph isomorphism, signal processing on graphs, networks F : S! Jij i=1 j=1 s ! (s ;:::; s ;:::; s ;:::; s ) ; (1) b11 b1g1 bk1 bkgk I. INTRODUCTION where sij is the (oblique) projection of s onto the Jordan subspace Jij parallel to SnJij. -

Network Properties Revealed Through Matrix Functions 697

SIAM REVIEW c 2010 Society for Industrial and Applied Mathematics Vol. 52, No. 4, pp. 696–714 Network Properties Revealed ∗ through Matrix Functions † Ernesto Estrada Desmond J. Higham‡ Abstract. The emerging field of network science deals with the tasks of modeling, comparing, and summarizing large data sets that describe complex interactions. Because pairwise affinity data can be stored in a two-dimensional array, graph theory and applied linear algebra provide extremely useful tools. Here, we focus on the general concepts of centrality, com- municability,andbetweenness, each of which quantifies important features in a network. Some recent work in the mathematical physics literature has shown that the exponential of a network’s adjacency matrix can be used as the basis for defining and computing specific versions of these measures. We introduce here a general class of measures based on matrix functions, and show that a particular case involving a matrix resolvent arises naturally from graph-theoretic arguments. We also point out connections between these measures and the quantities typically computed when spectral methods are used for data mining tasks such as clustering and ordering. We finish with computational examples showing the new matrix resolvent version applied to real networks. Key words. centrality measures, clustering methods, communicability, Estrada index, Fiedler vector, graph Laplacian, graph spectrum, power series, resolvent AMS subject classifications. 05C50, 05C82, 91D30 DOI. 10.1137/090761070 1. Motivation. 1.1. Introduction. Connections are important. Across the natural, technologi- cal, and social sciences it often makes sense to focus on the pattern of interactions be- tween individual components in a system [1, 8, 61]. -

Fast Calculation of the Lomb-Scargle Periodogram Using Graphics Processing Units 3

Draft version August 7, 2018 A Preprint typeset using LTEX style emulateapj v. 11/10/09 FAST CALCULATION OF THE LOMB-SCARGLE PERIODOGRAM USING GRAPHICS PROCESSING UNITS R. H. D. Townsend Department of Astronomy, University of Wisconsin-Madison, Sterling Hall, 475 N. Charter Street, Madison, WI 53706, USA; [email protected] Draft version August 7, 2018 ABSTRACT I introduce a new code for fast calculation of the Lomb-Scargle periodogram, that leverages the computing power of graphics processing units (GPUs). After establishing a background to the newly emergent field of GPU computing, I discuss the code design and narrate key parts of its source. Benchmarking calculations indicate no significant differences in accuracy compared to an equivalent CPU-based code. However, the differences in performance are pronounced; running on a low-end GPU, the code can match 8 CPU cores, and on a high-end GPU it is faster by a factor approaching thirty. Applications of the code include analysis of long photometric time series obtained by ongoing satellite missions and upcoming ground-based monitoring facilities; and Monte-Carlo simulation of periodogram statistical properties. Subject headings: methods: data analysis — methods: numerical — techniques: photometric — stars: oscillations 1. INTRODUCTION of the newly emergent field of GPU computing; then, Astronomical time-series observations are often charac- Section 3 reviews the formalism defining the L-S peri- terized by uneven temporal sampling (e.g., due to trans- odogram, and Section 4 presents a GPU-based code im- formation to the heliocentric frame) and/or non-uniform plementing this formalism. Benchmarking calculations coverage (e.g., from day/night cycles, or radiation belt to evaluate the accuracy and performance of the code passages). -

Parallel Texture-Based Vector Field Visualization on Curved Surfaces Using GPU Cluster Computers

Eurographics Symposium on Parallel Graphics and Visualization (2006) Alan Heirich, Bruno Raffin, and Luis Paulo dos Santos (Editors) Parallel Texture-Based Vector Field Visualization on Curved Surfaces Using GPU Cluster Computers S. Bachthaler1, M. Strengert1, D. Weiskopf2, and T. Ertl1 1Institute of Visualization and Interactive Systems, University of Stuttgart, Germany 2School of Computing Science, Simon Fraser University, Canada Abstract We adopt a technique for texture-based visualization of flow fields on curved surfaces for parallel computation on a GPU cluster. The underlying LIC method relies on image-space calculations and allows the user to visualize a full 3D vector field on arbitrary and changing hypersurfaces. By using parallelization, both the visualization speed and the maximum data set size are scaled with the number of cluster nodes. A sort-first strategy with image-space decomposition is employed to distribute the workload for the LIC computation, while a sort-last approach with an object-space partitioning of the vector field is used to increase the total amount of available GPU memory. We specifically address issues for parallel GPU-based vector field visualization, such as reduced locality of memory accesses caused by particle tracing, dynamic load balancing for changing camera parameters, and the combination of image-space and object-space decomposition in a hybrid approach. Performance measurements document the behavior of our implementation on a GPU cluster with AMD Opteron CPUs, NVIDIA GeForce 6800 Ultra GPUs, and Infiniband network connection. Categories and Subject Descriptors (according to ACM CCS): I.3.3 [Computer Graphics]: Viewing algorithms I.3.3 [Three-Dimensional Graphics and Realism]: Color, shading, shadowing, and texture 1. -

Diagonal Sums of Doubly Stochastic Matrices Arxiv:2101.04143V1 [Math

Diagonal Sums of Doubly Stochastic Matrices Richard A. Brualdi∗ Geir Dahl† 7 December 2020 Abstract Let Ωn denote the class of n × n doubly stochastic matrices (each such matrix is entrywise nonnegative and every row and column sum is 1). We study the diagonals of matrices in Ωn. The main question is: which A 2 Ωn are such that the diagonals in A that avoid the zeros of A all have the same sum of their entries. We give a characterization of such matrices, and establish several classes of patterns of such matrices. Key words. Doubly stochastic matrix, diagonal sum, patterns. AMS subject classifications. 05C50, 15A15. 1 Introduction Let Mn denote the (vector) space of real n×n matrices and on this space we consider arXiv:2101.04143v1 [math.CO] 11 Jan 2021 P the usual scalar product A · B = i;j aijbij for A; B 2 Mn, A = [aij], B = [bij]. A permutation σ = (k1; k2; : : : ; kn) of f1; 2; : : : ; ng can be identified with an n × n permutation matrix P = Pσ = [pij] by defining pij = 1, if j = ki, and pij = 0, otherwise. If X = [xij] is an n × n matrix, the entries of X in the positions of X in ∗Department of Mathematics, University of Wisconsin, Madison, WI 53706, USA. [email protected] †Department of Mathematics, University of Oslo, Norway. [email protected]. Correspond- ing author. 1 which P has a 1 is the diagonal Dσ of X corresponding to σ and P , and their sum n X dP (X) = xi;ki i=1 is a diagonal sum of X. -

Przewodnik Ubuntu 14.04 LTS Trusty Tahr

Zespół Ubuntu.pl Przewodnik po Ubuntu 14.04 LTS Trusty Tahr wersja 1.0 16 maja 2014 Spis treści Spis treści Spis treści 1 Wstęp ....................2 4.15 LibreOffice — pakiet biurowy..... 77 1.1 O Ubuntu................2 4.16 Rhythmbox — odtwarzacz muzyki.. 77 1.2 Dlaczego warto zmienić system na 4.17 Totem — odtwarzacz filmów..... 79 Ubuntu?................3 5 Sztuczki z systemem Ubuntu ...... 81 2 Instalacja Ubuntu .............6 5.1 Wybór szybszych repozytoriów.... 81 2.1 Pobieranie obrazu instalatora.....6 5.2 Wyłączenie Global Menu....... 82 2.2 Nagrywanie pobranego obrazu....6 5.3 Minimalizacja aplikacji poprzez 2.3 Przygotowanie do instalacji......9 kliknięcie na jej ikonę w Launcherze. 82 2.4 Uruchomienie instalatora....... 11 5.4 Normalny wygląd pasków przewijania 83 2.5 Graficzny instalator Ubuntu..... 14 5.5 Prywatność............... 83 2.6 Partycjonowanie dysku twardego... 23 5.6 Unity Tweak Tool........... 84 2.7 Zaawansowane partycjonowanie.... 29 5.7 Ubuntu Tweak............. 84 2.8 Instalacja na maszynie wirtualnej.. 34 5.8 Instalacja nowych motywów graficznych 84 2.9 Aktualizacja z poprzedniego wydania 37 5.9 Instalacja zestawu ikon........ 85 2.10 Rozwiązywanie problemów z instalacją 37 5.10 Folder domowy na pulpicie...... 86 3 Pierwsze uruchomienie systemu .... 40 5.11 Steam.................. 86 3.1 Uruchomienie systemu Ubuntu.... 40 5.12 Wyłączenie raportowania błędów... 87 3.2 Ekran logowania............ 41 5.13 Odtwarzanie szyfrowanych płyt DVD 87 3.3 Rzut oka na pulpit Ubuntu...... 42 5.14 Przyspieszanie systemu poprzez 3.4 Instalacja oprogramowania...... 42 lepsze wykorzystanie pamięci..... 87 3.5 Rzeczy do zrobienia po instalacji 5.15 Oczyszczanie systemu........ -

Evolution of the Graphical Processing Unit

University of Nevada Reno Evolution of the Graphical Processing Unit A professional paper submitted in partial fulfillment of the requirements for the degree of Master of Science with a major in Computer Science by Thomas Scott Crow Dr. Frederick C. Harris, Jr., Advisor December 2004 Dedication To my wife Windee, thank you for all of your patience, intelligence and love. i Acknowledgements I would like to thank my advisor Dr. Harris for his patience and the help he has provided me. The field of Computer Science needs more individuals like Dr. Harris. I would like to thank Dr. Mensing for unknowingly giving me an excellent model of what a Man can be and for his confidence in my work. I am very grateful to Dr. Egbert and Dr. Mensing for agreeing to be committee members and for their valuable time. Thank you jeffs. ii Abstract In this paper we discuss some major contributions to the field of computer graphics that have led to the implementation of the modern graphical processing unit. We also compare the performance of matrix‐matrix multiplication on the GPU to the same computation on the CPU. Although the CPU performs better in this comparison, modern GPUs have a lot of potential since their rate of growth far exceeds that of the CPU. The history of the rate of growth of the GPU shows that the transistor count doubles every 6 months where that of the CPU is only every 18 months. There is currently much research going on regarding general purpose computing on GPUs and although there has been moderate success, there are several issues that keep the commodity GPU from expanding out from pure graphics computing with limited cache bandwidth being one. -

The Generalized Dedekind Determinant

Contemporary Mathematics Volume 655, 2015 http://dx.doi.org/10.1090/conm/655/13232 The Generalized Dedekind Determinant M. Ram Murty and Kaneenika Sinha Abstract. The aim of this note is to calculate the determinants of certain matrices which arise in three different settings, namely from characters on finite abelian groups, zeta functions on lattices and Fourier coefficients of normalized Hecke eigenforms. Seemingly disparate, these results arise from a common framework suggested by elementary linear algebra. 1. Introduction The purpose of this note is three-fold. We prove three seemingly disparate results about matrices which arise in three different settings, namely from charac- ters on finite abelian groups, zeta functions on lattices and Fourier coefficients of normalized Hecke eigenforms. In this section, we state these theorems. In Section 2, we state a lemma from elementary linear algebra, which lies at the heart of our three theorems. A detailed discussion and proofs of the theorems appear in Sections 3, 4 and 5. In what follows below, for any n × n matrix A and for 1 ≤ i, j ≤ n, Ai,j or (A)i,j will denote the (i, j)-th entry of A. A diagonal matrix with diagonal entries y1,y2, ...yn will be denoted as diag (y1,y2, ...yn). Theorem 1.1. Let G = {x1,x2,...xn} be a finite abelian group and let f : G → C be a complex-valued function on G. Let F be an n × n matrix defined by F −1 i,j = f(xi xj). For a character χ on G, (that is, a homomorphism of G into the multiplicative group of the field C of complex numbers), we define Sχ := f(s)χ(s). -

Nvidia Quadro T1000

NVIDIA professional laptop GPUs power the world’s most advanced thin and light mobile workstations and unique compact devices to meet the visual computing needs of professionals across a wide range of industries. The latest generation of NVIDIA RTX professional laptop GPUs, built on the NVIDIA Ampere architecture combine the latest advancements in real-time ray tracing, advanced shading, and AI-based capabilities to tackle the most demanding design and visualization workflows on the go. With the NVIDIA PROFESSIONAL latest graphics technology, enhanced performance, and added compute power, NVIDIA professional laptop GPUs give designers, scientists, and artists the tools they need to NVIDIA MOBILE GRAPHICS SOLUTIONS work efficiently from anywhere. LINE CARD GPU SPECIFICATIONS PERFORMANCE OPTIONS 2 1 ® / TXAA™ Anti- ® ™ 3 4 * 5 NVIDIA FXAA Aliasing Manager NVIDIA RTX Desktop Support Vulkan NVIDIA Optimus NVIDIA CUDA NVIDIA RT Cores Cores Tensor GPU Memory Memory Bandwidth* Peak Memory Type Memory Interface Consumption Max Power TGP DisplayPort Open GL Shader Model DirectX PCIe Generation Floating-Point Precision Single Peak)* (TFLOPS, Performance (TFLOPS, Performance Tensor Peak) Gen MAX-Q Technology 3rd NVENC / NVDEC Processing Cores Processing Laptop GPUs 48 (2nd 192 (3rd NVIDIA RTX A5000 6,144 16 GB 448 GB/s GDDR6 256-bit 80 - 165 W* 1.4 4.6 7.0 12 Ultimate 4 21.7 174.0 Gen) Gen) 40 (2nd 160 (3rd NVIDIA RTX A4000 5,120 8 GB 384 GB/s GDDR6 256-bit 80 - 140 W* 1.4 4.6 7.0 12 Ultimate 4 17.8 142.5 Gen) Gen) 32 (2nd 128 (3rd NVIDIA RTX A3000