Hypertextural Garments on Pixar's Soul

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

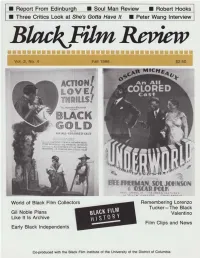

Report from Edinburgh • Soul Man Review • Robert Hooks Three Critics Look at She's Gotta Have It • Peter Wang Interview

Report From Edinburgh • Soul Man Review • Robert Hooks Three Critics Look at She's Gotta Have It • Peter Wang Interview. World of Black Film Collectors. Remembering Lorenzo Tucker, The Black Valentino. Gil Noble Plans Like It Is Archive. Film Clips and News. Early Black Independents. Co-produced with the Black Film Institute of the University of the District of Columbia. Vol. 2, No. 4/Fall 1986. Black Film Review, 10 S St., NW, Washington, DC 20001, (202) 745-0455. Editor and Publisher: David Nicholson. Consulting Editor: Tony Gittens (Black Film Institute). Associate Editor/Film Critic: Arthur Johnson. Associate Editors: Pat Aufderheide; Keith Boseman; Mark A. Reid; Saundra Sharp; A. Jacquie Taliaferro; Clyde Taylor. Contributing Editors: Bill Alexander; Carroll Parrott Blue; Roy Campanella, II; Darcy Demarco; Theresa furd; Karen Jaehne; Phyllis Klotman; Paula Matabane; Spencer Moon; Andrew Szanton; Stan West. Contents: Peter Wang Breaks Cultural Barriers, by Pat Aufderheide: An interview with the director of A Great Wall (p. 6). Remembering Lorenzo Tucker, by Roy Campanella, II: A personal reminiscence of one of the earliest stars of black film (p. 9). Quick Takes From Edinburgh, by Clyde Taylor: Filmmakers debated art and aesthetics at the Edinburgh Festival (p. 10). Film as a Force for Social Change, by Charles Burnett: Excerpts from a paper delivered at Edinburgh (p. 12). Culture of Resistance: Excerpts from a paper (p. 14). Special Section: Black Film History. Collector's Dreams, by Saundra Sharp: Black film collectors seek to reclaim pieces of lost heritage (p. 16). With a report on efforts to establish the Like It Is archive p. -

(LACMA) Hosted Its Ninth Annual Art+Film Gala on Saturday, November 2, 2019, Honoring Artist Betye Saar and Filmmaker Alfonso Cuarón

(Los Angeles, November 3, 2019)—The Los Angeles County Museum of Art (LACMA) hosted its ninth annual Art+Film Gala on Saturday, November 2, 2019, honoring artist Betye Saar and filmmaker Alfonso Cuarón. Co-chaired by LACMA trustee Eva Chow and actor Leonardo DiCaprio, the evening brought together more than 800 distinguished guests from the art, film, fashion, and entertainment industries, among others. This year’s gala raised more than $4.6 million, with proceeds supporting LACMA’s film initiatives and future exhibitions, acquisitions, and programming. The 2019 Art+Film Gala was made possible through Gucci’s longstanding and generous partnership. Additional support for the gala was provided by Audi. Eva Chow, co-chair of the Art+Film Gala, said, “I’m so happy that we have outdone ourselves again with the most successful Art+Film Gala yet. It was such a joy to celebrate Betye Saar and Alfonso Cuarón’s incredible creativity and passion, while supporting LACMA’s art and film initiatives. I couldn’t be more grateful to Alessandro Michele, Marco Bizzarri, and everyone at Gucci—our invaluable partner since the first Art+Film Gala—and to Anderson .Paak and The Free Nationals for making this evening one to remember.” “I’m deeply grateful to our returning co-chairs Eva Chow and Leonardo DiCaprio for helping us set another Art+Film Gala record,” said Michael Govan, LACMA CEO and Wallis Annenberg Director. “We honored two incredibly powerful artistic voices this year. Betye Saar has helped define the genre of Assemblage art for nearly seven decades, and recognition of her as one of the most important and influential artists working today is long overdue. -

Reconsidering the Theoretical/Practical Divide: the Philosophy of Nishida Kitarō

University of Mississippi eGrove Electronic Theses and Dissertations Graduate School 2013 Reconsidering The Theoretical/Practical Divide: The Philosophy Of Nishida Kitarō Lockland Vance Tyler University of Mississippi Follow this and additional works at: https://egrove.olemiss.edu/etd Part of the Philosophy Commons Recommended Citation Tyler, Lockland Vance, "Reconsidering The Theoretical/Practical Divide: The Philosophy Of Nishida Kitarō" (2013). Electronic Theses and Dissertations. 752. https://egrove.olemiss.edu/etd/752 This Thesis is brought to you for free and open access by the Graduate School at eGrove. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of eGrove. For more information, please contact [email protected]. RECONSIDERING THE THEORETICAL/PRACTICAL DIVIDE: THE PHILOSOPHY OF NISHIDA KITARŌ A Thesis presented in partial fulfillment of requirements for the degree of Master of Arts in the Department of Philosophy University of Mississippi by LOCKLAND V. TYLER APRIL 2013 Copyright Lockland V. Tyler 2013 ALL RIGHTS RESERVED ABSTRACT Over the years professional philosophy has undergone a number of significant changes. One of these changes corresponds to an increased emphasis on objectivity among philosophers. In light of new discoveries in logic and science, contemporary analytic philosophy seeks to establish the most objective methods and answers possible to advance philosophical progress in an unambiguous way. By doing so, we are able to more precisely analyze concepts, but the increased emphasis on precision has also been accompanied by some negative consequences. These consequences, unfortunately, are much larger and more problematic than many may even realize. What we have eventually arrived at in contemporary Anglo-American analytic philosophy is a complete repression of humanistic concerns. -

Jon Batiste and Stay Human's

WIN! A $3,695 BUCKS COUNTY/ZILDJIAN PACKAGE. THE WORLD’S #1 DRUM MAGAZINE. 6 WAYS TO PLAY SMOOTHER ROLLS. BUILD YOUR OWN COCKTAIL KIT. Jon Batiste and Stay Human’s Joe Saylor: Late-Night Deep Grooves. 40 Years of Excellence. CLIFF ALMOND: CAMILO, KRANTZ, AND BEYOND. KEVIN MARCH: ROBERT POLLARD’S GO-TO GUY. HUGH GRUNDY AND HIS ZOMBIES “ODESSEY”. APRIL 2016. 12 Modern Drummer June 2014. “It is balanced, it is powerful. It is the Wicked Piston!” Mike Mangini, Dream Theater. VHMMWP: L 16 3/4" (42.55 cm), D .580" (1.47 cm). Unique top-weighted design. Mike Mangini’s new unique design starts out at .580” in the grip and increases slightly towards the middle of the stick until it reaches .620” and then tapers back down to an acorn tip. Mike’s reason for this design is so that the stick has a slightly added front weight for a solid, consistent “throw” and transient sound. With the extra length, you can adjust how much front weight you’re implementing by slightly moving your fulcrum point up or down on the stick. You’ll also get a fat sounding rimshot crack from the added front weighted taper. Hickory. #SWITCHTOVATER See a full video of Mike explaining the Wicked Piston at vater.com. 270 Centre Street | Holbrook, MA 02343 | 1.781.767.1877 | [email protected] VATER.COM -

An Analysis of Sound and Music in The Shining and Bridget Jones' Diary

Pace University DigitalCommons@Pace Honors College Theses Pforzheimer Honors College 2019 The Sounds Behind the Scenes: An Analysis of Sound and Music in The Shining and Bridget Jones’ Diary Abby Fox Follow this and additional works at: https://digitalcommons.pace.edu/honorscollege_theses Part of the Communication Commons, and the Film and Media Studies Commons Running head: THE SOUNDS BEHIND THE SCENES The Sounds Behind the Scenes: An Analysis of Sound and Music in The Shining and Bridget Jones’ Diary Abby Fox Communication Studies Advisor: Satish Kolluri December 2018 Abstract: Although often subtle, music and sounds have been an integral part of the motion picture experience since the days of silent films. When used effectively, sounds in film can create emotion, give meaning to a character’s actions or feelings, act as a cue to direct viewers’ attention to certain characters or settings, and help to enhance the overall storytelling. Sound essentially acts as a guide for the audience as it evokes certain emotions and reactions to accompany the narrative unfolding on-screen. The aim of this thesis is to focus on two specific films of contrasting genres and explore how the music and sounds help to establish the genres of the given films, as well as note any commonalities in which the films follow similar aural patterns. By conducting a content analysis and theoretical review, this study situates the sound use among affect theory, aesthetics and psychoanalysis, which are all highly relevant to the reception of music and sound in film. Findings consist of analyses of notable instances of sound use in both films that concentrate on the tropes of juxtaposition, contradiction, repetition and leitmotifs, and results indicate that there are actually similar approaches to using sound and music in both films, despite them falling under two vastly different genres. -

Jon Batiste - We Are Episode 205

Song Exploder Jon Batiste - We Are Episode 205 Hrishikesh: You’re listening to Song Exploder, where musicians take apart their songs and piece by piece tell the story of how they were made. My name is Hrishikesh Hirway. (“We Are” by JON BATISTE) Hrishikesh: Jon Batiste is a pianist, songwriter, and composer from New Orleans. He’s been nominated for multiple Grammys, and just won the Golden Globe and got an Oscar nomination for the soundtrack to the Pixar film Soul, which he composed along with Trent Reznor and Atticus Ross. Jon is also a recipient of the American Jazz Museum’s lifetime achievement award, and on weeknights, you can see him as the bandleader on The Late Show with Stephen Colbert. In March 2021, he put out his new album, We Are. But the title track from it actually came out much earlier last year in June 2020. Jon: We put the song out during the Black Lives Matter protest before it was even finished being mixed and mastered. Hrishikesh: In this episode, Jon talks about how he drew from his roots, both at a personal level and at a cultural level, and wove all of it into the song. Jon: I’m Jon Batiste. (Music fades out) Jon: So I started working on this song in September of 2019 with Kizzo, a great producer from the Netherlands, and Autumn Rowe. We met up in New York City. And this is pre-COVID, my life is busy - I'm doing the Late Show, I'm writing the score for Soul. -

Nederlands Film Festival

SHOCK HEAD SOUL THE SPUTNIK EFFECT Simon Pummell PRESS BOOK 12 / 02 / 12 INTERVIEWS TRAILERS LINKS SHOCK HEAD SOUL TRAILER Autlook Film Sales http://vimeo.com/36138859 VENICE FILM FESTIVAL PRESS CONFERENCE http://www.youtube.com/watch?v=xwqGDdQbkqE SUBMARINE TRANSMEDIA PROFILES Profiles of significant current transmedia projects http://www.submarinechannel.com/transmedia/simon-pummell/ GRAZEN WEB TV IFFR Special http://www.youtube.com/watch?v=BrxtxmsWtbE SHOCK HEAD SOUL SECTION of the PROGRAMME http://www.youtube.com/watch?v=-se5L0G7pzM&feature=related BFI LFF ONLINE SHOCK HEAD SOUL LFF NOTES http://www.youtube.com/watch?v=5xX6iceTG8k The NOS radio interview was broadcast and can be listened to online: http://nos.nl/audio/335923‐sputnik‐effect‐en‐shock‐head‐soul.html. NRC TOP FIVE PICJ IFFR http://www.nrc.nl/nieuws/2012/01/19/kaartverkoop-gaat-van-start-haal-het-maximale-uit-het-iffr/ SHOCK HEAD SOUL selected for the Venice Film Festival... http://www.filmfestival.nl/nl/festival/nieuws/nederlandse-film... SHOCK HEAD SOUL selected for the Venice Film Festival. 28 July 2011. The Dutch/English co-production SHOCK HEAD SOUL by director Simon Pummell, with Hugo Koolschijn, Anniek Pheifer, Thom Hoffman, and Jochum ten Haaf, among others, has been selected for the 68th edition of the prestigious Venice Film Festival, in the Orizzonti competition programme. The festival takes place from 31 August through 10 September 2011. Synopsis: Schreber was a successful German lawyer who in 1893 began receiving messages from God via a ‘typewriter’ that spanned the cosmos. He spent the following nine years in an asylum, tormented by delusions of cosmic control and suffering from the idea that he was slowly changing sex. -

A Journey to the Soul of Jon Batiste

In Tune Activity Plans – Volume 18, Issue 5 Cover Story: A Journey To The Soul of Jon Batiste National Standards: 7-8, 11 Jon Batiste grew up in a New Orleans jazz family of top teachers and performers. He has a master's degree from Juilliard and a weeknight gig as musical director on The Late Show with Stephen Colbert, and he is the music director for The Atlantic and Co-Artistic Director of the National Jazz Museum in Harlem. His demanding performance schedule has taken him to 40 countries, and often includes his signature ‘love riot’ street parades. He’s also one of the musical minds behind the music of Pixar’s new animated film Soul. He named his band “Stay Human” and believes in the power of interpersonal connection, saying that music has the power to elevate the spirit. Although he records and appears in the media, he places an outsized importance on live performance, frequently bringing his band into the street for New Orleans style parades. His band once recorded an entire album in the New York City subway. Prepare: Have students listen to some of Batiste’s music created for the Pixar film Soul. Discuss his style of jazz and compare it to “free jazz,” bebop and Dixieland. Which album cuts are reminiscent of “New Orleans” jazz and why? Key points in the article: • Batiste comes from a famous family of New Orleans jazz musicians • He has toured, taught, and recorded, and his band performs on the nightly talk show The Late Show with Stephen Colbert. • He believes that there is great value in artists directly connecting with their audiences in live environments and plays what he calls “social music.” • He plays the melodica Begin: Review vocabulary words from the article: • JUILLIARD: The Juilliard School is a highly regarded performing arts college in New York City. -

Flashdance! Never Trust Spiritual Leader Who Cannot Dance

I see dance being used as communication between body and soul, to express what is too deep to find for words. ~ Ruth St. Denis Part 8: Flashdance! Never trust spiritual leader who cannot dance. ~ Mr. Miyagi, The Next Karate Kid …a reverent heart and a dancing foot can belong to the same person. David had both. May we have the same. ~ Max Lucado …So David went there and brought the Ark of God from the house of Obed-edom to the City of David with a great celebration. ~ 2 Samuel 6:12b NLT The transfer of the ark to Jerusalem was to place God at the center of their national life, making Jerusalem the central site of worship of the Lord. ~ Mark J. Boda God is with us. That’s reason to celebrate. ~ Max Lucado And David danced before the LORD with all his might, wearing a priestly garment. So David and all the people of Israel brought up the Ark of the LORD with shouts of joy and the blowing of rams’ horns. But as the Ark of the LORD entered the City of David, Michal, the daughter of Saul, looked down from her window. When she saw King David leaping and dancing before the LORD, she was filled with contempt for him. ~ 2 Samuel 6:16 NLT For Michal there was an acceptable way of receiving back the Ark and David had chosen to ignore it. But this was more than a clash of opinions over good manners. There was an issue between them over dignity. ~ Ian Coffey David reminds her that his behavior is defined by the -

Reznor Reveals Individuality (CD Review)

SIGNAL Tuesday, April 1, Features 25. Reznor reveals individuality. CD review: Trent Reznor, Producer, "Lost Highway" soundtrack. By Tommy Lee Jones, III, Staff writer. Trent Reznor has come a long way from playing the tuba in his high school marching band. Reznor was once the dorkiest kid in school. Now, he is among the coolest guys in the world. He has done work on Oliver Stone's soundtrack, released CDs of artistically epic proportions, and sparked a music revolution towards industrial music as well as inspired artists like Poe to do what Reznor does. He composes his music using the computer, by himself, then hires people to perform with him onstage. Reznor has a new honor to add to his long list of achievements. He has compiled another movie soundtrack for David Lynch's movie, "Lost Highway." Reznor changes his ways a bit on this soundtrack. The music is a little more refined and a little less in-the-dark erratic. Reznor has spoken about deviating from his project known as Nine Inch Nails. On this soundtrack, Reznor sings several songs under his own name rather than the pseudonym Nine Inch Nails. Reznor's soundtrack reminds those more familiar with classical music, of the energy and passion as well as creative ingenuity which is characterized with old school composers. [Photo caption: Jason Kay (bottom left) and fellow Jamiroquai members worked together to release latest album, "Travelling Without Moving."] Jamiroquai does some soul travelling on "Moving". CD review: Jamiroquai, "Travelling Without Moving". ets in the path of smooth guitar and bass grooves on "Virtual Insanity" and "You Are -

John Barry Aaron Copland Mark Snow, John Ottman & Harry Gregson

Volume 10, Number 3. Original Music Soundtracks for Movies and Television. HOLY CATS! pg. 24. The Circle Is Complete: John Williams wraps up the saga that started it all. John Barry: Psyched out in the 1970s. Aaron Copland: Betrayed by Hollywood? Mark Snow, John Ottman & Harry Gregson-Williams discuss their latest projects. Are You a Nerd? Take FSM’s first soundtrack quiz! $7.95 U.S. • $8.95 Canada. May/June 2005. FILM SCORE MAGAZINE 3 MAY/JUNE 2005. Contents, May/June 2005. DEPARTMENTS: Editorial (p. 4): Much Ado About Summer. COVER STORY: The Circle Is Complete (p. 31): Nearly 30 years after -

Jon Batiste & Stay Human

PROGRAM: JON BATISTE AND STAY HUMAN. SUNDAY, OCTOBER 27, 2013 / 7:00 PM / BING CONCERT HALL. ARTISTS: Jon Batiste (Piano, Harmonaboard, and Vocals); Eddie Barbash (Alto Saxophone and Vocals); Ibanda Ruhumbika (Tuba and Trombone); Joseph Saylor (Drums and Tambourine); Jamison Ross (Percussion and Drums); Barry Stephenson (Bass). PROGRAM: The program will be announced from the stage. This program was generously funded by the Koret Foundation. The Koret Jazz Project is a multiyear initiative to support, expand, and celebrate the role of jazz in the artistic and educational programming of Stanford Live. The performance is co-sponsored by KCSM Jazz 91. PROGRAM SUBJECT TO CHANGE. Please be considerate of others and turn off all phones, pagers, and watch alarms, and unwrap all lozenges prior to the performance. Photography and recording of any kind are not permitted. Thank you. JON BATISTE: “I’m always about trying to fill a need with what I do in my artistry,” says Jon Batiste, an artist whose ambition is nothing less than to transform the very lives of his listeners. “There is definitely a need in the performing arts world for a movement to come along that seriously connects with a next-generation audience while still maintaining the timeless artistic objectives present throughout the history of the American music tradition.” Now 26, Mr. Batiste has defined a vision based on the most-profound aspects of what has already been a rich artistic journey. He was born in New Orleans into a family whose deep musical heritage is part of the inspiration for the HBO series Treme, in which he has appeared. Over the last decade, he has forged his own artistic path by indelibly fusing himself within the fabric of New York City culture.