Brownian Motion

Recommended publications

Brownian Motion and the Heat Equation

Brownian motion and the heat equation. Denis Bell, University of North Florida.

1. The heat equation. Let the function $u(t, x)$ denote the temperature in a rod at position $x$ and time $t$. Then $u(t, x)$ satisfies the heat equation

$$\frac{\partial u}{\partial t} = \frac{1}{2}\frac{\partial^2 u}{\partial x^2}, \qquad t > 0. \tag{1}$$

It is easy to check that the Gaussian function

$$u(t, x) = \frac{1}{\sqrt{2\pi t}}\, e^{-x^2/(2t)}$$

satisfies (1). Let $\varphi$ be any bounded continuous function and define

$$u(t, x) = \frac{1}{\sqrt{2\pi t}} \int_{-\infty}^{\infty} \varphi(y)\, e^{-(x-y)^2/(2t)}\, dy.$$

Then $u$ satisfies (1). Furthermore, making the substitution $z = (x - y)/\sqrt{t}$ in the integral gives

$$u(t, x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} \varphi(x - z\sqrt{t})\, e^{-z^2/2}\, dz \;\to\; \varphi(x)\, \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-z^2/2}\, dz = \varphi(x)$$

as $t \downarrow 0$. Thus

$$u(t, x) = \frac{1}{\sqrt{2\pi t}} \int_{-\infty}^{\infty} \varphi(y)\, e^{-(x-y)^2/(2t)}\, dy = E[\varphi(X_t)],$$

where $X_t$ is an $N(x, t)$ random variable, solves the heat equation $\frac{\partial u}{\partial t} = \frac{1}{2}\frac{\partial^2 u}{\partial x^2}$ with initial condition $u(0, \cdot) = \varphi$. Note: the function $u(t, x)$ is smooth in $x$ for $t > 0$ even if $\varphi$ is only continuous.

2. Brownian motion. In the nineteenth century, the botanist Robert Brown observed that a pollen particle suspended in liquid undergoes a strange erratic motion (caused by bombardment by molecules of the liquid). Letting $w(t)$ denote the position of the particle in a fixed direction, the paths $w$ typically look like this: [Figure: a typical path of $w$ plotted against $t$.] N. Wiener constructed a rigorous mathematical model of Brownian motion in the 1920s.
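The representation $u(t, x) = E[\varphi(X_t)]$ in the excerpt above can be checked numerically. The following is a minimal sketch, not taken from the source: the function names, the choice $\varphi = \cos$, the midpoint-rule quadrature and the step sizes are all illustrative assumptions. For $\varphi = \cos$ the Gaussian integral has the closed form $\cos(x)\,e^{-t/2}$, which gives something to compare against.

```python
import math


def heat_solution(phi, t, x, n=4000, width=10.0):
    """Approximate u(t, x) = E[phi(X_t)] with X_t ~ N(x, t).

    Uses the substituted form (1/sqrt(2*pi)) * integral of
    phi(x - z*sqrt(t)) * exp(-z^2/2) dz, truncated to |z| <= width
    and evaluated with the midpoint rule (an illustrative choice).
    """
    h = 2.0 * width / n
    total = 0.0
    for k in range(n):
        z = -width + (k + 0.5) * h
        total += phi(x - z * math.sqrt(t)) * math.exp(-0.5 * z * z)
    return total * h / math.sqrt(2.0 * math.pi)


def heat_residual(phi, t, x, dt=1e-4, dx=1e-2):
    """Central-difference estimate of du/dt - (1/2) d2u/dx2 at (t, x)."""
    u_t = (heat_solution(phi, t + dt, x) - heat_solution(phi, t - dt, x)) / (2 * dt)
    u_xx = (
        heat_solution(phi, t, x + dx)
        - 2.0 * heat_solution(phi, t, x)
        + heat_solution(phi, t, x - dx)
    ) / dx ** 2
    return u_t - 0.5 * u_xx


phi = math.cos  # bounded, continuous initial temperature (hypothetical example)

numeric = heat_solution(phi, 0.5, 0.3)
exact = math.cos(0.3) * math.exp(-0.25)   # closed form for phi = cos
residual = heat_residual(phi, 0.5, 0.3)   # should be close to zero
near_initial = heat_solution(phi, 1e-6, 0.3)  # should be close to phi(0.3)
```

Here `numeric` should agree closely with `exact`, `residual` should be small (limited by the finite-difference step sizes), and `near_initial` illustrates the limit $u(t, x) \to \varphi(x)$ as $t \downarrow 0$.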

Notes on Elementary Martingale Theory

Notes on Elementary Martingale Theory, by John B. Walsh.

1 Conditional Expectations. 1.1 Motivation. Probability is a measure of ignorance. When new information decreases that ignorance, it changes our probabilities. Suppose we roll a pair of dice, but don't look immediately at the outcome. The result is there for anyone to see, but if we haven't yet looked, as far as we are concerned, the probability that a two ("snake eyes") is showing is the same as it was before we rolled the dice, 1/36. Now suppose that we happen to see that one of the two dice shows a one, but we do not see the second. We reason that we have rolled a two if, and only if, the unseen die is a one. This has probability 1/6. The extra information has changed the probability that our roll is a two from 1/36 to 1/6. It has given us a new probability, which we call a conditional probability. In general, if $A$ and $B$ are events, we say the conditional probability that $B$ occurs given that $A$ occurs is the conditional probability of $B$ given $A$. This is given by the well-known formula

$$P\{B \mid A\} = \frac{P\{A \cap B\}}{P\{A\}}, \tag{1}$$

provided $P\{A\} > 0$. (Just to keep ourselves out of trouble if we need to apply this to a set of probability zero, we make the convention that $P\{B \mid A\} = 0$ if $P\{A\} = 0$.) Conditional probabilities are familiar, but that doesn't stop them from giving rise to many of the most puzzling paradoxes in probability.
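Formula (1) and the dice example can be reproduced by exact enumeration. This is a sketch under stated assumptions rather than anything from Walsh's notes: the helper names are hypothetical, and the observation "one of the two dice shows a one" is modelled as "a particular die (here the first) shows a one", which is what the 1/6 reasoning in the excerpt uses. The alternative event "at least one die shows a one" is included only as a contrast.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two dice.
outcomes = list(product(range(1, 7), repeat=2))


def prob(event):
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in outcomes if event(w)), len(outcomes))


def cond_prob(b, a):
    """P{B | A} = P{A and B} / P{A}, with the convention 0 when P{A} = 0."""
    p_a = prob(a)
    if p_a == 0:
        return Fraction(0)
    return prob(lambda w: a(w) and b(w)) / p_a


def snake_eyes(w):
    return w == (1, 1)


def first_is_one(w):
    # Assumption: the die we happened to see is the first one.
    return w[0] == 1


def some_die_is_one(w):
    # A different conditioning event, shown only for contrast.
    return 1 in w


p_prior = prob(snake_eyes)                        # Fraction(1, 36)
p_seen_die = cond_prob(snake_eyes, first_is_one)  # Fraction(1, 6)
p_at_least_one = cond_prob(snake_eyes, some_die_is_one)  # Fraction(1, 11)
```

The gap between the last two values shows how sensitive a conditional probability is to the exact event being conditioned on, which is one source of the paradoxes the excerpt mentions.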

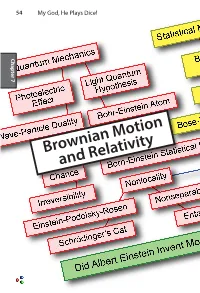

Brownian Motion and Relativity

My God, He Plays Dice! Chapter 7: Brownian Motion and Relativity.

In this chapter we describe two of Einstein's greatest works that have little or nothing to do with his amazing and deeply puzzling theories about quantum mechanics. The first, Brownian motion, provided the first quantitative proof of the existence of atoms and molecules. The second, special relativity in his miracle year of 1905 and general relativity eleven years later, combined the ideas of space and time into a unified space-time with a non-Euclidean curvature that goes beyond Newton's theory of gravitation. Einstein's relativity theory explained the precession of the orbit of Mercury and predicted the bending of light as it passes the sun, confirmed by Arthur Stanley Eddington in 1919. He also predicted that galaxies can act as gravitational lenses, focusing light from objects far beyond, as was confirmed in 1979. He also predicted gravitational waves, only detected in 2016, one century after Einstein wrote down the equations that explain them. What are we to make of this man who could see things that others could not? Our thesis is that if we look very closely at the things he said, especially his doubts expressed privately to friends, today's mysteries of quantum mechanics may be lessened. As great as Einstein's theories of Brownian motion and relativity are, they were accepted quickly because measurements were soon made that confirmed their predictions. Moreover, contemporaries of Einstein were working on these problems. Marian Smoluchowski worked out the equation for the rate of diffusion of large particles in a liquid the year before Einstein.

The Theory of Filtrations of Subalgebras, Standardness and Independence

The theory of filtrations of subalgebras, standardness and independence. Anatoly M. Vershik∗. 24.01.2017. arXiv:1705.06619v1 [math.DS] 18 May 2017.

In memory of my friend Kolya K. (1933–2014). "Independence is the best quality, the best word in all languages." J. Brodsky, from a personal letter.

Abstract. The survey is devoted to the combinatorial and metric theory of filtrations, i.e., decreasing sequences of σ-algebras in measure spaces or decreasing sequences of subalgebras of certain algebras. One of the key notions, that of standardness, plays the role of a generalization of the notion of the independence of a sequence of random variables. We discuss the possibility of obtaining a classification of filtrations, their invariants, and various links to problems in algebra, dynamics, and combinatorics. Bibliography: 101 titles.

Contents
1 Introduction
1.1 A simple example and a difficult question
1.2 Three languages of measure theory
1.3 Where do filtrations appear?
1.4 Finite and infinite in classification problems; standardness as a generalization of independence
1.5 A summary of the paper
2 The definition of filtrations in measure spaces
2.1 Measure theory: a Lebesgue space, basic facts
2.2 Measurable partitions, filtrations
2.3 Classification of measurable partitions
2.4 Classification of finite filtrations
2.5 Filtrations we consider and how one can define them

∗St. Petersburg Department of Steklov Mathematical Institute; St. Petersburg State University; Institute for Information Transmission Problems. E-mail: [email protected]. Partially supported by the Russian Science Foundation (grant No. 14-11-00581).

An Application of Probability Theory in Finance: the Black-Scholes Formula

AN APPLICATION OF PROBABILITY THEORY IN FINANCE: THE BLACK-SCHOLES FORMULA. EFFY FANG. Date: August 28, 2015.

Abstract. In this paper, we start from the building blocks of probability theory, including σ-field and measurable functions, and then proceed to a formal introduction of probability theory. Later, we introduce stochastic processes, martingales and Wiener processes in particular. Lastly, we present an application: the Black-Scholes Formula, a model used to price options in financial markets.

Contents: 1. The Building Blocks; 2. Probability; 3. Martingales; 4. Wiener Processes; 5. The Black-Scholes Formula; References.

1. The Building Blocks. Consider the set $\Omega$ of all possible outcomes of a random process. When the set is small, for example, the outcomes of tossing a coin, we can analyze case by case. However, when the set gets larger, we would need to study the collections of subsets of $\Omega$ that may be taken as a suitable set of events. We define such a collection as a σ-field.

Definition 1.1. A σ-field on a set $\Omega$ is a collection $\mathcal{F}$ of subsets of $\Omega$ which obeys the following rules or axioms:
(a) $\Omega \in \mathcal{F}$;
(b) if $A \in \mathcal{F}$, then $A^c \in \mathcal{F}$;
(c) if $\{A_n\}_{n=1}^{\infty}$ is a sequence in $\mathcal{F}$, then $\bigcup_{n=1}^{\infty} A_n \in \mathcal{F}$.
The pair $(\Omega, \mathcal{F})$ is called a measurable space.

Proposition 1.2. Let $(\Omega, \mathcal{F})$ be a measurable space. Then
(1) $\emptyset \in \mathcal{F}$;
(2) if $\{A_n\}_{n=1}^{k}$ is a finite sequence of $\mathcal{F}$-measurable sets, then $\bigcup_{n=1}^{k} A_n \in \mathcal{F}$;
(3) if $\{A_n\}_{n=1}^{\infty}$ is a sequence of $\mathcal{F}$-measurable sets, then $\bigcap_{n=1}^{\infty} A_n \in \mathcal{F}$.
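On a finite $\Omega$ the axioms of Definition 1.1 can be checked mechanically, because countable unions reduce to finite ones. The sketch below is illustrative only: the function names and the die-roll partition are assumptions, not part of the paper. It builds the σ-field generated by a partition and then tests the axioms along with the consequences listed in Proposition 1.2.

```python
from itertools import combinations


def sigma_field_from_partition(blocks):
    """All unions of blocks: the sigma-field generated by a finite partition."""
    blocks = [frozenset(b) for b in blocks]
    field = set()
    for r in range(len(blocks) + 1):
        for chosen in combinations(blocks, r):
            field.add(frozenset().union(*chosen))
    return field


def is_sigma_field(omega, field):
    """Check axioms (a)-(c) for a collection of subsets of a finite omega.

    On a finite set, closure under pairwise unions is equivalent to
    closure under countable unions.
    """
    omega = frozenset(omega)
    if omega not in field:                                # axiom (a)
        return False
    if any(omega - a not in field for a in field):        # axiom (b)
        return False
    return all(a | b in field for a in field for b in field)  # axiom (c)


# Hypothetical example: a die roll observed only as low / middle / high.
omega = {1, 2, 3, 4, 5, 6}
field = sigma_field_from_partition([{1, 2}, {3, 4}, {5, 6}])

# A collection that is not closed under complements, for contrast.
not_a_field = {frozenset(), frozenset({1}), frozenset(omega)}
```

With three blocks the generated field has $2^3 = 8$ members. It contains the empty set and is closed under intersections, as Proposition 1.2 asserts, while `not_a_field` fails axiom (b).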

1 Introduction Branching Mechanism in a Superprocess from a Branching

RIMS Kôkyûroku (数理解析研究所講究録), Vol. 1157 (2000), pp. 1-16.

An example of random snakes by Le Gall and its applications. 渡辺信三 (Shinzo Watanabe), Kyoto University.

1 Introduction. The notion of random snakes has been introduced by Le Gall ([Le 1], [Le 2]) to construct a class of measure-valued branching processes, called superprocesses or continuous state branching processes ([Da], [Dy]). A main idea is to produce the branching mechanism in a superprocess from a branching tree embedded in excursions at each different level of a Brownian sample path. There is no clear notion of particles in a superprocess; it is something like a cloud or mist. Nevertheless, a random snake could provide us with a clear picture of historical or genealogical developments of "particles" in a superprocess. In this note, we give a sample pathwise construction of a random snake in the case when the underlying Markov process is a Markov chain on a tree. The simplest case has been discussed in [War 1] and [Wat 2]. The construction can be reduced to this case locally and we need to consider a recurrence family of stochastic differential equations for reflecting Brownian motions with sticky boundaries. A special case has already been discussed by J. Warren [War 2] with an application to a coalescing stochastic flow of piecewise linear transformations in connection with a non-white or non-Gaussian predictable noise in the sense of B. Tsirelson.

2 Brownian snakes. Throughout this section, let $\xi=\{\xi(t), P_{x}\}$ be a Hunt Markov process on a locally compact separable metric space $S$ endowed with a metric $d_{S}(\cdot, \cdot)$.

An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability

Nanomaterials. Review. An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability. Lucia Pavoni, Diego Romano Perinelli, Giulia Bonacucina, Marco Cespi * and Giovanni Filippo Palmieri. School of Pharmacy, University of Camerino, 62032 Camerino, Italy; [email protected] (L.P.); [email protected] (D.R.P.); [email protected] (G.B.); gianfi[email protected] (G.F.P.). * Correspondence: [email protected]. Received: 23 December 2019; Accepted: 10 January 2020; Published: 12 January 2020.

Abstract: The interest around essential oils is constantly increasing thanks to their biological properties exploitable in several fields, from pharmaceuticals to food and agriculture. However, their widespread use and marketing are still restricted due to their poor physico-chemical properties; i.e., high volatility, thermal decomposition, low water solubility, and stability issues. At the moment, the most suitable approach to overcome such limitations is based on the development of proper formulation strategies. One of the approaches suggested to achieve this goal is the so-called encapsulation process through the preparation of aqueous nano-dispersions. Among them, micro- and nanoemulsions are the most studied thanks to their ease of formulation and handling and to their manufacturing costs. In this direction, this review intends to offer an overview of the formulation, preparation and stability parameters of micro- and nanoemulsions. Specifically, recent literature has been examined in order to define the most common practices adopted (materials and fabrication methods), highlighting their suitability and effectiveness. Finally, relevant points related to formulations, such as optimization, characterization, stability and safety, not deeply studied or clarified yet, were discussed.

Mathematical Finance an Introduction in Discrete Time

Jan Kallsen. Mathematical Finance: An introduction in discrete time. CAU zu Kiel, WS 13/14, February 10, 2014.

Contents
0 Some notions from stochastics
0.1 Basics (0.1.1 Probability spaces; 0.1.2 Random variables; 0.1.3 Conditional probabilities and expectations)
0.2 Absolute continuity and equivalence
0.3 Conditional expectation (0.3.1 σ-fields; 0.3.2 Conditional expectation relative to a σ-field)
1 Discrete stochastic calculus
1.1 Stochastic processes
1.2 Martingales
1.3 Stochastic integral
1.4 Intuitive summary of some notions from this chapter
2 Modelling financial markets
2.1 Assets and trading strategies
2.2 Arbitrage
2.3 Dividend payments
2.4 Concrete models
3 Derivative pricing and hedging
3.1 Contingent claims
3.2 Liquidly traded derivatives
3.3 Individual perspective
3.4 Examples (3.4.1 Forward contract; 3.4.2 Futures contract; 3.4.3 European call and put options in the binomial model; 3.4.4 European call and put options in the standard model)
3.5 American options
4 Incomplete markets
4.1 Martingale modelling
4.2 Variance-optimal hedging
5 Portfolio optimization
5.1 Maximizing expected utility of terminal wealth
6 Elements of continuous-time finance
6.1 Continuous-time theory
6.2 Black-Scholes model

Chapter 0. Some notions from stochastics. 0.1 Basics. 0.1.1 Probability spaces. This course requires a decent background in stochastics which cannot be provided here.

Download File

PREFACE. Teaching stochastic processes to students whose primary interests are in applications has long been a problem. On one hand, the subject can quickly become highly technical and if mathematical concerns are allowed to dominate there may be no time available for exploring the many interesting areas of applications. On the other hand, the treatment of stochastic calculus in a cavalier fashion leaves the student with a feeling of great uncertainty when it comes to exploring new material. Moreover, the problem has become more acute as the power of the differential equation point of view has become more widely appreciated. In these notes, an attempt is made to resolve this dilemma with the needs of those interested in building models and designing algorithms for estimation and control in mind. The approach is to start with Poisson counters and to identify the Wiener process with a certain limiting form. We do not attempt to define the Wiener process per se. Instead, everything is done in terms of limits of jump processes. The Poisson counter and differential equations whose right-hand sides include the differential of Poisson counters are developed first. This leads to the construction of sample path representations of a continuous time jump process using Poisson counters. This point of view leads to an efficient problem solving technique and permits a unified treatment of time varying and nonlinear problems. More importantly, it provides sound intuition for stochastic differential equations and their uses without allowing the technicalities to dominate. In treating estimation theory, the conditional density equation is given a central role.

4 Martingales in Discrete-Time

4 Martingales in Discrete-Time. Suppose that $(\Omega, \mathcal{F}, P)$ is a probability space.

Definition 4.1. A sequence $\mathbb{F} = \{\mathcal{F}_n, n = 0, 1, \ldots\}$ is called a filtration if each $\mathcal{F}_n$ is a sub-σ-algebra of $\mathcal{F}$, and $\mathcal{F}_n \subseteq \mathcal{F}_{n+1}$ for $n = 0, 1, 2, \ldots$. In other words, a filtration is an increasing sequence of sub-σ-algebras of $\mathcal{F}$. For notational convenience, we define

$$\mathcal{F}_\infty = \sigma\!\left(\bigcup_{n=1}^{\infty} \mathcal{F}_n\right)$$

and note that $\mathcal{F}_\infty \subseteq \mathcal{F}$.

Definition 4.2. If $\mathbb{F} = \{\mathcal{F}_n, n = 0, 1, \ldots\}$ is a filtration, and $X = \{X_n, n = 0, 1, \ldots\}$ is a discrete-time stochastic process, then $X$ is said to be adapted to $\mathbb{F}$ if $X_n$ is $\mathcal{F}_n$-measurable for each $n$. Note that by definition every process $\{X_n, n = 0, 1, \ldots\}$ is adapted to its natural filtration $\{\mathcal{F}_n, n = 0, 1, \ldots\}$ where $\mathcal{F}_n = \sigma(X_0, \ldots, X_n)$. The intuition behind the idea of a filtration is as follows. At time $n$, all of the information about $\omega \in \Omega$ that is known to us at time $n$ is contained in $\mathcal{F}_n$. As $n$ increases, our knowledge of $\omega$ also increases (or, if you prefer, does not decrease) and this is captured in the fact that the filtration consists of an increasing sequence of sub-σ-algebras.

Definition 4.3. A discrete-time stochastic process $X = \{X_n, n = 0, 1, \ldots\}$ is called a martingale (with respect to the filtration $\mathbb{F} = \{\mathcal{F}_n, n = 0, 1, \ldots\}$) if for each $n = 0, 1, 2, \ldots$,
(a) $X$ is adapted to $\mathbb{F}$; that is, $X_n$ is $\mathcal{F}_n$-measurable,
(b) $X_n$ is in $L^1$; that is, $E|X_n| < \infty$, and
(c) $E(X_{n+1} \mid \mathcal{F}_n) = X_n$ a.s.
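Condition (c) of Definition 4.3 can be verified exactly for small examples. The sketch below is an illustration under assumptions that are not in the source: the process is a function of fair, independent $\pm 1$ steps, the filtration is the natural one generated by those steps (so each atom of $\mathcal{F}_n$ is one path of length $n$), and all function names are hypothetical. Conditions (a) and (b) hold by construction, since $X_n$ depends only on the first $n$ steps and takes finitely many values.

```python
from fractions import Fraction
from itertools import product


def conditional_expectation_next(f, path):
    """E[X_{n+1} | F_n] on the atom where the first n steps equal `path`.

    The next step is +1 or -1 with probability 1/2 each (fair coin).
    """
    return sum(Fraction(1, 2) * f(path + (step,)) for step in (-1, 1))


def is_martingale(f, horizon):
    """Check E(X_{n+1} | F_n) = X_n on every atom of F_n for n < horizon."""
    for n in range(horizon):
        for path in product((-1, 1), repeat=n):
            if conditional_expectation_next(f, path) != f(path):
                return False
    return True


def walk(steps):
    """S_n: the simple symmetric random walk."""
    return Fraction(sum(steps))


def squared(steps):
    """S_n^2: fails condition (c), since its conditional mean grows by 1."""
    return Fraction(sum(steps)) ** 2


def compensated(steps):
    """S_n^2 - n: subtracting n restores the martingale property."""
    return Fraction(sum(steps)) ** 2 - len(steps)


results = {
    "walk": is_martingale(walk, 8),
    "squared": is_martingale(squared, 8),
    "compensated": is_martingale(compensated, 8),
}
```

Exact rational arithmetic avoids any floating-point tolerance in the equality test, at the cost of enumerating $2^n$ paths, so this only scales to short horizons.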

Long Memory and Self-Similar Processes

Annales de la Faculté des Sciences de Toulouse, Mathématiques. Gennady Samorodnitsky, Long memory and self-similar processes. Tome XV, no. 1 (2006), pp. 107-123. <http://afst.cedram.org/item?id=AFST_2006_6_15_1_107_0> © Annales de la faculté des sciences de Toulouse Mathématiques, 2006, all rights reserved.

Access to the articles of the journal "Annales de la faculté des sciences de Toulouse, Mathématiques" (http://afst.cedram.org/) implies agreement with the general terms of use (http://afst.cedram.org/legal/). Any reproduction of all or part of this article, in any form whatsoever, for any use other than strictly personal use by the copier constitutes a criminal offence. Any copy or printout of this file must contain this copyright notice. Article put online by cedram as part of the Centre de diffusion des revues académiques de mathématiques, http://www.cedram.org/

ABSTRACT. This paper is a survey of both classical and new results and ideas on long memory, scaling and self-similarity, both in the light-tailed and heavy-tailed cases.

1. Introduction. The notion of long memory (or long range dependence) has intrigued many at least since B.

Levy Processes

LÉVY PROCESSES, STABLE PROCESSES, AND SUBORDINATORS. STEVEN P. LALLEY.

1. DEFINITIONS AND EXAMPLES

Definition 1.1. A continuous-time process $\{X_t = X(t)\}_{t \ge 0}$ with values in $\mathbb{R}^d$ (or, more generally, in an abelian topological group $G$) is called a Lévy process if (1) its sample paths are right-continuous and have left limits at every time point $t$, and (2) it has stationary, independent increments, that is:
(a) For all $0 = t_0 < t_1 < \cdots < t_k$, the increments $X(t_i) - X(t_{i-1})$ are independent.
(b) For all $0 \le s \le t$ the random variables $X(t) - X(s)$ and $X(t - s) - X(0)$ have the same distribution.
The default initial condition is $X_0 = 0$. A subordinator is a real-valued Lévy process with nondecreasing sample paths. A stable process is a real-valued Lévy process $\{X_t\}_{t \ge 0}$ with initial value $X_0 = 0$ that satisfies the self-similarity property

$$X_t / t^{1/\alpha} \stackrel{\mathcal{D}}{=} X_1 \qquad \forall\, t > 0. \tag{1.1}$$

The parameter $\alpha$ is called the exponent of the process.

Example 1.1. The most fundamental Lévy processes are the Wiener process and the Poisson process. The Poisson process is a subordinator, but is not stable; the Wiener process is stable, with exponent $\alpha = 2$. Any linear combination of independent Lévy processes is again a Lévy process, so, for instance, if the Wiener process $W_t$ and the Poisson process $N_t$ are independent then $W_t - N_t$ is a Lévy process. More important, linear combinations of independent Poisson processes are Lévy processes: these are special cases of what are called compound Poisson processes: see sec.
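Two claims in Example 1.1 can be probed by simulation. The sketch below is a rough Monte Carlo check, not part of Lalley's notes: the helper names, the rate, the time point, the seed and the sample size are all illustrative assumptions. It samples $W_t - N_t$ for independent $W$ and $N$ and compares the sample mean and variance with the values $-\lambda t$ and $t + \lambda t$ implied by independence. It also rescales $W_t$ by $t^{1/2}$, as in (1.1) with $\alpha = 2$, and checks that the variance is close to that of $W_1$.

```python
import math
import random
import statistics


def poisson_count(rng, rate, t):
    """Number of arrivals in [0, t] of a Poisson process with the given rate.

    Arrival times are built from independent exponential gaps.
    """
    count = 0
    clock = rng.expovariate(rate)
    while clock <= t:
        count += 1
        clock += rng.expovariate(rate)
    return count


def sample_w_minus_n(rng, t, rate):
    """One draw of W_t - N_t with W and N independent and X_0 = 0."""
    return rng.gauss(0.0, math.sqrt(t)) - poisson_count(rng, rate, t)


rng = random.Random(0)            # fixed seed so the run is repeatable
t, rate, n = 2.0, 1.5, 20000      # hypothetical parameters

draws = [sample_w_minus_n(rng, t, rate) for _ in range(n)]
mean = statistics.fmean(draws)        # theory: -rate * t
var = statistics.pvariance(draws)     # theory: t + rate * t

# Self-similarity of the Wiener process: W_t / t**(1/2) should look like W_1.
scaled = [rng.gauss(0.0, math.sqrt(t)) / t ** 0.5 for _ in range(n)]
scaled_var = statistics.pvariance(scaled)   # theory: 1
```

Matching two moments is only a sanity check, not a proof of equality in distribution. A fuller test would compare empirical distributions at several values of $t$.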