A Guide to Brownian Motion and Related Stochastic Processes

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Interpolation for Partly Hidden Diffusion Processes*

Interpolation for partly hidden di®usion processes* Changsun Choi, Dougu Nam** Division of Applied Mathematics, KAIST, Daejeon 305-701, Republic of Korea Abstract Let Xt be n-dimensional di®usion process and S(t) be a smooth set-valued func- tion. Suppose X is invisible when X S(t), but we can see the process exactly t t 2 otherwise. Let X S(t ) and we observe the process from the beginning till the t0 2 0 signal reappears out of the obstacle after t0. With this information, we evaluate the estimators for the functionals of Xt on a time interval containing t0 where the signal is hidden. We solve related 3 PDE's in general cases. We give a generalized last exit decomposition for n-dimensional Brownian motion to evaluate its estima- tors. An alternative Monte Carlo method is also proposed for Brownian motion. We illustrate several examples and compare the solutions between those by the closed form result, ¯nite di®erence method, and Monte Carlo simulations. Key words: interpolation, di®usion process, excursions, backward boundary value problem, last exit decomposition, Monte Carlo method 1 Introduction Usually, the interpolation problem for Markov process indicates that of eval- uating the probability distribution of the process between the discretely ob- served times. One of the origin of the problem is SchrÄodinger's Gedankenex- periment where we are interested in the probability distribution of a di®usive particle in a given force ¯eld when the initial and ¯nal time density functions are given. We can obtain the desired density function at each time in a given time interval by solving the forward and backward Kolmogorov equations. -

Markovian Bridges: Weak Continuity and Pathwise Constructions

The Annals of Probability 2011, Vol. 39, No. 2, 609–647 DOI: 10.1214/10-AOP562 c Institute of Mathematical Statistics, 2011 MARKOVIAN BRIDGES: WEAK CONTINUITY AND PATHWISE CONSTRUCTIONS1 By Lo¨ıc Chaumont and Geronimo´ Uribe Bravo2,3 Universit´ed’Angers and Universidad Nacional Aut´onoma de M´exico A Markovian bridge is a probability measure taken from a disin- tegration of the law of an initial part of the path of a Markov process given its terminal value. As such, Markovian bridges admit a natural parameterization in terms of the state space of the process. In the context of Feller processes with continuous transition densities, we construct by weak convergence considerations the only versions of Markovian bridges which are weakly continuous with respect to their parameter. We use this weakly continuous construction to provide an extension of the strong Markov property in which the flow of time is reversed. In the context of self-similar Feller process, the last result is shown to be useful in the construction of Markovian bridges out of the trajectories of the original process. 1. Introduction and main results. 1.1. Motivation. The aim of this article is to study Markov processes on [0,t], starting at x, conditioned to arrive at y at time t. Historically, the first example of such a conditional law is given by Paul L´evy’s construction of the Brownian bridge: given a Brownian motion B starting at zero, let s s bx,y,t = x + B B + (y x) . s s − t t − t arXiv:0905.2155v3 [math.PR] 14 Mar 2011 Received May 2009; revised April 2010. -

CONFORMAL INVARIANCE and 2 D STATISTICAL PHYSICS −

CONFORMAL INVARIANCE AND 2 d STATISTICAL PHYSICS − GREGORY F. LAWLER Abstract. A number of two-dimensional models in statistical physics are con- jectured to have scaling limits at criticality that are in some sense conformally invariant. In the last ten years, the rigorous understanding of such limits has increased significantly. I give an introduction to the models and one of the major new mathematical structures, the Schramm-Loewner Evolution (SLE). 1. Critical Phenomena Critical phenomena in statistical physics refers to the study of systems at or near the point at which a phase transition occurs. There are many models of such phenomena. We will discuss some discrete equilibrium models that are defined on a lattice. These are measures on paths or configurations where configurations are weighted by their energy with a preference for paths of smaller energy. These measures depend on at leastE one parameter. A standard parameter in physics β = c/T where c is a fixed constant, T stands for temperature and the measure given to a configuration is e−βE . Phase transitions occur at critical values of the temperature corresponding to, e.g., the transition from a gaseous to liquid state. We use β for the parameter although for understanding the literature it is useful to know that large values of β correspond to “low temperature” and small values of β correspond to “high temperature”. Small β (high temperature) systems have weaker correlations than large β (low temperature) systems. In a number of models, there is a critical βc such that qualitatively the system has three regimes β<βc (high temperature), β = βc (critical) and β>βc (low temperature). -

Superprocesses and Mckean-Vlasov Equations with Creation of Mass

Sup erpro cesses and McKean-Vlasov equations with creation of mass L. Overb eck Department of Statistics, University of California, Berkeley, 367, Evans Hall Berkeley, CA 94720, y U.S.A. Abstract Weak solutions of McKean-Vlasov equations with creation of mass are given in terms of sup erpro cesses. The solutions can b e approxi- mated by a sequence of non-interacting sup erpro cesses or by the mean- eld of multityp e sup erpro cesses with mean- eld interaction. The lat- ter approximation is asso ciated with a propagation of chaos statement for weakly interacting multityp e sup erpro cesses. Running title: Sup erpro cesses and McKean-Vlasov equations . 1 Intro duction Sup erpro cesses are useful in solving nonlinear partial di erential equation of 1+ the typ e f = f , 2 0; 1], cf. [Dy]. Wenowchange the p oint of view and showhowtheyprovide sto chastic solutions of nonlinear partial di erential Supp orted byanFellowship of the Deutsche Forschungsgemeinschaft. y On leave from the Universitat Bonn, Institut fur Angewandte Mathematik, Wegelerstr. 6, 53115 Bonn, Germany. 1 equation of McKean-Vlasovtyp e, i.e. wewant to nd weak solutions of d d 2 X X @ @ @ + d x; + bx; : 1.1 = a x; t i t t t t t ij t @t @x @x @x i j i i=1 i;j =1 d Aweak solution = 2 C [0;T];MIR satis es s Z 2 t X X @ @ a f = f + f + d f + b f ds: s ij s t 0 i s s @x @x @x 0 i j i Equation 1.1 generalizes McKean-Vlasov equations of twodi erenttyp es. -

Brownian Motion and the Heat Equation

Brownian motion and the heat equation Denis Bell University of North Florida 1. The heat equation Let the function u(t, x) denote the temperature in a rod at position x and time t u(t,x) Then u(t, x) satisfies the heat equation ∂u 1∂2u = , t > 0. (1) ∂t 2∂x2 It is easy to check that the Gaussian function 1 x2 u(t, x) = e−2t 2πt satisfies (1). Let φ be any! bounded continuous function and define 1 (x y)2 u(t, x) = ∞ φ(y)e− 2−t dy. 2πt "−∞ Then u satisfies!(1). Furthermore making the substitution z = (x y)/√t in the integral gives − 1 z2 u(t, x) = ∞ φ(x y√t)e−2 dz − 2π "−∞ ! 1 z2 φ(x) ∞ e−2 dz = φ(x) → 2π "−∞ ! as t 0. Thus ↓ 1 (x y)2 u(t, x) = ∞ φ(y)e− 2−t dy. 2πt "−∞ ! = E[φ(Xt)] where Xt is a N(x, t) random variable solves the heat equation ∂u 1∂2u = ∂t 2∂x2 with initial condition u(0, ) = φ. · Note: The function u(t, x) is smooth in x for t > 0 even if is only continuous. 2. Brownian motion In the nineteenth century, the botanist Robert Brown observed that a pollen particle suspended in liquid undergoes a strange erratic motion (caused by bombardment by molecules of the liquid) Letting w(t) denote the position of the particle in a fixed direction, the paths w typically look like this t N. Wiener constructed a rigorous mathemati- cal model of Brownian motion in the 1930s. -

A Stochastic Processes and Martingales

A Stochastic Processes and Martingales A.1 Stochastic Processes Let I be either IINorIR+.Astochastic process on I with state space E is a family of E-valued random variables X = {Xt : t ∈ I}. We only consider examples where E is a Polish space. Suppose for the moment that I =IR+. A stochastic process is called cadlag if its paths t → Xt are right-continuous (a.s.) and its left limits exist at all points. In this book we assume that every stochastic process is cadlag. We say a process is continuous if its paths are continuous. The above conditions are meant to hold with probability 1 and not to hold pathwise. A.2 Filtration and Stopping Times The information available at time t is expressed by a σ-subalgebra Ft ⊂F.An {F ∈ } increasing family of σ-algebras t : t I is called a filtration.IfI =IR+, F F F we call a filtration right-continuous if t+ := s>t s = t. If not stated otherwise, we assume that all filtrations in this book are right-continuous. In many books it is also assumed that the filtration is complete, i.e., F0 contains all IIP-null sets. We do not assume this here because we want to be able to change the measure in Chapter 4. Because the changed measure and IIP will be singular, it would not be possible to extend the new measure to the whole σ-algebra F. A stochastic process X is called Ft-adapted if Xt is Ft-measurable for all t. If it is clear which filtration is used, we just call the process adapted.The {F X } natural filtration t is the smallest right-continuous filtration such that X is adapted. -

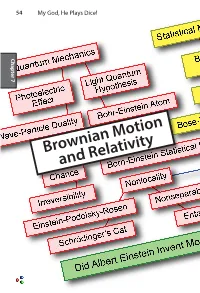

Brownian Motion and Relativity Brownian Motion 55

54 My God, He Plays Dice! Chapter 7 Chapter Brownian Motion and Relativity Brownian Motion 55 Brownian Motion and Relativity In this chapter we describe two of Einstein’s greatest works that have little or nothing to do with his amazing and deeply puzzling theories about quantum mechanics. The first,Brownian motion, provided the first quantitative proof of the existence of atoms and molecules. The second,special relativity in his miracle year of 1905 and general relativity eleven years later, combined the ideas of space and time into a unified space-time with a non-Euclidean 7 Chapter curvature that goes beyond Newton’s theory of gravitation. Einstein’s relativity theory explained the precession of the orbit of Mercury and predicted the bending of light as it passes the sun, confirmed by Arthur Stanley Eddington in 1919. He also predicted that galaxies can act as gravitational lenses, focusing light from objects far beyond, as was confirmed in 1979. He also predicted gravitational waves, only detected in 2016, one century after Einstein wrote down the equations that explain them.. What are we to make of this man who could see things that others could not? Our thesis is that if we look very closely at the things he said, especially his doubts expressed privately to friends, today’s mysteries of quantum mechanics may be lessened. As great as Einstein’s theories of Brownian motion and relativity are, they were accepted quickly because measurements were soon made that confirmed their predictions. Moreover, contemporaries of Einstein were working on these problems. Marion Smoluchowski worked out the equation for the rate of diffusion of large particles in a liquid the year before Einstein. -

Patterns in Random Walks and Brownian Motion

Patterns in Random Walks and Brownian Motion Jim Pitman and Wenpin Tang Abstract We ask if it is possible to find some particular continuous paths of unit length in linear Brownian motion. Beginning with a discrete version of the problem, we derive the asymptotics of the expected waiting time for several interesting patterns. These suggest corresponding results on the existence/non-existence of continuous paths embedded in Brownian motion. With further effort we are able to prove some of these existence and non-existence results by various stochastic analysis arguments. A list of open problems is presented. AMS 2010 Mathematics Subject Classification: 60C05, 60G17, 60J65. 1 Introduction and Main Results We are interested in the question of embedding some continuous-time stochastic processes .Zu;0Ä u Ä 1/ into a Brownian path .BtI t 0/, without time-change or scaling, just by a random translation of origin in spacetime. More precisely, we ask the following: Question 1 Given some distribution of a process Z with continuous paths, does there exist a random time T such that .BTCu BT I 0 Ä u Ä 1/ has the same distribution as .Zu;0Ä u Ä 1/? The question of whether external randomization is allowed to construct such a random time T, is of no importance here. In fact, we can simply ignore Brownian J. Pitman ()•W.Tang Department of Statistics, University of California, 367 Evans Hall, Berkeley, CA 94720-3860, USA e-mail: [email protected]; [email protected] © Springer International Publishing Switzerland 2015 49 C. Donati-Martin et al. -

1 Introduction Branching Mechanism in a Superprocess from a Branching

数理解析研究所講究録 1157 巻 2000 年 1-16 1 An example of random snakes by Le Gall and its applications 渡辺信三 Shinzo Watanabe, Kyoto University 1 Introduction The notion of random snakes has been introduced by Le Gall ([Le 1], [Le 2]) to construct a class of measure-valued branching processes, called superprocesses or continuous state branching processes ([Da], [Dy]). A main idea is to produce the branching mechanism in a superprocess from a branching tree embedded in excur- sions at each different level of a Brownian sample path. There is no clear notion of particles in a superprocess; it is something like a cloud or mist. Nevertheless, a random snake could provide us with a clear picture of historical or genealogical developments of”particles” in a superprocess. ” : In this note, we give a sample pathwise construction of a random snake in the case when the underlying Markov process is a Markov chain on a tree. A simplest case has been discussed in [War 1] and [Wat 2]. The construction can be reduced to this case locally and we need to consider a recurrence family of stochastic differential equations for reflecting Brownian motions with sticky boundaries. A special case has been already discussed by J. Warren [War 2] with an application to a coalescing stochastic flow of piece-wise linear transformations in connection with a non-white or non-Gaussian predictable noise in the sense of B. Tsirelson. 2 Brownian snakes Throughout this section, let $\xi=\{\xi(t), P_{x}\}$ be a Hunt Markov process on a locally compact separable metric space $S$ endowed with a metric $d_{S}(\cdot, *)$ . -

Constructing a Sequence of Random Walks Strongly Converging to Brownian Motion Philippe Marchal

Constructing a sequence of random walks strongly converging to Brownian motion Philippe Marchal To cite this version: Philippe Marchal. Constructing a sequence of random walks strongly converging to Brownian motion. Discrete Random Walks, DRW’03, 2003, Paris, France. pp.181-190. hal-01183930 HAL Id: hal-01183930 https://hal.inria.fr/hal-01183930 Submitted on 12 Aug 2015 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Discrete Mathematics and Theoretical Computer Science AC, 2003, 181–190 Constructing a sequence of random walks strongly converging to Brownian motion Philippe Marchal CNRS and Ecole´ normale superieur´ e, 45 rue d’Ulm, 75005 Paris, France [email protected] We give an algorithm which constructs recursively a sequence of simple random walks on converging almost surely to a Brownian motion. One obtains by the same method conditional versions of the simple random walk converging to the excursion, the bridge, the meander or the normalized pseudobridge. Keywords: strong convergence, simple random walk, Brownian motion 1 Introduction It is one of the most basic facts in probability theory that random walks, after proper rescaling, converge to Brownian motion. However, Donsker’s classical theorem [Don51] only states a convergence in law. -

And Nanoemulsions As Vehicles for Essential Oils: Formulation, Preparation and Stability

nanomaterials Review An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability Lucia Pavoni, Diego Romano Perinelli , Giulia Bonacucina, Marco Cespi * and Giovanni Filippo Palmieri School of Pharmacy, University of Camerino, 62032 Camerino, Italy; [email protected] (L.P.); [email protected] (D.R.P.); [email protected] (G.B.); gianfi[email protected] (G.F.P.) * Correspondence: [email protected] Received: 23 December 2019; Accepted: 10 January 2020; Published: 12 January 2020 Abstract: The interest around essential oils is constantly increasing thanks to their biological properties exploitable in several fields, from pharmaceuticals to food and agriculture. However, their widespread use and marketing are still restricted due to their poor physico-chemical properties; i.e., high volatility, thermal decomposition, low water solubility, and stability issues. At the moment, the most suitable approach to overcome such limitations is based on the development of proper formulation strategies. One of the approaches suggested to achieve this goal is the so-called encapsulation process through the preparation of aqueous nano-dispersions. Among them, micro- and nanoemulsions are the most studied thanks to the ease of formulation, handling and to their manufacturing costs. In this direction, this review intends to offer an overview of the formulation, preparation and stability parameters of micro- and nanoemulsions. Specifically, recent literature has been examined in order to define the most common practices adopted (materials and fabrication methods), highlighting their suitability and effectiveness. Finally, relevant points related to formulations, such as optimization, characterization, stability and safety, not deeply studied or clarified yet, were discussed. -

© in This Web Service Cambridge University Press Cambridge University Press 978-0-521-14577-0

Cambridge University Press 978-0-521-14577-0 - Probability and Mathematical Genetics Edited by N. H. Bingham and C. M. Goldie Index More information Index ABC, approximate Bayesian almost complete, 141 computation almost complete Abecasis, G. R., 220 metrizabilityalmost-complete Abel Memorial Fund, 380 metrizability, see metrizability Abou-Rahme, N., 430 α-stable, see subordinator AB-percolation, see percolation Alsmeyer, G., 114 Abramovitch, F., 86 alternating renewal process, see renewal accumulate essentially, 140, 161, 163, theory 164 alternating word, see word Addario-Berry, L., 120, 121 AMSE, average mean-square error additive combinatorics, 138, 162–164 analytic set, 147 additive function, 139 ancestor gene, see gene adjacency relation, 383 ancestral history, 92, 96, 211, 256, 360 Adler, I., 303 ancestral inference, 110 admixture, 222, 224 Anderson, C. W., 303, 305–306 adsorption, 465–468, 477–478, 481, see Anderson, R. D., 137 also cooperative sequential Andjel, E., 391 adsorption model (CSA) Andrews, G. E., 372 advection-diffusion, 398–400, 408–410 anomalous spreading, 125–131 a.e., almost everywhere Antoniak, C. E., 324, 330 Affymetrix gene chip, 326 Applied Probability Trust, 33 age-dependent branching process, see appointments system, 354 branching process approximate Bayesian computation Aldous, D. J., 246, 250–251, 258, 328, (ABC), 214 448 approximate continuity, 142 algorithm, 116, 219, 221, 226–228, 422, Archimedes of Syracuse see also computational complexity, weakly Archimedean property, coupling, Kendall–Møller 144–145 algorithm, Markov chain Monte area-interaction process, see point Carlo (MCMC), phasing algorithm process perfect simulation algorithm, 69–71 arithmeticity, 172 allele, 92–103, 218, 222, 239–260, 360, arithmetic progression, 138, 157, 366, see also minor allele frequency 162–163 (MAF) Arjas, E., 350 allelic partition distribution, 243, 248, Aronson, D.