Long Memory and Self-Similar Processes

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Brownian Motion and Relativity

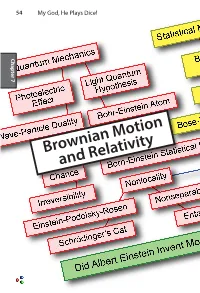

My God, He Plays Dice! Chapter 7: Brownian Motion and Relativity. In this chapter we describe two of Einstein’s greatest works that have little or nothing to do with his amazing and deeply puzzling theories about quantum mechanics. The first, Brownian motion, provided the first quantitative proof of the existence of atoms and molecules. The second, special relativity in his miracle year of 1905 and general relativity eleven years later, combined the ideas of space and time into a unified space-time with a non-Euclidean curvature that goes beyond Newton’s theory of gravitation. Einstein’s relativity theory explained the precession of the orbit of Mercury and predicted the bending of light as it passes the sun, confirmed by Arthur Stanley Eddington in 1919. He also predicted that galaxies can act as gravitational lenses, focusing light from objects far beyond, as was confirmed in 1979. He also predicted gravitational waves, only detected in 2016, one century after Einstein wrote down the equations that explain them. What are we to make of this man who could see things that others could not? Our thesis is that if we look very closely at the things he said, especially his doubts expressed privately to friends, today’s mysteries of quantum mechanics may be lessened. As great as Einstein’s theories of Brownian motion and relativity are, they were accepted quickly because measurements were soon made that confirmed their predictions. Moreover, contemporaries of Einstein were working on these problems. Marian Smoluchowski worked out the equation for the rate of diffusion of large particles in a liquid the year before Einstein.

An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability

Nanomaterials (Review). An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability. Lucia Pavoni, Diego Romano Perinelli, Giulia Bonacucina, Marco Cespi * and Giovanni Filippo Palmieri. School of Pharmacy, University of Camerino, 62032 Camerino, Italy; [email protected] (L.P.); [email protected] (D.R.P.); [email protected] (G.B.); gianfi[email protected] (G.F.P.) * Correspondence: [email protected] Received: 23 December 2019; Accepted: 10 January 2020; Published: 12 January 2020. Abstract: The interest around essential oils is constantly increasing thanks to their biological properties exploitable in several fields, from pharmaceuticals to food and agriculture. However, their widespread use and marketing are still restricted due to their poor physico-chemical properties; i.e., high volatility, thermal decomposition, low water solubility, and stability issues. At the moment, the most suitable approach to overcome such limitations is based on the development of proper formulation strategies. One of the approaches suggested to achieve this goal is the so-called encapsulation process through the preparation of aqueous nano-dispersions. Among them, micro- and nanoemulsions are the most studied thanks to the ease of formulation, handling and to their manufacturing costs. In this direction, this review intends to offer an overview of the formulation, preparation and stability parameters of micro- and nanoemulsions. Specifically, recent literature has been examined in order to define the most common practices adopted (materials and fabrication methods), highlighting their suitability and effectiveness. Finally, relevant points related to formulations, such as optimization, characterization, stability and safety, not deeply studied or clarified yet, were discussed.

Lewis Fry Richardson: Scientist, Visionary and Pacifist

Lett Mat Int, DOI 10.1007/s40329-014-0063-z. Lewis Fry Richardson: scientist, visionary and pacifist. Angelo Vulpiani. © Centro P.RI.ST.EM, Università Commerciale Luigi Bocconi 2014.

Abstract: The aim of this paper is to present the main aspects of the life of Lewis Fry Richardson and his most important scientific contributions. Of particular importance are the seminal concepts of turbulent diffusion, turbulent cascade and self-similar processes, which led to a profound transformation of the way weather forecasts, turbulent flows and, more generally, complex systems are viewed.

Keywords: Lewis Fry Richardson · Weather forecasting · Turbulent diffusion · Turbulent cascade · Numerical methods · Fractals · Self-similarity

Lewis Fry Richardson (1881–1953), while undeservedly little known, had a fundamental (often posthumous) role in twentieth-century science. The last of seven children in a thriving English Quaker family, in 1898 Richardson enrolled in Durham College of Science, and two years later was awarded a grant to study at King’s College in Cambridge, where he received a diverse education: he read physics, mathematics, chemistry, meteorology, botany, and psychology. At the beginning of his career he was quite uncertain about which road to follow, but he later resolved to work in several areas, just like the great German scientist Helmholtz, who was a physician and then a physicist, but following a different order. In his words, he decided “to spend the first half of my life under the strict discipline of physics, and afterwards to apply that training to researches on living things”. In addition to contributing important results to meteo-

Analytic and Geometric Background of Recurrence and Non-Explosion of the Brownian Motion on Riemannian Manifolds

Bulletin (New Series) of the American Mathematical Society, Volume 36, Number 2, Pages 135–249, S 0273-0979(99)00776-4. Article electronically published on February 19, 1999. Analytic and Geometric Background of Recurrence and Non-Explosion of the Brownian Motion on Riemannian Manifolds. Alexander Grigor’yan.

Abstract. We provide an overview of such properties of the Brownian motion on complete non-compact Riemannian manifolds as recurrence and non-explosion. It is shown that both properties have various analytic characterizations, in terms of the heat kernel, Green function, Liouville properties, etc. On the other hand, we consider a number of geometric conditions such as the volume growth, isoperimetric inequalities, curvature bounds, etc., which are related to recurrence and non-explosion.

Contents: 1. Introduction; Acknowledgments; Notation; 2. Heat semigroup on Riemannian manifolds (2.1. Laplace operator of the Riemannian metric; 2.2. Heat kernel and Brownian motion on manifolds; 2.3. Manifolds with boundary); 3. Model manifolds (3.1. Polar coordinates; 3.2. Spherically symmetric manifolds); 4. Some potential theory (4.1. Harmonic functions; 4.2. Green function; 4.3. Capacity; 4.4. Massive sets; 4.5. Hitting probabilities; 4.6. Exterior of a compact); 5. Equivalent definitions of recurrence; 6. Equivalent definitions of stochastic completeness; 7. Parabolicity and volume growth (7.1. Upper bounds of capacity; 7.2. Sufficient conditions for parabolicity); 8. Transience and isoperimetric inequalities; 9. Non-explosion and volume growth.

Received by the editors October 1, 1997, and, in revised form, September 2, 1998.

A Guide to Brownian Motion and Related Stochastic Processes

A guide to Brownian motion and related stochastic processes. Jim Pitman and Marc Yor. Dept. Statistics, University of California, 367 Evans Hall # 3860, Berkeley, CA 94720-3860, USA. e-mail: [email protected]. arXiv:1802.09679v1 [math.PR] 27 Feb 2018.

Abstract: This is a guide to the mathematical theory of Brownian motion and related stochastic processes, with indications of how this theory is related to other branches of mathematics, most notably the classical theory of partial differential equations associated with the Laplace and heat operators, and various generalizations thereof. As a typical reader, we have in mind a student, familiar with the basic concepts of probability based on measure theory, at the level of the graduate texts of Billingsley [43] and Durrett [106], and who wants a broader perspective on the theory of Brownian motion and related stochastic processes than can be found in these texts.

Keywords and phrases: Markov process, random walk, martingale, Gaussian process, Lévy process, diffusion. AMS 2000 subject classifications: Primary 60J65.

Contents: 1 Introduction (1.1 History; 1.2 Definitions); 2 BM as a limit of random walks; 3 BM as a Gaussian process (3.1 Elementary transformations; 3.2 Quadratic variation; 3.3 Paley-Wiener integrals; 3.4 Brownian bridges; 3.5 Fine structure of Brownian paths; 3.6 Generalizations: 3.6.1 Fractional BM, 3.6.2 Lévy’s BM, 3.6.3 Brownian sheets; 3.7 References); 4 BM as a Markov process (4.1 Markov processes and their semigroups; 4.2 The strong Markov property; 4.3 Generators ...

Math Morphing Proximate and Evolutionary Mechanisms

Curriculum Units by Fellows of the Yale-New Haven Teachers Institute, 2009 Volume V: Evolutionary Medicine. Math Morphing Proximate and Evolutionary Mechanisms. Curriculum Unit 09.05.09 by Kenneth William Spinka.

Contents: Introduction; Background; Essential Questions; Lesson Plans; Website; Student Resources; Glossary Of Terms; Bibliography; Appendix.

Introduction. An important theoretical development was Nikolaas Tinbergen's distinction, made originally in ethology, between evolutionary and proximate mechanisms; Randolph M. Nesse and George C. Williams summarize its relevance to medicine: All biological traits need two kinds of explanation: proximate and evolutionary. The proximate explanation for a disease describes what is wrong in the bodily mechanism of individuals affected by it. An evolutionary explanation is completely different. Instead of explaining why people are different, it explains why we are all the same in ways that leave us vulnerable to disease. Why do we all have wisdom teeth, an appendix, and cells that if triggered can rampantly multiply out of control? [1]

A fractal is generally "a rough or fragmented geometric shape that can be split into parts, each of which is (at least approximately) a reduced-size copy of the whole," a property called self-similarity. The term was coined by Benoît Mandelbrot in 1975 and was derived from the Latin fractus meaning "broken" or "fractured." A mathematical fractal is based on an equation that undergoes iteration, a form of feedback based on recursion. http://www.kwsi.com/ynhti2009/image01.html A fractal often has the following features: 1. It has a fine structure at arbitrarily small scales.

Chapter 2: Algorithms and Pain Relief

Biophysics and Physiological Modeling. Chapter 2: Algorithms and pain relief. v.4.6 © pHn 2020.

Introduction: Camp Omelet. The marble game we developed in CHAPTER 1 is an excellent way to understand how diffusion works, but it’s still just a game. In this chapter we’ll learn how to turn the marble game into a realistic simulation of physiological situations ranging from single molecules up to the entire human body (drug elimination). In order to do that, we’ll need to learn an essential skill for later chapters: how to design and implement quantitative models using algorithms. An algorithm is like the recipe for an omelet. It’s a list of ingredients and a set of instructions written in a form that someone else can understand and follow to perform a complex task. Imagine… Your family’s going camping over Mother’s Day weekend. The family tradition is for you to make Mom’s favorite omelets for breakfast, but you’re going to be out of town for an important interview at a research university. Your little brother Bert is willing to cook the omelets, but he’s never made them before. You’re about to get on a plane, but before you do, you need to email instructions to him. The family will be camping in a tent overnight, so Bert will need to collect everything he needs before they leave this afternoon, including the special ingredients: a pinch of dried tarragon and crumbled blue cheese that are lightly beaten into the egg mixture before it’s cooked.

Self-Similar Processes, Fractional Brownian Motion and Statistical Inference

isid/ms/2003/24, September 11, 2003. http://www.isid.ac.in/~statmath/eprints. Self-Similar Processes, Fractional Brownian Motion and Statistical Inference. B. L. S. Prakasa Rao, Indian Statistical Institute, Delhi Centre, 7, SJSS Marg, New Delhi–110 016, India.

Abstract. Self-similar stochastic processes are used for stochastic modeling whenever it is expected that long range dependence may be present in the phenomenon under consideration. After discussing some basic concepts of self-similar processes and fractional Brownian motion, we review some recent work on parametric and nonparametric inference for estimation of parameters for linear systems of stochastic differential equations driven by a fractional Brownian motion.

Keywords and phrases: Self-similar process; fractional Brownian motion; fractional Ornstein-Uhlenbeck type process; Girsanov-type theorem; Maximum likelihood estimation; Bayes estimation; Nonparametric inference; Linear stochastic systems. AMS Subject classification (2000): Primary 62M09, Secondary 60G15.

1 Introduction. “Asymptotic Distributions in Some Nonregular Statistical Problems” was the topic of my Ph.D. Dissertation prepared under the guidance of Prof. Herman Rubin at Michigan State University in 1966. One of the nonregular problems studied in the dissertation was the problem of estimation of the location of the cusp of a continuous density. The approach adopted was to study the limiting distribution, if any, of the log-likelihood ratio process and then obtain the asymptotic properties of the maximum likelihood estimator. It turned out that the limiting process is a nonstationary Gaussian process. The name fractional Brownian motion was not in vogue in those years, and the limiting process is nothing but a functional shift of a fractional Brownian motion.

Brownian Motion I

BROWNIAN MOTION I. Bertrand Duplantier, Service de Physique Théorique, Saclay, France. DNA & CHROMOSOMES 2006: Physical & Biological Approaches. Institut d’Études Scientifiques de Cargèse, 19 June - 1st July 2006.

“Thus, let us assume, for example, that someone jots down a number of points at random on a piece of paper. [...] I maintain that it is possible to find a geometric line whose notion is constant and uniform, following a certain rule, such that this line passes through all the points in the same order in which the hand jotted them down [...] But, when the rule is extremely complex, what is in conformity with it passes for irregular.” G. W. LEIBNIZ, Discourse on Metaphysics, 1686.

A Possible Precursor to Brown. L’astronome, 1668, Musée du Louvre, Paris; Der Geographer, 1668-69, Städelsches Kunstinstitut am Main, Frankfurt. Johannes VERMEER (1632-1675). Who is in the guise of the Scientist? The Scientist: Antony van Leeuwenhoek (1632-1723).

ROBERT BROWN. Robert Brown (1773-1858). Robert Brown (1827), Edinburgh New Phil. J. 5, 358 (1828): “A Brief Account of Microscopical Observations Made in the Months of June, July and August, 1827, on the Particles Contained in the Pollen of Plants; and on the General Existence of ACTIVE MOLECULES in Organic and Inorganic Bodies”. The Scottish botanist Robert Brown reports on the ceaseless random motion of various particles small enough to be suspended in water. Adolphe Brongniart (1827), Ann. Sci. Naturelles (Paris) 12, note B, pp. 44-46 (1827). Aspect of the Brownian motion of a pollen grain in suspension. The motion is extremely erratic, and apparently never stops; it is universal, applying to organic and inorganic particles as well (Sphinx of Gizeh). Charles Darwin (1809-1882) about the 1830’s: “I saw a good deal of Robert Brown, “facile Princeps Botanicorum,” as he was called by Humboldt.

Brownian Motion and Liouville's Theorem

BROWNIAN MOTION AND LIOUVILLE'S THEOREM. CHEN HUI GEORGE TEO. Date: August 29, 2012.

Abstract. Probability theory has many deep and surprising connections with the theory of partial differential equations. We explore one such connection, namely the proof of Liouville's theorem by means of stochastic integration.

Contents: 1. Introduction; 2. Brownian Motion (2.1. Definition and basic properties; 2.2. Markov property; 2.3. Harmonic functions and Dirichlet Problem; 2.4. Recurrence; 2.5. Martingales); 3. Stochastic Calculus (3.1. Definition and basic properties; 3.2. Ito formula); 4. Levy's Theorem and Liouville's Theorem (4.1. Levy's Theorem; 4.2. Liouville's Theorem); Acknowledgments; References.

1. Introduction. In this paper, we prove Liouville's theorem from complex analysis by using results about Brownian motion and stochastic integration. While it is certainly not the easiest proof of Liouville's theorem, it demonstrates the intricate connections between probability and partial differential equations. We assume the reader is familiar with basic abstract measure theory at the level of Kolmogorov [2]. We also assume the reader is familiar with basic results from measure-theoretic probability theory. A reader unfamiliar with measure-theoretic probability theory can consult Williams [6] up to the section on conditional expectation. Finally, throughout this paper we shall use a common probabilist's shorthand "a.s." in place of "almost surely". The proof of Liouville's theorem via Brownian motion will proceed by showing the following facts: (1) 2-dimensional Brownian motion is neighborhood recurrent, and therefore dense

Brownian Motion and the Atomic Theory

Brownian Motion and The Atomic Theory. Albert Einstein Annus Mirabilis Centenary Lecture. Simeon Hellerman, Institute for Advanced Study, 5/20/2005, Founders' Day.

1. What phenomenon did Einstein explain? (a) Random motion of particles in liquid (b) Dependence on temperature (c) Dependence on viscosity of the liquid (d) Dependence on the size of the particles. 2. How did he explain it? (a) Statistical Theory of Heat (b) Ideal Gases (c) Treat Microscopic and Macroscopic Objects Uniformly. 3. Why was it important? (a) Universal Validity of Statistical Mechanics (b) Reality of Atoms and Molecules.

Robert Brown's Discovery. [Figure label: pollen grains.] In 1827 Robert Brown, a Scottish botanist and curator of the British Museum, observed that pollen grains suspended in water, instead of remaining stationary or falling downwards, would trace out a random zig-zagging pattern. This process, which could be observed easily with a microscope, gained the name of Brownian motion.

1865. [Figure labels: May 1864, May 1865.] It is discovered that no matter how long the particles are left in the liquid in a sealed container, they never come to rest or even slow down. The experimenters observed a system of pollen motes in a sealed volume of liquid over a period of one year, and Brown's zig-zagging effect continued undiminished.

1870. [Figure labels: glycerol, η = 1.5 Pa·s; ethyl alcohol, η = 0.25 × 10⁻³ Pa·s.] It is discovered that the speed with which the pollen motes disperse was slower in more viscous liquid and faster in less viscous liquid.

1900. It is discovered that the zig-zagging gets faster as the temperature of the water is raised; also, that the motion becomes slower as the size of the particles is made larger.

Self-Similar Processes with Self-Similarity: Basic Properties

Appendix A. Self-similar Processes with Self-similarity: Basic Properties. C.A. Tudor, Analysis of Variations for Self-similar Processes, Probability and Its Applications, DOI 10.1007/978-3-319-00936-0, © Springer International Publishing Switzerland 2013.

The first paper to give a rigorous mathematical analysis of self-similar processes is [106]. There are several monographs (see e.g. [75, 160]) that the reader can consult in order to build a more complete picture of self-similarity and related topics.

A.1 One-Parameter Self-similar Processes. Let us define the concept of self-similarity.

Definition A.1. A stochastic process $(X_t)_{t \ge 0}$ is called self-similar if there exists a real number $H > 0$ such that for any $c > 0$ the processes $(X_{ct})_{t \ge 0}$ and $(c^H X_t)_{t \ge 0}$ have the same finite dimensional distributions.

Remark A.1. A self-similar process satisfies $X_0 = 0$ almost surely.

Definition A.2. A stochastic process $(X_t)_{t \ge 0}$ is said to be with stationary increments if for any $h > 0$ the distribution of the process $(X_{t+h} - X_h)_{t \ge 0}$ does not depend on $h$.

The self-similar processes with stationary increments all have the same covariance.

Theorem A.1. Let $(X_t)_{t \ge 0}$ be a non-trivial $H$-self-similar process with stationary increments such that $E X_1^2 < \infty$. Then

$$E X_t X_s = \frac{1}{2} E X_1^2 \left( t^{2H} + s^{2H} - |t - s|^{2H} \right).$$

Proof. Let $s \le t$. Writing

$$X_t X_s = \frac{1}{2} \left( X_t^2 + X_s^2 - (X_t - X_s)^2 \right)$$

we get

$$E X_t X_s = \frac{1}{2} \left( E X_t^2 + E X_s^2 - E X_{t-s}^2 \right) = \frac{1}{2} E X_1^2 \left( t^{2H} + s^{2H} - |t - s|^{2H} \right).$$
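The covariance formula of Theorem A.1 can be checked numerically. The following is a minimal sketch and not part of the excerpt: the function name `fbm_covariance` and the parameter `var1` (standing for $E X_1^2$) are chosen here purely for illustration. It evaluates the covariance and verifies two consequences: for $H = 1/2$ it reduces to $\min(t, s)$, the Brownian motion covariance, and rescaling both times by $c$ multiplies it by $c^{2H}$, as self-similarity requires.

```python
import math


def fbm_covariance(t, s, hurst, var1=1.0):
    """Covariance E[X_t X_s] from Theorem A.1 for t, s >= 0.

    hurst is the self-similarity index H > 0 and var1 stands for E[X_1^2].
    Both names are illustrative assumptions, not taken from the source.
    """
    two_h = 2.0 * hurst
    return 0.5 * var1 * (t ** two_h + s ** two_h - abs(t - s) ** two_h)


# For H = 1/2 the formula reduces to min(t, s), the Brownian covariance.
print(math.isclose(fbm_covariance(2.0, 3.0, 0.5), min(2.0, 3.0)))

# Self-similarity: scaling both times by c scales the covariance by c^(2H).
c, t, s, H = 4.0, 1.5, 0.7, 0.8
lhs = fbm_covariance(c * t, c * s, H)
rhs = c ** (2 * H) * fbm_covariance(t, s, H)
print(math.isclose(lhs, rhs))
```

The check is purely algebraic: it confirms the scaling behaviour of the formula, not the distributional statement of Definition A.1, which would require simulating paths.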