Adaptive Predictors for Extracting Physiological Signals in Two

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Central Florida Future, Vol. 12 No. 14, November 30, 1979

University of Central Florida STARS Central Florida Future University Archives 11-30-1979 Central Florida Future, Vol. 12 No. 14, November 30, 1979 Part of the Mass Communication Commons, Organizational Communication Commons, Publishing Commons, and the Social Influence and Political Communication Commons Find similar works at: https://stars.library.ucf.edu/centralfloridafuture University of Central Florida Libraries http://library.ucf.edu This Newsletter is brought to you for free and open access by the University Archives at STARS. It has been accepted for inclusion in Central Florida Future by an authorized administrator of STARS. For more information, please contact [email protected]. Recommended Citation "Central Florida Future, Vol. 12 No. 14, November 30, 1979" (1979). Central Florida Future. 380. https://stars.library.ucf.edu/centralfloridafuture/380 UCF LIBRARY ARCHIVES University of Central Florida Friday, November 30, 1979 Energy costs exceed university's budget by Barbara Cowell, associate editor UCF's energy consumption has risen and is no longer within the budget projected for this year, according to Richard V. Neuhaus, assistant director of the Physical Plant. The proposed budget for the year underestimated the rising cost of energy. According to Neuhaus, the cost per energy unit has risen over 35 percent and shows no sign of abating. "At this time," he said, "the cost of the kilowatt hour has risen from three to five cents." "They tell me that at other universities they're having problems with budgets," said Neuhaus. "Especially campuses like USF and UF, where medical centers are situated and consume a great amount of energy." According to Neuhaus, UCF has implemented a Delta 1000 and 2000 Honeywell system for energy management. -

Modernization of the Czech Air Force

Calhoun: The NPS Institutional Archive Theses and Dissertations Thesis Collection 2001-06 Modernization of the Czech Air Force. Vlcek, Vaclav. http://hdl.handle.net/10945/10888 NAVAL POSTGRADUATE SCHOOL Monterey, California THESIS MODERNIZATION OF THE CZECH AIR FORCE by Vaclav Vlcek June 2001 Thesis Advisor: Raymond Franck Associate Advisor: Gregory Hildebrandt Approved for public release; distribution is unlimited. 20010807 033 REPORT DOCUMENTATION PAGE Form Approved OMB No. 0704-0188 Public reporting burden for this collection of information is estimated to average 1 hour per response, including the time for reviewing instruction, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden, to Washington Headquarters Services, Directorate for Information Operations and Reports, 1215 Jefferson Davis Highway, Suite 1204, Arlington, VA 22202-4302, and to the Office of Management and Budget, Paperwork Reduction Project (0704-0188) Washington DC 20503. 1. AGENCY USE ONLY (Leave blank) 2. REPORT DATE June 2001 3. REPORT TYPE AND DATES COVERED Master's Thesis 4. TITLE AND SUBTITLE: MODERNIZATION OF THE CZECH AIR FORCE 5. FUNDING NUMBERS 6. AUTHOR(S) Vaclav Vlcek 7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES) Naval Postgraduate School Monterey, CA 93943-5000 8. PERFORMING ORGANIZATION REPORT NUMBER 9. SPONSORING / MONITORING AGENCY NAME(S) AND ADDRESS(ES) N/A 10. SPONSORING / MONITORING AGENCY REPORT NUMBER 11. SUPPLEMENTARY NOTES The views expressed in this thesis are those of the author and do not reflect the official policy or position of the Department of Defense or the U.S. -

Distributed $(\Delta+1)$-Coloring Via Ultrafast Graph Shattering | SIAM

SIAM J. COMPUT. © 2020 Society for Industrial and Applied Mathematics Vol. 49, No. 3, pp. 497–539 DISTRIBUTED $(\Delta+1)$-COLORING VIA ULTRAFAST GRAPH SHATTERING YI-JUN CHANG, WENZHENG LI, AND SETH PETTIE Abstract. Vertex coloring is one of the classic symmetry breaking problems studied in distributed computing. In this paper, we present a new algorithm for $(\Delta+1)$-list coloring in the randomized LOCAL model running in $O(\mathrm{Det}_d(\mathrm{poly}\log n)) = O(\mathrm{poly}(\log\log n))$ time, where $\mathrm{Det}_d(n')$ is the deterministic complexity of $(\deg+1)$-list coloring on $n'$-vertex graphs. (In this problem, each $v$ has a palette of size $\deg(v)+1$.) This improves upon a previous randomized algorithm of Harris, Schneider, and Su [J. ACM, 65 (2018), 19] with complexity $O(\sqrt{\log\Delta} + \log\log n + \mathrm{Det}_d(\mathrm{poly}\log n)) = O(\sqrt{\log n})$. Unless $\Delta$ is small, it is also faster than the best known deterministic algorithm of Fraigniaud, Heinrich, and Kosowski [Proceedings of the 57th Annual IEEE Symposium on Foundations of Computer Science (FOCS), 2016] and Barenboim, Elkin, and Goldenberg [Proceedings of the 38th Annual ACM Symposium on Principles of Distributed Computing (PODC), 2018], with complexity $O(\sqrt{\Delta\log\Delta}\,\log^{*}\Delta + \log^{*} n)$. Our algorithm's running time is syntactically very similar to the $\Omega(\mathrm{Det}(\mathrm{poly}\log n))$ lower bound of Chang, Kopelowitz, and Pettie [SIAM J. Comput., 48 (2019), pp. 122–143], where $\mathrm{Det}(n')$ is the deterministic complexity of $(\Delta+1)$-list coloring on $n'$-vertex graphs. -

Energy Consumption

COMMONWEALTH OF MASSACHUSETTS EXECUTIVE OFFICE OF ENERGY AND ENVIRONMENTAL AFFAIRS DEPARTMENT OF ENERGY RESOURCES ENERGY MANAGEMENT BASICS FOR MUNICIPAL PLANNERS AND MANAGERS Deval L. Patrick Richard K. Sullivan, Jr. Governor Secretary, Executive Office of Energy and Environmental Affairs Mark Sylvia Commissioner, Department of Energy Resources Acknowledgements This guide was prepared under the direction of Eileen McHugh. Readers may obtain specific information from the Department at (617) 626-7300 DOER would like to thank John Snell at the Peregrine Energy Group for his contribution to the development of this guide. i TABLE OF CONTENTS Introduction 1 How to Use the Guide 2 Defining Energy Management 2 Benefits of Energy Management 3 Cost-Effectiveness 3 Incentives Can Win Support for the Program 3 Communication and Training are Crucial 3 A Program That Will Get Results 4 Summary 4 I: Decide the Next Step 5 Define Energy Management Project Scope 5 Identify Energy Management Stakeholders and Project Leader 5 Identify State and Federal Technical and Financial Support Resources 7 Establish Management Policies, Responsibilities, and Priorities 7 Define an Action Plan 7 Identify and Quantify Energy Consumption 8 Monitor and Improve Building Operating Efficiency and Performance 8 II: Identify and Quantify Energy Consumption 9 Understanding How Buildings Use Energy 9 Energy Consumption Data 9 The Energy Budget: How well is the Building Doing? 11 Energy Consumption: Costs and the Annual Efficiency Index 11 MassEnergyInsight 12 III: Estimate Energy -

Phylogeography of the Manybar Goatfish, Parupeneus Multifasciatus, Reveals Isolation of the Hawaiian Archipelago and a Cryptic Species in the Marquesas Islands

Bull Mar Sci. 90(1):493–512. 2014 research paper http://dx.doi.org/10.5343/bms.2013.1032 Phylogeography of the manybar goatfish, Parupeneus multifasciatus, reveals isolation of the Hawaiian Archipelago and a cryptic species in the Marquesas Islands Zoltán Szabó 1 *, Brent Snelgrove 2, Matthew T Craig 3, Luiz A Rocha 4, Brian W Bowen 1. 1 Hawai‘i Institute of Marine Biology, University of Hawai‘i, Kaneohe, Hawaii 96744. 2 Department of Biology, University of Hawai‘i, Honolulu, Hawaii 96822. 3 Department of Marine Science and Environmental Studies, University of San Diego, San Diego, California 92110. 4 Section of Ichthyology, California Academy of Sciences, 55 Music Concourse Dr, San Francisco, California 94118. * Corresponding author email: <[email protected]>. Date Submitted: 4 April, 2013. ABSTRACT: To assess genetic connectivity in a common and abundant goatfish (family Mullidae), we surveyed 637 specimens of Parupeneus multifasciatus (Quoy and Gaimard, 1825) from 15 locations in the Hawaiian Islands plus Johnston Atoll, two locations in the Line Islands, two locations in French Polynesia, and two locations in the northwestern Pacific. Based on mitochondrial cytochrome b sequences, we found no evidence of population structure across Hawaii and the North Pacific; however, we observed genetic structuring between northern and southern Pacific locations with the equator-straddling Line Islands affiliated with the southern population. The Marquesas Islands sample in the South Pacific was highly divergent (d = 4.12% average sequence divergence from individuals from all other locations) indicating a cryptic species. These findings demonstrate that this goatfish is capable of extensive dispersal consistent with early life history traits in Mullidae, -

Improving the Air Force Medical Service's

Dissertation Improving the Air Force Medical Service’s Expeditionary Medical Support System: A Simulation Approach Analysis of Mass-Casualty Combat and Disaster Relief Scenarios John A. Hamm This document was submitted as a dissertation in September 2018 in partial fulfillment of the requirements of the doctoral degree in public policy analysis at the Pardee RAND Graduate School. The faculty committee that supervised and approved the dissertation consisted of Brent Thomas (Chair), Bart Bennett, and Jose Sorto. PARDEE RAND GRADUATE SCHOOL For more information on this publication, visit http://www.rand.org/pubs/rgs_dissertations/RGSDA343-1.html Published 2020 by the RAND Corporation, Santa Monica, Calif. R® is a registered trademark Limited Print and Electronic Distribution Rights This document and trademark(s) contained herein are protected by law. This representation of RAND intellectual property is provided for noncommercial use only. Unauthorized posting of this publication online is prohibited. Permission is given to duplicate this document for personal use only, as long as it is unaltered and complete. Permission is required from RAND to reproduce, or reuse in another form, any of its research documents for commercial use. For information on reprint and linking permissions, please visit www.rand.org/pubs/permissions.html. The RAND Corporation is a research organization that develops solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier and more prosperous. RAND is nonprofit, nonpartisan, and committed to the public interest. RAND’s publications do not necessarily reflect the opinions of its research clients and sponsors. 
Support RAND Make a tax-deductible charitable contribution at www.rand.org/giving/contribute www.rand.org Abstract Research finds minor changes to the Air Force’s Expeditionary Medical Support System (EMEDS) that produce significant impacts on patient outcomes in mass-casualty events. -

1980-81 Volume 101 No

THE OF PHI KAPPA PSI FRATERNITY Vol. 101/No. 1/January, '81 Contents: Minnesota Beta 3 The Phi Psi Buyers Guide 4 1980 Phi Psi at the Crossroads GAC 6 GAC Award Winners 11 GAC Registration 13 What the GAC Did 15 An Edict of the Executive Council 16 Statement on Fraternity Education 17 Founded February 19, 1852, at Jefferson College, Canonsburg, Pa., by CHARLES PAGE THOMAS MOORE Born Feb. 8, 1831, in Greenbrier County, Va. Died July 7, 1904, in Mason County, W. Va. WILLIAM HENRY LETTERMAN Born August 12, 1832, at Canonsburg, Pa. Died May 23, 1881, at Duffau, Texas The Executive Council Officers President, John R. Donnell, Jr., 134 Lindbergh Dr., N.E., Atlanta, Ga. 30305 Vice President, John K. Boyd III, 849 West 52nd Terr., Kansas City, Mo. 64112 Treasurer, John A. Burke, 235 South East St., Medina, Ohio 44256 Secretary, Bryan P. Muecke, 2222 Rio Grande, Suite D-104, Austin, Tex. 78705 Archon, District I: Todd M. Ryder, Phi Kappa Psi Fraternity, 4 Fraternity Circle, Kingston, R.I. 02881 Archon, District II: D. Randolph Drosick, Phi Kappa Psi Fraternity, 780 Spruce St., Morgantown, W. Va. 26505 Archon, District III: Mark R. Ricketts, Phi Kappa Psi Fraternity, 122 South Campus Ave., Oxford, Ohio 45056 Archon, District IV: Larry L. Light, Phi Kappa Psi Fraternity, P.O. Box 14008, Gainesville, Fla. 32604 Archon, District V: Gerald "Jay" Donohue, Jr., Phi Kappa Psi Fraternity, 1602 West 15th St., Lawrence, Kans. 66044 Archon, District VI: Jack P. Eckley, 938 West 28th St., Los Angeles, Calif. 90007 Attorney General, Paul J. LaPuzza, 6910 Pacific, Suite 320, Omaha, Nebr. -

19700032955.Pdf

INDEX (Editors: This fact sheet contains information on NASA's space science and applications program. It is suggested that it be retained in your files.) Page Background on the space science and applications program 1-2 Applications Technology Satellite program 3 Atmosphere Explorer program 4 Earth Resources Technology Satellite program 5 Geodetic Earth Orbiting Satellite program 6 Improved TIROS Operational Satellite program 7 Interplanetary Monitoring Platform program 8 Mariner Mars 1971 program 9 Mariner Venus/Mercury 1973 program 10 Nimbus program 11 Orbiting Astronomical Observatory program 12 Orbiting Geophysical Observatory program 13 Orbiting Solar Observatory program 14 Pioneer F & G program 15 Radio Astronomy Explorer program 16 Small Astronomy Satellite program 17 Small Scientific Satellite program 18 Synchronous Meteorological Satellite program 19 Viking program 20 The Office of Space Science and Applications (OSSA) of the National Aeronautics and Space Administration is responsible for almost all unmanned launches concerned with scientific investigations as well as those having a direct benefit to mankind. OSSA's Earth-orbiting spacecraft have investigated the near-Earth environment discovering new scientific facts, such as the Van Allen radiation belts, energetic particle and solar activity, the shape of the Earth and meteorological phenomena not possible to obtain in any other way. Scientific spacecraft have looked, safely above the atmospheric curtain, far into space to receive more information on the formation of planets, stars and galaxies. Automated spacecraft have observed Mars and Venus, mapped the Moon, observed the Sun from various points in the solar system and future missions will give us even more information on previously visited planets as well as Mercury, Jupiter, Saturn, Uranus and Neptune. -

O51739533 1968.Pdf

NASA BUDGET ANALYSIS FY 1968 DATA PUBLICATIONS West Building - Washington National Airport Washington, D. C. 20001 NASA Headquarters library 300 E St. SW Rm. 1120 Washington, DC 20546 TABLE OF CONTENTS AN ANALYSIS OF FY 1968 BUDGET 1 NASA BUDGET Research and Development 6 Construction of Facilities 7 Administrative Operations 7 TABLES Research and Development Programs 9 Manned Space Flight 10 Space Science and Applications 11 & 12 Advanced Research and Technology 13 & 14 Tracking and Data Acquisition 15 Construction of Facilities 16 Projects by Installation 17 & 18 Administrative Operations 19 PROGRAMS Apollo Program 20 Physics and Astronomy Program 51 Lunar and Planetary Exploration Program 57 Voyager Program 61 Sustaining University Program 65 Launch Vehicle Development Program 66 Launch Vehicle Procurement Program 67 Bioscience Program 70 Space Applications Program 73 Basic Research Program 82 Space Vehicle Systems Program 85 Electronics Systems Program 89 Human Factor Systems Program 92 Space Power and Electric Propulsion Systems Program 95 Nuclear Rockets Program 99 Chemical Propulsion Program 101 Aeronautics Program 105 Tracking and Data Acquisition Program 115 Technology Utilization Program 118 FY 1968 BUDGET NATIONAL AERONAUTICS & SPACE ADMINISTRATION A total program of $5,110,000,000 is requested by NASA, to be financed by $5,050,000,000 in new obligational authority and $60,000,000 of prior year funds, to maintain effort in current programs at a level deemed important to the maintenance of the United States world position in space and aeronautics. The industrial community, under contracts with the NASA, will continue to carry forward the prime design, development and fabrication effort of the NASA program. -

The Complete Book of Spaceflight: from Apollo 1 to Zero Gravity

The Complete Book of Spaceflight From Apollo 1 to Zero Gravity David Darling John Wiley & Sons, Inc. This book is printed on acid-free paper. Copyright © 2003 by David Darling. All rights reserved. Published by John Wiley & Sons, Inc., Hoboken, New Jersey Published simultaneously in Canada No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 750-4470, or on the web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008, email: [email protected]. Limit of Liability/Disclaimer of Warranty: While the publisher and the author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor the author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages. -

DCS M-2000C Flight Manual EN.Pdf

The information provided in this manual is preliminary and subject to revision. Table of Contents Introduction 6 Cockpit 6 Engines 7 Payload and armaments 7 Sensors and avionics 7 General Characteristics 9 Acknowledgments 11 Keyboard Map 12 Chapter 1: Instruments Layout 14 Instruments Panel Map -

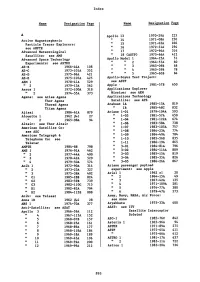

Index Name Designation Page Active Magnetospheric Particle Tracer Explorers: See AMPTE Advanced Meteorological Satellite: See AM

Index

| Name | Designation | Page |
|---|---|---|
| **A** | | |
| Active Magnetospheric Particle Tracer Explorers: see AMPTE | | |
| Advanced Meteorological Satellite: see AMS | | |
| Advanced Space Technology Experiments: see ASTEX | | |
| AE-B | 1966-44A | 108 |
| AE-C | 1973-101A | 352 |
| AE-D | 1975-96A | 421 |
| AE-E | 1975-107A | 425 |
| AEM 1 | 1978-41A | 529 |
| AEM 2 | 1979-13A | 562 |
| Aeros 1 | 1972-100A | 318 |
| Aeros 2 | 1974-55A | 373 |
| Agena: see Atlas Agena, Thor Agena, Thorad Agena, Titan Agena | | |
| Ajisai | 1986-61A | 879 |
| Alouette 1 | 1962 βα 1 | 27 |
| Alouette 2 | 1965-98A | 94 |
| Altair: see Thor Altair | | |
| American Satellite Co: see ASC | | |
| American Telegraph & Telephone Co: see Telstar | | |
| AMPTE | 1984-88 | 798 |
| AMS 1 | 1976-91A | 462 |
| AMS 2 | 1977-44A | 490 |
| AMS 3 | 1978-42A | 529 |
| AMS 4 | 1979-50A | 574 |
| Apollo 13 | 1970-29A | 223 |
| Apollo 14 | 1971-08A | 250 |
| Apollo 15 | 1971-63A | 266 |
| Apollo 16 | 1972-31A | 294 |
| Apollo 17 | 1972-96A | 316 |
| Apollo 18 (ASTP) | 1975-66A | 412 |
| Apollo Model 1 | 1964-25A | 51 |
| Apollo Model 2 | 1964-57A | 60 |
| Apollo Model 3 | 1965-09B | 68 |
| Apollo Model 4 | 1965-39B | 78 |
| Apollo Model 5 | 1965-60B | 84 |
| Apollo-Soyuz Test Project: see ASTP | | |
| Apple | 1981-57B | 650 |
| Applications Explorer Mission: see AEM | | |
| Applications Technology Satellite: see ATS | | |
| Arabsat 1A | 1985-15A | 819 |
| Arabsat 1B | 1985-48C | 832 |
| Ariane 1-01 | 1979-104A | 593 |
| Ariane 1-03 | 1981-57A | 650 |
| Ariane 1-04 | 1981-122A | 674 |
| Ariane 1-06 | 1983-58A | 738 |
| Ariane 1-07 | 1983-105A | 757 |
| Ariane 1-08 | 1984-23A | 774 |
| Ariane 1-09 | 1984-49A | 784 |
| Ariane 1-10 | 1985-56B | 835 |
| Ariane 1-11 | 1986-19A | 865 |
| Ariane 3-01 | 1984-81A | 796 |
| Ariane 3-02 | 1984-114A | 809 |
| Ariane 3-03 | 1985-15A | 819 |
| Ariane 3-04 | 1985-35A | 826 |
| Ariane 3 .. | | |