Revisiting the Compcars Dataset for Hierarchical Car Classification

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

2016 Board of Directors Contents

EFFECTIVE FIRST DAY OF THE MONTH UNLESS OTHERWISE NOTED January 2016 BOARD OF DIRECTORS CONTENTS BOARD OF DIRECTORS | December 4-5, 2015 BOARD OF DIRECTORS 1 SOLO 28 The SCCA National Board of Directors met in Kansas City, Friday, December 4 and SEB Minutes 28 December 5, 2015. Area Directors participating were: John Walsh, Chairman, Dan Helman, Vice-Chairman, Todd Butler, Secretary; Bill Kephart, Treasurer; Dick Patullo, CLUB RACING 32 Lee Hill, Steve Harris, Bruce Lindstrand, Terry Hanushek, Tere Pulliam, Peter Zekert, CRB Minutes 32 Brian McCarthy and KJ Christopher and newly elected directors Arnold Coleman, Bob Technical Bulletin 41 Dowie and Jim Weidenbaum. Court of Appeals 52 Divisional Time Trials Comm. 53 The following SCCA, Inc. staff participated in the meeting: Lisa Noble, President RALLY 54 and CEO; Eric Prill, Chief Operations Officer; Mindi Pfannenstiel, Senior Director of RallyCross 54 Finance and Aimee Thoennes, Executive Assistant. Road Rally 56 Guests attending the meeting were Jim Wheeler, Chairman of the CRB. LINKS 57 The secretary acknowledges that these minutes may not appear in chronological order and that all participants were not present for the entire meeting. The meeting was called to order by Vice Chair Helman. Executive Team Report and Staff Action Items President Noble provided a review of 2015 key programs and deliverables with topic areas of core program growth, scca.com, and partnerships. Current initiatives to streamline event process with e-logbook tied into event registration, modernize timing systems in Pro Solo, connecting experiential programs (eg TNIA, Starting Line) to core programs. Multiple program offerings to enhance weekend events. -

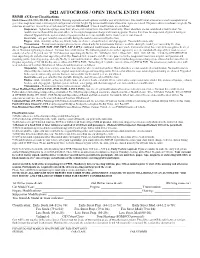

2009 Autocross Entry Form

2021 AUTOCROSS / OPEN TRACK ENTRY FORM RMMR AX Event Classifications Stock Classes (ES, ESL, FS, FSL, LS, LSL): Mustang as produced with options available year of manufacture. One modification allowed over stock to suspension or gear ratio. Suspension must retain original configuration and ride height. Up to two modifications allowed to engine over stock. No power adders (eco boost excepted). No autocross or road race tires with wear indicators of less than 200 allowed. Allowed modifications are as follows: Suspension – higher rate springs, sway bars, wheels, and traction bars. One modification only. Shock absorbers are not considered a modification. No modification is allowed that does not adhere to the original suspension design and mounting points. Devices that allow for suspension alignment tuning are allowed. Upgraded brake system and steering systems that were not available for the model year are not allowed. Gear ratio – any gear ratio that was available during the model years covered by the class. Engine - intake manifold, carburetor, throttle body, air cleaner, exhaust headers, and chip upgrade. Two modifications only. Transmission – Transmission swaps/replacements are allowed only for transmissions that were available during the model year covered by the class. Street Prepared Classes (ESP, ESPL, FSP, FSPL, LSP, LSPL): Additional modifications allowed over stock. Car must be titled, have current license plates, be street driven. No major lightening is allowed. Car must have a full interior. The following models due to their superiority over the standard offerings of their model year are considered as Street Prepared cars:’93-’01 Cobra (non supercharged), Bullitt, ’03 –‘04 Mach1, 2012-13 Boss 302, 2011 - 2021 GT,’06 – ’21 Shelby GT/GTH/GT350 (non supercharged), and other non supercharged Shelby, Roush & Saleen models and all electric power vehicles. -

A Two-Point Vehicle Classification System

178 TRANSPORTATION RESEARCH RECORD 1215 A Two-Point Vehicle Classification System BERNARD C. McCULLOUGH, JR., SrAMAK A. ARDEKANI, AND LI-REN HUANG The counting and classification of vehicles is an important part hours required, however, the cost of such a count was often of transportation engineering. In the past 20 years many auto high. To offset such costs, many techniques for the automatic mated systems have been developed to accomplish that labor counting, length determination, and classification of vehicles intensive task. Unfortunately, most of those systems are char have been developed within the past decade. One popular acterized by inaccurate detection systems and/or classification method, especially in Europe, is the Automatic Length Indi methods that result in many classification errors, thus limiting cation and Classification Equipment method, known as the accuracy of the system. This report describes the devel "ALICE," which was introduced by D. D. Nash in 1976 (1). opment of a new vehicle classification database and computer This report covers a simpler, more accurate system of vehicle program, originally designed for use in the Two-Point-Time counting and classification and details the development of the Ratio method of vehicle classification, which greatly improves classification software that will enable it to surpass previous the accuracy of automated classification systems. The program utilizes information provided by either vehicle detection sen systems in accuracy. sors or the program user to determine the velocity, number Although many articles on vehicle classification methods of axles, and axle spacings of a passing vehicle. It then matches have appeared in transportation journals within the past dec the axle numbers and spacings with one of thirty-one possible ade, most have dealt solely with new types, or applications, vehicle classifications and prints the vehicle class, speed, and of vehicle detection systems. -

Modelling of Emissions and Energy Use from Biofuel Fuelled Vehicles at Urban Scale

sustainability Article Modelling of Emissions and Energy Use from Biofuel Fuelled Vehicles at Urban Scale Daniela Dias, António Pais Antunes and Oxana Tchepel * CITTA, Department of Civil Engineering, University of Coimbra, Polo II, 3030-788 Coimbra, Portugal; [email protected] (D.D.); [email protected] (A.P.A.) * Correspondence: [email protected] Received: 29 March 2019; Accepted: 13 May 2019; Published: 22 May 2019 Abstract: Biofuels have been considered to be sustainable energy source and one of the major alternatives to petroleum-based road transport fuels due to a reduction of greenhouse gases emissions. However, their effects on urban air pollution are not straightforward. The main objective of this work is to estimate the emissions and energy use from bio-fuelled vehicles by using an integrated and flexible modelling approach at the urban scale in order to contribute to the understanding of introducing biofuels as an alternative transport fuel. For this purpose, the new Traffic Emission and Energy Consumption Model (QTraffic) was applied for complex urban road network when considering two biofuels demand scenarios with different blends of bioethanol and biodiesel in comparison to the reference situation over the city of Coimbra (Portugal). The results of this study indicate that the increase of biofuels blends would have a beneficial effect on particulate matter (PM ) emissions reduction for the entire road network ( 3.1% [ 3.8% to 2.1%] by kg). In contrast, 2.5 − − − an overall negative effect on nitrogen oxides (NOx) emissions at urban scale is expected, mainly due to the increase in bioethanol uptake. Moreover, the results indicate that, while there is no noticeable variation observed in energy use, fuel consumption is increased by over 2.4% due to the introduction of the selected biofuels blends. -

Making Markets for Hydrogen Vehicles: Lessons from LPG

Making Markets for Hydrogen Vehicles: Lessons from LPG Helen Hu and Richard Green Department of Economics and Institute for Energy Research and Policy University of Birmingham Birmingham B15 2TT United Kingdom Hu: [email protected] Green: [email protected] +44 121 415 8216 (corresponding author) Abstract The adoption of liquefied petroleum gas vehicles is strongly linked to the break-even distance at which they have the same costs as conventional cars, with very limited market penetration at break-even distances above 40,000 km. Hydrogen vehicles are predicted to have costs by 2030 that should give them a break-even distance of less than this critical level. It will be necessary to ensure that there are sufficient refuelling stations for hydrogen to be a convenient choice for drivers. While additional LPG stations have led to increases in vehicle numbers, and increases in vehicles have been followed by greater numbers of refuelling stations, these effects are too small to give self-sustaining growth. Supportive policies for both vehicles and refuelling stations will be required. 1. Introduction While hydrogen offers many advantages as an energy vector within a low-carbon energy system [1, 2, 3], developing markets for hydrogen vehicles is likely to be a challenge. Put bluntly, there is no point in buying a vehicle powered by hydrogen, unless there are sufficient convenient places to re-fuel it. Nor is there any point in providing a hydrogen refuelling station unless there are vehicles that will use the facility. What is the most effective way to get round this “chicken and egg” problem? Data from trials of hydrogen vehicles can provide information on driver behaviour and charging patterns, but extrapolating this to the development of a mass market may be difficult. -

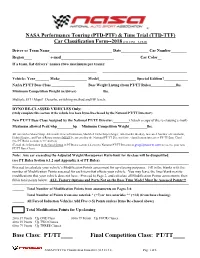

PT & TT Car Classification Form

® NASA Performance Touring (PTD-PTF) & Time Trial (TTD-TTF) Car Classification Form--2018 (v13.1/15.1—1-15-18) Driver or Team Name________________________________ Date______________ Car Number________ Region_____________ e-mail________________________________________ Car Color_______________ If a team, list drivers’ names (two maximum per team): ___________________________________________ ___________________________________________ Vehicle: Year_______ Make______________ Model___________________ Special Edition?____________ NASA PT/TT Base Class _____________ Base Weight Listing (from PT/TT Rules)______________lbs. Minimum Competition Weight (w/driver)_______________lbs. Multiple ECU Maps? Describe switching method and HP levels:_____________________________________________ DYNO RE-CLASSED VEHICLES Only: (Only complete this section if the vehicle has been Dyno Re-classed by the National PT/TT Director!) New PT/TT Base Class Assigned by the National PT/TT Director:_________(Attach a copy of the re-classing e-mail) Maximum allowed Peak whp_________hp Minimum Competition Weight__________lbs. All cars with a Motor Swap, Aftermarket Forced Induction, Modified Turbo/Supercharger, Aftermarket Head(s), Increased Number of Camshafts, Hybrid Engine, and Ported Rotary motors MUST be assessed by the National PT/TT Director for re-classification into a new PT/TT Base Class! (See PT Rules sections 6.3.C and 6.4) (E-mail the information in the listed format in PT Rules section 6.4.2 to the National PT/TT Director at [email protected] to receive your new PT/TT Base Class) Note: Any car exceeding the Adjusted Weight/Horsepower Ratio limit for its class will be disqualified. (see PT Rules Section 6.1.2 and Appendix A of PT Rules). Proceed to calculate your vehicle’s Modification Points assessment for up-classing purposes. -

Form HSMV 83045

FLORIDA DEPARTMENT OF HIGHWAY SAFETY AND MOTOR VEHICLES Application for Registration of a Street Rod, Custom Vehicle, Horseless Carriage or Antique (Permanent) INSTRUCTIONS: COMPLETE APPLICATION AND CHECK APPLICABLE BOX 1 APPLICANT INFORMATION Name of Applicant Applicant’s Email Address Street Address City _ State Zip Telephone Number _ Sex Date of Birth Florida Driver License Number or FEID Number 2 VEHICLE INFORMATION YEAR MAKE BODY TYPE WEIGHT OF VEHICLE COLOR ENGINE OR ID# TITLE# _ PREVIOUS LICENSE PLATE# _ 3 CERTIFICATION (Check Applicable Box) The vehicle described in section 2 is a “Street Rod” which is a modified motor vehicle manufactured prior to 1949. The vehicle meets state equipment and safety requirements that were in effect in this state as a condition of sale in the year listed as the model year on the certificate of title. The vehicle will only be used for exhibition and not for general transportation. A vehicle inspection must be done at a FLHSMV Regional office and the title branded as “Street Rod” prior to the issuance of the Street Rod license plate. The vehicle described in section 2 is a “Custom Vehicle” which is a modified motor vehicle manufactured after 1948 and is 25 years old or older and has been altered from the manufacturer’s original design or has a body constructed from non-original materials. The vehicle meets state equipment and safety requirements that were in effect in this state as a condition of sale in the year listed as the model year on the certificate of title. The vehicle will only be used for exhibition and not for general transportation. -

Car Configuration Setup Guide

Concur Expense: Car Configuration Setup Guide Last Revised: July 21, 2021 Applies to these SAP Concur solutions: Expense Professional/Premium edition Standard edition Travel Professional/Premium edition Standard edition Invoice Professional/Premium edition Standard edition Request Professional/Premium edition Standard edition Table of Contents Section 1: Permissions ................................................................................................ 1 Section 2: Two User Interfaces for Concur Expense End Users .................................... 2 This Guide – What the User Sees ............................................................................ 2 Transition Guide for End Users ................................................................................ 3 Section 3: Overview .................................................................................................... 3 Criteria ..................................................................................................................... 4 Examples of Car Configurations .................................................................................... 4 Dependencies ............................................................................................................ 5 Calculations and Amounts ........................................................................................... 5 Company Car - Variable Rates ................................................................................ 5 Personal Car ........................................................................................................ -

Driving Resistances of Light-Duty Vehicles in Europe

WHITE PAPER DECEMBER 2016 DRIVING RESISTANCES OF LIGHT- DUTY VEHICLES IN EUROPE: PRESENT SITUATION, TRENDS, AND SCENARIOS FOR 2025 Jörg Kühlwein www.theicct.org [email protected] BEIJING | BERLIN | BRUSSELS | SAN FRANCISCO | WASHINGTON International Council on Clean Transportation Europe Neue Promenade 6, 10178 Berlin +49 (30) 847129-102 [email protected] | www.theicct.org | @TheICCT © 2016 International Council on Clean Transportation TABLE OF CONTENTS Executive summary ...................................................................................................................II Abbreviations ........................................................................................................................... IV 1. Introduction ...........................................................................................................................1 1.1 Physical principles of the driving resistances ....................................................................... 2 1.2 Coastdown runs – differences between EU and U.S. ........................................................ 5 1.3 Sensitivities of driving resistance variations on CO2 emissions ..................................... 6 1.4 Vehicle segments ............................................................................................................................. 8 2. Evaluated data sets ..............................................................................................................9 2.1 ICCT internal database ................................................................................................................. -

Louisville's Labor Day Drive Thru Car Show Participant Information

Louisville’s Labor Day Drive Thru Car Show Participant Information COVID-19 Health and Safety Information • Please stay home if you are sick or exhibiting COVID-19 symptoms or if you have been in close contact with a person suspected or confirmed to have COVID-19. • Face Masks: Masks must be worn properly covering the nose and mouth during check-in, when walking around the event, and when talking with car show attendees or other participants. You may remove your mask only when stationed at your vehicle. • Social Distancing: Please maintain a distance of 6’ or greater from event staff, volunteers, attendees, and other participants not from your household during the event. • A list of event participants, staff, and volunteers will be kept on file. Should you receive a positive or suspected COVID-19 diagnosis within 2 weeks following the event, please inform the event organizer. Contact tracing is generally implemented in situations where individuals have had close contact with a person suspected or confirmed to have COVID-19 (generally within 6 feet for at least 15 minutes, depending on level of exposure). General Information and Check-in Details Date and Times: Monday, September 7, 2020 • Participant check-in: 8:30-9:30am o If attendees are lined up and waiting to come through the show, we will begin letting attendees view the show as early as 9:45. • Car Show Hours: 10am-noon o Last attendee will enter the show at 12pm. Participating vehicles should plan to stay until 12:30. Check-in Location—See Attached Map • Ascent Community Church Parking Lot (aka, the “Old Sam’s Club”), 550 McCaslin Blvd, Louisville o Enter near Post Office; Check-in tent is on the north side of parking lot, near Safeway • Cars will generally be parked in the order in which you arrive. -

From Porsche Club of America--Golden Gate Region Date: December 4, 2007 2:39:13 PM PST To: [email protected] Reply-To: [email protected]

From: John Celona <[email protected]> Subject: From Porsche Club of America--Golden Gate Region Date: December 4, 2007 2:39:13 PM PST To: [email protected] Reply-To: [email protected] Porsche Club of America Golden Gate Region December, 2007 - Vol 47, Number 12 In This Issue Dear Porsche Enthusiast, Place dé Leglise Welcome to The Nugget, the email newsletter of the Golden Letter from the Editor Gate Region, Porsche Club of America. Competition Corner If you have any trouble viewing Membership Report this email, you can click here to go to the archive of PDF Board of Directors versions of this newsletter. For AX #10 comments or feedback, click here to email the editor. The Power Chef Thanks for reading. 40 Years Ago in the Nugget Registration for DE #6 Year End GGR Banquet Zone 7 Awards Dinner Crab 34 European Porsche Parade 2008 LA Lit & Toy Show Porsche Web Cinema Porsche Hybrid History Quick Links Event Calendar Classified Ads Join PCA Now Nugget Archive More About Us Zone 7 web site PCA web site Great Links Place dé Leglise --by Claude Leglise, GGR President The State of GGR There are only two events left on the GGR calendar for this year. Driver's Ed at Laguna Seca on December 9 - there are still slots available and the weather is holding up very nicely - and the Friday Night Social on December 21. The club has enjoyed a good year in 2007, yet some challenges face us. Andrew Forrest and the entire Time Trial crew have done a terrific job modernizing and revitalizing the TT Series. -

Limousine New York

SGB LIMOUSINE NEW YORK SGB LIMOUSINES OF NEW YORK | OFFICE (516) 223-5555 | FAX (516) 688-3914 | WEB SGBLIMOUSINE.COM SGB New York LIMOUSINE 24 HOUR LIMO &TO WN CAR SERVICE Your Car is Waiting New York SGB LIMOUSINE 24 HOUR LIMO &TO WN CAR SERVICE AFFILIATE DOCUMENT TABLE OF CONTENTS PAGE 4 WELCOME LETTER PAGE 5 ABOUT SGB PAGE 7 BILLING & CONFIRMATION PAGE 8 OUR FLEET PAGE 10 AIRPORT PROCEDURES PAGE 12 AFFILIATE LOCATIONS New York SGB LIMOUSINE 24 HOUR LIMO &TO WN CAR SERVICE WELCOME TO SGB LIMOUSINE OF NEW YORK On behalf of SGB Limousine, I would like to welcome you as a new affiliate partner. Our team looks forward to serving your needs in the New York Tri State area with the same pride and dedication that we currently provide our loyal customers. Warm Regards, Steven Berry President SGB LIMOUSINE New York SGB LIMOUSINE 24 HOUR LIMO &TO WN CAR SERVICE We are a team of SGB Limousine remains ABOUT professionals whose committed to the idea mission is to provide that every customer, superior chauffeured whether corporate or SGB LIMOUSINES car services that stand individual, is the one above the rest. that matters most. “Your Car is Waiting” 5 New York SGB LIMOUSINE 24 HOUR LIMO &TO WN CAR SERVICE YOU DESERVE THE BEST 24/7 Call Center We are committed to serving your clients around the clock. Experienced Corporate Agents Our agents are always professional and curtious. Professionally Trained Chauffeurs Our chauffeurs are prompt, curtious and professional. We hire only the best. Fleet of Executive Luxury Sedans We have a large fleet of meticulously maintained Chrysler 300Cs.