4. Classical Thermodynamics

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

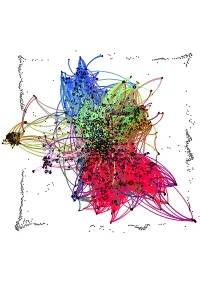

Network Map of Knowledge And

Humphry Davy George Grosz Patrick Galvin August Wilhelm von Hofmann Mervyn Gotsman Peter Blake Willa Cather Norman Vincent Peale Hans Holbein the Elder David Bomberg Hans Lewy Mark Ryden Juan Gris Ian Stevenson Charles Coleman (English painter) Mauritz de Haas David Drake Donald E. Westlake John Morton Blum Yehuda Amichai Stephen Smale Bernd and Hilla Becher Vitsentzos Kornaros Maxfield Parrish L. Sprague de Camp Derek Jarman Baron Carl von Rokitansky John LaFarge Richard Francis Burton Jamie Hewlett George Sterling Sergei Winogradsky Federico Halbherr Jean-Léon Gérôme William M. Bass Roy Lichtenstein Jacob Isaakszoon van Ruisdael Tony Cliff Julia Margaret Cameron Arnold Sommerfeld Adrian Willaert Olga Arsenievna Oleinik LeMoine Fitzgerald Christian Krohg Wilfred Thesiger Jean-Joseph Benjamin-Constant Eva Hesse `Abd Allah ibn `Abbas Him Mark Lai Clark Ashton Smith Clint Eastwood Therkel Mathiassen Bettie Page Frank DuMond Peter Whittle Salvador Espriu Gaetano Fichera William Cubley Jean Tinguely Amado Nervo Sarat Chandra Chattopadhyay Ferdinand Hodler Françoise Sagan Dave Meltzer Anton Julius Carlson Bela Cikoš Sesija John Cleese Kan Nyunt Charlotte Lamb Benjamin Silliman Howard Hendricks Jim Russell (cartoonist) Kate Chopin Gary Becker Harvey Kurtzman Michel Tapié John C. Maxwell Stan Pitt Henry Lawson Gustave Boulanger Wayne Shorter Irshad Kamil Joseph Greenberg Dungeons & Dragons Serbian epic poetry Adrian Ludwig Richter Eliseu Visconti Albert Maignan Syed Nazeer Husain Hakushu Kitahara Lim Cheng Hoe David Brin Bernard Ogilvie Dodge Star Wars Karel Capek Hudson River School Alfred Hitchcock Vladimir Colin Robert Kroetsch Shah Abdul Latif Bhittai Stephen Sondheim Robert Ludlum Frank Frazetta Walter Tevis Sax Rohmer Rafael Sabatini Ralph Nader Manon Gropius Aristide Maillol Ed Roth Jonathan Dordick Abdur Razzaq (Professor) John W. -

A Brief History of Nuclear Astrophysics

A BRIEF HISTORY OF NUCLEAR ASTROPHYSICS, PART I: THE ENERGY OF THE SUN AND STARS. Nikos Prantzos, Institut d’Astrophysique de Paris.
Nuclear Astrophysics (Astronomy, Nuclear Physics): stellar origin of energy and the elements.
Thermodynamics: the energy of the Sun and the age of the Earth
• 1847: Robert Julius von Mayer. Sun heated by fall of meteors.
• 1854: Hermann von Helmholtz. Gravitational energy of Kant’s contracting protosolar nebula of gas and dust turns into kinetic energy. Timescale ~ $E_{\rm grav}/L_\odot$ ~ 30 My.
• 1850s: William Thomson (Lord Kelvin). Sun heated at formation from meteorite fall, now « an incandescent liquid mass » cooling. Age 10 – 100 My.
• 1859: Charles Darwin, Origin of Species. Rate of erosion of the Weald valley is 1 inch/century, or 22 miles wide (× 1100 feet high) in 300 My. Such large Earth ages also required by geologists, like Charles Lyell.
A gaseous, contracting and heating Sun
• Mean solar density: $\bar{\rho}_\odot = M_\odot / \left(\tfrac{4}{3}\pi R_\odot^3\right)$ ~ 1.35 g/cc.
• Sun liquid: incompressible.
• 1870s: J. Homer Lane; 1880s: August Ritter. Sun gaseous: compressible. As it shrinks, it releases gravitational energy AND it gets hotter.
Figure labels: Earth; Mayer – Kelvin – Helmholtz; Helmholtz – Ritter.
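The two numbers quoted in this excerpt, the contraction timescale of about 30 My and the mean solar density, can be checked with a short order-of-magnitude sketch. The constants below are standard reference values supplied here for illustration, not taken from the slides, and the gravitational energy is approximated as G M² / R with the structure-dependent prefactor set to 1, which is an assumption.

```python
import math

# Standard reference values in SI units (assumed here for illustration;
# they do not appear in the excerpt itself).
G = 6.674e-11             # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30          # solar mass, kg
R_SUN = 6.957e8           # solar radius, m
L_SUN = 3.828e26          # solar luminosity, W
SECONDS_PER_YEAR = 3.156e7


def mean_density_g_per_cc(mass_kg, radius_m):
    """Mean density of a sphere: M / (4/3 * pi * R^3), converted to g/cm^3."""
    volume_m3 = (4.0 / 3.0) * math.pi * radius_m ** 3
    return mass_kg / volume_m3 / 1000.0  # 1 g/cm^3 = 1000 kg/m^3


def contraction_timescale_years(mass_kg, radius_m, luminosity_w):
    """Order-of-magnitude timescale E_grav / L, with E_grav ~ G M^2 / R."""
    e_grav_joules = G * mass_kg ** 2 / radius_m
    return e_grav_joules / luminosity_w / SECONDS_PER_YEAR


rho_sun = mean_density_g_per_cc(M_SUN, R_SUN)
t_kh = contraction_timescale_years(M_SUN, R_SUN, L_SUN)

print(f"mean solar density  ~ {rho_sun:.2f} g/cc")
print(f"contraction timescale ~ {t_kh / 1e6:.0f} My")
```

With these inputs the timescale comes out at a few tens of millions of years, in line with the ~30 My figure, and the density lands close to the quoted ~1.35 g/cc; the exact values depend on the constants and prefactor chosen.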

The Legacy of Henri Victor Regnault in the Arts and Sciences

International Journal of Arts & Sciences, CD-ROM. ISSN: 1944-6934 :: 4(13):377–400 (2011) Copyright © 2011 by InternationalJournal.org THE LEGACY OF HENRI VICTOR REGNAULT IN THE ARTS AND SCIENCES Sébastien Poncet Laboratoire M2P2, France Laurie Dahlberg Bard College Annandale, USA The 21st of July 2010 marked the bicentennial of the birth of Henri Victor Regnault, a famous French chemist and physicist and a pioneer of paper photography. During his lifetime, he received many honours and distinctions for his invaluable scientific contributions, especially to experimental thermodynamics. Colleague of the celebrated chemist Louis-Joseph Gay-Lussac (1778-1850) at the École des Mines and mentor of William Thomson (1824-1907) at the École Polytechnique, he is nowadays conspicuously absent from all the textbooks and reviews (Hertz, 2004) dealing with thermodynamics. This paper is thus the opportunity to recall his major contributions to the field of experimental thermodynamics but also to the nascent field, in those days, of organic chemistry. Avid amateur of photography, he devoted more than twenty years of his life to his second passion. Having initially taken up photography in the 1840s as a potential tool for scientific research, he ultimately made many more photographs for artistic and self-expressive purposes than scientific ones. He was a founding member of the Société Héliographique in 1851 and of the Société Française de Photographie in 1854. Like his scientific work, his photography was quickly forgotten upon his death, but has begun to attract new respect and recognition. Keywords: Henri Victor Regnault, Organic chemistry, Thermodynamics, Paper photography. INTRODUCTION Henri Victor Regnault (1810–1878) (see Figures 1a & 1b) was undoubtedly one of the great figures of thermodynamics of all time. -

Appendix A: Rate of Entropy Production and Kinetic Implications

Appendix A Rate of Entropy Production and Kinetic Implications According to the second law of thermodynamics, the entropy of an isolated system can never decrease on a macroscopic scale; it must remain constant when equilibrium is achieved or increase due to spontaneous processes within the system. The ramifications of entropy production constitute the subject of irreversible thermodynamics. A number of interesting kinetic relations, which are beyond the domain of classical thermodynamics, can be derived by considering the rate of entropy production in an isolated system. The rate and mechanism of evolution of a system is often of major or of even greater interest in many problems than its equilibrium state, especially in the study of natural processes. The purpose of this Appendix is to develop some of the kinetic relations relating to chemical reaction, diffusion and heat conduction from consideration of the entropy production due to spontaneous processes in an isolated system. A.1 Rate of Entropy Production: Conjugate Flux and Force in Irreversible Processes In order to formally treat an irreversible process within the framework of thermodynamics, it is important to identify the force that is conjugate to the observed flux. To this end, let us start with the question: what is the force that drives heat flux by conduction (or heat diffusion) along a temperature gradient? The intuitive answer is: temperature gradient. But the answer is not entirely correct. To find out the exact force that drives diffusive heat flux, let us consider an isolated composite system that is divided into two subsystems, I and II, by a rigid diathermal wall, the subsystems being maintained at different but uniform temperatures of TI and TII, respectively. -
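The excerpt stops before the derivation, so the following is only a sketch of where the two-subsystem setup usually leads, not the appendix's own text. If heat flows at a rate q from subsystem I to subsystem II across the rigid diathermal wall, subsystem I loses entropy at q/TI and subsystem II gains it at q/TII, so the total rate is q(1/TII − 1/TI). The function name, sign convention, and numbers below are assumptions made for illustration.

```python
def entropy_production_rate(heat_flow_w, t_i_kelvin, t_ii_kelvin):
    """Rate of entropy change (W/K) of the isolated composite system.

    heat_flow_w is the rate of heat transfer from subsystem I to
    subsystem II (positive means I -> II). Both temperatures are
    absolute and assumed uniform within each subsystem.
    """
    if t_i_kelvin <= 0 or t_ii_kelvin <= 0:
        raise ValueError("absolute temperatures must be positive")
    # Subsystem I loses q / T_I, subsystem II gains q / T_II.
    return heat_flow_w * (1.0 / t_ii_kelvin - 1.0 / t_i_kelvin)


# Hypothetical numbers: I at 400 K, II at 300 K, 10 W crossing the wall.
sigma_forward = entropy_production_rate(10.0, 400.0, 300.0)   # hot -> cold
sigma_reverse = entropy_production_rate(-10.0, 400.0, 300.0)  # cold -> hot
sigma_equal = entropy_production_rate(10.0, 350.0, 350.0)     # no difference

print(f"hot -> cold: {sigma_forward:+.5f} W/K")
print(f"cold -> hot: {sigma_reverse:+.5f} W/K")
print(f"equal T:     {sigma_equal:+.5f} W/K")
```

In this form the rate is the product of the heat flux and a difference in 1/T, which suggests why the excerpt calls "temperature gradient" a not entirely correct answer: the quantity multiplying the flux is a difference in inverse temperature. Only the hot-to-cold direction gives a positive rate, consistent with the second law as stated above.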

A Chronology of Significant Events in the History of Science and Technology

A Chronology of Significant Events in the History of Science and Technology c. 2725 B.C. - Imhotep in Egypt considered the first medical doctor c. 2540 B.C. - Pyramids of Egypt constructed c. 2000 B.C. - Chinese discovered magnetic attraction c. 700 B.C. - Greeks discovered electric attraction produced by rubbing amber c. 600 B.C. - Anaximander discovered the ecliptic (the angle between the plane of the earth's rotation and the plane of the solar system) c. 600 B.C. - Thales proposed that nature should be understood by replacing myth with logic; that all matter is made of water c. 585 B.C. - Thales correctly predicted solar eclipse c. 530 B.C. - Pythagoras developed mathematical theory c. 500 B.C. - Anaximenes introduced the ideas of condensation and rarefaction c. 450 B.C. - Anaxagoras proposed the first clearly materialist philosophy - the universe is made entirely of matter in motion c. 370 B.C. - Leucippus and Democritus proposed that matter is made of small, indestructible particles 335 B.C. - Aristotle established the Lyceum; studied philosophy, logic c. 300 B.C. - Euclid wrote "Elements", a treatise on geometry c. 300 B.C. - Aristarchus proposed that the earth revolves around the sun; calculated diameter of the earth c. 300 B.C. - The number of volumes in the Library of Alexandria reached 500,000 c. 220 B.C. - Archimedes made discoveries in mathematics and mechanics c. 150 A.D. - Ptolemy studied mathematics, science, geography; proposed that the earth is the center of the solar system 190 - Chinese mathematicians calculated pi -

Review of Thermodynamics from Statistical Physics Using Mathematica © James J

Review of Thermodynamics from Statistical Physics using Mathematica © James J. Kelly, 1996-2002 We review the laws of thermodynamics and some of the techniques for derivation of thermodynamic relationships. Introduction Equilibrium thermodynamics is the branch of physics which studies the equilibrium properties of bulk matter using macroscopic variables. The strength of the discipline is its ability to derive general relationships based upon a few fundamental postulates and a relatively small amount of empirical information without the need to investigate microscopic structure on the atomic scale. However, this disregard of microscopic structure is also the fundamental limitation of the method. Whereas it is not possible to predict the equation of state without knowing the microscopic structure of a system, it is nevertheless possible to predict many apparently unrelated macroscopic quantities and the relationships between them given the fundamental relation between its state variables. We are so confident in the principles of thermodynamics that the subject is often presented as a system of axioms and relationships derived therefrom are attributed mathematical certainty without need of experimental verification. Statistical mechanics is the branch of physics which applies statistical methods to predict the thermodynamic properties of equilibrium states of a system from the microscopic structure of its constituents and the laws of mechanics or quantum mechanics governing their behavior. The laws of equilibrium thermodynamics can be derived using quite general methods of statistical mechanics. However, understanding the properties of real physical systems usually requires application of appropriate approximation methods. The methods of statistical physics can be applied to systems as small as an atom in a radiation field or as large as a neutron star, from microkelvin temperatures to the big bang, from condensed matter to nuclear matter. -

Submission from Pat Naughtin

Inquiry into Australia's future oil supply and alternative transport fuels Submission from Pat Naughtin Dear Committee members, My submission will be short and simple, and it applies to all four terms of reference. Here is my submission: When you are writing your report on this inquiry, could you please confine yourself to using the International System Of Units (SI) especially when you are referring to amounts of energy. SI has only one unit for energy — joule — with these multiples to measure larger amounts of energy — kilojoules, megajoules, gigajoules, terajoules, petajoules, exajoules, zettajoules, and yottajoules. This is the end of my submission. Supporting material You probably need to know a few things about this submission. What is the legal situation? See 1 Legal issues. Why is this submission needed? See 2 Deliberate confusion. Is the joule the right unit to use? See 3 Chronology of the joule. Why am I making a submission to your inquiry? See: 4 Why do I care about energy issues and the joule? Who is Pat Naughtin and does he know what he's talking about? See below signature. Cheers and best wishes with your inquiry, Pat Naughtin ASM (NSAA), LCAMS (USMA)* PO Box 305, Belmont, Geelong, Australia Phone 61 3 5241 2008 Pat Naughtin is the editor of the 'Numbers and measurement' chapter of the Australian Government Publishing Service 'Style manual – for writers, editors and printers'; he is a Member of the National Speakers Association of Australia and the International Association of Professional Speakers. He is a Lifetime Certified Advanced Metrication Specialist (LCAMS) with the United States Metric Association. -

Basic Concepts

1 Basic Concepts 1.1 INTRODUCTION It is axiomatic in thermodynamics that when two systems are brought into contact through some kind of wall, energy transfers such as heat and work take place between them. Work is a transfer of energy to a particle which is evidenced by changes in its position when acted upon by a force. Heat, like work, is energy in the process of being transferred. Energy is what is stored, and work and heat are two ways of transferring energy across the boundaries of a system. The amount of energy transfer as heat can be determined from energy conservation considerations (first law of thermodynamics). Two systems are said to be in thermal equilibrium with one another if no energy transfer such as heat occurs between them in a finite period when they are connected through a diathermal (non-adiabatic) wall. Temperature is a property of matter which two bodies in equilibrium have in common. Hot and cold are the adjectives used to describe high and low values of temperature. Energy transfer as heat will take place from the assembly (body) with the higher temperature to that with the lower temperature, if the two are permitted to interact through a diathermal wall (second law of thermodynamics). The transfer and conversion of energy from one form to another is basic to all heat transfer processes and hence they are governed by the first as well as the second law of thermodynamics. This does not and must not mean that the principles governing the transfer of heat can be derived from, or are mere corollaries of, the basic laws of thermodynamics. -

Considerations for the Measurement of Deep-Body, Skin and Mean Body Temperatures

CONSIDERATIONS FOR THE MEASUREMENT OF DEEP-BODY, SKIN AND MEAN BODY TEMPERATURES Nigel A.S. Taylor1, Michael J. Tipton2 and Glen P. Kenny3 Affiliations: 1Centre for Human and Applied Physiology, School of Medicine, University of Wollongong, Wollongong, Australia. 2Extreme Environments Laboratory, Department of Sport & Exercise Science, University of Portsmouth, Portsmouth, United Kingdom. 3Human and Environmental Physiology Research Unit, School of Human Kinetics, University of Ottawa, Ottawa, Ontario, Canada. Corresponding Author: Nigel A.S. Taylor, Ph.D. Centre for Human and Applied Physiology, School of Medicine, University of Wollongong, Wollongong, NSW 2522, Australia. Telephone: 61-2-4221-3463 Facsimile: 61-2-4221-3151 Electronic mail: [email protected] RUNNING HEAD: Body temperature measurement Abstract Despite previous reviews and commentaries, significant misconceptions remain concerning deep-body (core) and skin temperature measurement in humans. Therefore, the authors have assembled the pertinent Laws of Thermodynamics and other first principles that govern physical and physiological heat exchanges. The resulting review is aimed at providing theoretical and empirical justifications for collecting and interpreting these data. The primary emphasis is upon deep-body temperatures, with discussions of intramuscular, subcutaneous, transcutaneous and skin temperatures included. These are all turnover indices resulting from variations in local metabolism, tissue conduction and blood flow. Consequently, inter-site differences and similarities may have no mechanistic relationship unless those sites have similar metabolic rates, are in close proximity and are perfused by the same blood vessels. -

1. Lecture 1 Opening Remarks

1. LECTURE 1 OPENING REMARKS 1.1 Nature of Heat and Work Thermodynamics deals with heat and work - two processes by which a system transacts energy with its surroundings or with another system.
Heat. By touching we can tell a hot body from a hotter body; we can tell a biting cold metal knob from the warm comfort of a wooden door [1] on a winter morning. When a hot body comes into contact with a cold body, we often observe, it is the hot body which cools down and the cold body which warms up. Energy flows, naturally and spontaneously, from hot to cold and not and never the other way around [2]. Heat [3] is a process by which energy flows. Once the two bodies become equally warm, the flow stops. Thermal equilibrium obtains. Thus, an empirical notion of thermal equilibrium should have been known to man a long time ago.
Work. Coming to the notion of work, we observe that when an object moves against an opposing force, work is done.
• When you pull water up, from a well, against gravity, you do work.
• If you want to push a car against the opposing friction, you must do work.
• When you stretch a rubber band against the opposing entropic tension you do work.
• When a fat man climbs up the stairs against gravity, he does work;
• A fat man does more work when he climbs up the stairs than a not-so-fat man.
Of course in a free fall, you do no work - you are not opposing gravity - though you will get hurt, for sure, when you hit the ground!
[1] Incidentally, the door knob, the wooden door, and the cold surrounding atmosphere have been in contact with each other for so long they should have come into thermal equilibrium with each other. -
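The stair-climbing comparison can be made concrete with the elementary relation W = m g h for work done against gravity over a height h. This is a minimal sketch; the masses and the stair height are hypothetical numbers chosen for illustration and do not come from the lecture.

```python
G_ACCEL = 9.81  # standard gravitational acceleration near Earth's surface, m/s^2


def work_against_gravity(mass_kg, height_m):
    """Work in joules needed to raise a mass through a height: W = m * g * h."""
    return mass_kg * G_ACCEL * height_m


# Hypothetical climbers on the same 3 m flight of stairs.
work_heavy = work_against_gravity(100.0, 3.0)
work_light = work_against_gravity(70.0, 3.0)

print(f"100 kg climber: {work_heavy:.0f} J")
print(f" 70 kg climber: {work_light:.0f} J")
```

For the same height the work scales linearly with mass, which is the whole content of the last bullet: the heavier climber opposes a larger gravitational force over the same displacement.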

The Short History of Science

PHYSICS FOUNDATIONS SOCIETY www.physicsfoundations.org THE FINNISH SOCIETY FOR NATURAL PHILOSOPHY www.lfs.fi Dr. Suntola’s “The Short History of Science” shows fascinating competence in its constructively critical in-depth exploration of the long path that the pioneers of metaphysics and empirical science have followed in building up our present understanding of physical reality. The book is made unique by the author’s perspective. He reflects the historical path to his Dynamic Universe theory that opens an unparalleled perspective to a deeper understanding of the harmony in nature – to click the pieces of the puzzle into their places. The book opens a unique possibility for the reader to make his own evaluation of the postulates behind our present understanding of reality. – Tarja Kallio-Tamminen, PhD, theoretical philosophy, MSc, high energy physics The book gives an exceptionally interesting perspective on the history of science and the development paths that have led to our scientific picture of physical reality. As a philosophical question, the reader may conclude how much the development has been directed by coincidences, and whether the picture of reality would have been different if another path had been chosen. – Heikki Sipilä, PhD, nuclear physics Would other routes have been chosen, if all modern experiments had been available to the early scientists? This is an excellent book for a guided scientific tour challenging the reader to an in-depth consideration of the choices made. – Ari Lehto, PhD, physics Tuomo Suntola, PhD in Electron Physics at Helsinki University of Technology (1971). -

Fysikaalisen Maailmankuvan Kehitys

Fysikaalisen maailmankuvan kehitys Reijo Rasinkangas 20. kesÄakuuta 2005 ii Kirjoittaja varaa tekijÄanoikeudet tekstiin ja kuviin itselleen; muuten tyÄo on kenen tahansa vapaasti kÄaytettÄavissÄa sekÄa opiskelussa ettÄa opetuksessa. Oulun yliopiston Fysikaalisten tieteiden laitoksen kÄayttÄoÄon teksti on tÄaysin vapaata. Esipuhe Fysikaalinen maailmankuva pitÄaÄa sisÄallÄaÄan kÄasityksemme ympÄarÄoivÄastÄa maa- ilmasta, maailmankaikkeuden olemuksesta aineen pienimpiin rakenneosiin ja niiden vuorovaikutuksiin. Kun vielÄa kurkotamme maailmankaikkeuden al- kuun, nÄaitÄa ÄaÄaripÄaitÄa, kosmologiaa ja kvanttimekaniikkaa, ei enÄaÄa voi erottaa toisistaan; tÄassÄa syy miksi fyysikot tavoittelevat | hieman mahtipontisesti mutta eivÄat aivan syyttÄa | 'kaiken teoriaa'. TÄamÄa johdanto fysikaaliseen maailmankuvaan etenee lÄahes kronologisessa jÄarjestyksessÄa. Aluksi tutustumme lyhyesti kehitykseen ennen antiikin aikaa. Sen jÄalkeen antiikille ja keskiajalle (johon renessanssikin kuuluu) on omat lu- kunsa. Uudelta ajalta alkaen materiaali on jaettu aikakausien pÄaÄatutkimusai- heiden mukaan lukuihin, jotka ovat osittain ajallisesti pÄaÄallekkÄaisiÄa. LiitteissÄa esitellÄaÄan mm. muutamia tieteen¯loso¯sia kÄasitteitÄa. Fysiikka tutkii luonnon perusvoimia eli -vuorovaikutuksia, niitÄa hallitse- via lakeja sekÄa niiden vÄalittÄomiÄa seurauksia. 'Puhtaan' fysiikan lisÄaksi tÄassÄa kÄasitellÄaÄan lyhyesti myÄos muita tieteenaloja. TÄahtitiede on melko hyvin edus- tettuna, koska sen kehitys on liittynyt lÄaheisesti fysiikan kehitykseen (toi- saalta jo