Creating Library Linked Data with Wikibase

Recommended publications

Amber Billey Senylrc 4/1/2016

AMBER BILLEY SENYLRC 4/1/2016 Photo by twm1340 - Creative Commons Attribution-ShareAlike License https://www.flickr.com/photos/89093669@N00 Created with Haiku Deck Today’s slides: http://bit.ly/SENYLRC_BF About me... ● Metadata Librarian ● 10 years of cataloging and digitization experience for cultural heritage institutions ● MLIS from Pratt in 2009 ● Zepheira BIBFRAME alumna ● Curious by nature [email protected] @justbilley Photo by GBokas - Creative Commons Attribution-NonCommercial-ShareAlike License https://www.flickr.com/photos/36724091@N07 Created with Haiku Deck http://digitalgallery.nypl.org/nypldigital/id?1153322 02743cam 22004094a 45000010008000000050017000080080041000250100017000660190014000830200031000970200028001280200 03800156020003500194024001600229035002400245035003900269035001700308040005300325050002200378 08200120040010000300041224500790044225000120052126000510053330000350058449000480061950400640 06675050675007315200735014066500030021416500014021717000023021858300049022089000014022579600 03302271948002902304700839020090428114549.0080822s2009 ctua b 001 0 eng a 2008037446 a236328594 a9781591585862 (alk. paper) a1591585864 (alk. paper) a9781591587002 (pbk. : alk. paper) a159158700X (pbk. : alk. paper) a99932583184 a(OCoLC) ocn236328585 a(OCoLC)236328585z(OCoLC)236328594 a(NNC)7008390 aDLCcDLCdBTCTAdBAKERdYDXCPdUKMdC#PdOrLoB-B00aZ666.5b.T39 200900a0252221 aTaylor, Arlene G., d1941-14aThe organization of information /cArlene G. Taylor and Daniel N. Joudrey. a3rd ed. aWestport, Conn. :bLibraries Unlimited,c2009. axxvi, -

White House Photographs April 19, 1975

Gerald R. Ford Presidential Library White House Photographs April 19, 1975 This database was created by Library staff and indexes all photographs taken by the Ford White House photographers on this date. Use the search capabilities in your PDF reader to locate key words within this index. Please note that clicking on the link in the “Roll #” field will display a 200 dpi JPEG image of the contact sheet (1:1 images of the 35 mm negatives). Gerald Ford is always abbreviated “GRF” in the "Names" field. If the "Geographic" field is blank, the photo was taken within the White House complex. The date on the contact sheet image is the date the roll of film was processed, not the date the photographs were taken. All photographs taken by the White House photographers are in the public domain and reproductions (600 dpi scans or photographic prints) of individual images may be purchased and used without copyright restriction. Please include the roll and frame numbers when contacting the Library staff about a specific photo (e.g., A1422-10). To view photo listings for other dates, to learn more about this project or other Library holdings, or to contact an archivist, please visit the White House Photographic Collection page View President Ford's Daily Diary (activities log) for this day Roll # Frames Tone Subject - Proper Subject - Generic Names Geographic Location Photographer A4085 3-4 BW Prior to State Dinner For President & Mrs. seated in circle, talking; Kissinger, Others Second Floor - Kennerly Kenneth Kaunda of Zambia formal wear Yellow Oval -

Worldcat Data Licensing

WorldCat Data Licensing 1. Why is OCLC recommending an open data license for its members? Many libraries are now examining ways that they can make their bibliographic records available for free on the Internet so that they can be reused and more fully integrated into the broader Web environment. Libraries may want to release catalog data as linked data, as MARC21 or as MARCXML. For an OCLC® member institution, these records may often contain data derived from WorldCat®. Coupled with a reference to the community norms articulated in WorldCat Rights and Responsibilities for the OCLC Cooperative, the Open Data Commons Attribution (ODC-BY) license provides a good way to share records that is consistent with the cooperative nature of OCLC cataloging. Best practices in the Web environment include making data available along with a license that clearly sets out the terms under which the data is being made available. Without such a license, users can never be sure of their rights to use the data, which can impede innovation. The VIAF project and the addition of Schema.org linked data to WorldCat.org records were both made available under the ODC-BY license. After much research and discussion, it was clear that ODC-BY was the best choice of license for many OCLC data services. The recommendation for members to also adopt this clear and consistent approach to the open licensing of shared data, derived from WorldCat, flowed from this experience. An OCLC staff group, aided by an external open-data licensing expert, conducted a structured investigation of available licensing alternatives to provide OCLC member institutions with guidance. -

Mate Choice from Avicenna's Perspective

Journal of Law, Policy and Globalization www.iiste.org ISSN 2224-3240 (Paper) ISSN 2224-3259 (Online) Vol.26, 2014 Mate choice from Avicenna’s perspective Masoud Raei (1), Maryam sadat fatemi Hassanabadi (2)*, Hossein Mansoori (3) 1. Assistant Professor Faculty of law and Elahiyat and Maaref Eslami, Najafabad Branch, Islamic Azad University, Najafabad, Isfahan, Iran. 2. MA Student. Fundamentals jurisprudence Islamic Law. , Islamic Azad Khorasgan University, Isfahan, Iran. 3. MA in History and Philosophy of Education, University of Isfahan, Isfahan, Iran. * E-mail of the corresponding author: hosintaghi@gmail.com Abstract The aim of the present research was examining mate choice from Avicenna’s perspective. Being done through application of qualitative approach and a descriptive-analytic method as well, this study attempted to analyze and examine Avicenna’s perspective on effects of mate choice and on criteria for selecting an appropriate spouse and its necessities as well as the hurts discussed in this regard. The research results showed that Avicenna has encouraged all people in marriage, since it brings to them economic and social outcomes, peace and sexual satisfaction as well. Avicenna stated some criteria for appropriate mate choice, and in addition to its necessities, he advised us to follow such principles as the obvious marriage occurrence, its stability, wife’s not being common and a suitable age range for marriage. Moreover, he has examined issues and hurts related to mate choice referring to such cases as nature incongruity, marital infidelity, the state of not having any babies and ethical conflict which may happen in mate choice and marriage, suggesting some solutions to such problems. -

Download; (2) the Appropriate Log-In and Password to Access the Server; and (3) Where on the Server (I.E., in What Folder) the File Was Kept

AN ALCTS MONOGRAPH LINKED DATA FOR THE PERPLEXED LIBRARIAN SCOTT CARLSON CORY LAMPERT DARNELLE MELVIN AND ANNE WASHINGTON chicago | 2020 alastore.ala.org © 2020 by the American Library Association Extensive effort has gone into ensuring the reliability of the information in this book; however, the publisher makes no warranty, express or implied, with respect to the material contained herein. ISBNs 978-0-8389-4746-3 (paper) 978-0-8389-4712-8 (PDF) 978-0-8389-4710-4 (ePub) 978-0-8389-4711-1 (Kindle) Library of Congress Control Number: 2019053975 Cover design by Alejandra Diaz. Text composition by Dianne M. Rooney in the Adobe Caslon Pro and Archer typefaces. This paper meets the requirements of ANSI/NISO Z39.48–1992 (Permanence of Paper). Printed in the United States of America 23 24 22 21 20 5 4 3 2 1 CONTENTS Acknowledgments vii Introduction ix One Enquire Within upon Everything 1 The Origins of Linked Data Two Unfunky and Obsolete 17 From MARC to RDF Three Mothership Connections 39 URIs and Serializations Four What Is a Thing? 61 Ontologies and Linked Data Five Once upon a Time Called Now 77 Real-World Examples of Linked Data Six Tear the Roof off the Sucker 105 Linked Library Data Seven Freaky and Habit-Forming 121 Linked Data Projects That Even Librarians Can Mess Around With EPILOGUE The Unprovable Pudding: Where Is Linked Data in Everyday Library Life? 139 Bibliography 143 Glossary 149 Figure Credits 153 About the Authors 155 Index 157 INTRODUCTION Since the mid-2000s, the greater GLAM (galleries, libraries, archives, and museums) community has proved itself to be a natural facilitator of the idea of linked data—that is, a large collection of datasets on the Internet that is structured so that both humans and computers can understand it. -

Preparing for a Linked Data Approach to Name Authority Control in an Institutional Repository Context

Title Assessing author identifiers: preparing for a linked data approach to name authority control in an institutional repository context Author details Corresponding author: Moira Downey, Digital Repository Content Analyst, Duke University ([email protected] ; 919 660 2409; https://orcid.org/0000-0002-6238-4690) 1 Abstract Linked data solutions for name authority control in digital libraries are an area of growing interest, particularly among institutional repositories (IRs). This article first considers the shift from traditional authority files to author identifiers, highlighting some of the challenges and possibilities. An analysis of author name strings in Duke University's open access repository, DukeSpace, is conducted in order to identify a suitable source of author URIs for Duke's newly launched repository for research data. Does one of three prominent international authority sources—LCNAF, VIAF, and ORCID—demonstrate the most comprehensive uptake? Finally, recommendations surrounding a technical approach to leveraging author URIs at Duke are briefly considered. Keywords Name authority control, Authority files, Author identifiers, Linked data, Institutional repositories 2 Introduction Linked data has increasingly been looked upon as an encouraging model for storing metadata about digital objects in the libraries, archives and museums that constitute the cultural heritage sector. Writing in 2010, Coyle draws a connection between the affordances of linked data and the evolution of what she refers to as "bibliographic control," that is, "the organization of library materials to facilitate discovery, management, identification, and access" (2010, p. 7). 
Coyle notes the encouragement of the Working Group on the Future of Bibliographic Control to think beyond the library catalog when considering avenues by which users seek and encounter information, as well as the group's observation that the future of bibliographic control will be "collaborative, decentralized, international in scope, and Web-based" (2010, p. -

OCLC and the Ethics of Librarianship: Using a Critical Lens to Recast a Key Resource

OCLC and the Ethics of Librarianship: Using a Critical Lens to Recast a Key Resource Maurine McCourry* Introduction Since its founding in 1967, OCLC has been a catalyst for dramatic change in the way that libraries organize and share resources. The organization’s structure has been self-described as a cooperative throughout its history, but its governance has never strictly fit that model. There have always been librarians involved, and it has remained a non-profit organization, but its business model is strongly influenced by the corporate world, and many in the upper echelon of its leadership have come from that realm. As a result, many librarians have questioned OCLC’s role as a partner with libraries in providing access to information. There has been an undercurrent of belief that the organization does not really share the values of the profession, and does not necessarily ascribe to its ethics. This paper will explore the role the ethics of the profession of librarianship have, or should have, in both the governance of OCLC as an organization, and the use of its services by the library community. Using the framework of critical librarianship, it will attempt to answer the following questions: 1) What obligation does OCLC have to observe the ethics of librarianship?; 2) Do OCLC’s current practices fit within the guidelines established by the accepted ethics of the profession?; and 3) What are the responsibilities of the library community in observing the ethics of the profession in relation to the services provided by OCLC? OCLC was founded with a mission of service to academic libraries, seeking to save library staff time and money while facilitating cooperative borrowing. -

Scripts, Languages, and Authority Control Joan M

49(4) LRTS 243 Scripts, Languages, and Authority Control Joan M. Aliprand Library vendors’ use of Unicode is leading to library systems with multiscript capability, which offers the prospect of multiscript authority records. Although librarians tend to focus on Unicode in relation to non-Roman scripts, language is a more important feature of authority records than script. The concept of a catalog “locale” (of which language is one aspect) is introduced. Restrictions on the structure and content of a MARC 21 authority record are outlined, and the alternative structures for authority records containing languages written in non-Roman scripts are described. The Unicode Standard is the universal encoding standard for all the characters used in writing the world’s languages.1 The availability of library systems based on Unicode offers the prospect of library records not only in all languages but also in all the scripts that a particular system supports. While such a system will be used primarily to create and provide access to bibliographic records in their actual scripts, it can also be used to create authority records for the library, perhaps for contribution to communal authority files. A number of general design issues apply to authority records in multiple languages and scripts, design issues that affect not just the key hubs of communal authority files, but any institution or organization involved with authority control. 
Multiple scripts in library systems became available in the 1980s in the Research Libraries Information Network (RLIN) with the addition of Chinese, Japanese, and Korean (CJK) capability, and in ALEPH (Israel’s research library network), which initially provided Latin and Hebrew scripts and later Arabic, Cyrillic, and Greek.2 The Library of Congress continued to produce catalog cards for material in the JACKPHY (Japanese, Arabic, Chinese, Korean, Persian, Hebrew, and Yiddish) languages until all of the scripts used to write these languages were supported by an automated system. -

There Are No Limits to Learning! Academic and High School

Brick and Click Libraries An Academic Library Symposium Northwest Missouri State University Friday, November 5, 2010 Managing Editors: Frank Baudino Connie Jo Ury Sarah G. Park Co-Editor: Carolyn Johnson Vicki Wainscott Pat Wyatt Technical Editor: Kathy Ferguson Cover Design: Sean Callahan Northwest Missouri State University Maryville, Missouri Brick & Click Libraries Team Director of Libraries: Leslie Galbreath Co-Coordinators: Carolyn Johnson and Kathy Ferguson Executive Secretary & Check-in Assistant: Beverly Ruckman Proposal Reviewers: Frank Baudino, Sara Duff, Kathy Ferguson, Hong Gyu Han, Lisa Jennings, Carolyn Johnson, Sarah G. Park, Connie Jo Ury, Vicki Wainscott and Pat Wyatt Technology Coordinators: Sarah G. Park and Hong Gyu Han Union & Food Coordinator: Pat Wyatt Web Page Editors: Lori Mardis, Sarah G. Park and Vicki Wainscott Graphic Designer: Sean Callahan Table of Contents Quick & Dirty Library Promotions That Really Work! 1 Eric Jennings, Reference & Instruction Librarian Kathryn Tvaruzka, Education Reference Librarian University of Wisconsin Leveraging Technology, Improving Service: Streamlining Student Billing Procedures 2 Colleen S. Harris, Head of Access Services University of Tennessee – Chattanooga Powerful Partnerships & Great Opportunities: Promoting Archival Resources and Optimizing Outreach to Public and K12 Community 8 Lea Worcester, Public Services Librarian Evelyn Barker, Instruction & Information Literacy Librarian University of Texas at Arlington Mobile Patrons: Better Services on the Go 12 Vincci Kwong, -

Remarks at Old North Bridge, Concord, Massachusetts” of the President’S Speeches and Statements: Reading Copies at the Gerald R

The original documents are located in Box 7, folder “4/19/75 - Remarks at Old North Bridge, Concord, Massachusetts” of the President’s Speeches and Statements: Reading Copies at the Gerald R. Ford Presidential Library. Copyright Notice The copyright law of the United States (Title 17, United States Code) governs the making of photocopies or other reproductions of copyrighted material. Gerald Ford donated to the United States of America his copyrights in all of his unpublished writings in National Archives collections. Works prepared by U.S. Government employees as part of their official duties are in the public domain. The copyrights to materials written by other individuals or organizations are presumed to remain with them. If you think any of the information displayed in the PDF is subject to a valid copyright claim, please contact the Gerald R. Ford Presidential Library. Digitized from Box 7 of President's Speeches and Statements: Reading Copies at the Gerald R. Ford Presidential Library THE PRESIDENT HAS SEEN REMARKS AT OLD NORTH BRIDGE CONCORD, MASSACHUSETTS SATURDAY, APRIL 19, 1975 - 1 - TWO HUNDRED YEARS AGO TODAY, AMERICAN MINUTEMEN RAISED THEIR MUSKETS AT THE OLD NORTH BRIDGE AND ANSWERED A BRITISH VOLLEY. RALPH WALDO EMERSON CALLED IT "THE SHOT HEARD 'ROUND THE WORLD." - 2 - THE BRITISH WERE SOON IN FULL RETREAT BACK TO BOSTON, BUT THERE WAS NO TURNING BACK FOR THE COLONISTS; THE AMERICAN REVOLUTION HAD BEGUN. - 3 - TODAY -- TWO CENTURIES LATER -- THE PRESIDENT OF FIFTY UNITED STATES AND TWO HUNDRED AND THIRTEEN MILLION PEOPLE -- STANDS BEFORE A NEW GENERATION OF AMERICANS WHO HAVE COME TO THIS HALLOWED GROUND. -

James Hayward

JAMES HAYWARD Born April 4, 1750 Killed in the Battle of Lexington April 19, 1775 With Genealogical Notes Relating to the Haywards Illustrated Privately Printed Springfield, Massachusetts 1911 A Powder-Horn now in possession of the Public Library, Acton, Massachusetts. "James Hayward of Acton, Massachusetts, who was killed at Lexington on April 19, 1775, by a ball which passed through his powder-horn into his body Presented to the town of Acton." James Hayward was a great-uncle of Everett Hosmer Barney. JAMES HAYWARD EVERETT HOSMER BARNEY GEORGE MURRAY BARNEY Copyright 1911 By William Frederick Adams NOTE.-Collecting records for one line of the Barney Family has resulted in the accumulation of other material not directly relating to the line in quest, but is of such value that it should be preserved. It is for this object that this volume is published. CONTENTS PAGE Hayward Genealogical Notes. 19 Concord-Lexington Fight April 19, 1775........ 39 If Lexington is the "Birthplace of Liberty"..... 42 List of Captain Isaac Davis' Company. 45 The Davis Monument, Acton, Massachusetts. 49 Index. 55 ILLUSTRATIONS James Hayward's Powder-Horn FRONTISPIECE Everett Hosmer Barney, Portrait Captain John Hayward's Company, List of Names Fisk's Hill, Lexington, Massachusetts James Hayward Tablet The Original Hayward Pump "Minute-man" "Minute-man" The Spirit of '76 Captain Isaac Davis' Company Davis Monument, Acton, Massachusetts George Murray Barney, Portrait Stone on which Captain Davis fell Hayward I GEORGE, 1635, Concord, Massachusetts I II JOSEPH III SIMEON IV SAMUEL V BENJAMIN VI AARON (married Rebecca, daughter of Joel Hosmer, Acton, and sister to Harriet Hosmer, mother of EVERETT HOSMER BARNEY and grandmother of GEORGE MURRAY BARNEY) 19 Hayward I-GEORGE1 Settled in Concord, Massachusetts, 1635 Born-- Married Mary ( ) Born-- Died 1693 He died March 29, 1671 Children: 1. -

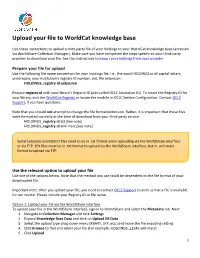

Upload Your File to Worldcat Knowledge Base

Upload your file to WorldCat knowledge base Use these instructions to upload a third-party file of your holdings to your WorldCat knowledge base (accessed via WorldShare Collection Manager). Make sure you have completed the steps specific to your third-party provider to download your file. See the instructions to export your holdings from your provider. Prepare your file for upload Use the following file name convention for your holdings file, i.e., the word HOLDINGS in all capital letters, underscore, your institution's registry ID number, dot, file extension: HOLDINGS_registry-id.extension Replace registry-id with your library's Registry ID (also called OCLC Institution ID). To locate the Registry ID for your library, visit the WorldCat Registry or locate the module in OCLC Service Configuration. Contact OCLC Support, if you have questions. Note that you should not attempt to change the file format/extension. Rather, it is important that these files were formatted correctly at the time of download from your third-party service. HOLDINGS_registry-id.txt [See note] HOLDINGS_registry-id.xml-marc [See note] Serial Solutions and EBSCO files need to be in .txt format when uploading via the WorldShare interface or via FTP. SFX files must be in .txt format to upload via the WorldShare interface, but in .xml-marc format to upload via FTP. Use the relevant option to upload your file Use one of the options below. Note that the method you use could be dependent on the file format of your downloaded file. Important note: After you upload your file, you need to contact OCLC Support to alert us that a file is available for our review.
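
The naming convention and format rules in the excerpt above can be sketched in a few lines of Python. This is only an illustration: the function names, the lowercase provider and method keys, and the sample Registry ID are assumptions made for the example and are not part of any OCLC tool. The helper builds a file name and nothing more. It does not convert a file, since the instructions warn against changing the format or extension after download.

```python
# Illustrative sketch only. The names below are hypothetical and are not
# an OCLC API; the rules come from the excerpt above.

# Expected file extension per (provider, upload method), as described above.
EXPECTED_EXTENSION = {
    ("serial solutions", "worldshare"): "txt",
    ("serial solutions", "ftp"): "txt",
    ("ebsco", "worldshare"): "txt",
    ("ebsco", "ftp"): "txt",
    ("sfx", "worldshare"): "txt",
    ("sfx", "ftp"): "xml-marc",
}


def expected_extension(provider: str, method: str) -> str:
    """Return the extension the excerpt lists for this provider and method."""
    key = (provider.strip().lower(), method.strip().lower())
    try:
        return EXPECTED_EXTENSION[key]
    except KeyError:
        raise ValueError(f"no rule listed for {provider!r} via {method!r}") from None


def holdings_filename(registry_id, provider: str, method: str) -> str:
    """Build HOLDINGS_<registry-id>.<extension> for a holdings upload."""
    return f"HOLDINGS_{registry_id}.{expected_extension(provider, method)}"


if __name__ == "__main__":
    # 12345 is a made-up Registry ID used purely for demonstration.
    print(holdings_filename(12345, "SFX", "ftp"))
    print(holdings_filename(12345, "EBSCO", "worldshare"))
```

A lookup table keeps the per-provider rules in one place, so a combination the excerpt does not mention raises an error instead of silently producing a guessed name.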