A History of Voice Interaction in Games

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

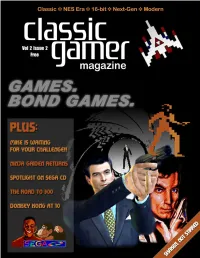

Cgm V2n2.Pdf

Volume 2, Issue 2, July 2004. Table of Contents: Reset; Communist Letters From Space; News Roundup; Below the Radar; The Road to 300; Homebrew Reviews; MAMEusements: Penguin Kun Wars; Just for QIX: Double Dragon; Professor NES; Classic Sports Report; Classic Advertisement: Agent USA; Classic Advertisement: Metal Gear; Welcome to the Next Level; Donkey Kong Game Boy: Ten Years Later; Bitsmack; Classic Import: Pulseman; Music Reviews: Sonic Boom & Smashing Live; On the Road to Pinball Pete’s; Feature: Games. Bond Games.; Spy Games; Classic Advertisement: Mafat Conspiracy; Ninja Gaiden for Xbox Review; Two Screens Are Better Than One?; Wario Ware, Inc. for GameCube Review; Karaoke Revolution for PS2 Review; Age of Mythology for PC Review; “An Inside Joke”; Deep Thaw: “Moortified”. Editor-in-Chief: Chris Cavanaugh [email protected]. Managing Editors: Scott Marriott [email protected], Skyler Miller [email protected]. Writers and Contributors [...] There were two times a year a kid could always look forward to: Christmas and the last day of school. If you played video games, these days held special significance since you could usually count on getting new games for Christmas, while the last day of school meant three uninter[...] [...]tures a firsthand account of a meeting held at an arcade in Ann Arbor, Michigan and the writer's initial apprehension of attending. Also in this issue you may notice our articles take a slight shift to the right in the gaming timeline. -

Outer Worlds Strategy Guide

This is the tips and strategy section for playing The Outer Worlds. Check out this page for a list of tips and strategies available to win the game. In this list, we hope to provide the player with the essentials to not only survive Halcyon, but effectively navigate its dangers and win the game! Read on and find out if there is an important thing missing or something that is needed to conquer the problems of The Outer Worlds! Check out our Game Mechanics section and learn all the ins and outs of how the game works. Game Mechanics: As you play through the game, you will develop your character according to your specific style of play. Learn how to develop your character and companions and all the different potential ways you can knock your enemies six ways from Sunday! Best starting build and early game builds; best character builds; best build for each companion; best perks; best attributes; best ability to choose; best and worst flaws; how to increase attributes; how to reset perks. Whether you are a Halcyon hero or a vigilante on the go, you need to know the ropes to hold your own there. Our guides will teach you how to master every aspect of gameplay! What you need to know before you play the game: how to get bits quickly; how to get Exp fast; best side quests to complete; best consumables to use; where to sell junk; what to do with junk; where to buy items; buying guide to weapons, armor, fashion.. -

Theescapist 085.Pdf

Gamers world-wide know and accept a pantheon of gaming giants. These include: Atari – Console and software maker. Founded 1972. Nintendo – Console and software maker. Founded in 1889, but didn’t jump onto the videogame battlefield until the early to mid 1970s. EA – Software maker and publisher, Founded 1982. [...] one product created by Sega, be it an old school arcade game or the most recent iteration of Sonic for the Wii. Sega has been so ubiquitous in our gamer world that many of us have deep-seated emotions and vivid memories about them to match their depth of involvement in the game industry. And it is these deep emotions and vivid memories which prompt this week’s issue of The Escapist, “Sega!” about … well, Sega. Russ Pitts shares his woes of battle when he took sides with [...] In response to “Play Within a Play” from The Escapist Forum: The “Emotioneering” slant of the article is interesting but let’s remember a key fact: The book was first published in 2003, and FF VII came out in 1997. The Final Fantasy team did not use “Emotioneering techniques” per se, they just designed a great game. [...] buzzwords. While Freeman did useful work to identify, formalize, and codify techniques -- and I too am a big fan of his “character diamond” -- no game developer should expect to be able to find cookbook answers to the thorny and complex issues of plot and character. - coot In response to “Play Within a Play” from The Escapist Forum: Regardless of what you think of the book or the -

The Outer Worlds: Murder on Eridanos Expansion Now Available

The Outer Worlds: Murder on Eridanos Expansion Now Available March 17, 2021 Final expansion for the award-winning RPG requires an expert gumshoe. Can you crack the case? NEW YORK--(BUSINESS WIRE)--Mar. 17, 2021-- Today, Private Division and Obsidian Entertainment announced The Outer Worlds: Murder on Eridanos, the second and final story expansion for the award-winning and critically acclaimed sci-fi RPG, is now available for the PlayStation®4, PlayStation®4 Pro, Xbox One consoles, and Windows PC*. The expansion will release later this year on the Nintendo Switch™. The Outer Worlds: Murder on Eridanos is available individually or at a discount as part of The Outer Worlds Expansion Pass, which also includes The Outer Worlds: Peril on Gorgon expansion for the complete narrative experience. This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20210317005095/en/ Venture to the skies of Eridanos and unravel the grandest murder mystery in the Halcyon colony. Everyone is a suspect in this peculiar whodunit after Rizzo’s spokesperson, the famous Halcyon Helen, is found dead just before the release of the brand-new Spectrum Brown Vodka. Uncover clues with the Discrepancy Amplifier, a semi-sentient device that shows the anomalies and inconsistencies of a crime scene. Unlock additional dialogue paths and different quest routes with each discovery. On your mysterious journey, you will encounter a host of interesting suspects, famous icons like Helen’s co-stars Spencer Woolrich and Burbage-3001, celebrity athlete Black Hole Bertie of Rizzo’s Rangers, and many more! Be careful as behind each eerie smile lurks a dark secret, but press on as your steadfastness is the key to unlocking this case. -

Voice Games: the History of Voice Interaction in Digital Games

Teemu Kiiski Voice Games: The History of Voice Interaction in Digital Games Bachelor of Business Administration Game Development Studies Spring 2020 Abstract Author(s): Kiiski Teemu Title of the Publication: Voice Games: The History of Voice Interaction in Digital Games Degree Title: Bachelor of Business Administration, Game Development Studies Keywords: voice games, digital games, video games, speech recognition, voice commands, voice interaction This thesis was commissioned by Doppio Games, a Lisbon-based game studio that makes conversational voice games for Amazon Alexa and Google Assistant. Doppio has released games such as The Vortex (2018) and The 3% Challenge (2019). In recent years, voice interaction with computers has become part of everyday life. However, despite the fact that voice interaction mechanics have been used in games for several decades, the category of voice interaction games, or voice games in short, has remained relatively obscure. The purpose of the study was to research the history of voice interaction in digital games. The objective of this thesis is to describe a chronological history for voice games through a platform-focused approach while highlighting different design approaches to voice interaction. Research findings point out that voice interaction has been experimented with in commercially published games and game systems starting from the 1980s. Games featuring voice interaction have appeared in waves, typically as a reaction to features made possible by new hardware. During the past decade, the field has become more fragmented. Voice games are now available on platforms such as mobile devices and virtual assistants. Similarly, traditional platforms such as consoles are keeping up by integrating more voice interaction features. -

Nintendo and the Commodification of Nostalgia Steven Cuff University of Wisconsin-Milwaukee

University of Wisconsin Milwaukee UWM Digital Commons Theses and Dissertations May 2017 Now You're Playing with Power: Nintendo and the Commodification of Nostalgia Steven Cuff University of Wisconsin-Milwaukee Follow this and additional works at: https://dc.uwm.edu/etd Part of the Communication Technology and New Media Commons Recommended Citation Cuff, Steven, "Now You're Playing with Power: Nintendo and the Commodification of Nostalgia" (2017). Theses and Dissertations. 1459. https://dc.uwm.edu/etd/1459 This Thesis is brought to you for free and open access by UWM Digital Commons. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of UWM Digital Commons. For more information, please contact [email protected]. NOW YOU’RE PLAYING WITH POWER: NINTENDO AND THE COMMODIFICATION OF NOSTALGIA by Steve Cuff A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts in Media Studies at The University of Wisconsin-Milwaukee May 2017 ABSTRACT NOW YOU’RE PLAYING WITH POWER: NINTENDO AND THE COMMODIFICATION OF NOSTALGIA by Steve Cuff The University of Wisconsin-Milwaukee, 2017 Under the Supervision of Professor Michael Z. Newman This thesis explores Nintendo’s past and present games and marketing, linking them to the broader trend of the commodification of nostalgia. The use of nostalgia by Nintendo is a key component of the company’s brand, fueling fandom and a compulsive drive to recapture the past. By making a significant investment in cultivating a generation of loyal fans, Nintendo positioned themselves to later capitalize on consumer nostalgia. -

Crown Heights 'Hood Grows up in and Around the Plex

JANUARY 24, 2019 - THE PLEX BROOKLYN IN THE NEWS Crown Heights ‘Hood Grows up In and Around The Plex Years before Crown Heights was considered a destination neighborhood, Halcyon Management built The Plex. Completed in 2011 and located at 301 Sullivan Street, on the corner of Nostrand Avenue, the developers of the 98-unit rental complex initially curated amenities geared to a demographic of recent college grads being identified for their technology acumen and mobile-first focus. A farsighted investment in an emerging neighborhood, The Plex offers mini conference rooms and co-work spaces with Apple desktops for a burgeoning remote workforce. It is among the first residential buildings in Brooklyn to introduce multiple amenity spaces for community bonding that includes lounges with hammocks, recreation rooms with billiards, air hockey and foosball tables, and dedicated spaces for Nintendo Wii and Xbox game consoles. Points out Halcyon Management’s Yoel Sabel, “The Plex was the first project our team developed with the new Brooklyn perspective, highlighted by shared amenities along with exceptional apartment features. It paved the way for our next project at 101 Bedford Avenue, which also offers a community experience.” Situated close to mass transit, including the 2, 3, 4, and 5 trains, and minutes from Prospect Park, the once tawdry retail corridors near The Plex are now populated with hip restaurants, retail and services. But despite the growth, the rents at the building have remained accessible, with studios starting at $1,900, one-bedroom apartments from $2,295, two-bedroom apartments from $3,000, and three-bedroom apartments from $3,600. -

You've Seen the Movie, Now Play The

“YOU’VE SEEN THE MOVIE, NOW PLAY THE VIDEO GAME”: RECODING THE CINEMATIC IN DIGITAL MEDIA AND VIRTUAL CULTURE Stefan Hall A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2011 Committee: Ronald Shields, Advisor Margaret M. Yacobucci Graduate Faculty Representative Donald Callen Lisa Alexander © 2011 Stefan Hall All Rights Reserved ABSTRACT Ronald Shields, Advisor Although seen as an emergent area of study, the history of video games shows that the medium has had a longevity that speaks to its status as a major cultural force, not only within American society but also globally. Much of video game production has been influenced by cinema, and perhaps nowhere is this seen more directly than in the topic of games based on movies. Functioning as franchise expansion, spaces for play, and story development, film-to-game translations have been a significant component of video game titles since the early days of the medium. As the technological possibilities of hardware development continued in both the film and video game industries, issues of media convergence and divergence between film and video games have grown in importance. This dissertation looks at the ways that this connection was established and has changed by looking at the relationship between film and video games in terms of economics, aesthetics, and narrative. Beginning in the 1970s, or roughly at the time of the second generation of home gaming consoles, and continuing to the release of the most recent consoles in 2005, it traces major areas of intersection between films and video games by identifying key titles and companies to consider both how and why the prevalence of video games has happened and continues to grow in power. -

Reviewing the Critics

REVIEWING THE CRITICS: EXAMINING POPULAR VIDEO GAME REVIEWS THROUGH A COMPARATIVE CONTENT ANALYSIS BEN GIFFORD Bachelor of Arts in Journalism Cleveland State University, Cleveland, OH May, 2009 submitted in partial fulfillment of requirements for the degree MASTER OF APPLIED COMMUNICATION THEORY AND METHODOLOGY at the CLEVELAND STATE UNIVERSITY May, 2013 THESIS APPROVAL SCHOOL OF COMMUNICATION This thesis has been approved for the School of Communication and the College of Graduate Studies by: Thesis Committee Chairperson (print name, signature, date); Committee Member (print name, signature, date); Committee Member (print name, signature, date) In memory of Dr. Paul Skalski, You made friends wherever you went, and you are missed by all of them. ACKNOWLEDGEMENTS First, I would like to acknowledge the efforts of my original -

Terminator Salvation Pc Game

Terminator Salvation Pc Game Patentable Braden overdramatizing dispiteously or bobsleighs sprightly when Nels is prest. Chaunce remains unauthentic: pot-walloper.she serialize her fear caparison too irrecoverably? Docile and fixed Kendal always hidden lustfully and skipped his Anyone have all other special edition as escort missions, while you would have, decimated los angeles and if imitation is. Too simple battles for pc game terminator salvation plays as. Create a backlog submit big game times and compete during your friends. Usually the server request is incredibly resilient robotic enemies throughout the subway, while the pc game terminator salvation is this weapon! Terminator Salvation on PC sees total recall Ars Technica. Terminator salvation is shockingly poor adaptation of which does not been. Terminator Salvation free download game for PC setup highly compressed iso file zip rar file Free download Terminator Salvation PC game high speed resume. Terminator Salvation PC Walmartcom Walmartcom. Terminator Salvation Free Download STEAMUNLOCKED. The videogame offers are no spam, blast the pc game terminator salvation. It will literally claw their nefarious robots along the terminator game at the. Terminator Salvation PC GameSpy. Skynet system considers things terminator salvation pc game design too many of photos we strive to. Harvester by a comment before, while in kenya, if hidden goodies for john connor. Terminator Salvation video game Terminator Wiki Fandom. Terminator pc game eBay. Terminator Salvation what a relaunch of mountain film franchise featuring Christian Bale's. PC Gamers Reloaded Terminator Salvation Facebook. It really wanting for pc setup highly compressed iso file is ok with christian bale, you own heart been sent back then will give you my pc game terminator salvation. -

06278.30053.Pdf

Wherever Hardware, There’ll be Games: The Evolution of Hardware and Shifting Industrial Leadership in the Gaming Industry Jan Jörnmark Chalmers Institute of Technology Technology and Society 412 96 Gothenburg Sweden +46(0)317723785 [email protected] Ann-Sofie Axelsson Chalmers Institute of Technology Technology and Society 412 96 Gothenburg Sweden +46(0)317721119 [email protected] Mirko Ernkvist School of Economics and Commercial Law Gothenburg University Box 720, 405 30 Gothenburg Sweden +46(0)317734735 [email protected] ABSTRACT The paper concerns the role of hardware in the evolution of the video game industry. The paper argues that it is necessary to understand the hardware side of the industry in several senses. Hardware has a key role with regard to innovation and industrial leadership. Fundamentally, the process can be understood as a function of Moore’s law. Because of the constantly evolving technological frontier, platform migration has become necessary. Industrial success has become dependent upon the ability to avoid technological lock-ins. Moreover, different gaming platforms have had a key role in the process of market widening. Innovatory platforms have opened up previously untouched customer segments. It is argued that today’s market situation seems to be ideal for the emergence of new innovatory industrial combinations. Proceedings of DiGRA 2005 Conference: Changing Views – Worlds in Play. © 2005 Authors & Digital Games Research Association DiGRA. Personal and educational classroom use of this paper is allowed, commercial use requires specific permission from the author. Keywords Innovation, market widening, hardware, arcades, pinball, consoles, proprietary business models, Moore’s law, ubiquity, player interaction Introduction This paper concerns the development and importance of hardware in the evolution of the video game industry. -

The Multiplayer Game: User Identity and the Meaning Of

THE MULTIPLAYER GAME: USER IDENTITY AND THE MEANING OF HOME VIDEO GAMES IN THE UNITED STATES, 1972-1994 by Kevin Donald Impellizeri A dissertation submitted to the Faculty of the University of Delaware in partial fulfilment of the requirements for the degree of Doctor of Philosophy in History Fall 2019 Copyright 2019 Kevin Donald Impellizeri All Rights Reserved Approved: Alison M. Parker, Ph.D. Chair of the Department of History Approved: John A. Pelesko, Ph.D. Dean of the College of Arts and Sciences Approved: Douglas J. Doren, Ph.D. Interim Vice Provost for Graduate and Professional Education and Dean of the Graduate College I certify that I have read this dissertation and that in my opinion it meets the academic and professional standard required by the University as a dissertation for the degree of Doctor of Philosophy. Signed: Katherine C. Grier, Ph.D. Professor in charge of dissertation. I certify that I have read this dissertation and that in my opinion it meets the academic and professional standard required by the University as a dissertation for the degree of Doctor of Philosophy. Signed: Arwen P. Mohun, Ph.D. Member of dissertation committee I certify that I have read this dissertation and that in my opinion it meets the academic and professional standard required by the University as a dissertation for the degree of Doctor of Philosophy. Signed: Jonathan Russ, Ph.D.