Building a Dictionary for Genomes: Identification of Presumptive Regulatory Sites by Statistical Analysis

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

DNA Sequencing and Sorting: Identifying Genetic Variations

BioMath DNA Sequencing and Sorting: Identifying Genetic Variations Student Edition Funded by the National Science Foundation, Proposal No. ESI-06-28091 This material was prepared with the support of the National Science Foundation. However, any opinions, findings, conclusions, and/or recommendations herein are those of the authors and do not necessarily reflect the views of the NSF. At the time of publishing, all included URLs were checked and active. We make every effort to make sure all links stay active, but we cannot make any guaranties that they will remain so. If you find a URL that is inactive, please inform us at [email protected]. DIMACS Published by COMAP, Inc. in conjunction with DIMACS, Rutgers University. ©2015 COMAP, Inc. Printed in the U.S.A. COMAP, Inc. 175 Middlesex Turnpike, Suite 3B Bedford, MA 01730 www.comap.com ISBN: 1 933223 71 5 Front Cover Photograph: EPA GULF BREEZE LABORATORY, PATHO-BIOLOGY LAB. LINDA SHARP ASSISTANT This work is in the public domain in the United States because it is a work prepared by an officer or employee of the United States Government as part of that person’s official duties. DNA Sequencing and Sorting: Identifying Genetic Variations Overview Each of the cells in your body contains a copy of your genetic inheritance, your DNA which has been passed down to you, one half from your biological mother and one half from your biological father. This DNA determines physical features, like eye color and hair color, and can determine susceptibility to medical conditions like hypertension, heart disease, diabetes, and cancer. -

A Tool for Detecting Base Mis-Calls in Multiple Sequence Alignments by Semi-Automatic Chromatogram Inspection

ChromatoGate: A Tool for Detecting Base Mis-Calls in Multiple Sequence Alignments by Semi-Automatic Chromatogram Inspection Nikolaos Alachiotis Emmanouella Vogiatzi∗ Scientific Computing Group Institute of Marine Biology and Genetics HITS gGmbH HCMR Heidelberg, Germany Heraklion Crete, Greece [email protected] [email protected] Pavlos Pavlidis Alexandros Stamatakis Scientific Computing Group Scientific Computing Group HITS gGmbH HITS gGmbH Heidelberg, Germany Heidelberg, Germany [email protected] [email protected] ∗ Affiliated also with the Department of Genetics and Molecular Biology of the Democritian University of Thrace at Alexandroupolis, Greece. Corresponding author: Nikolaos Alachiotis Keywords: chromatograms, software, mis-calls Abstract Automated DNA sequencers generate chromatograms that contain raw sequencing data. They also generate data that translates the chromatograms into molecular sequences of A, C, G, T, or N (undetermined) characters. Since chromatogram translation programs frequently introduce errors, a manual inspection of the generated sequence data is required. As sequence numbers and lengths increase, visual inspection and manual correction of chromatograms and corresponding sequences on a per-peak and per-nucleotide basis becomes an error-prone, time-consuming, and tedious process. Here, we introduce ChromatoGate (CG), an open-source software that accelerates and partially automates the inspection of chromatograms and the detection of sequencing errors for bidirectional sequencing runs. To provide users full control over the error correction process, a fully automated error correction algorithm has not been implemented. Initially, the program scans a given multiple sequence alignment (MSA) for potential sequencing errors, assuming that each polymorphic site in the alignment may be attributed to a sequencing error with a certain probability. -

A Brief History of Sequence Logos

Biostatistics and Biometrics Open Access Journal ISSN: 2573-2633 Mini-Review Biostat Biometrics Open Acc J Volume 6 Issue 3 - April 2018 Copyright © All rights are reserved by Kushal K Dey DOI: 10.19080/BBOAJ.2018.06.555690 A Brief History of Sequence Logos Kushal K Dey* Department of Statistics, University of Chicago, USA Submission: February 12, 2018; Published: April 25, 2018 *Corresponding author: Kushal K Dey, Department of Statistics, University of Chicago, 5747 S Ellis Ave, Chicago, IL 60637, USA. Tel: 312-709- 0680; Email: Abstract For nearly three decades, sequence logo plots have served as the standard tool for graphical representation of aligned DNA, RNA and protein sequences. Over the years, a large number of packages and web applications have been developed for generating these logo plots and using them handling and the overall scope of these plots in biological applications and beyond. Here I attempt to review some popular tools for generating sequenceto identify logos, conserved with a patterns focus on in how sequences these plots called have motifs. evolved Also, over over time time, since we their have origin seen anda considerable how I view theupgrade future in for the these look, plots. flexibility of data Keywords : Graphical representation; Sequence logo plots; Standard tool; Motifs; Biological applications; Flexibility of data; DNA sequence data; Python library; Interdependencies; PLogo; Depletion of symbols; Alphanumeric strings; Visualizes pairwise; Oligonucleotide RNA sequence data; Visualize succinctly; Predictive power; Initial attempts; Widespread; Stylistic configurations; Multiple sequence alignment; Introduction based comparisons and predictions. In the next section, we The seeds of the origin of sequence logos were planted in review the modeling frameworks and functionalities of some of early 1980s when researchers, equipped with large amounts of these tools [5]. -

Bioinformatics Manual Sequence Data Analysis with CLC Main Workbench

Introduction to Molecular Biology and Bioinformatics Workshop Biosciences eastern and central Africa - International Livestock Research Institute Hub (BecA-ILRI Hub), Nairobi, Kenya May 5-16, 2014 Bioinformatics Manual Sequence data analysis with CLC Main Workbench Written and compiled by Joyce Njuguna, Mark Wamalwa, Rob Skilton 1 Getting started with CLC Main Workbench A. Quality control of sequenced data B. Assembling sequences C. Nucleotide and protein sequence manipulation D. BLAST sequence search E. Aligning sequences F. Building phylogenetic trees Welcome to CLC Main Workbench -- a user-friendly sequence analysis software package for analysing Sanger sequencing data and for supporting your daily bioinformatics work. Definition Sequence: the order of nucleotide bases [or amino acids] in a DNA [or protein] molecule DNA Sequencing: Biochemical methods used to determine the order of nucleotide bases, adenine(A), guanine(G),cytosine(C)and thymine(T) in a DNA strand TIP CLC Main Workbench includes an extensive Help function, which can be found in the Help menu of the program’s Menu bar. The Help can also be shown by pressing F1. The help topics are sorted in a table of contents and the topics can be searched. Also, it is recommended that you view the Online presentations where a product specialist from CLC bio demonstrates the software. This is a very easy way to get started using the program. Read more about online presentations here: http://clcbio.com/presentation. A. Getting Started with CLC Main Workbench 1. Download the trial version of CLC Main Workbench 6.8.1 from the following URL: http://www.clcbio.com/products/clc-main-workbench-direct-download/ 2. -

A STAT Protein Domain That Determines DNA Sequence Recognition Suggests a Novel DNA-Binding Domain

Downloaded from genesdev.cshlp.org on September 25, 2021 - Published by Cold Spring Harbor Laboratory Press A STAT protein domain that determines DNA sequence recognition suggests a novel DNA-binding domain Curt M. Horvath, Zilong Wen, and James E. Darnell Jr. Laboratory of Molecular Cell Biology, The Rockefeller University, New York, New York 10021 Statl and Stat3 are two members of the ligand-activated transcription factor family that serve the dual functions of signal transducers and activators of transcription. Whereas the two proteins select very similar (not identical) optimum binding sites from random oligonucleotides, differences in their binding affinity were readily apparent with natural STAT-binding sites. To take advantage of these different affinities, chimeric Statl:Stat3 molecules were used to locate the amino acids that could discriminate a general binding site from a specific binding site. The amino acids between residues -400 and -500 of these -750-amino-acid-long proteins determine the DNA-binding site specificity. Mutations within this region result in Stat proteins that are activated normally by tyrosine phosphorylation and that dimerize but have greatly reduced DNA-binding affinities. [Key Words: STAT proteins; DNA binding; site selection] Received January 6, 1995; revised version accepted March 2, 1995. The STAT (signal transducers and activators if transcrip- Whereas oligonucleotides representing these selected se- tion) proteins have the dual purpose of, first, signal trans- quences exhibited slight binding preferences, the con- duction from ligand-activated receptor kinase com- sensus sites overlapped sufficiently to be recognized by plexes, followed by nuclear translocation and DNA bind- both factors. However, by screening different natural ing to activate transcription (Darnell et al. -

Bioinformatics I Sanger Sequence Analysis

Updated: October 2020 Lab 5: Bioinformatics I Sanger Sequence Analysis Project Guide The Wolbachia Project Page Contents 3 Activity at a Glance 4 Technical Overview 5-7 Activity: How to Analyze Sanger Sequences 8 DataBase Entry 9 Appendix I: Illustrated BLAST Alignment 10 Appendix II: Sanger Sequencing Quick Reference Content is made available under the Creative Commons Attribution-NonCommercial-No Derivatives International License. Contact ([email protected]) if you would like to make adaptations for distribution beyond the classroom. The Wolbachia Project: Discover the Microbes Within! was developed by a collaboration of scientists, educators, and outreach specialists. It is directed by the Bordenstein Lab at Vanderbilt University. https://www.vanderbilt.edu/wolbachiaproject 2 Activity at a Glance Goals To analyze and interpret the quality of Sanger sequences To generate a consensus DNA sequence for bioinformatics analyses Learning Objectives Upon completion of this activity, students will (i) understand the Sanger method of sequencing, also known as the chain-termination method; (ii) be able to interpret chromatograms; (iii) evaluate sequencing Quality Scores; and (iv) generate a consensus DNA sequence based on forward and reverse Sanger reactions. Prerequisite Skills While no computer programming skills are necessary to complete this work, prior exposure to personal computers and the Internet is assumed. Teaching Time: One class period Recommended Background Tutorials • DNA Learning Center Animation: Sanger Method of -

Identification of Factors That Interact with the E IA-Inducible Adenovirus E3 Promoter

Downloaded from genesdev.cshlp.org on September 30, 2021 - Published by Cold Spring Harbor Laboratory Press Identification of factors that interact with the E IA-inducible adenovirus E3 promoter Helen C. Hurst and Nicholas C. Jones Gene Regulation Group, Imperial Cancer Research Fund, London, England, WC2A 3PX We have investigated the E1A-inducible E3 promoter of adenovirus type 5 with respect to its ability to bind specific nuclear proteins. Four distinct nucleoprotein-binding sites were detected, located between positions - 7 to -33, -44 to -68, -81 to - 103, and - 154 to - 183, relative to the E3 cap site. These sites contain sequences previously shown to be functionally important for efficient E3 transcription. No major qualitative or quantitative differences were found in the binding pattern between nucleoprotein extracts prepared from uninfected or adenovirus-infected HeLa cells. Competition experiments suggest that the factors binding to the - 154 to - 183 and -81 to - 103 sites are the previously identified nucleoproteins, NFI and AP1, respectively. The factor binding to the -44 to -68 site, which we term ATF, also interacts with other E1A-inducible promoters and is very similar and probably identical to the factor that binds to the cAMP-responsive element of somatostatin. We have purified this factor, which is a protein of 43 kD in size. [Key Words: Trans-activation; gel retardation; footprinting; nuclear factors] Received June 12, 1987; revised version accepted October 5, 1987. The control of the rate of transcription initiation is an (Borrelli et al. 1984; Velcich and Ziff 1985), whereas the important element in the regulation of eukaryotic gene protein of 243 amino acids, although it represses very expression. -

Lecture 7: Sequence Motif Discovery

Sequence motif: definitions COSC 348: Computing for Bioinformatics • In Bioinformatics, a sequence motif is a nucleotide or amino-acid sequence pattern that is widespread and has Lecture 7: been proven or assumed to have a biological significance. Sequence Motif Discovery • Once we know the sequence pattern of the motif, then we can use the search methods to find it in the sequences (i.e. Lubica Benuskova Boyer-Moore algorithm, Rabin-Karp, suffix trees, etc.) • The problem is to discover the motifs, i.e. what is the order of letters the particular motif is comprised of. http://www.cs.otago.ac.nz/cosc348/ 1 2 Examples of motifs in DNA Sequence motif: notations • The TATA promoter sequence is an example of a highly • An example of a motif in a protein: N, followed by anything but P, conserved DNA sequence motif found in eukaryotes. followed by either S or T, followed by anything but P − One convention is to write N{P}[ST]{P} where {X} means • Another example of motifs: binding sites for transcription any amino acid except X; and [XYZ] means either X or Y or Z. factors (TF) near promoter regions of genes, etc. • Another notation: each ‘.’ signifies any single AA, and each ‘*’ Gene 1 indicates one member of a closely-related AA family: Gene 2 − WDIND*.*P..*...D.F.*W***.**.IYS**...A.*H*S*WAMRN Gene 3 Gene 4 • In the 1st assignment we have motifs like A??CG, where the Gene 5 wildcard ? Stands for any of A,U,C,G. Binding sites for TF 3 4 Sequence motif discovery from conservation Motif discovery based on alignment • profile analysis is another word for this. -

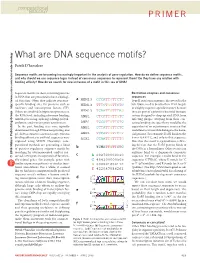

What Are DNA Sequence Motifs?

PRIMER What are DNA sequence motifs? Patrik D’haeseleer Sequence motifs are becoming increasingly important in the analysis of gene regulation. How do we define sequence motifs, and why should we use sequence logos instead of consensus sequences to represent them? Do they have any relation with binding affinity? How do we search for new instances of a motif in this sea of DNA? Sequence motifs are short, recurring patterns Restriction enzymes and consensus in DNA that are presumed to have a biologi- sequences cal function. Often they indicate sequence- a HEM13 CCCATTGTTCTC Type II restriction enzymes, discovered in the specific binding sites for proteins such as HEM13 TTTCTGGTTCTC late 1960s, need to bind to their DNA targets http://www.nature.com/naturebiotechnology nucleases and transcription factors (TF). in a highly sequence-specific manner, because Others are involved in important processes at HEM13 TCAATTGTTTAG they are part of a primitive bacterial immune the RNA level, including ribosome binding, ANB1 CTCATTGTTGTC system designed to chop up viral DNA from mRNA processing (splicing, editing, polyad- infecting phages. Straying from their con- enylation) and transcription termination. ANB1 TCCATTGTTCTC sensus binding site specificity would be the In the past, binding sites were typically ANB1 CCTATTGTTCTC equivalent of an autoimmune reaction that determined through DNase footprinting, and could lead to irreversible damage to the bacte- gel-shift or reporter construct assays, whereas ANB1 TCCATTGTTCGT rial genome. For example, EcoRI binds to the binding affinities to artificial sequences were ROX1 CCAATTGTTTTG 6-mer GAATTC, and only to that sequence. explored using SELEX. Nowadays, com- Note that this motif is a palindrome, reflect- putational methods are generating a flood ing the fact that the EcoRI protein binds to Nature Publishing Group Group Nature Publishing b YCHATTGTTCTC 6 of putative regulatory sequence motifs by the DNA as a homodimer. -

Identification of a Consensus DNA-Binding Site for The

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Biochemistry -- Faculty Publications Biochemistry, Department of 2008 Identification of a Consensus DNA-Binding Site for the Arabidopsis thaliana SBP Domain Transcription Factor, AtSPL14, and Binding Kinetics by Surface Plasmon Resonance Xinwen Liang University of Nebraska - Lincoln, [email protected] Tara J. Nazarenus University of Nebraska-Lincoln, [email protected] Julie M. Stone University of Nebraska-Lincoln, [email protected] Follow this and additional works at: https://digitalcommons.unl.edu/biochemfacpub Part of the Biochemistry, Biophysics, and Structural Biology Commons Liang, Xinwen; Nazarenus, Tara J.; and Stone, Julie M., "Identification of a Consensus DNA-Binding Site for the Arabidopsis thaliana SBP Domain Transcription Factor, AtSPL14, and Binding Kinetics by Surface Plasmon Resonance" (2008). Biochemistry -- Faculty Publications. 84. https://digitalcommons.unl.edu/biochemfacpub/84 This Article is brought to you for free and open access by the Biochemistry, Department of at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Biochemistry -- Faculty Publications by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. Published in Biochemistry 47:12 (2008), pp 3645–3653; doi: 10.1021/bi701431y Copyright © 2008 American Chemical Society. Used by permission. Published online February 27, 2008. Identification of a Consensus DNA-Binding Site for the Arabidopsis thaliana SBP Domain Transcription -

Genome-Wide Profiling of PPAR :RXR and RNA Polymerase II Occupancy

Downloaded from genesdev.cshlp.org on September 26, 2021 - Published by Cold Spring Harbor Laboratory Press Genome-wide profiling of PPAR␥:RXR and RNA polymerase II occupancy reveals temporal activation of distinct metabolic pathways and changes in RXR dimer composition during adipogenesis Ronni Nielsen,1,4 Thomas Åskov Pedersen,1,4 Dik Hagenbeek,2,4 Panagiotis Moulos,2,3 Rasmus Siersbæk,1 Eva Megens,2 Sergei Denissov,2 Michael Børgesen,1 Kees-Jan Francoijs,2 Susanne Mandrup,1,6 and Hendrik G. Stunnenberg2,5 1Department of Biochemistry and Molecular Biology, University of Southern Denmark, 5230 Odense M, Denmark; 2Department of Molecular Biology, Nijmegen Center for Molecular Life Sciences, Radboud University, 6500 HB Nijmegen, The Netherlands; 3Metabolic Engineering and Bioinformatics Group, Institute of Biological Research and Biotechnology, National Hellenic Research Foundation, 11635 Athens, Greece The nuclear receptor peroxisome proliferator-activated receptor ␥ (PPAR␥) is a key regulator of adipocyte differentiation in vivo and ex vivo and has been shown to control the expression of several adipocyte-specific genes. In this study, we used chromatin immunoprecipitation combined with deep sequencing to generate genome-wide maps of PPAR␥ and retinoid X receptor (RXR)-binding sites, and RNA polymerase II (RNAPII) occupancy at very high resolution throughout adipocyte differentiation of 3T3-L1 cells. We identify >5000 high-confidence shared PPAR␥:RXR-binding sites in adipocytes and show that during early stages of differentiation, many of these are preoccupied by non-PPAR␥ RXR-heterodimers. Different temporal and compositional patterns of occupancy are observed. In addition, we detect co-occupancy with members of the C/EBP family. -

Consensus Sequence Design As a General Strategy to Create Hyperstable, Biologically Active Proteins

bioRxiv preprint doi: https://doi.org/10.1101/466391; this version posted November 17, 2018. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under aCC-BY-NC 4.0 International license. Biological sciences; biophysics and computational biology Consensus sequence design as a general strategy to create hyperstable, biologically active proteins Matt Sternke1, Katherine W. Tripp1, & Doug Barrick1* 1The T.C. Jenkins Department of Biophysics, Johns Hopkins University, 3400 N. Charles St., Baltimore, MD 21218 USA. *To whom correspondence should be addressed. Email addresses: [email protected], [email protected], and [email protected] Keywords: consensus sequence design, protein stability, protein function 1 bioRxiv preprint doi: https://doi.org/10.1101/466391; this version posted November 17, 2018. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under aCC-BY-NC 4.0 International license. Abstract Consensus sequence design offers a promising strategy for designing proteins of high stability while retaining biological activity since it draws upon an evolutionary history in which residues important for both stability and function are likely to be conserved. Although there have been several reports of successful consensus design of individual targets, it is unclear from these anecdotal studies how often this approach succeeds, and how often it fails. Here, we attempt to assess the generality of consensus by designing consensus sequences for a set of six protein families over a range of chain-lengths, structures, and activities.