Student Guide

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

My Personal Callsign List This List Was Not Designed for Publication However Due to Several Requests I Have Decided to Make It Downloadable

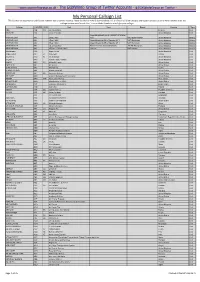

- www.egxwinfogroup.co.uk - The EGXWinfo Group of Twitter Accounts - @EGXWinfoGroup on Twitter - My Personal Callsign List This list was not designed for publication however due to several requests I have decided to make it downloadable. It is a mixture of listed callsigns and logged callsigns so some have numbers after the callsign as they were heard. Use CTL+F in Adobe Reader to search for your callsign Callsign ICAO/PRI IATA Unit Type Based Country Type ABG AAB W9 Abelag Aviation Belgium Civil ARMYAIR AAC Army Air Corps United Kingdom Civil AgustaWestland Lynx AH.9A/AW159 Wildcat ARMYAIR 200# AAC 2Regt | AAC AH.1 AAC Middle Wallop United Kingdom Military ARMYAIR 300# AAC 3Regt | AAC AgustaWestland AH-64 Apache AH.1 RAF Wattisham United Kingdom Military ARMYAIR 400# AAC 4Regt | AAC AgustaWestland AH-64 Apache AH.1 RAF Wattisham United Kingdom Military ARMYAIR 500# AAC 5Regt AAC/RAF Britten-Norman Islander/Defender JHCFS Aldergrove United Kingdom Military ARMYAIR 600# AAC 657Sqn | JSFAW | AAC Various RAF Odiham United Kingdom Military Ambassador AAD Mann Air Ltd United Kingdom Civil AIGLE AZUR AAF ZI Aigle Azur France Civil ATLANTIC AAG KI Air Atlantique United Kingdom Civil ATLANTIC AAG Atlantic Flight Training United Kingdom Civil ALOHA AAH KH Aloha Air Cargo United States Civil BOREALIS AAI Air Aurora United States Civil ALFA SUDAN AAJ Alfa Airlines Sudan Civil ALASKA ISLAND AAK Alaska Island Air United States Civil AMERICAN AAL AA American Airlines United States Civil AM CORP AAM Aviation Management Corporation United States Civil -

Appendix 25 Box 31/3 Airline Codes

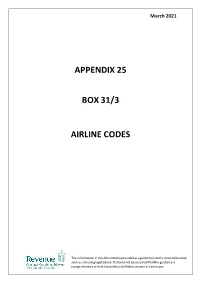

March 2021 APPENDIX 25 BOX 31/3 AIRLINE CODES The information in this document is provided as a guide only and is not professional advice, including legal advice. It should not be assumed that the guidance is comprehensive or that it provides a definitive answer in every case. Appendix 25 - SAD Box 31/3 Airline Codes March 2021 Airline code Code description 000 ANTONOV DESIGN BUREAU 001 AMERICAN AIRLINES 005 CONTINENTAL AIRLINES 006 DELTA AIR LINES 012 NORTHWEST AIRLINES 014 AIR CANADA 015 TRANS WORLD AIRLINES 016 UNITED AIRLINES 018 CANADIAN AIRLINES INT 020 LUFTHANSA 023 FEDERAL EXPRESS CORP. (CARGO) 027 ALASKA AIRLINES 029 LINEAS AER DEL CARIBE (CARGO) 034 MILLON AIR (CARGO) 037 USAIR 042 VARIG BRAZILIAN AIRLINES 043 DRAGONAIR 044 AEROLINEAS ARGENTINAS 045 LAN-CHILE 046 LAV LINEA AERO VENEZOLANA 047 TAP AIR PORTUGAL 048 CYPRUS AIRWAYS 049 CRUZEIRO DO SUL 050 OLYMPIC AIRWAYS 051 LLOYD AEREO BOLIVIANO 053 AER LINGUS 055 ALITALIA 056 CYPRUS TURKISH AIRLINES 057 AIR FRANCE 058 INDIAN AIRLINES 060 FLIGHT WEST AIRLINES 061 AIR SEYCHELLES 062 DAN-AIR SERVICES 063 AIR CALEDONIE INTERNATIONAL 064 CSA CZECHOSLOVAK AIRLINES 065 SAUDI ARABIAN 066 NORONTAIR 067 AIR MOOREA 068 LAM-LINHAS AEREAS MOCAMBIQUE 069 LAPA 070 SYRIAN ARAB AIRLINES 071 ETHIOPIAN AIRLINES 072 GULF AIR 073 IRAQI AIRWAYS 074 KLM ROYAL DUTCH AIRLINES 075 IBERIA 076 MIDDLE EAST AIRLINES 077 EGYPTAIR 078 AERO CALIFORNIA 079 PHILIPPINE AIRLINES 080 LOT POLISH AIRLINES 081 QANTAS AIRWAYS

Airline Schedules

Airline Schedules. This finding aid was produced using ArchivesSpace on January 08, 2019. English (eng). Describing Archives: A Content Standard. Special Collections and Archives Division, History of Aviation Archives. 3020 Waterview Pkwy SP2 Suite 11.206, Richardson, Texas 75080. [email protected]. URL: https://www.utdallas.edu/library/special-collections-and-archives/ Table of Contents: Summary Information; Scope and Content; Series Description; Administrative Information; Related Materials; Controlled Access Headings; Collection Inventory. Summary Information Repository:

Air Passenger Origin and Destination, Canada-United States Report

Catalogue no. 51-205-XIE Air Passenger Origin and Destination, Canada-United States Report 2005 How to obtain more information Specific inquiries about this product and related statistics or services should be directed to: Aviation Statistics Centre, Transportation Division, Statistics Canada, Ottawa, Ontario, K1A 0T6 (Telephone: 1-613-951-0068; Internet: [email protected]). For information on the wide range of data available from Statistics Canada, you can contact us by calling one of our toll-free numbers. You can also contact us by e-mail or by visiting our website at www.statcan.ca. National inquiries line 1-800-263-1136 National telecommunications device for the hearing impaired 1-800-363-7629 Depository Services Program inquiries 1-800-700-1033 Fax line for Depository Services Program 1-800-889-9734 E-mail inquiries [email protected] Website www.statcan.ca Information to access the product This product, catalogue no. 51-205-XIE, is available for free in electronic format. To obtain a single issue, visit our website at www.statcan.ca and select Publications. Standards of service to the public Statistics Canada is committed to serving its clients in a prompt, reliable and courteous manner. To this end, the Agency has developed standards of service which its employees observe in serving its clients. To obtain a copy of these service standards, please contact Statistics Canada toll free at 1-800-263-1136. The service standards are also published on www.statcan.ca under About us > Providing services to Canadians. Statistics Canada Transportation Division Aviation Statistics Centre Air Passenger Origin and Destination, Canada-United States Report 2005 Published by authority of the Minister responsible for Statistics Canada © Minister of Industry, 2007 All rights reserved. -

BEECH D18S/ D18C & RCAF EXPEDITER Mk.3 (Built at Wichita, Kansas Between 1945 and 1957)

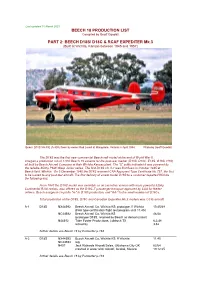

Last updated 10 March 2021 BEECH 18 PRODUCTION LIST Compiled by Geoff Goodall PART 2: BEECH D18S/ D18C & RCAF EXPEDITER Mk.3 (Built at Wichita, Kansas between 1945 and 1957) Beech D18S VH-FIE (A-808) flown by owner Rod Lovell at Mangalore, Victoria in April 1984. Photo by Geoff Goodall The D18S was the first new commercial Beechcraft model at the end of World War II. It began a production run of 1,800 Beech 18 variants for the post-war market (D18S, D18C, E18S, G18S, H18), all built by Beech Aircraft Company at their Wichita Kansas plant. The “S” suffix indicated it was powered by the reliable 450hp P&W Wasp Junior series. The first D18S c/n A-1 was first flown in October 1945 at Beech field, Wichita. On 5 December 1945 the D18S received CAA Approved Type Certificate No.757, the first to be issued to any post-war aircraft. The first delivery of a new model D18S to a customer departed Wichita the following day. From 1947 the D18C model was available as an executive version with more powerful 525hp Continental R-9A radials, also offered as the D18C-T passenger transport approved by CAA for feeder airlines. Beech assigned c/n prefix "A-" to D18S production, and "AA-" to the small number of D18Cs. Total production of the D18S, D18C and Canadian Expediter Mk.3 models was 1,035 aircraft. A-1 D18S NX44592 Beech Aircraft Co, Wichita KS: prototype, ff Wichita 10.45/48 (FAA type certification flight test program until 11.45) NC44592 Beech Aircraft Co, Wichita KS 46/48 (prototype D18S, retained by Beech as demonstrator) N44592 Tobe Foster Productions, Lubbock TX 6.2.48 retired by 3.52 further details see Beech 18 by Parmerter p.184 A-2 D18S NX44593 Beech Aircraft Co, Wichita KS: ff Wichita 11.45 NC44593 reg. -

Crash Survivability and the Emergency Brace Position

航空宇宙政策․法學會誌 (The Korean Journal of Air & Space Law and Policy), Vol. 33, No. 2, published December 30, 2018, pp. 199~224. Received 2018. 11. 30; reviewed 2018. 12. 14; accepted 2018. 12. 30. http://dx.doi.org/10.31691/KASL33.2.6. Regulatory Aspects of Passenger and Crew Safety: Crash Survivability and the Emergency Brace Position Jan M. Davies* CONTENTS Ⅰ. Introduction Ⅱ. Passenger and Crew Crash Survivability and the Emergency Brace Position Ⅲ. Regulations, and their Gaps, Relating to the Emergency Brace Position Ⅳ. Conclusions * Professor Jan M Davies MSc MD FRCPC FRAeS is a Professor of Anesthesiology, Perioperative and Pain Medicine in the Cumming School of Medicine and an Adjunct Professor of Psychology in the Faculty of Arts, University of Calgary. She is the chair of IBRACE, the International Board for Research into Aircraft Crash Events. (https://en.wikipedia.org/wiki/International_Board_for_Research_into_Aircraft_Crash_Events) Amongst other publications, she is the co-author, with Linda Campbell, of An Investigation into Serial Deaths During Oral Surgery. In: Selby H (Ed) The Inquest Handbook, Leichardt, NSW, Australia: The Federation Press; 1998;150-169 and co-author with Drs. Keith Anderson, Christopher Pysyk and JN Armstrong of Anaesthesia. In: Freckelton I and Selby H (Eds). Expert Evidence. Thomson Reuters, Australia, 2017. E-Mail : [email protected] Ⅰ. Introduction Barely more than a century has passed since the first passenger was carried by an aircraft. That individual was Henri Farman, an Anglo-French painter turned aviator. He was a passenger on a flight piloted by Léon Delagrange, a French sculptor turned aviator, and aircraft designer and manufacturer.

The Transition to Safety Management Systems (SMS) in Aviation: Is Canada Deregulating Flight Safety?, 81 J

Journal of Air Law and Commerce Volume 81 | Issue 1 Article 3 2002 The Transition to Safety Management Systems (SMS) in Aviation: Is Canada Deregulating Flight Safety? Renè David-Cooper Federal Court of Appeal of Canada Follow this and additional works at: https://scholar.smu.edu/jalc Recommended Citation Renè David-Cooper, The Transition to Safety Management Systems (SMS) in Aviation: Is Canada Deregulating Flight Safety?, 81 J. Air L. & Com. 33 (2002) https://scholar.smu.edu/jalc/vol81/iss1/3 This Article is brought to you for free and open access by the Law Journals at SMU Scholar. It has been accepted for inclusion in Journal of Air Law and Commerce by an authorized administrator of SMU Scholar. For more information, please visit http://digitalrepository.smu.edu. THE TRANSITION TO SAFETY MANAGEMENT SYSTEMS (SMS) IN AVIATION: IS CANADA DEREGULATING FLIGHT SAFETY? RENÉ DAVID-COOPER* ABSTRACT In 2013, the International Civil Aviation Organization (ICAO) adopted Annex 19 to the Chicago Convention to implement Safety Management Systems (SMS) for airlines around the world. While most ICAO Member States worldwide are still in the early stages of introducing SMS, Canada became the first and only ICAO country in 2008 to fully implement SMS for all Canadian-registered airlines. This article will highlight the documented shortcomings of SMS in Canada during the implementation of the first ever SMS framework in civil aviation. While air carriers struggled to understand and introduce SMS into their operations, this article will illustrate how Transport Canada (TC) did not have the knowledge or the necessary resources to properly guide airline operators during this transition, how SMS was improperly tailored for smaller air carriers, and how the Canadian government canceled safety inspections around the country, leaving many air carriers partially unregulated.

Human Factors Industry News ! Volume XV

Aviation Human Factors Industry News, Volume XV, Issue 02, January 20, 2019. Hello all, To subscribe send an email to: [email protected] In this week's edition of Aviation Human Factors Industry News you will read the following stories: ★37 years ago: The horror and heroism of Air Florida Flight 90 ★Florida man decapitated in freak helicopter accident identified, authorities say ★Crash: Saha B703 at Fath on Jan 14th 2019, landed at wrong airport ★Mechanic's error blamed for 2017 German helicopter crash in Mali ★ACCIDENT CASE STUDY BLIND OVER BAKERSFIELD ★11 Aviation Quotes That Coulda Save Your Life ★Aviation Safety Network Publishes 2018 Accident Statistics ★Australian Safety Authority Seeks Proposal On Fatigue Rules … at Bedford a few years ago was a perfect example of professionals who had drifted over years into a very unsafe operation. This crew was literally an “accident waiting to happen” and never through conscious decision. This very same process fooled a very smart bunch of engineers and managers at NASA and brought down two US space shuttles! This process is built into our human software. 37 years ago: The horror and heroism of Air Florida Flight 90 A U.S. Park Police helicopter pulls two people from the wreckage of an Air Florida jetliner that crashed into the Potomac River when it hit a bridge after taking off from National Airport in Washington, D.C., on Jan. 13, 1982. On last Sunday, the nation's capital was pummeled with up to 8 inches of snow, the first significant winter storm in Washington in more than three years.

Canadian Transportation Research Forum / Le Groupe de Recherches sur les Transports au Canada

CANADIAN TRANSPORTATION RESEARCH FORUM / LE GROUPE DE RECHERCHES SUR LES TRANSPORTS AU CANADA 20th ANNUAL MEETING PROCEEDINGS TORONTO, ONTARIO MAY 1985 AT THE CROSSROADS - THE FINANCIAL HEALTH OF CANADA'S LEVEL I AIRLINES by R.W. Lake, J.M. Serafin and A. Mozes, Research Branch, Canadian Transport Commission INTRODUCTION In 1981 the Air Transport Committee and the Research Branch of the Canadian Transport Commission on a joint basis, and in conjunction with the major Canadian airlines (who formed a Task Force), undertook a programme of studies concerning airline pricing and financial performance. This paper is based on a CTC Working Paper which presented current data on the topic, and interpreted them in the context of the financial and regulatory circumstances faced by the airlines as of July 1984. ECONOMIC PERSPECTIVE The trends illustrated in Figure 1 suggest that air transportation may have reached the stage of a mature industry with air travel/transport no longer accounting for an increasing proportion of economic activity. This milestone in the industry's life cycle, if in fact it has been reached, would suggest that an apparent fall in the income elasticity of demand for air travel between 1981 and 1983 could persist. As data reflecting the apparent demand resurgence of 1984 become available, the picture may change, but [Figure 1: Air transport revenue as a percent of gross national product] [Figure 2: Air fare indices]

363 Part 238—Contracts With

Immigration and Naturalization Service, Justice § 238.3 (2) The country where the alien was born; (3) The country where the alien has a residence; or (4) Any country willing to accept the alien. (c) Contiguous territory and adjacent islands. Any alien ordered excluded who boarded an aircraft or vessel in foreign contiguous territory or in any adjacent island shall be deported to such foreign contiguous territory or adjacent island if the alien is a native, citizen, subject, or national of such foreign contiguous territory or adjacent island, or if the alien has a residence in such foreign contiguous territory or adjacent island. Otherwise, the alien shall be deported, in the first instance, to the country in which is located the port at which the alien embarked for such foreign contiguous territory or adjacent island. …mented on Form I-420. The contracts with transportation lines referred to in section 238(c) of the Act shall be made by the Commissioner on behalf of the government and shall be documented on Form I-426. The contracts with transportation lines desiring their passengers to be preinspected at places outside the United States shall be made by the Commissioner on behalf of the government and shall be documented on Form I-425; except that contracts for irregularly operated charter flights may be entered into by the Executive Associate Commissioner for Operations or an Immigration Officer designated by the Executive Associate Commissioner for Operations and having jurisdiction over the location where the inspection will take place. [57 FR 59907, Dec. 17, 1992]

Canada's Aviation Hall of Fame 4 New Inductees

Volume 36, No. 1 THE Winter Issue January 2018 Canada’s Aviation Hall of Fame Contents of this Issue: John Maris Gen. (ret’d) Dr. Gregory Powell Paul Manson John Bogie 4 New Inductees 45th Annual Induction Ceremony & Dinner Canada’s Aviation Hall of Fame BOARD OF DIRECTORS: (Volunteers) Rod Sheridan, ON Chairman Chris Cooper-Slipper, ON Vice Chairman Miriam Kavanagh, ON Secretary Panthéon de l’Aviation du Canada Michael Bannock, ON Treasurer Bruce Aubin, ON CONTACT INFORMATION: Gordon Berturelli, AB Denis Chagnon, QC Canada’s Aviation Hall of Fame Lynn Hamilton, AB P.O. Box 6090 Jim McBride, AB Wetaskiwin, AB T9A 2E8 Canada Anna Pangrazzi, ON Craig Richmond, BC Phone: 780.312.2065 / Fax: 780.361.1239 David Wright, AB Website: www.cahf.ca Email: see listings below: Tyler Gandam, Mayor of Wetaskiwin, AB (ex-officio) STAFF: Executive Director: Robert Porter 780.312.2073 OPERATIONS COMMITTEE: (Wetaskiwin) ([email protected]) (Volunteers) Collections Manager: Aja Cooper 780.312.2084 ([email protected]) David Wright, Chairman Blain Fowler, Past Chairman John Chalmers OFFICE HOURS: Denny May Tuesday - Friday: 9 am - 4:30 pm Margaret May Closed Mondays Mary Oswald Robert Porter CAHF DISPLAYS (HANGAR) HOURS: Aja Cooper Tuesday to Sunday: 10 am - 5 pm Noel Ratch (non-voting, Closed Mondays representing Reynolds-Alberta Museum) Winter Hours: 1 pm - 4 pm (Please call to confirm opening times.) THE FLYER COMMITTEE: To change your address, Mary Oswald, Editor ([email protected]) contact The Hall at 780.312.2073 780.469.3547 John Chalmers, CAHF Historian Janice Oppen, Design and Layout PORTRAITS: 2 Information about The Hall Robert Bailey 3 Chairman’s Message 3 A Treasure in our Collection PATRON: 4 Announcing the New Inductees To be announced 5 Memories of Early Days 6-7 Memories of Induction Gala 2017 8-9 Speaking of Members 9 A New Memorial Airport February: April: 10 The Plant Feb. -

Canada's Aviation Hall of Fame to Induct Four New Members And

Canada’s Aviation Hall of Fame to Induct Four New Members and Honour a Belt of Orion Recipient in 2017 Wetaskiwin, Alberta – November 14, 2016… Canada’s Aviation Hall of Fame (CAHF) will induct four new members, and recognize a Belt of Orion recipient, at its 44th annual gala dinner and ceremony, to be held Thursday June 15, 2017, at Vancouver International Airport. The new members are: • James Erroll Boyd: WWI pilot and co-founder of the Air Scouts of Canada • Robert John Deluce: Aviation executive; Founder of Porter Airlines • Daniel A Sitnam: Aviation executive; Founder of Helijet Airways and Pacific Heliport Services • Rogers Eben Smith: NASA and NRC test pilot; RCAF Pilot • Royal Canadian Air Force "Golden Hawks" aerobatic team: Belt of Orion Award for Excellence CAHF inductees are selected for their contributions to Canada’s development through their integral roles in the nation’s aviation history. This year’s inductees will join the ranks of the 224 esteemed men and women inducted since the Hall’s formation in 1973. Rod Sheridan, CAHF chairman of the board of directors, said, “The CAHF is proud to honour these four well-deserving individuals for their significant contributions to Canadian aviation, and to Canada’s development as a nation. “Our 2017 inductees come from backgrounds that span the width of Canada’s unique aviation industry. Aviation has brought Canadians together as a country, unlike any other form of transport. Our new inductees reflect that cohesion through their pioneering activities and spirit.” James Erroll Boyd was an early entrant into the Royal Naval Air Service from the Canadian Infantry, flew anti-Zeppelin operations over the UK and coastal patrols from Dunkirk until being interned in the Netherlands.