Resurrecting Submodularity for Neural Text Generation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Cartoon Network Amazone Water Themepark Tour from Pattaya Thailand, Thailand | 8 Hours | 1 - 10 Pax

Cartoon Network Amazone Water Themepark Tour from Pattaya, Thailand | 8 hours | 1 - 10 Pax. Overview: Enjoy 30 state-of-the-art water rides spread out across 10 entertainment zones. After being welcomed by beloved Cartoon Network characters like Ben 10, the Powerpuff Girls, Gumball, Darwin, and others, set out to the park's diverse array of attractions. Find perfect surfing conditions in the Surfarena, or relax in the gentle, rolling sea of Mega Wave, where you can also frolic in the sun along sandy beaches. Then take to a tube and drift along the Riptide Rapids, passing an artificial jungle that emulates the Amazon Rainforest. Continue on to the Omniverse and face some of the fastest water roller coasters in the world. Ride the Omnitrix and hold on for a splash landing as you speed down a 77-foot (23-m) tower. On the Goop Loop, challenge yourself to a gravity-defying coaster; its 12-m vertical free fall propels you into an exhilarating 360-degree loop. If you're hungry, take a break in Foodville, where chefs prepare local Thai cuisine and international favorites. Bring the day's fun to a close with some live shows, which feature acrobatics, exciting stunts, digital light displays, and cartoon characters in grand action sequences. Inclusions and exclusions. Price includes: lunch (package with lunch only); hotel pick-up and drop-off (package with transfer only). Price excludes: food and drinks, unless specified; gratuities; personal expenses; locker; cabana; towel. An additional surcharge for hotel pickup outside a 3-mile (5-km) radius of the city center is applicable and payable on the day of your activity. -

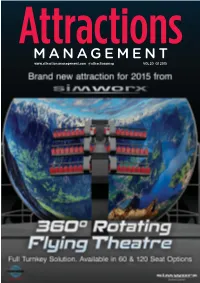

Attractions Management Issue 1 2015

www.attractionsmanagement.com @attractionsmag VOL20 Q1 2015. BEHIND THE SCENES OF THE 9/11 MUSEUM. Subscribe to the print edition: www.attractionsmanagement.com/subs. COVER IMAGE: JEWEL SAMAD/AFP/GETTY IMAGES. NWAVE PICTURES DISTRIBUTION PRESENTS: GET READY FOR THE DARKEST RIDE. NEW 3D RIDE FILM. WATCH TRAILER AT /nWavePictures. AMERICAS: Janine Baker, +1 818-565-1101, [email protected]. INTERNATIONAL: Goedele Gillis, +32 2 347-63-19, [email protected]. DragonMineRide.nWave.com [email protected] | nWave.com | /nWavePicturesDistribution | /nWave. nWave® is a registered trademark of nWave Pictures SA/NV - ©2014 nWave Pictures SA/NV - All Rights Reserved. NWAVE PICTURES DISTRIBUTION PRESENTS: NEW 4D ATTRACTION. TheHouseOfMagic4D.nWave.com. Directed by Ben Stassen & Jeremy Degruson. nWave Pictures Distribution presents MEDIEVAL MAYHEM: THE BATTLE BEGINS. NEW 4D ATTRACTION. KnightsQuest.nWave.com. Directed by James Rodgers. -

POLIN to Supply Waterslides for CN Amazone Waterpark

FOR IMMEDIATE RELEASE. March 20, 2012, Istanbul, Turkey. POLIN to supply waterslides for Cartoon Network AMAZONE Waterpark! (Istanbul, Turkey), (March 20, 2012) – POLIN recently announced that it is the official waterslide supplier for the new Cartoon Network AMAZONE waterpark, currently under construction in idyllic Bang Saray, close to Pattaya, on the East Coast of Thailand. Scheduled to open in 2013, Cartoon Network AMAZONE is a nod to the lush Amazon Rainforest while infusing the world's most popular animated series and toon heroes, including Ben 10, The Powerpuff Girls, Johnny Bravo and The Amazing World of Gumball. The park will feature signature attractions including a gigantic family wave pool, a winding adventure river, speed-racing slides, family raft slides and one of the world's largest interactive water play fortresses for kids. Polin will supply unique and thrilling waterslides throughout the park, all impressively themed. Cartoon Network AMAZONE is being developed in conjunction with Amazon Falls Co. Ltd, an attractions and resorts developer in Thailand. Phase one of the waterpark is already underway and when completed will cover 14 acres of coastal plains in Bang Saray, just 15 minutes from Pattaya Beach City. Liakat Dhanji, Chairman, Amazon Falls Co. Ltd, said, "Thailand has excellent tourism credentials, a vibrant culture with a vast history and also the most welcoming people in the world. The addition of Cartoon Network AMAZONE marks Thailand's very first internationally branded water theme park. Cartoon Network is a global household name, and with this strategic alliance, we have no doubt that by welcoming our guests to meet their favourite Cartoon Network heroes, it will be a great draw for our park." "Polin is pleased to be working with Cartoon Network AMAZONE waterpark on creating thrilling themed waterslides," says Alper Tunga Cetiner, Project Manager from Polin. -

Leading Edge Award

Leading Edge Award. Awarded to park and supplier members who, through their combined efforts, have brought a project, product, service or program to fruition, thereby creating industry innovation and leadership. WWA Awards Program 2014. Leading Edge Award: Aquaventure at Atlantis The Palm and WhiteWater West Industries Ltd. for the “Tower of Poseidon”. Atlantis The Palm, Dubai, United Arab Emirates; WhiteWater West Industries Ltd., Richmond, BC, Canada. Aquaventure Waterpark is the largest and most dynamic water-themed attraction in Dubai and the number one waterpark in the Middle East and Europe. Encompassing 17 beachfront hectares along the apex of The Palm’s crescent, the waterpark is known as an over-the-top adventure playground. Awe-inspiring water rides, a record-breaking zipline circuit and non-stop water play are the signature elements of the magnificent waterscape, located at Atlantis, The Palm. The park also stands out by being the only waterpark in the UAE that has 700 meters of white sand beach. In 2013, Atlantis, The Palm raised the stakes in entertainment with the spectacular “Tower of Poseidon”, a $27 million (US), 40-meter-tall attraction. The Kerzner development team worked with Whitewater West Industries Ltd. to create the ultimate new waterpark adventure. Using 3,300 square meters of concrete and 550 tons of reinforcing steel, the development rose swiftly. Filled with record-breaking rides, these high-octane rides allow friends and guests to compete for thrills. Attractions within “Tower of Poseidon” include: “Poseidon’s Revenge,” with two terrifying trapdoors; “Slitherines,” the world’s first ever dual waterslides within a waterslide; 6-person rafting adventures “Aquaconda” and “Zoomerango,” which feature the world’s largest waterslide tube and massive vertical wall that allows guests to experience weightlessness. -

Ramayana Water Park Cartoon Network Amazone

Family Fun in Pattaya: 3 Days 2 Nights [Travel Period: until 31 Oct 2018]. A family getaway: enjoy a relaxed beach holiday with your family, take in Thailand's most beautiful seaside sunsets, have fun at the water park, and share happy family moments. RamaYana Water Park / Cartoon Network Amazone: splash to your heart's content at the water park. Package includes: round-trip economy class air ticket between Hong Kong and Bangkok on Cathay Pacific (CX); 2 nights' hotel accommodation with daily breakfast; round-trip transfers between Bangkok airport and the hotel by private car; one-day admission ticket to RamaYana Water Park or Cartoon Network Amazone Water Park (choose one); round-trip transfers between the hotel and the water park by shuttle bus. Mövenpick Siam Hotel Pattaya (2-night stay), www.movenpick.com/en/asia/thailand/pattaya/moevenpick-siam-hotel-pattaya: the hotel is shaped like a sailboat and sits next to the yacht club at Jomtien Beach in southern Pattaya, about 15 minutes by car from central Pattaya. It is removed from the bustle of Pattaya, has beautiful coastline views, and offers five-star facilities, a "secret luxury" experience. Prices per person, breakfast and admission included (2 adults / 3rd adult / child without bed): Deluxe Sea View, $3420+ / 3350+ / 2030+, maximum occupancy 2 adults + 1 child, 506 - 538 sq ft, not applicable 2 – 7/1; Premier Sea View, $3490+ / 3350+ / 2030+, maximum occupancy 2 adults + 1 child or 3 adults, 538 sq ft; Junior Suite, 4150+ / 3410+ / 2750+, maximum occupancy 2 adults + 2 children or 3 adults, 721 - 764 sq ft suite; Executive Suite Sea View, 4400+ / 3410+ / 2750+, maximum occupancy 2 adults + 2 children or 3 adults, 893 sq ft large suite. Executive lounge privileges, including breakfast, snacks and cocktails, apply to the Junior Suite and Executive Suite only. Family Offer: children aged 6 or under may share their parents' bed and have breakfast free of charge; children aged 7 - 11 receive a free extra bed, with breakfast charged separately. Package prices are based on low-season rates; a surcharge applies to departures in peak season, on the eve of holidays, or during major festivals. Please confirm details with our staff. For the package terms and conditions, see the last page. Ref No.: 39075 (Supersedes Ref No.: 38911) -

Attractions Management Issue 2 2012

Attractions Management, www.attractionsmanagement.com, VOL 17 Q2 2012. Telus Spark: Canada's first purpose-built science centre for decades. Bart de Boer: Efteling's CEO celebrates the park's 60th anniversary. 2012 Olympics, Diamond Jubilee, Cutty Sark, Harry Potter, Titanic Belfast. TITANIC BELFAST: COMMEMORATING THE WORLD'S MOST FAMOUS SHIP. KIDZANIA KUALA LUMPUR: A NEW ROLE MODEL FOR CHILDREN'S ATTRACTIONS? Read Attractions Management online: www.attractionsmanagement.com/digital, follow us on twitter @attractionsmag. THEME PARKS | SCIENCE CENTRES | ZOOS & AQUARIUMS | MUSEUMS & HERITAGE | TECHNOLOGY | DESTINATIONS | EXPOS | WATERPARKS | VISITOR ATTRACTIONS | GALLERIES | ENTERTAINMENT. Inventing the Future: Triotech is proud to introduce the next generation of immersive and interactive thrill rides. Our flagship products offer intense and realistic ride film experiences via a multi seat motion platform, interactive gameplay and realtime graphics, creating excellent revenue for operators. Triotech Head Office: 2030 Pie-IX Blvd., Suite 307, Montreal (Qc), Canada H1V 2C8, +1 514-354-8999. International Sales: Gabi Salabi, [email protected]. China Sales: Weitao Liu, [email protected]. WWW.TRIO-TECH.COM. ATTRACTIONS MANAGEMENT EDITOR’S LETTER: CELEBRATING THE UK. ON THE COVER: Titanic Belfast, p38. READER SERVICES, SUBSCRIPTIONS: Denise Gildea +44 (0)1462 471930. Although Attractions Management has a completely global readership and we balance our coverage across all world regions in every edition, we hope you’ll forgive us for hijacking part of this very special edition to celebrate a unique year for the UK, with our focus on the Best of British. -

Attractions Management Q3 2014

Attractions Management, www.attractionsmanagement.com, VOL 19 Q3 2014. THE LOST TEMPLE: a new Immersive Tunnel ride from now open at Movie Park Germany. Subscribe to the print edition: WWW.LEISUREMEDIA.COM/SUBS. NWAVE PICTURES DISTRIBUTION PRESENTS: NEW 4D ATTRACTION. Special Screenings, nWave Booth #8519, September 23-25, 2014. AMERICAS: Janine Baker, +1 818-565-1101, [email protected]. INTERNATIONAL: Goedele Gillis, +32 2 347-63-19, [email protected]. WATCH TRAILER AT /nWavePictures. TheHouseOfMagic.nWave.com. Directed by Ben Stassen & Jeremy Degruson. [email protected] | nWave.com | /nWavePicturesDistribution | /nWave. ©2014 nWave Pictures SA/NV. - All Rights Reserved | nWave is a registered trademark of nWave Pictures SA/NV. NEW 4D ATTRACTION: HauntedMansion.nWave.com. Directed by Ben Stassen & Jeremy Degruson. NEW 3D RIDE FILM: DRIVE FOR YOUR LIFE! GlacierRace.nWave.com. Directed by James Rodgers. ©2014 nWave Pictures SA/NV. - ©2014 Red Star Films Ltd. - All Rights Reserved. blog.attractionsmanagement.com. HERE COMES CHINA: As China opens up to the West, the nation’s attractions are booming, with existing attractions reporting record attendances and new museums, theme parks and science centres planned. -

Thailand Welcomes World's First Cartoon Network Amazone

Thailand welcomes world’s first Cartoon Network Amazone waterpark. Monday 13 October, 2014. Bangkok, 13 October, 2014 – The highly anticipated Cartoon Network Amazone waterpark opened its doors on 3 October, 2014. This one-of-a-kind, first-in-the-world waterpark boasts 30 world-class water rides and slides, live Cartoon Network entertainment shows, mascot meet and greets, thrill attractions and interactive water play fun. Mr. Thawatchai Arunyik, the Governor of the Tourism Authority of Thailand (TAT), said, “The Cartoon Network Amazone waterpark will certainly become a must-visit attraction for tourists visiting Thailand, especially Pattaya. Cartoon Network’s characters are well-known and much-loved around the world, and we appreciate Amazon Falls and Turner International Asia Pacific for bringing the life of Cartoon Network’s heroes to Thailand in this one-of-a-kind attraction.” (Photo captions: Cartoonival Zone; Jake Jump, Adventure Zone.) Cartoon Network Amazone is Thailand’s first international water theme park, as well as the new home away from home of Cartoon Network’s beloved heroes, such as the Powerpuff Girls, Ben 10 and Adventure Time. The facility operates under a licensing agreement between Amazon Falls, the developer of the waterpark, and Turner International Asia Pacific, the owner and operator of Cartoon Network. The waterpark has been designed to be a ‘daycation’ destination, an attraction like no other in Thailand or in Asia, a place where music and live entertainment with Cartoon Network characters are as important as the water attractions, according to Mr. Liakat Dhanji, Chairman of Amazon Falls. Cartoon Network Amazone waterpark features 10 themed entertainment zones and also boasts captivating live and multimedia entertainment that incorporates the latest in interactive smart-screen technology. -

Spotlight on Safety

IAAPA EXPO RECAP — PAGES 43-54 © TM Your Amusement Industry NEWS Leader! Vol. 17 • Issue 10 JANUARY 2014 SPOTLIGHT ON SAFETY AIMS seminar offering 100-plus hours of new classes NAARSO’s 27th Annual Forum STORY: Pam Sherborne at the Doubletree by Hilton heads to Charlotte and Carowinds [email protected] Orlando at SeaWorld. Some of the new class- STORY: ORLANDO, Fla. — This Pam Sherborne es include Wood Pole In- [email protected] year’s Amusement Industry spections; Introduction to Manufacturers and Suppliers Ziplines, Operations and ORLANDO, Fla. — Clyde (AIMS) International Safety Standards; Operations Duck Wagner, 2013 president of Na- Seminar, set to run January Dynasty Style; and My At- tional Association Ride Safety 12-17, will offer an abundance traction Got Hacked – What Officials (NAARSO), was very of new classes. is Your Attraction Network pleased with the success of The annual AIMS Security? the association’s newest op- International Safety Seminar “We also offer a great erations certification program is a comprehensive safety- son for the AIMS seminar, autism class as well as a introduced during the 2013 training experience for said of the 360 hours of class- class about ADA for Aquat- Annual Ride Safety Inspection individuals responsible es offered this year, 100 plus ics,” Beazley said. “We are Forum. for the care and safety of hours are new classes. He anticipated that this also very pleased to be able County Convention Center in the amusement industry’s “Registration for atten- to offer a Level I program in second year for the operations certification program as part of Orlando. -

Media Marketing

2016 Media & Marketing { PLANNER }. The Official Magazine of the World Waterpark Association. June 2015: Dollywood’s Splash Country Turns 15! Pigeon Forge, Tenn., U.S.A.; What A Wonderful SPLASHWORLD! In Provence, France; Evolution Of A Waterpark At Illinois’ Bensenville Water Park; A Wonderful World Of Aquatics: The City of Henderson, Nevada offers a great mix of aquatic venues. October/November 2015, CONVENTION ISSUE: Splashing With Tropical Style! At Tianmu Lake Water World in Jiangsu Province, China; Getting Totally Soaked! Regina Travelodge Hotel & Conference Centre adds Soaked! Waterpark; Safari Splash: Giraffes, Snakes, Elephants & Waterslides! at Texas’ Fort Worth Zoo; One Drop At A Time, Part II: Understanding water consumption ratios using five waterpark case studies. MAGAZINE: The ONLY monthly magazine devoted to waterparks & resorts. WWA ONLINE CONNECT: A fast, convenient way to reach qualified buyers in the water leisure industry. Why {advertise?} “World Waterpark Magazine” and WWA Online Connect offer you the inside track to water leisure buyers and decision makers. More waterpark professionals read our publications than any others. That is because our publications offer news, best practices, park case studies and more, all contributed by members and other trusted industry sources. When you advertise with the WWA, you have the potential to reach an audience comprised of waterpark owners, operators, developers & administrators who represent 10,000+ readers on the forefront of the water leisure industry, many of whom make the purchasing decisions for their departments in their facilities. Testimonial: “Some of the best products we use in our park have come from the park profiles and articles found in ‘World Waterpark Magazine.’ It’s a must-read for any waterpark -

Amusementtodaycom

2012 PARK PREVIEW — SEE PAGES 7–10 TM Vol. 16 • Issue 2 MAY 2012 A tale of two (more) towers Wild Eagle soars over Dollywood Carowinds, Kings Dominion introduce Ride fundraiser nets newest icons: Mondial WindSeekers $36,000 for American Eagle Foundation STORY: Scott Rutherford ride manufacturer Mon- STORY: Tim Baldwin [email protected] dial, the new $6.5 million [email protected] WindSeekers’ three minute CHARLOTTE, N.C. and cycle begins when the cen- PIGEON FORGE, Tenn. — DOSWELL, Va. — Follow- tral carriage, supporting 32 After a media day on March 23 ing the debut of WindSeeker two-passenger seats, slowly and a preview for season pass tower swing rides at four of starts to rotate while climb- holders, Dollywood officially its parks in 2011, Cedar Fair ing to the 301 foot level. debuted North America’s first continues the trend this sea- Once aloft and traveling at wing coaster by Bolliger & Ma- son with the introduction of full tilt, riders experience a billard on March 24. Namesake WindSeekers for two of its sense of weightlessness as Dolly Parton was on hand with southeastern properties. the arms extend outward at a specifically written song for Amusement Today was on a 45-degree angle and reach the premier of Wild Eagle — hand for the launch of both speeds up to 30 mph. “Be an Eagle, Not a Chicken.” The bragging rights to hav- WindSeekers, which took The first wing coaster in the ing the first of a new breed of place at Carowinds (March Carowinds U.S. opened on March 23, coaster in the nation should 31) and Kings Dominion Ranking as the tallest 2012 at Dollywood, follow- be accolade enough, but the (April 6). -

Cartoon Network Amazone

291217 3 DAYS 2 NIGHTS BANGKOK FAMILY FUN (CARTOON NETWORK AMAZONE). MINIMUM 2 PAX TO GO [FITBKK3D003]. Valid till 31st MARCH 2017.

PACKAGE RATE PER PERSON: [CASH ONLY]

| HOTEL | LOCATION | TWIN | CHILD EXTRA BED | CHILD NO BED |
| --- | --- | --- | --- | --- |
| 3* GRAND ALPHINE PRATUNAM | PRATUNAM | 299 | 245 | 179 |
| 4* ASIA HOTEL BANGKOK | PRATUNAM | 339 | 275 | 179 |
| 4* REMBRANDT HOTEL & TOWER SERVICED APARTMENT | SUKHUMVIT | 425 | 339 | 190 |
| 4* LIT BANGKOK HOTEL | MBK | 459 | 365 | 200 |
| 5* GRANDE CENTRE POINT HOTEL PLOENCHIT BANGKOK | CENTRAL WORLD | 566 | 445 | 210 |

INCLUDES: 2 nights’ accommodation; private return airport transfer as stated; Cartoon Network Amazone with return transfer (includes 1 day pass).

EXCLUDES: return international airfare; tipping; Thailand visa; travel insurance.

REMARKS: Package rates are subject to change due to room availability.

DAY 1 ARRIVAL BANGKOK: Upon arrival at Bangkok airport, meet and transfer to hotel for check-in. Then free at your own leisure for the rest of the day.

DAY 2 BANGKOK – CARTOON NETWORK AMAZONE (Breakfast): Pick up from hotel at 9.30am. Cartoon Network Amazone is the world’s first Cartoon Network-themed waterpark. Here you can splash out with all of your favorite Cartoon Network friends, including Ben 10 and his aliens, Adventure Time’s Finn and Jake, The Powerpuff Girls, Johnny Bravo and many more. Explore 10 themed zones catered to suit the preferred experience of every guest. Blast down heart-pounding high-speed water rollercoasters, enjoy a relaxing meander down the Riptide Rapids River, or splash about at Cartoonival, the world’s largest aqua playground boasting 150 different water features. And there’s always the Megawave pool if you feel like some beach-inspired action - even Ben 10’s alien Humungousaur would be impressed by its size! Once you’ve finished splashing and sliding, be mesmerized by dynamic dancers and acrobats in