Classification of Electronic Club-Music

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Electro House 2015 Download Soundcloud

Electro house 2015 download soundcloud. Comment by Czerniak Maciek: Lala bon. Comment by Czerniak Maciek: Yeaa sa pette. Users who like Kemal Coban Electro House Club Mix; users who reposted Kemal Coban Electro House Club Mix; playlists containing Kemal Coban Electro House Club Mix. Electro House by EDM Joy. Part of @edmjoy. Worldwide. 5 Tracks. Followers. Stream Tracks and Playlists from Electro House @ EDM Joy on your desktop or mobile device. Best EDM Remixes Of Popular Songs - New Electro & House Remix; New Electro & House Best Of EDM Mix; New Electro & House Music Dance Club Party Mix #5; New Dirty Party Electro House Bass Ibiza Dance Mix [ FREE DOWNLOAD -> Click "Buy" ] BASS BOOSTED CAR MUSIC MIX BEST EDM, BOUNCE, ELECTRO HOUSE. FREE tracks! PLEASE follow and I will continue to post songs :) ALL CREDIT GOES TO THE PRODUCERS OF THE SONG, THIS PAGE IS FOR PROMOTIONAL USE ONLY. I WILL ONLY POST SONGS THAT ARE PUT UP FOR FREE. 11 Tracks. Followers. Stream Tracks and Playlists from Free Electro House on your desktop or mobile device. Listen to the best DJs and radio presenters in the world for free. Free Download Music & Free Electronic Dance Music Downloads and Free new EDM songs and tracks. Get free Electro, House, Trance, Dubstep, Mixtape downloads. Exclusive download: New Electro & House Best of Party Mashup, Bootleg, Remix Dance Mix. Click here to download New Electro & House Best of Party Mashup, Bootleg, Remix Dance Mix. Maximise Network, Eric Clapman and GANGSTA-HOUSE on Soundcloud. Follow +1 entry. I've already followed Maximise Music, Repost Media, Maximise Network, Eric. -

FunKids with the Black Music Big Band & Brodas Junior

Teaching dossier: FunKids with the Black Music Big Band & Brodas Junior. Auditori de Girona. Contents: Introduction; Artistic credits; Black Music Big Band (BMBB); What is a big band: a large jazz orchestra; Urban dance. Introduction. Who doesn't move to funky music? A fresh, rhythmic offering from Auditori Obert in which pupils will discover the essence of Black music, with funk and soul as the protagonists. An educational concert about the big band, its instruments and its style, with explanations in Catalan and touches of English (easily understood by the children). All of this comes from the young musicians of the BMBB alongside the dancers of Brodas Junior, who will make FunKids a concert full of spectacle and rhythm! A show with more than 45 participants on stage, musicians and dancers together. Who doesn't move to funky music? Here comes a fresh, rhythmic offering from Auditori Obert in which the youngest audience members will discover the essence of Black music, with funk and soul as the protagonists. An educational concert that tells us about the big band, its instruments and style, the world of urban dance, and more. What will we get to know at FunKids? - Its instruments: the saxophone, trumpet and trombone sections and the rhythm section - We will hear the different voices of Black music - We will experience different urban dance styles such as locking, popping, b-boying and hip hop. And all of this with explanations in Catalan and touches of English. -

EDM (Dance Music): Disco, Techno, House, Raves… ANTHRO 106 2018

EDM (Dance Music): Disco, Techno, House, Raves… ANTHRO 106 2018. Rebellion, genre, drugs, freedom, unity, sex, technology, place, community. Disco • Disco marked the dawn of dance-based popular music. • Growing out of the increasingly groove-oriented sound of early '70s and funk, disco emphasized the beat above anything else, even the singer and the song. • Disco was named after discotheques, clubs that played nothing but music for dancing. • Most of the discotheques were gay clubs in New York. • The seventies witnessed the flowering of gay clubbing, especially in New York. For the gay community in this decade, clubbing became 'a religion, a release, a way of life'. The camp, glam impulses behind the upsurge in gay clubbing influenced the image of disco in the mid-Seventies so much that it was often perceived as the preserve of three constituencies - blacks, gays and working-class women - all of whom were even less well represented in the upper echelons of rock criticism than they were in society at large. • Before the word disco existed, the phrase discotheque records was used to denote music played in New York private rent or after hours parties like the Loft and Better Days. The records played there were a mixture of funk, soul and European imports. These "proto disco" records are the same kind of records that were played by Kool Herc on the early hip hop scene. - STARS and CLUBS • Larry Levan was the first DJ-star and stands at the crossroads of disco, house and garage. He was the legendary DJ who for more than 10 years held court at the New York night club Paradise Garage. -

The THREE's a CROWD

The THREE’S A CROWD exhibition covers areas ranging from Hong Kong to Slavic vixa parties. It looks at modern-day streets and dance floors, examining how – through their physical presence in the public space – bodies create temporary communities, how they transform the old reality and create new conditions. And how many people does it take to make a crowd. The autonomous, affect-driven and confusing social body is a dynamic 19th-century construct that stands in opposition to the concepts of capitalist productivity, rationalism, and social order. It is more of a manifestation of social disorder: the crowd as a horde, the crowd as a swarm. But it happens that three is already a crowd. The club culture remembers cases of criminalizing the rhythmic movement of at least three people dancing to the music based on repetitive beats. We also remember this pandemic year’s spring and autumn events, when the hearts of the Polish police beat faster at the sight of crowds of three and five people. Bodies always function in relation to other bodies. To have no body is to be nobody. Bodies shape social life in the public space through movement and stillness, gathering and distraction. Also by absence. Regardless of whether it is a grassroots form of bodily (dis)organization, self-choreographed protests, improvised social dances, artistic, activist or artivist actions, bodies become a field of social and political struggle. Together and apart. International Festival of Urban Art OUT OF STH VI: SPACE ABSORBENCY. ARTISTS: ARCHIVES OF PUBLIC PROTESTS -

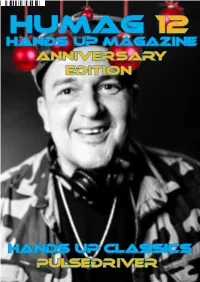

Hands up Classics Pulsedriver Hands up Magazine Anniversary Edition

HUMAG 12. Hands Up Magazine Anniversary Edition. Hands Up Classics: Pulsedriver. Welcome, The time has finally come: the 12th edition of the magazine has appeared. It took a long time and a lot has happened, but it is done. There are again specials, including an article about Pulsedriver; three pages on which you can read everything that has changed at HUMAG over time and how the design has changed; one page about one of the biggest hands up fans, namely Gabriel Chai from Singapore, who also works as a DJ; an exclusive interview with Nick Unique; and, under locations, an article about the Der Gelber Elefant discotheque in Mühlheim an der Ruhr. For the first time, this magazine is also available for download in English from our website for our friends from Poland and Singapore and from many other countries. We keep our fingers crossed that the events will continue in 2021 and that, hopefully, the Easter Rave and the TechnoBase birthday will take place. Since I was really getting started again professionally and was also ill, there were unfortunately long delays in the publication of the magazine. I apologize for this. I wish you happy reading. Have a nice Christmas and a happy new year. Your Richard Gollnik ([email protected]). Something Christmas by Marious: The new song Christmas Time by Marious is now available on all download portals. Content. Crossword puzzle: A crossword puzzle about the hands up scene with the chan- What is hands up? An explanation from Hands Up. -

Quantifying Music Trends and Facts Using Editorial Metadata from the Discogs Database

QUANTIFYING MUSIC TRENDS AND FACTS USING EDITORIAL METADATA FROM THE DISCOGS DATABASE. Dmitry Bogdanov, Xavier Serra. Music Technology Group, Universitat Pompeu Fabra. [email protected], [email protected] ABSTRACT: While a vast amount of editorial metadata is being actively gathered and used by music collectors and enthusiasts, it is often neglected by music information retrieval and musicology researchers. In this paper we propose to explore Discogs, one of the largest databases of such data available in the public domain. Our main goal is to show how large-scale analysis of its editorial metadata can raise questions and serve as a tool for musicological research on a number of example studies. The metadata that we use describes music releases, such as albums or EPs. It includes information about artists, tracks and their durations, genre and style, format (such as vinyl, CD, or digital files), year and country of each release. Discogs metadata contains information about music releases (such as albums or EPs) including artist names, track list including track durations, genre and style, format (e.g., vinyl or CD), year and country of release. It also contains information about artist roles and relations as well as recording companies and labels. The quality of the data in Discogs is considered to be high among music collectors because of its strict guidelines, moderation system and a large community of involved enthusiasts. The database contains contributions by more than 347,000 people. It contains 8.4 million releases by 5 million artists covering a wide range of genres and styles (although the database was initially focused on Electronic music). Remarkably, it is the largest open database containing -
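The release fields listed in this excerpt (artists, track list with durations, genre and style, format, year, country) can be pictured as a simple record. The following is a minimal sketch under stated assumptions: the class names, field names, duration unit, and sample records are all invented for illustration and do not reflect the actual Discogs data format or the authors' code.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class Track:
    """One track-list entry (hypothetical structure, not the Discogs schema)."""
    title: str
    duration_seconds: int  # assumed unit, chosen only for this sketch


@dataclass
class Release:
    """A release record holding the fields named in the excerpt."""
    title: str
    artists: List[str]
    genres: List[str]
    styles: List[str]
    release_format: str  # e.g. "Vinyl", "CD", "Digital"
    year: int
    country: str
    tracks: List[Track] = field(default_factory=list)


def releases_per_year(releases: List[Release]) -> Counter:
    """Count how many releases fall in each year."""
    return Counter(release.year for release in releases)


def mean_track_duration(release: Release) -> float:
    """Average track length in seconds; 0.0 when no tracks are listed."""
    if not release.tracks:
        return 0.0
    total = sum(track.duration_seconds for track in release.tracks)
    return total / len(release.tracks)


# Invented sample records, used only to exercise the functions above.
SAMPLE_RELEASES = [
    Release("Example EP", ["Example Artist"], ["Electronic"], ["House"],
            "Vinyl", 1995, "UK",
            [Track("Side A", 360), Track("Side B", 420)]),
    Release("Example Album", ["Another Artist"], ["Electronic"], ["Techno"],
            "CD", 1995, "Germany", [Track("Intro", 90)]),
    Release("Example Single", ["Example Artist"], ["Electronic"], ["Trance"],
            "Digital", 2001, "Netherlands"),
]

if __name__ == "__main__":
    print(releases_per_year(SAMPLE_RELEASES))
    print(mean_track_duration(SAMPLE_RELEASES[0]))
```

Counting releases per year or averaging track durations are examples of the simple aggregate questions such metadata could support; any real study would work from the database's own export format.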

Progressive House Music Download

Progressive house music download. Into progressive house music? Explore our selection of progressive house tracks on Beatport - the world's largest store for DJs. Listen to Free download, New Music, Progressive House, Deep House, Tech House shows. Under Waves with Damon Marshall & Adnan Jakubovic (Mar) Damon Marshall - Ocean Sound Podcast (Apr 13). Progressive House. Waste Music Busters · Immortal Lover (In My Nex ANDREW BAYER FEAT ALISON MAY · Immortal Lover (In My Nex Anjunabeats. Nicky Romero returns to the sound that started it all on his newest track "Duality," and it's guaranteed to bring back fond memories of earlier dance music days. Progressive house mixes Download MP3 for free. Also Live Progressive house Sets, Podcasts and Electronic Music mix shows. Also subgenres from. Create your free download gates with www.doorway.ru - Get free SoundCloud reposts to Stream Tracks and Playlists from Progressive House on your desktop or. Welcome to 8tracks radio: free music streaming for any time, place, or mood. tagged with house, progressive, and electronic. You can also download one of our. Posts about Progressive House written by f.a.r.e.s. Download page (Bandcamp) Ambient piece, this shows how well he's been all along at making music.” Best Livesets & Dj Sets from Progressive House Free Electronic Dance Music download from various sources like Zippyshare www.doorway.ru Soundcloud and. Progressive house is a subgenre of house music. It emerged in the early s. -

320+ Halloween Songs and Albums

320+ Halloween Songs and Albums Over 320 Songs for Halloween Theme Rides in Your Indoor Cycling Classes Compiled by Jennifer Sage, updated October 2014 Halloween presents a unique opportunity for some really fun musically themed classes—the variety is only limited by your imagination. Songs can include spooky, dark, or classic-but-cheesy Halloween tunes (such as Monster Mash). Or you can imagine the wide variety of costumes and use those themes. I’ve included a few common themes such as Sci-Fi and Spy Thriller in my list. Over the years I’ve gotten many of these song suggestions from various online forums, other instructors, and by simply searching online music sources for “Halloween”, “James Bond”, “Witch”, “Ghost” and other key words. This is my most comprehensive list to date. If you have more song ideas, please email them to me so I can continually update this list for future versions. [email protected]. This year’s playlist contains 50 new specific song suggestions and numerous new album suggestions. I’ve included a lot more from the “darkwave” and “gothic” genres. I’ve added “Sugar/Candy” as its own theme. Sources: It’s impossible to list multiple sources for every song but to speed the process up for you, we list at least one source so you don’t spend hours searching for these songs. As with music itself, you have your own preference for downloading sources, so you may want to check there first. Also, some countries may not have the same music available due to music rights. -

Progressive House: from Underground to the Big Room

PROGRESSIVE HOUSE: FROM UNDERGROUND TO THE BIG ROOM. A Bachelor thesis written by Sonja Hamhuis. Student number: 5658829. Study: Ba. Musicology. Course year: 2017-2018. Semester: 1. Faculty: Humanities. Supervisor: dr. Michiel Kamp. Date: 22-01-2017. ABSTRACT: The electronic dance industry consists of many different subgenres. All of them have their own history and have developed over time, causing uncertainties about the actual definition of these subgenres. Among those is progressive house, a British genre by origin, that has evolved in stages over the past twenty-five years and eventually gained popularity with the masses. Within the field of musicology, these processes have not yet been covered. Therefore, this research provides insights into the historical process of the defining and popularisation of progressive house music. By examining interviews, media articles and academic literature, and supported by case studies on Leftfield’s ‘Not Forgotten’ and the Swedish House Mafia’s ‘One (Your Name)’, it hypothesizes that there is a connection between the musical changes in progressive house music and its popularisation. The results also show that the defining of progressive house music has been - and is - an extensive process fuelled by center collectivities and gatekeepers like fan communities, media, record labels, and artists. Moreover, it suggests that the same center collectivities and gatekeepers, along with the on-going influences of digitalisation, played a part in the popularisation of the previously named genres. Altogether, this thesis aims to open doors for future research on genre defining processes and electronic dance music culture. TABLE OF CONTENTS: Introduction; Chapter 1 - On the defining of genre -

Formal Devices of Trance and House Music: Breakdowns

FORMAL DEVICES OF TRANCE AND HOUSE MUSIC: BREAKDOWNS, BUILDUPS AND ANTHEMS. Devin Iler, B.M. Thesis Prepared for the Degree of MASTER OF MUSIC, UNIVERSITY OF NORTH TEXAS, December 2011. APPROVED: David B. Schwarz, Major Professor; Thomas Sovik, Minor Professor; Ana Alonso-Minutti, Committee Member; Lynn Eustis, Director of Graduate Studies in the College of Music; James Scott, Dean of the College of Music; James D. Meernik, Acting Dean of the Toulouse Graduate School. Iler, Devin. Formal Devices of Trance and House Music: Breakdowns, Buildups, and Anthems. Master of Music (Music Theory), December 2011, 121 pp., 53 examples, reference list, 41 titles. Trance and house music are sub-genres within the genre of electronic dance music. The form of breakdown, buildup and anthem is the main driving force behind trance and house music. This thesis analyzes transcriptions from 22 trance and house songs in order to establish and define new terminology for formal devices used within the breakdown, buildup and anthem sections of the music. Copyright 2011 by Devin Iler. TABLE OF CONTENTS: LIST OF EXAMPLES. CHAPTER 1: INTRODUCTION (Definition of Terms/Theoretical Background; A Brief Historical Context). CHAPTER 2: TYPICAL BUILDUP FEATURES (Snare Rolls and Bass Hits; Bass + Snare + High Hat Leads and Rest Measures). CHAPTER 3: BUILDUPS WITHOUT TYPICAL FEATURES (Synth Sweeps) -

Suggested Serving: 1. WOODEN STARS 2. COLDCUT 3. HANIN ELIAS 4. WINDY & CARL 5. SOUTHERN CULTURE on the SKIDS 6. CORNERSHOP

December, 1997. That Etiquette Magazine from CiTR 101.9 fM. FREE! 1. Soupspoon 2. Dinner knife 3. Plate 4. Dessert fork 5. Salad fork 6. Dinner fork 7. Napkin 8. Bread and butter plate 9. Butter spreader 10. Tumbler. Suggested Serving: 1. WOODEN STARS 2. COLDCUT 3. HANIN ELIAS 4. WINDY & CARL 5. SOUTHERN CULTURE on the SKIDS 6. CORNERSHOP 7. CiTR ON-AIR PROGRAMMING GUIDE. December 1997, Issue 179. Features: HANIN ELIAS; WOODEN STARS; WINDY & CARL; CORNERSHOP; SOUTHERN CULTURE ON THE SKIDS; COLDCUT; GARAGESHOCK. Columns: COWSHEAD CHRONICLES; VANCOUVER SPECIAL; INTERVIEW HELL; SUBCULT.; THE KINETOSCOPE; BASSLINES; SEVEN INCH; PRINTED MATTERS; UNDER REVIEW; REAL LIVE ACTION; ON THE DIAL; CHARTS; DECEMBER DATEBOOK. Comics: BOTCHED AMPALLANG; GOOD TASTY COMIC. Cover: 'Tis THE SEASON FOR EATIN', LEAVING YER ELBOWS OFF THE TABLE AND MINDING YER MANNERS! PROPER ETIQUETTE COVER BY ARTIST TANYA SCHNEIDER. editrix: miko hoffman; art director: kenny paul; ad rep: kevin pendergraft; production manager: tristan winch; graphic design/layout: kenny, atomos, michael gaudet, tanya schneider; production: julie colero, kelly donahue, bryce dunn, andrea gin, ann goncalves, patrick gross, jenny herndier, erin hoage, christa min, katrina mcgee, sara minogue, erin nicholson, stefan udell, malcolm van deist, shane van der meer; photography/illustrations: jason da silva, ted dave, richard folgar, sydney hermant, mary hosick, kris rothstein, corin sworn; contribu © "DiSCORDER" 1997 by the Student Radio Society of the University of British Columbia. -

Various Hardstyle Legends Top 100 Mp3, Flac, Wma

Various Hardstyle Legends Top 100 mp3, flac, wma
Genre: Electronic
Album: Hardstyle Legends Top 100
Country: Netherlands
Released: 2012
Style: Hard House, Hardstyle, Jumpstyle
MP3 version RAR size: 1397 mb
FLAC version RAR size: 1279 mb
WMA version RAR size: 1228 mb
Rating: 4.7
Votes: 933
Other Formats: XM AUD AAC AA TTA RA ADX
Tracklist
1-1 – Jack & The Ripper Whores In Da House 1:30
1-2 – Da Hustler Shake It 1:16
1-3 – Ruthless & Vorwerk Whoaat? 1:03
1-4 – Digital Punk The Punk Soul Brother 1:23
1-5 – Da Emperor Nasty Bitch 1:10
1-6 – Pavo & Zany Sex 1:50
1-7 – Showtek Puta Madre 1:20
1-8 – Shithead Shithead 3002 2:08
1-9 – The Beholder & Max Enforcer Bitcrusher 1:50
1-10 – Southstylers Pwoap ! 1:16
1-11 – DJ Zany Pillzz 1:42
1-12 – The Beholder & Max Enforcer Bigger, Better, Louder! 1:27
1-13 – Technoboy Atomic (Technoboy's King Konga Mix) 1:20
1-14 – Zatox Overdose 1:43
1-15 – Max Enforcer Catchin' Up 2:20
1-16 – Donkey Rollers Hardstyle Rockers 2:01
1-17 – Kold Konexion Minus One 1:19
1-18 – A-Lusion Perfect It 1:03
1-19 – Unknown Analoq Blackout (Brennan Heart Rem!x) 1:18 (Remix – Brennan Heart)
1-20 – The Prophet Feat. Wildstylez Cold Rocking 1:29
1-21 – Frontliner Tuuduu 1:10
1-22 – Josh & Wesz Tempo Pusher 1:54
1-23 – Brian NRG How We Roll 1:27
1-24 – A-Drive Sound & E-Motion 1:02
1-25 – Luna* Meets Trilok & Chiren DHHD (The Anthem) 1:23
1-26 – Headhunterz The Power Of The Mind 1:45
1-27 – Deepack One Night In Paris 1:48
1-28 – DJ Rob Bang Bang 2:26
1-29 – Showtek Black 2008 2:03
1-30 – The Prophet & Brennan Heart Payback 1:20
1-31 – Wildstylez Revenge 1:10
1-32 – Crypsis & Sasha F Get Hit 1:55
1-33 – Deepack & Charly Lownoise Feat.