Institutionen För Datavetenskap Department of Computer and Information Science

Recommended publications

Reversing Malware [Based on Material from the Textbook]

SoftWindows 11/23/05. Reversing Malware [based on material from the textbook]. Reverse Engineering (Reversing Malware) © SERG

What is Malware?
• Malware (malicious software) is any program that works against the interest of the system’s user or owner.
• Question: Is a program that spies on the web browsing habits of the employees of a company considered malware?
• What if the CEO authorized the installation of the spying program?

Reversing Malware
• Reversing is the strongest weapon we have against the creators of malware.
• Antivirus researchers engage in reversing in order to:
  – analyze the latest malware,
  – determine how dangerous the malware is,
  – learn the weaknesses of malware so that effective antivirus programs can be developed.

Uses of Malware
• Why do people develop and deploy malware?
  – Financial gain
  – Psychological urges and childish desires to “beat the system”
  – Access to private data
  – …

Typical Purposes of Malware
• Backdoor access:
  – Attacker gains unlimited access to the machine.
• Denial-of-service (DoS) attacks:
  – Infect a huge number of machines that simultaneously try to connect to a target server, in the hope of overwhelming it and making it crash.
• Vandalism:
  – E.g., defacing a web site.
• Resource theft:
  – E.g., stealing other users’ computing and network resources, such as using a neighbor’s wireless network.
• Information theft:
  – E.g., stealing other users’ credit card numbers.

Types of Malware
• Viruses
• Worms
• Trojan Horses
• Backdoors
• Mobile code
• Adware
• Sticky software

Viruses
• Viruses are self-replicating programs that usually have a malicious intent.

DeepPanda Paging Dr. Smith, Melissa L. Markey, Esq.

DeepPanda Paging Dr. Smith. Melissa L. Markey, Esq., Hall, Render, Killian, Heath & Lyman, PC, 1512 Larimer Street, Suite 300, Denver CO 80202, 248-310-4876

A Brief Introduction… Data Breaches © Hall, Render, Killian, Heath & Lyman, P.C.

The Threats: Ransomware; Identity Theft; Business Email Compromise; Cyberstalking; Fraud, Extortion, etc.; Phishing/Whaling/Vishing/Pharming; DDoS attacks; Spoofing; Social Engineering; Botnets; Fileless Attacks; Malware; Medjacking; Logic Bombs; Dronejacking; Trojans, Worms, Viruses

The Players: FBI/Cyber Action Teams; Department of Justice; DoD/DCIS; Secret Service Electronic Crimes Task Force; US Postal Inspection Service; Internet Safety Enforcement Team; AG; State Police; Local Police; Interpol; FTC; ATF; Internet Crime Complaint Center

Is This Real? How real is the threat of cybercrime against a healthcare provider?
• Sometimes the healthcare provider is the target
• Healthcare providers are a treasure-trove of ID theft information
• Celebrity patients, public health emergencies… all are fodder for the media
• Healthcare has all the best data
• Sometimes the healthcare provider is just collateral damage
• Lots of computers to be taken over by a botnet
• Because it's there…

Hospital as a Target: Israeli Hospital Hacked; Infections Shut Down Services

A Reader’s Comment: “…It's the computer system that has a virus not the doctor. The patient knows what time their appointment's for and may already have arranged to take time off work. The doctor knows what time they have a clinic…..” “…a hospital should not be totally dependent on functioning IT systems. It sounds like the decision of an administrator totally divorced from any perception of patients' circumstances…”

It’s More Than The EMR…

Hospitals as Collateral Damage

Health care as a target: A piece of critical infrastructure

Not an Effective Security Stance

Motivations

Cyber-Crimes: A Practical Approach to the Application of Federal Computer Crime Laws

Santa Clara High Technology Law Journal, Volume 16, Issue 2, Article 1, January 2000

Cyber-Crimes: A Practical Approach to the Application of Federal Computer Crime Laws
Eric J. Sinrod, William P. Reilly

Follow this and additional works at: http://digitalcommons.law.scu.edu/chtlj
Part of the Law Commons

Recommended Citation: Eric J. Sinrod and William P. Reilly, Cyber-Crimes: A Practical Approach to the Application of Federal Computer Crime Laws, 16 Santa Clara High Tech. L.J. 177 (2000). Available at: http://digitalcommons.law.scu.edu/chtlj/vol16/iss2/1

This Article is brought to you for free and open access by the Journals at Santa Clara Law Digital Commons. It has been accepted for inclusion in Santa Clara High Technology Law Journal by an authorized administrator of Santa Clara Law Digital Commons. For more information, please contact [email protected].

ARTICLES
CYBER-CRIMES: A PRACTICAL APPROACH TO THE APPLICATION OF FEDERAL COMPUTER CRIME LAWS
Eric J. Sinrod and William P. Reilly

TABLE OF CONTENTS
I. Introduction
II. Background
  A. The State of the Law
  B. The Perpetrators – Hackers and Crackers
    1. Hackers
    2. Crackers

Computer Parasitology

Computer Parasitology
Carey Nachenberg, Symantec AntiVirus Research Center, [email protected]
Posted with the permission of Virus Bulletin, http://www.virusbtn.com/

Table of Contents
Introduction
Worm Classifications
  Worm Transport Classifications
    E-mail Worms
    Arbitrary Protocol Worms: IRC Worms, TCP/IP Worms, etc.
  Worm Launch Classifications
    Self-launching Worms
    User-launched Worms

Code Red, Code Red II, and SirCam Attacks Highlight Need for Proactive Measures

United States General Accounting Office (GAO)
Testimony Before the Subcommittee on Government Efficiency, Financial Management, and Intergovernmental Relations, Committee on Government Reform, House of Representatives
For Release on Delivery, Expected at 10 a.m. PDT, Wednesday, August 29, 2001
INFORMATION SECURITY: Code Red, Code Red II, and SirCam Attacks Highlight Need for Proactive Measures
Statement of Keith A. Rhodes, Chief Technologist
GAO-01-1073T

Mr. Chairman and Members of the Subcommittee: Thank you for inviting me to participate in today’s hearing on the most recent rash of computer attacks. This is the third time I’ve testified before Congress over the past several years on specific viruses: first, the “Melissa” virus in April 1999 and second, the “ILOVEYOU” virus in May 2000. At both hearings, I stressed that the next attack would likely propagate faster, do more damage, and be more difficult to detect and counter. Again, we are having to deal with destructive attacks that are reportedly costing billions. In the past 2 months, organizations and individuals have had to contend with several particularly vexing attacks. The most notable, of course, is Code Red but potentially more damaging are Code Red II and SirCam. Together, these attacks have infected millions of computer users, shut down Web sites, slowed Internet service, and disrupted business and government operations. They have already caused billions of dollars of damage and their full effects have yet to be completely assessed. Today, I would like to discuss the makeup and potential threat that each of these viruses pose as well as reported damages. I would also like to talk about progress being made to protect federal operations and assets from these types of attacks and the substantial challenges still ahead.

Virus Bulletin, March 2000

ISSN 0956-9979, MARCH 2000
THE INTERNATIONAL PUBLICATION ON COMPUTER VIRUS PREVENTION, RECOGNITION AND REMOVAL

Editor: Francesca Thorneloe
Technical Consultant: Fraser Howard
Technical Editor: Jakub Kaminski
Consulting Editors:
• Nick FitzGerald, Independent consultant, NZ
• Ian Whalley, IBM Research, USA
• Richard Ford, Independent consultant, USA
• Edward Wilding, Maxima Group Plc, UK

CONTENTS
COMMENT: What Support Technicians Really, Really Want (2)
NEWS & VIRUS PREVALENCE TABLE (3)
LETTERS (4)
VIRUS ANALYSES: 1. 20/20 Visio (6); 2. Kak-astrophic? (7)
OPINION: Add-in Insult to Injury (8)
EXCHANGE: Hollow Vic-tory (10)
CASE STUDY: Boeing all the Way (12)
FEATURE: The File Virus Swansong? (15)
TUTORIAL: What DDoS it all Mean? (16)
OVERVIEW: Testing Exchange (18)
PRODUCT REVIEWS: 1. FRISK F-PROT v3.06a (19); 2. NAI GroupShield v4.04 for Exchange (20)
END NOTES AND NEWS (24)

IN THIS ISSUE:
• Limited editions: Make sure you don’t miss out on the chance to own one of Virus Bulletin’s 10 year anniversary T-shirts. Details of how to order and pay are on p.3.
• Is it a bird? Is it a plane? Our corporate case study this month comes from Boeing’s Computer Security Product Manager, starting on p.12.
• Denial of service with a smile: Nick FitzGerald explains the latest menace and what you can or cannot do to avoid it, starting on p.16.
• Exchange & mark: We kick off our new series of groupware anti-virus product reviews with GroupShield for Exchange on p.20.

VIRUS BULLETIN ©2000 Virus Bulletin Ltd, The Pentagon, Abingdon, Oxfordshire, OX14 3YP, England. www.virusbtn.com /2000/$0.00+2.50 No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form without the prior written permission of the publishers.

Cyber Conflicts in International Relations: Framework and Case Studies

Cyber Conflicts in International Relations: Framework and Case Studies
Alexander Gamero-Garrido, Engineering Systems Division, Massachusetts Institute of Technology, [email protected] | [email protected]

Executive Summary

Overview

Although cyber conflict is no longer considered particularly unusual, significant uncertainties remain about its nature, scale, scope and other critical features. This study addresses a subset of these issues by developing an internally consistent framework and applying it to a series of 17 case studies. We present each case in terms of (a) its socio-political context, (b) its technical features, and (c) the outcome and inferences drawn in the sources examined. The profile of each case includes the actors, their actions, the tools they used and their power relationships, and the outcomes with inferences or observations. Our findings include:
• Cyberspace has brought in a number of new players – activists, shady government contractors – to international conflict, and traditional actors (notably states) have increasingly recognized the importance of the domain.
• The involvement of the private sector in cybersecurity (“cyber defense”) has been critical: 16 out of the 17 cases studied involved the private sector either in attack or defense.
• All of the major international cyber conflicts presented here have been related to an ongoing conflict (“attack” or “war”) in the physical domain.
• Rich industrialized countries with a highly developed ICT infrastructure are at a higher risk concerning cyber attacks.
• Distributed Denial of Service (DDoS) is by far the most common type of cyber attack.
• Air-gapped (not connected to the public Internet) networks have not been exempt from attacks.
• A perpetrator does not need highly specialized technical knowledge to intrude into computer networks.

2000 CERT Incident Notes

2000 CERT Incident Notes
CERT Division
[DISTRIBUTION STATEMENT A] Approved for public release and unlimited distribution.
http://www.sei.cmu.edu
REV-03.18.2016.0

Copyright 2017 Carnegie Mellon University. All Rights Reserved. This material is based upon work funded and supported by the Department of Defense under Contract No. FA8702-15-D-0002 with Carnegie Mellon University for the operation of the Software Engineering Institute, a federally funded research and development center. The view, opinions, and/or findings contained in this material are those of the author(s) and should not be construed as an official Government position, policy, or decision, unless designated by other documentation. References herein to any specific commercial product, process, or service by trade name, trade mark, manufacturer, or otherwise, does not necessarily constitute or imply its endorsement, recommendation, or favoring by Carnegie Mellon University or its Software Engineering Institute. This report was prepared for the SEI Administrative Agent AFLCMC/AZS 5 Eglin Street Hanscom AFB, MA 01731-2100

NO WARRANTY. THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN "AS-IS" BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT.

Please see Copyright notice for non-US Government use and distribution.

Prosecuting Computer Virus Authors: the Eedn for an Adequate and Immediate International Solution Kelly Cesare University of the Pacific, Mcgeorge School of Law

Global Business & Development Law Journal, Volume 14, Issue 1, Symposium: Biotechnology and International Law, Article 10, 1-1-2001

Prosecuting Computer Virus Authors: The Need for an Adequate and Immediate International Solution
Kelly Cesare, University of the Pacific, McGeorge School of Law

Follow this and additional works at: https://scholarlycommons.pacific.edu/globe
Part of the International Law Commons

Recommended Citation: Kelly Cesare, Prosecuting Computer Virus Authors: The Need for an Adequate and Immediate International Solution, 14 Transnat'l Law. 135 (2001). Available at: https://scholarlycommons.pacific.edu/globe/vol14/iss1/10

This Comments is brought to you for free and open access by the Journals and Law Reviews at Scholarly Commons. It has been accepted for inclusion in Global Business & Development Law Journal by an authorized editor of Scholarly Commons. For more information, please contact [email protected].

Comments
Prosecuting Computer Virus Authors: The Need for an Adequate and Immediate International Solution
Kelly Cesare

TABLE OF CONTENTS
I. INTRODUCTION
II. THE CRIME OF THE COMPUTER VIRUS
  A. The New Crime: The Computer Virus
    1. What is a Computer Virus?
    2. The Role of the Computer Virus in Criminal Law
    3. Illustrative Examples of Recent Virus Outbreaks
      a. Melissa
      b. Chernobyl

Virus Bulletin, October 2001

ISSN 0956-9979, OCTOBER 2001
THE INTERNATIONAL PUBLICATION ON COMPUTER VIRUS PREVENTION, RECOGNITION AND REMOVAL

Editor: Helen Martin
Technical Consultant: Matt Ham
Technical Editor: Jakub Kaminski
Consulting Editors:
• Nick FitzGerald, Independent consultant, NZ
• Ian Whalley, IBM Research, USA
• Richard Ford, Independent consultant, USA
• Edward Wilding, Independent consultant, UK

CONTENTS
COMMENT: A Worm by Any Other Name (2)
VIRUS PREVALENCE TABLE (3)
NEWS: 1. US Disaster – the Aftermath (3); 2. Every Trick in the Book? (3)
BOOK REVIEW: Reading Between the Worms (4)
CONFERENCE PREVIEW: AVAR 2001 (5)
VIRUS ANALYSIS: Red Turning Blue (6)
TECHNICAL FEATURE: Red Number Day (8)
FEATURES: 1. Virus Hunting in Saudi Arabia (10); 2. Viral Solutions in Large Companies (11)
FEATURE SERIES: Worming the Internet Part 1 (14)
OPINION: Sue Who? (16)
PRODUCT REVIEW: Sophos Anti-Virus and SAVAdmin (18)
END NOTES AND NEWS (24)

IN THIS ISSUE:
• Sing a Rainbow: We've had Code Red, Code Green and now Adrian Marinescu brings us an analysis of the latest addition to the rainbow of worm colours, BlueCode. See p.6.
• Arabian Nights: Eddy Willems was disconcerted to find his possessions being rifled through by Saudi customs officials, but not as horrified as when he witnessed them inserting virus-infected disks into their unprotected systems. Read the full horror story starting on p.10.
• Passing the Blame: Who should be held responsible for the damages caused by malware outbreaks, and can we sue any of them? Ray Glath ponders the legalities and shares his opinions on p.16.

VIRUS BULLETIN ©2001 Virus Bulletin Ltd, The Pentagon, Abingdon, Oxfordshire, OX14 3YP, England.

The Administrator Shortcut Guide to Email Protection

Chapter 1: Introduction
By Sean Daily, Series Editor

Welcome to The Administrator Shortcut Guide to Email Protection! The book you are about to read represents an entirely new modality of book publishing and a major first in the publishing industry. The founding concept behind Realtimepublishers.com is the idea of providing readers with high-quality books about today’s most critical IT topics, at no cost to the reader. Although this may sound like a somewhat impossible feat to achieve, it is made possible through the vision and generosity of corporate sponsors such as Sybari, who agree to bear the book’s production expenses and host the book on its Web site for the benefit of its Web site visitors. It should be pointed out that the free nature of these books does not in any way diminish their quality. Without reservation, I can tell you that this book is the equivalent of any similar printed book you might find at your local bookstore (with the notable exception that it won’t cost you $30 to $80). In addition to the free nature of the books, this publishing model provides other significant benefits. For example, the electronic nature of this eBook makes events such as chapter updates and additions, or the release of a new edition of the book possible to achieve in a far shorter timeframe than is possible with printed books. Because we publish our titles in “real-time” (that is, as chapters are written or revised by the author), you benefit from receiving the information immediately rather than having to wait months or years to receive a complete product.

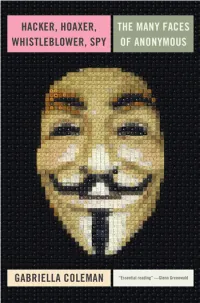

Hacker, Hoaxer, Whistleblower, Spy: the Story of Anonymous

hacker, hoaxer, whistleblower, spy: the many faces of anonymous
Gabriella Coleman
London • New York

First published by Verso 2014. © Gabriella Coleman 2014

The partial or total reproduction of this publication, in electronic form or otherwise, is consented to for noncommercial purposes, provided that the original copyright notice and this notice are included and the publisher and the source are clearly acknowledged. Any reproduction or use of all or a portion of this publication in exchange for financial consideration of any kind is prohibited without permission in writing from the publisher.

The moral rights of the author have been asserted
1 3 5 7 9 10 8 6 4 2

Verso
UK: 6 Meard Street, London W1F 0EG
US: 20 Jay Street, Suite 1010, Brooklyn, NY 11201
www.versobooks.com
Verso is the imprint of New Left Books

ISBN-13: 978-1-78168-583-9
eISBN-13: 978-1-78168-584-6 (US)
eISBN-13: 978-1-78168-689-8 (UK)

British Library Cataloguing in Publication Data: A catalogue record for this book is available from the British Library
Library of Congress Cataloging-in-Publication Data: A catalog record for this book is available from the Library of Congress

Typeset in Sabon by MJ & N Gavan, Truro, Cornwall
Printed in the US by Maple Press
Printed and bound in the UK by CPI Group Ltd, Croydon, CR0 4YY

I dedicate this book to the legions behind Anonymous: those who have donned the mask in the past, those who still dare to take a stand today, and those who will surely rise again in the future.