September 10, 2009

Recommended publications

Approval of George W. Bush: Economic and Media Impacts Gino Tozzi Jr

Wayne State University Dissertations 1-1-2011 Approval of George W. Bush: Economic and media impacts Gino Tozzi Jr. Wayne State University. Follow this and additional works at: http://digitalcommons.wayne.edu/oa_dissertations Part of the Mass Communication Commons, and the Political Science Commons Recommended Citation Tozzi Jr., Gino, "Approval of George W. Bush: Economic and media impacts" (2011). Wayne State University Dissertations. Paper 260. This Open Access Dissertation is brought to you for free and open access by DigitalCommons@WayneState. It has been accepted for inclusion in Wayne State University Dissertations by an authorized administrator of DigitalCommons@WayneState. APPROVAL OF GEORGE W. BUSH: ECONOMIC AND MEDIA IMPACTS by GINO J. TOZZI JR. DISSERTATION Submitted to the Graduate School of Wayne State University, Detroit, Michigan in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY 2011 MAJOR: POLITICAL SCIENCE Approved by: [chair and committee signature lines] © COPYRIGHT BY GINO J. TOZZI JR. 2011 All Rights Reserved DEDICATION To my Father ACKNOWLEDGMENTS I want to thank my committee chair Professor Ewa Golebiowska for the encouragement and persistence on only accepting the best from me in this process. I also owe my committee of Professor Ronald Brown, Professor Jodi Nachtwey, and Professor David Martin a debt of gratitude for their help and advisement in this endeavor. All of them were instrumental in keeping my focus narrowed to produce the best research possible. I also owe appreciation to the commentary, suggestions, and research help from my wonderful wife Courtney Tozzi. This project was definitely more enjoyable with her encouragement and help. -

Mitch McConnell Looks Poised to Win a Senate Race in Kentucky Which May Be the Most Expensive in U.S

blogs.lse.ac.uk http://blogs.lse.ac.uk/usappblog/2014/10/14/mitch-mcconnell-looks-poised-to-win-a-senate-race-in-kentucky-which-may-be-the-most-expensive-in-u-s-history/ Mitch McConnell looks poised to win a Senate race in Kentucky which may be the most expensive in U.S. history. Kentucky’s Senate race has so far been a hard-fought one, with Democrat Alison Lundergan Grimes hoping to unseat the current Senate Minority Leader, Mitch McConnell. Despite McConnell’s relatively low popularity ratings, Anne Cizmar argues that Grimes faces an uphill battle, having already had to distance herself from President Obama. She writes that barring a shake-up to the campaign, McConnell looks on course to win a race which may see more than $100 million spent between the two candidates. Unseating a U.S. Senator is a steep uphill battle. Since the Carter-Reagan election of 1980, a full 75 percent of Senate incumbents have survived their six-yearly appearance on the ballot, with 91 percent winning reelection in 2012. The incumbent’s popularity isn’t always an indicator of trouble—good news for Kentucky’s Mitch McConnell, who seems likely to win his hard-fought battle with Alison Lundergan Grimes despite his Obama-low job approval ratings. McConnell, a Republican and 30-year incumbent, is the current Senate Minority Leader. He’s not well liked in Kentucky, polling at around 34 percent job approval in the state. But the state is increasingly Republican; 5 out of 6 House of Representatives members from Kentucky are now from the GOP, and Kentucky has voted for the Republican presidential candidate in every election since 1996. -

FOR IMMEDIATE RELEASE July 9, 2014 The Centrist Project Voice

FOR IMMEDIATE RELEASE July 9, 2014 The Centrist Project Voice Endorses Susan Collins, Larry Pressler, Michelle Nunn, Greg Orman and Jill Bossi for U.S. Senate Candidates Focused on Core Issues of Broad Importance to Americans Washington, DC — The Centrist Project Voice, the nation’s first political action committee supporting candidates ready to set aside partisan politics and focus on the core issues of critical importance to all Americans, today announced that it has endorsed the following candidates running for U.S. senate seats this fall: Susan Collins of Maine, Larry Pressler of South Dakota, Michelle Nunn of Georgia, Greg Orman of Kansas, and Jill Bossi of South Carolina. "The Centrist Project Voice is pleased to be able to support Susan Collins, Larry Pressler, Michelle Nunn, Greg Orman, and Jill Bossi in their bids to serve the American people by focusing on the critical and mainstream issues that face our country," said Centrist Project Founder Charles Wheelan, a senior lecturer and policy fellow at the Rockefeller Center at Dartmouth College and the bestselling author of Naked Economics. "Each of these candidates embody the Centrist Principles, (fiscal responsibility, environmental stewardship, social tolerance, economic opportunity and a pragmatic approach to solving core policy challenges) as being the bedrock for how to approach public policy matters in the Senate in order to break the partisan deadlock that grips Congress and move America forward." "Our nation needs different kinds of leaders—people [who] will fight to end the point scoring and political bickering that is going on right now in Washington," Nunn said. "We need more leaders who will bring common-sense and a collaborative sensibility to our broken political process." “We are on the verge of something truly historic,” said Larry Pressler. -

Electoral Order and Political Participation: Election Scheduling, Calendar Position, and Antebellum Congressional Turnout

Electoral Order and Political Participation: Election Scheduling, Calendar Position, and Antebellum Congressional Turnout Sara M. Butler Department of Political Science UCLA Los Angeles, CA 90095-1472 and Scott C. James Associate Professor Department of Political Science UCLA Los Angeles, CA 90095-1472 (310) 825-4442 An earlier version of this paper was presented at the Annual Meeting of the Midwest Political Science Association, April 3-6, 2008, Chicago, IL. Thanks to Matt Atkinson, Kathy Bawn, and Shamira Gelbman for helpful comments. ABSTRACT Surge-and-decline theory accounts for an enduring regularity in American politics: the predictable increase in voter turnout that accompanies on-year congressional elections and its equally predictable decrease at midterm. Despite the theory’s wide historical applicability, antebellum American political history offers a strong challenge to its generalizability, with patterns of surge-and-decline nowhere evident in the period’s aggregate electoral data. Why? The answer to this puzzle lies with the institutional design of antebellum elections. Today, presidential and on-year congressional elections are everywhere same-day events. By comparison, antebellum states scheduled their on-year congressional elections in one of three ways: before, after, or on the same day as the presidential election. The structure of antebellum elections offers a unique opportunity—akin to a natural experiment—to illuminate surge-and-decline dynamics in ways not possible by the study of contemporary congressional elections alone. Utilizing quantitative and qualitative materials, our analysis clarifies and partly resolves this lack of fit between theory and historical record. It also adds to our understanding of the effects of political institutions and electoral design on citizen engagement. -

Brooks Pierce Election Update, March 4, 2020

Brooks Pierce Election Update, March 4, 2020 03.04.2020 North Carolina Voters Cast Primary Ballots Over 2.1 million North Carolina voters cast primary election ballots on March 3 and during the early voting period. Most races have been decided, but a few may require runoffs. In races where no candidate receives 30% of the vote, the second place finisher may call for a runoff. Given that many political observers see North Carolina as a “purple” state, the November elections are expected to be hotly contested. While the official results have not yet been certified and the possibility for recounts or runoffs in some races remains… Here’s what we know: President: President Donald Trump (R) won the Republican contest with 94% and Democrat Joe Biden won his party’s primary with 43% to 24% for Bernie Sanders, 13% for Michael Bloomberg and 10% for Elizabeth Warren. Governor: Democratic Gov. Roy Cooper and Republican Lt. Gov. Dan Forest both won their party’s primary by large margins and will face off in November. Lt. Governor: Republican Mark Robinson appears to have won with 33% and there may be a runoff on the Democratic side between Wake County Rep. Yvonne Holley (27%) and Buncombe County Sen. Terry Van Duyn (20%). Attorney General: Democratic Attorney General Josh Stein will face Republican winner Jim O’Neill, the Forsyth County District Attorney, who received 47%. Agriculture: Republican Commissioner Steve Troxler will face Democratic winner Jenna Wadsworth, a Wake County Soil and Water Supervisor, who received 54%. Auditor: Democratic Auditor Beth Wood will face Republican Tony Street, a Brunswick County businessman, who received 56%. -

Marginals [PDF]

SUPRC / USA TODAY Kansas General Election Voters FINAL KANSAS SEPTEMBER TOPLINES Area Code (N=500; n, %): KC Area 163, 32.60; East/Topeka 109, 21.80; Wichita/South 105, 21.00; West 123, 24.60. {INSERT QUOTAS} INTRO SECTION> Hello, my name is __________ and I am conducting a survey for Suffolk University and I would like to get your opinions on some questions about the upcoming elections in Kansas. Would you be willing to spend five minutes answering some brief questions? (quota – youngest in that household). Are you currently registered to vote? (N=500; n, %): Male 236, 47.20; Female 264, 52.80. S2 Thank You. How likely are you to vote in the Kansas election for U.S. Senate and Governor – very likely, somewhat likely, not very likely, or not at all likely? (N=500; n, %): Very Likely 481, 96.20; Somewhat Likely 19, 3.80. 1. Are you currently enrolled as a Democrat, Republican, Unaffiliated/Independent, Libertarian or something else? (N=500; n, %): Democrat -

OPENING PANDORA'S BOX David Cameron's Referendum Gamble On

OPENING PANDORA’S BOX David Cameron’s Referendum Gamble on EU Membership Credit: The Economist. By Christina Hull Yale University Department of Political Science Adviser: Jolyon Howorth April 21, 2014 Abstract This essay examines the driving factors behind UK Prime Minister David Cameron’s decision to call a referendum if the Conservative Party is re-elected in 2015. It addresses the persistence of Euroskepticism in the United Kingdom and the tendency of Euroskeptics to generate intra-party conflict that often has dire consequences for Prime Ministers. Through an analysis of the relative impact of political strategy, the power of the media, and British public opinion, the essay argues that addressing party management and electoral concerns has been the primary influence on David Cameron’s decision and contends that Cameron has unwittingly unleashed a Pandora’s box that could pave the way for a British exit from the European Union. Acknowledgments First, I would like to thank the Bates Summer Research Fellowship, without which I would not have had the opportunity to complete my research in London. To Professor Peter Swenson and the members of The Senior Colloquium, Gabe Botelho, Josh Kalla, Gabe Levine, Mary Shi, and Joel Sircus, who provided excellent advice and criticism. To Professor David Cameron, without whom I never would have discovered my interest in European politics. To David Fayngor, who flew halfway across the world to keep me company during my summer research. To my mom for her unwavering support and my dad for his careful proofreading. And finally, to my adviser Professor Jolyon Howorth, who worked with me on this project for over a year and a half. -

Tennessee Mayors Growing Civic Engagement

JANUARY 2020 Tennessee Mayors Growing Civic Engagement: How Local Leaders Can Leverage Their Office to Engage Voters Introduction For over a decade, Tennessee has consistently trailed most other states in voter registration and turnout. Participation in local elections is particularly low: Voter turnout fell below 25 percent in recent elections in our state's four largest cities. As a state with a proud civic history, Tennessee has an important opportunity to examine practices, from election administration to civic engagement, that may offer room for improvement. Systemic reforms, such as policies that modernize and secure election machines and voter-registration databases, are critical, but so too are efforts that better connect voters to their communities and elected representatives. Although state leaders, election officials, community groups and individual residents all have roles to play, city and county mayors are uniquely suited to promote civic engagement at the community level. This report highlights Tennessee Mayors Growing Civic Engagement (TMGCE), a new cohort of city and county mayors who collectively committed to making civic engagement a priority in their communities. Within these pages, readers will learn why mayors are well-placed to lead civic engagement efforts, be able to review specific examples from the participating Tennessee leaders and have access to tools and templates to build a civic-engagement action plan all their own. -

District Policy Group Provides Top-Line Outcomes and Insight, with Emphasis on Health Care Policy and Appropriations, Regarding Tuesday’s Midterm Elections

District Policy Group provides top-line outcomes and insight, with emphasis on health care policy and appropriations, regarding Tuesday’s midterm elections. Election Outcome and Impact on Outlook for 114th Congress: With the conclusion of Tuesday’s midterm elections, we have officially entered that Lame Duck period of time between the end of one Congress and the start of another. Yesterday’s results brought with them outcomes that were both surprising and those that were long-anticipated. For the next two years, the House and Senate will be controlled by the Republicans. However, regardless of the predictions that pundits made, the votes are in, Members of the 114th Congress (2015-2016) have been determined, and we can now begin to speculate about what these changes will mean for business interests and advocacy organizations. Even though we now have a Republican majority in Congress, for the next two years, President Obama remains resident at 1600 Pennsylvania Avenue. Although President Obama will be a Lame Duck President, he still has issues and priority policies he wishes to pursue. Many other Lame Duck presidents have faced Congresses controlled by the opposite party and how a President responds to the challenge often can determine his legacy. Given the total number of Republican pick-ups in the House and Senate, we anticipate the GOP will feel emboldened to pursue its top policy priorities; as such, we do not suspect that collaboration and bipartisanship will suddenly arrive at the Capitol. We anticipate the Democrats will work hard to try to keep their caucus together, but this may prove challenging for Senate Minority Leader Reid, especially with the moderate Democrats and Independents possibly deciding to ally with the GOP. -

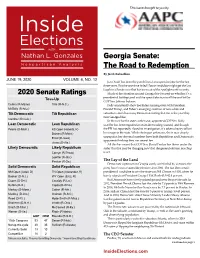

June 19, 2020 Volume 4, No

This issue brought to you by Georgia Senate: The Road to Redemption By Jacob Rubashkin JUNE 19, 2020 VOLUME 4, NO. 12 Jon Ossoff has been the punchline of an expensive joke for the last three years. But the one-time failed House candidate might get the last laugh in a Senate race that has been out of the spotlight until recently. Much of the attention around Georgia has focused on whether it’s a presidential battleground and the special election to fill the seat left by GOP Sen. Johnny Isakson. Polls consistently show Joe Biden running even with President Donald Trump, and Biden’s emerging coalition of non-white and suburban voters has many Democrats feeling that this is the year they turn Georgia blue. In the race for the state’s other seat, appointed-GOP Sen. Kelly Loeffler has been engulfed in an insider trading scandal, and though the FBI has reportedly closed its investigation, it’s taken a heavy toll on her image in the state. While she began unknown, she is now deeply unpopular; her abysmal numbers have both Republican and Democratic opponents thinking they can unseat her. All this has meant that GOP Sen. David Perdue has flown under the radar. But that may be changing now that the general election matchup is set. [Sidebar: 2020 Senate Ratings, with categories Toss-Up, Tilt Democratic, Tilt Republican, Lean Democratic, Lean Republican, Likely Democratic, Likely Republican. Races listed: Collins (R-Maine), Tillis (R-N.C.), McSally (R-Ariz.), Gardner (R-Colo.), Peters (D-Mich.), KS Open (Roberts, R), Daines (R-Mont.), Ernst (R-Iowa), Jones (D-Ala.), Cornyn (R-Texas). The category of each race is not recoverable from the extracted text.] -

Emerge Speaker Bios

Emerge Speaker Bios Jonathan Beatty Jonathan Beatty is a corporate analyst turned Social Entrepreneur from Hazard, Kentucky. He graduated with honors from the Gatton College of Business and Economics with a double major in Marketing and Management. Over his college career, Jonathan was very active in Lexington, serving in a multitude of leadership positions within Alpha Phi Alpha Fraternity, Inc., the National Association of Black Accountants, and Alpha Kappa Psi business fraternity. In addition, he regularly volunteered with Big Brothers/Big Sisters, the Ronald McDonald House, the Salvation Army, and Habitat for Humanity. After graduation, Jonathan went on to earn a M.B.A. as a Lyman T. Johnson Fellow, and later began his career as a Senior Analyst at Procter & Gamble in Cincinnati, Ohio. After almost seven years with P&G, Jonathan left the company to pursue his dream of working in San Francisco, California where he served as a Finance Manager for McKesson Corporation and Big Heart Pet Brands. During his time in San Francisco, Jonathan was responsible for providing financial leadership for McKesson’s $3 Billion Technology segment, and developing key strategies while at Big Heart Pet Brands. Jonathan left the corporate realm and founded Servonta Strategic Philanthropy to create positive, measurable, and sustainable social change. Shawn Burns Shawn Burns is a community-engaged businessman in Lexington. Prior to his current business consulting work, he spent several years in mega event venues with Aramark, Levy and Keeneland. His roles included Director of Purchasing, Operations and Assistant GM at Paul Brown Stadium, Keeneland and various PGA and ATP venues. -

Who Is the Attorney General's Client?

WHO IS THE ATTORNEY GENERAL’S CLIENT? William R. Dailey, CSC* Two consecutive presidential administrations have been beset with controversies surrounding decision making in the Department of Justice, frequently arising from issues relating to the war on terrorism, but generally giving rise to accusations that the work of the Department is being unduly politicized. Much recent academic commentary has been devoted to analyzing and, typically, defending various more or less robust versions of “independence” in the Department generally and in the Attorney General in particular. This Article builds from the Supreme Court’s recent decision in Free Enterprise Fund v. Public Co. Accounting Oversight Board, in which the Court set forth key principles relating to the role of the President in seeing to it that the laws are faithfully executed. This Article draws upon these principles to construct a model for understanding the Attorney General’s role. Focusing on the question, “Who is the Attorney General’s client?”, the Article presumes that in the most important sense the American people are the Attorney General’s client. The Article argues, however, that that client relationship is necessarily a mediated one, with the most important mediating force being the elected head of the executive branch, the President. The argument invokes historical considerations, epistemic concerns, and constitutional structure. Against a trend in recent commentary defending a robustly independent model of executive branch lawyering rooted in the putative ability and obligation of executive branch lawyers to alight upon a “best view” of the law thought to have binding force even over plausible alternatives, the Article defends as legitimate and necessary a greater degree of presidential direction in the setting of legal policy.