Case 1:17-cr-00130-JTN ECF No. 53 Filed 02/23/18 PageID.2039 Page 1 of 10

Recommended publications

A Denial the Death of Kurt Cobain the Seattle

A DENIAL THE DEATH OF KURT COBAIN THE SEATTLE POLICE DEPARTMENT’S SUBSTANDARD INVESTIGATION & THE REPERCUSSIONS TO JUSTICE by BREE DONOVAN A Capstone Submitted to The Graduate School-Camden Rutgers, The State University, New Jersey in partial fulfillment of the requirements for the degree of Masters of Arts in Liberal Studies Under the direction of Dr. Joseph C. Schiavo and approved by _________________________________ Capstone Director _________________________________ Program Director Camden, New Jersey, December 2015 CAPSTONE ABSTRACT A Denial The Death of Kurt Cobain The Seattle Police Department’s Substandard Investigation & The Repercussions to Justice By: BREE DONOVAN Capstone Director: Dr. Joseph C. Schiavo Kurt Cobain (1967-1994), musician, artist, songwriter, and founder of the Rock and Roll Hall of Fame band Nirvana, was dubbed “the voice of a generation”; a moniker he wore like his faded cardigan sweater, but with more than some disdain. Cobain’s journey to the top of the Billboard charts was much more Dickensian than many of the generations of fans may realize. During his all too short life, Cobain struggled with physical illness, poverty, undiagnosed depression, a broken family, and the crippling isolation and loneliness brought on by drug addiction. Cobain may have shunned the idea of fame and fortune but like many struggling young musicians (who would become his peers) coming up in the blue collar, working class suburbs of Aberdeen and Tacoma, Washington State, being on the cover of Rolling Stone magazine wasn’t a nightmare image. Cobain, with his unkempt blond hair, polarizing blue eyes, and fragile appearance is a modern-punk-rock Jesus; a model example of the modern-day hero.

ACM's FY14 Annual Report

ACM’s annual report for FY14 DOI:10.1145/2691599 ACM’s FY14 Annual Report ACM Council: PRESIDENT Vinton G. Cerf; VICE PRESIDENT Alexander L. Wolf; SECRETARY/TREASURER Vicki L. Hanson; PAST PRESIDENT Alain Chesnais; SIG GOVERNING BOARD CHAIR Erik Altman; PUBLICATIONS BOARD CO-CHAIRS Jack Davidson, Joseph A. Konstan; MEMBERS-AT-LARGE Eric Allman, Ricardo Baeza-Yates, Cherri Pancake, Radia Perlman, Mary Lou Soffa, Eugene Spafford, Salil Vadhan; SGB COUNCIL REPRESENTATIVES Brent Hailpern. As I write this letter, ACM has just issued a landmark announcement revealing the monetary level for the ACM A.M. Turing Award will be raised this year to $1 million, with all funding provided by Google Inc. The news made the global rounds in record time, bringing worldwide visibility to the award and the Association. Long recognized as the equivalent to the Nobel Prize for computer science, ACM’s Turing Award now carries the financial clout to stand on the same playing field with the most esteemed scientific and cultural prizes honoring game changers whose contributions have transformed the world. This really is an extraordinary time to be part of the world’s largest educational and scientific society in computing. ...puter science and mathematics. I was honored to be a participant in this inaugural event; I found it an inspirational gathering of innovators and understudies who will be the next award recipients. This year also marked the publication of highly anticipated ACM-IEEE-CS collaboration Computer Science Curricula 2013 and the acclaimed Rebooting the Pathway to Success: Preparing Students for Computer Workforce Needs in the United States, an exhaustive report from ACM’s Education Policy Committee that focused on IT

Software Technical Report

A Technical History of the SEI Larry Druffel January 2017 SPECIAL REPORT CMU/SEI-2016-SR-027 SEI Director’s Office Distribution Statement A: Approved for Public Release; Distribution is Unlimited http://www.sei.cmu.edu Copyright 2016 Carnegie Mellon University This material is based upon work funded and supported by the Department of Defense under Contract No. FA8721-05-C-0003 with Carnegie Mellon University for the operation of the Software Engineering Institute, a federally funded research and development center. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the United States Department of Defense. References herein to any specific commercial product, process, or service by trade name, trade mark, manufacturer, or otherwise, does not necessarily constitute or imply its endorsement, recommendation, or favoring by Carnegie Mellon University or its Software Engineering Institute. This report was prepared for the SEI Administrative Agent AFLCMC/PZM 20 Schilling Circle, Bldg 1305, 3rd floor Hanscom AFB, MA 01731-2125 NO WARRANTY. THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN “AS-IS” BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT. [Distribution Statement A] This material has been approved for public release and unlimited distribution.

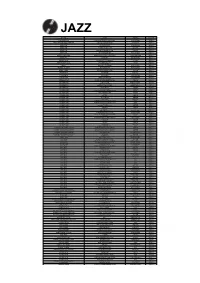

Order Form Full

JAZZ ARTIST TITLE LABEL RETAIL ADDERLEY, CANNONBALL SOMETHIN' ELSE BLUE NOTE RM112.00 ARMSTRONG, LOUIS LOUIS ARMSTRONG PLAYS W.C. HANDY PURE PLEASURE RM188.00 ARMSTRONG, LOUIS & DUKE ELLINGTON THE GREAT REUNION (180 GR) PARLOPHONE RM124.00 AYLER, ALBERT LIVE IN FRANCE JULY 25, 1970 B13 RM136.00 BAKER, CHET DAYBREAK (180 GR) STEEPLECHASE RM139.00 BAKER, CHET IT COULD HAPPEN TO YOU RIVERSIDE RM119.00 BAKER, CHET SINGS & STRINGS VINYL PASSION RM146.00 BAKER, CHET THE LYRICAL TRUMPET OF CHET JAZZ WAX RM134.00 BAKER, CHET WITH STRINGS (180 GR) MUSIC ON VINYL RM155.00 BERRY, OVERTON T.O.B.E. + LIVE AT THE DOUBLET LIGHT 1/T ATTIC RM124.00 BIG BAD VOODOO DADDY BIG BAD VOODOO DADDY (PURPLE VINYL) LONESTAR RECORDS RM115.00 BLAKEY, ART 3 BLIND MICE UNITED ARTISTS RM95.00 BROETZMANN, PETER FULL BLAST JAZZWERKSTATT RM95.00 BRUBECK, DAVE THE ESSENTIAL DAVE BRUBECK COLUMBIA RM146.00 BRUBECK, DAVE - OCTET DAVE BRUBECK OCTET FANTASY RM119.00 BRUBECK, DAVE - QUARTET BRUBECK TIME DOXY RM125.00 BRUUT! MAD PACK (180 GR WHITE) MUSIC ON VINYL RM149.00 BUCKSHOT LEFONQUE MUSIC EVOLUTION MUSIC ON VINYL RM147.00 BURRELL, KENNY MIDNIGHT BLUE (MONO) (200 GR) CLASSIC RECORDS RM147.00 BURRELL, KENNY WEAVER OF DREAMS (180 GR) WAX TIME RM138.00 BYRD, DONALD BLACK BYRD BLUE NOTE RM112.00 CHERRY, DON MU (FIRST PART) (180 GR) BYG ACTUEL RM95.00 CLAYTON, BUCK HOW HI THE FI PURE PLEASURE RM188.00 COLE, NAT KING PENTHOUSE SERENADE PURE PLEASURE RM157.00 COLEMAN, ORNETTE AT THE TOWN HALL, DECEMBER 1962 WAX LOVE RM107.00 COLTRANE, ALICE JOURNEY IN SATCHIDANANDA (180 GR) IMPULSE -

Computer Viruses and Malware Advances in Information Security

Computer Viruses and Malware Advances in Information Security Sushil Jajodia Consulting Editor Center for Secure Information Systems George Mason University Fairfax, VA 22030-4444 email: [email protected] The goals of the Springer International Series on ADVANCES IN INFORMATION SECURITY are, one, to establish the state of the art of, and set the course for future research in information security and, two, to serve as a central reference source for advanced and timely topics in information security research and development. The scope of this series includes all aspects of computer and network security and related areas such as fault tolerance and software assurance. ADVANCES IN INFORMATION SECURITY aims to publish thorough and cohesive overviews of specific topics in information security, as well as works that are larger in scope or that contain more detailed background information than can be accommodated in shorter survey articles. The series also serves as a forum for topics that may not have reached a level of maturity to warrant a comprehensive textbook treatment. Researchers, as well as developers, are encouraged to contact Professor Sushil Jajodia with ideas for books under this series. Additional titles in the series: HOP INTEGRITY IN THE INTERNET by Chin-Tser Huang and Mohamed G. Gouda; ISBN-10: 0-387-22426-3 PRIVACY PRESERVING DATA MINING by Jaideep Vaidya, Chris Clifton and Michael Zhu; ISBN-10: 0-387-25886-8 BIOMETRIC USER AUTHENTICATION FOR IT SECURITY: From Fundamentals to Handwriting by Claus Vielhauer; ISBN-10: 0-387-26194-X IMPACTS AND RISK ASSESSMENT OF TECHNOLOGY FOR INTERNET SECURITY: Enabled Information Small-Medium Enterprises (TEISMES) by Charles A.

An Interview With

An Interview with LANCE HOFFMAN OH 451 Conducted by Rebecca Slayton on 1 July 2014 George Washington University Washington, D.C. Charles Babbage Institute Center for the History of Information Technology University of Minnesota, Minneapolis Copyright, Charles Babbage Institute Lance Hoffman Interview 1 July 2014 Oral History 451 Abstract This interview with security pioneer Lance Hoffman discusses his entrance into the field of computer security and privacy (including earning a B.S. in math at the Carnegie Institute of Technology, interning at SDC, and earning a PhD at Stanford University) before turning to his research on computer security risk management as a Professor at the University of California–Berkeley and George Washington University. He also discusses the relationship between his PhD research on access control models and the political climate of the late 1960s, and entrepreneurial activities ranging from the creation of a computerized dating service to the starting of a company based upon the development of a decision support tool, RiskCalc. Hoffman also discusses his work with the Association for Computing Machinery and IEEE Computer Society, including his role in helping to institutionalize the ACM Conference on Computers, Freedom, and Privacy. The interview concludes with some reflections on the current state of the field of cybersecurity and the work of his graduate students. This interview is part of a project conducted by Rebecca Slayton and funded by an ACM History Committee fellowship on “Measuring Security: ACM and the History of Computer Security Metrics.” Slayton: So to start, please tell us a little bit about where you were born, where you grew up.

The Meaning of Velvet Jennifer Jean Wright Iowa State University

Iowa State University Capstones, Theses and Retrospective Theses and Dissertations Dissertations 2000 The meaning of velvet Jennifer Jean Wright Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Creative Writing Commons, and the English Language and Literature Commons Recommended Citation Wright, Jennifer Jean, "The meaning of velvet" (2000). Retrospective Theses and Dissertations. 16209. https://lib.dr.iastate.edu/rtd/16209 This Thesis is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. The meaning of velvet by Jennifer Jean Wright A thesis submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF ARTS Major: English (Creative Writing) Major Professor: Stephen Pett Iowa State University Ames, Iowa 2000 Copyright © Jennifer Jean Wright, 2000. All rights reserved. iii that's the girl that he takes around town. she appears composed, so she is I suppose, who can really tell? she shows no emotion at all stares into space like a dead china doll. --Elliot Smith, waltz #2 The ashtray says you were up all night when you went to bed with your darkest mind your pillow wept and covered your eyes you finally slept while the sun caught fire You've changed. --Wilco, a shot in the arm two headed boy all floating in glass the sun now it's blacker then black I can hear as you tap on your jar I am listening to hear where you are .. -

Criminal Law and Procedure

Volume 27 Issue 3 Article 10 1982 Criminal Law and Procedure Various Editors Follow this and additional works at: https://digitalcommons.law.villanova.edu/vlr Part of the Constitutional Law Commons, Criminal Law Commons, and the Criminal Procedure Commons Recommended Citation Various Editors, Criminal Law and Procedure, 27 Vill. L. Rev. 672 (1982). Available at: https://digitalcommons.law.villanova.edu/vlr/vol27/iss3/10 This Issues in the Third Circuit is brought to you for free and open access by Villanova University Charles Widger School of Law Digital Repository. It has been accepted for inclusion in Villanova Law Review by an authorized editor of Villanova University Charles Widger School of Law Digital Repository. Editors: Criminal Law and Procedure [VOL. 27: p. 672 Criminal Law and Procedure CRIMINAL PROCEDURE-DOUBLE JEOPARDY-RETRIAL AFTER MISTRIAL-WHEN MISTRIAL IS BASED ON INCONSISTENT JURY VERDICT, TRIAL COURT'S DECISION IS AFFORDED BROAD DEFERENCE IN DETERMINATION OF WHETHER GRANT OF MISTRIAL WAS RESULT OF "MANIFEST NECESSITY." Crawford v. Fenton (1981) On April 19, 1977, the petitioner, Rooks Edward Crawford, was indicted for conspiring to violate the narcotics laws of the state of New Jersey.1 The trial judge provided the jury with special interrogatories contained in a verdict form,2 and the jury returned a verdict finding petitioner guilty of conspiracy.3 Believing that there was a possible inconsistency between the guilty verdict and the answers to the special interrogatories,4 the trial judge ordered the jury to continue its deliberations.5 After further deliberation, the jury asked to be released.6 Over the defendant's objection, the trial judge declared a mistrial7 stating that there was a "manifest necessity" to do so.8 While being held for retrial, the petitioner filed a petition for a writ of habeas corpus in the United States District Court for the Dis-

ROUTE66 – Drfeelgood Well If You Ever Plan to Motor West Just Take

ROUTE66 – DrFeelgood https://www.youtube.com/watch?v=G-vleD9AUtk A D A A D D A A E D A A E7 Well if you ever plan to motor west Just take my way it's the highway that's the best Get your kicks on Route 66 Well it winds from Chicago to L.A. More than 2000 miles all the way Get your kicks on Route 66 Well it goes from St Louis, down to Missouri Oklahoma city looks oh so pretty You'll see Amarillo and Gallup, New Mexico Flagstaff, Arizona don't forget Winona Kingsman, Barstaw, San Bernadino Would you get hip to this kindly trip And take that California trip Get your kick on Route 66 It goes from St. Louis, down to Missouri Oklahoma city looks oh so pretty You'll see Amarillo and Gallup, New Mexico Flagstaff, Arizona don't forget Winona Kingsman, Barstaw, San Berdino Would you get hip to this kindly trip Take that California trip Get your kicks on Route 66 Get your kicks on Route 66 Get your kicks on Route 66 Get your kicks on Route 66 GET BACK – The Beatles https://www.youtube.com/watch?v=YEESfv-11ng Estrofa: A A7 D A A A7 D A Estribillo: A A7 D A G D A A7 D A Jojo was a man who thought he was a loner But he knew it wouldn't last Jojo left his home in Tucson, Arizona For some California grass Get back, get back Get back to where you once belonged Get back, get back Get back to where you once belonged Get back Jojo Get back, get back Back to where you once belonged Get back, get back Back to where you once belonged Get back Jo Sweet Loretta Martin thought she was a woman But she was another man All the girls around her say she's got it coming -

Berkeley Technology Law Journal

ARTICLE Liabilities of System Operators on THE Internet Giorgio Bovenzi † TABLE OF CONTENTS I. INTRODUCTION II. NETWORK PROVIDERS POTENTIALLY LIABLE FOR THE TORTS OF THEIR USERS A. The Internet and Internet Service Providers B. Commercial On-line Services C. Bulletin Board Systems III. PRIVACY A. The Right to Privacy B. Privacy, the "Right of the People Peaceably to Assemble" and Anonymity C. Relevant Federal Statutes D. Traditional Mail v. E-Mail E. Conclusion IV. FLAMING A. Defamation B. Cubby v. CompuServe C. Stratton Oakmont, Inc. v. Prodigy Services Co. D. Religious Technology Center v. Netcom On-line Communication Services, Inc. E. Analogies for Sysop's Liability F. Sysop's Liability: A Tentative Conclusion V. COMPUTER SYSTEMS, PUBLIC FORA AND POLICIES VI. CONCLUSION I. Introduction In the thirteenth century, Emperor Frederick II proclaimed instruments written on paper to be invalid.1 Despite resistance from the legal community, however, paper was eventually deemed to be dignified enough to replace parchment in legal use.2 Centuries later, typewriters made handwritten documents obsolete, although lawyers realized that they made forgery much simpler.3 Time and again, technologically new ways of conducting business encounter initial resistance, but ultimately are embraced by the common law. The law ultimately seeks fairness and ease of trade, preferring the substance of a transaction over its form. Attorneys sense, with good reason, that the law will track technological changes, and they gladly put new technology to use. Today, the choice of the medium through which a transaction is executed affects the negotiation process. Often, the methods successfully employed in one case might be ineffective in another.4 Rather than dismissing electronic transactions just because the controls are imperfect,5 lawyers must acquire new skills to negotiate effectively in the electronic world. -

BBST Course 3 -- Test Design

BLACK BOX SOFTWARE TESTING: INTRODUCTION TO TEST DESIGN: A SURVEY OF TEST TECHNIQUES CEM KANER, J.D., PH.D. PROFESSOR OF SOFTWARE ENGINEERING: FLORIDA TECH REBECCA L. FIEDLER, M.B.A., PH.D. PRESIDENT: KANER, FIEDLER & ASSOCIATES This work is licensed under the Creative Commons Attribution License. To view a copy of this license, visit http://creativecommons.org/licenses/by-sa/2.0/ or send a letter to Creative Commons, 559 Nathan Abbott Way, Stanford, California 94305, USA. These notes are partially based on research that was supported by NSF Grants EIA-0113539 ITR/SY+PE: “Improving the Education of Software Testers” and CCLI-0717613 “Adaptation & Implementation of an Activity-Based Online or Hybrid Course in Software Testing.” Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. BBST Test Design Copyright (c) Kaner & Fiedler (2011) NOTICE: The practices recommended and discussed in this course are useful for an introduction to testing, but more experienced testers will adopt additional practices. I am writing this course with the mass-market software development industry in mind. AND THANKS: The BBST lectures evolved out of practitioner-focused courses co-authored by Kaner & Hung Quoc Nguyen and by Kaner & Doug Hoffman (now President of the Association for Software Testing), which then merged with James Bach’s and Michael Bolton’s Rapid Software Testing (RST) courses. The online adaptation of BBST was designed primarily by Rebecca L. Fiedler. Starting in 2000, the course evolved from a practitioner-focused course

Ebola and Marburg Virus Disease Epidemics: Preparedness, Alert, Control and Evaluation

EBOLA STRATEGY Ebola and Marburg virus disease epidemics: preparedness, alert, control and evaluation August 2014 © World Health Organization 2014. All rights reserved. The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of the World Health Organization concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. Dotted and dashed lines on maps represent approximate border lines for which there may not yet be full agreement. All reasonable precautions have been taken by the World Health Organization to verify the information contained in this publication. However, the published material is being distributed without warranty of any kind, either expressed or implied. The responsibility for the interpretation and use of the material lies with the reader. In no event shall the World Health Organization be liable for damages arising from its use. WHO/HSE/PED/CED/2014.05 Contents: Acknowledgements; List of abbreviations and acronyms; Chapter 1 – Introduction; 1.1 Purpose of the document and target audience