Integration of Robotic Platforms in a Communicating Environment with Application in the Aid of Elderly

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

A Humanoid Robot

NAIN 1.0 – A HUMANOID ROBOT by Shivam Shukla (1406831124), Shubham Kumar (1406831131), Shashank Bhardwaj (1406831117). Department of Electronics & Communication Engineering, Meerut Institute of Engineering & Technology, Meerut, U.P. (India)-250005. May, 2018. Submitted to the Department of Electronics & Communication Engineering in partial fulfillment of the requirements for the degree of Bachelor of Technology in Electronics & Communication. Meerut Institute of Engineering & Technology, Meerut. Dr. A.P.J. Abdul Kalam Technical University, Lucknow. May, 2018. DECLARATION I hereby declare that this submission is my own work and that, to the best of my knowledge and belief, it contains no material previously published or written by another person nor material which to a substantial extent has been accepted for the award of any other degree or diploma of the university or other institute of higher learning except where due acknowledgment has been made in the text. Signature. Name: Mr. Shivam Shukla, Roll No. 1406831124, Date: Signature. Name: Mr. Shashank Bhardwaj, Roll No. 1406831117, Date: Signature. Name: Mr. Shubham Kumar, Roll No. 1406831131, Date: CERTIFICATE This is to certify that Project Report entitled “Humanoid Robot” which is submitted by Shivam Shukla (1406831124), Shashank Bhardwaj (1406831117), Shubham Kumar (1406831131) in partial fulfillment of the requirement for the award of degree B.Tech in Department of Electronics & Communication Engineering of Gautam Buddh Technical University (Formerly U.P. Technical University), is record of the candidate own work carried out by him under my/our supervision. The matter embodied in this thesis is original and has not been submitted for the award of any other degree. -

An Introduction to the NASA Robotics Alliance Cadets Program

Session F An Introduction to the NASA Robotics Alliance Cadets Program David R. Schneider, Clare van den Blink NASA, DAVANNE, & Cornell University / Cornell University CIT [email protected], [email protected] Abstract The 2006 report National Defense Education and Innovation Initiative highlighted this nation’s growing need to revitalize undergraduate STEM education. In response, NASA has partnered with the DAVANNE Corporation to create the NASA Robotics Alliance Cadets Program to develop innovative, highly integrated and interactive curriculum to redesign the first two years of Mechanical Engineering, Electrical Engineering and Computer Science. This paper introduces the NASA Cadets Program and provides insight into the skill areas targeted by the program as well as the assessment methodology for determining the program’s effectiveness. The paper also offers a brief discussion on the capabilities of the program’s robotic platform and a justification for its design into the program. As an example of the integration of the robotic platform with the program’s methodologies, this paper concludes by outlining one of the first educational experiments of NASA Cadets Program at Cornell University to be implemented in the Spring 2007 semester. I. Introduction To be an engineer is to be a designer, a creator of new technology, and the everyday hero that solves society’s problems through innovative methods and products by making ideas become a reality. However, the opportunity to truly explore these key concepts of being an engineer are often withheld from most incoming engineering students until at least their junior year causing many new students to lose motivation and potentially leave the program. -

Towards a Robot Learning Architecture

From: AAAI Technical Report WS-93-06. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved. Towards a Robot Learning Architecture. Joseph O’Sullivan*, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA 15213, email: josu][email protected] Abstract: I summarize research toward a robot learning architecture intended to enable a mobile robot to learn a wide range of find-and-fetch tasks. In particular, this paper summarizes recent research in the Learning Robots Laboratory at Carnegie Mellon University on aspects of robot learning, and our current work toward integrating and extending this within a single architecture. In previous work we developed systems that learn action models for robot manipulation, learn cost-effective strategies for using sensors to approach and classify objects, learn models of sonar sensors for map building, learn reactive control strategies via rein- [...] continuously improves its performance through learning. Such a robot must be capable of an autonomous existence during which its world knowledge is continuously refined from experience as well as from teachings. It is all too easy to assume that a learning agent has unrealistic initial capabilities such as a prepared environment map or perfect knowledge of its actions. To ground our research, we use a Heath/Zenith Hero 2000 robot (named simply "Hero"), a commercial wheeled mobile manipulator with a two finger hand, as a testbed on which success or failure is judged. In this paper, I describe the steps being taken to design and implement a learning robot agent. In Design Principles, an outline is stated of the believed requirements for a successful agent. -

The Impact of Robot Projects on Girl's Attitudes Toward Science And

2007 RSS Robotics in Education Workshop The Impact of Robot Projects on Girl’s Attitudes Toward Science and Engineering Jerry B. Weinberg+, Jonathan C. Pettibone+, Susan L. Thomas+, Mary L. Stephen*, and Cathryne Stein‡ +Southern Illinois University Edwardsville *Saint Louis University, Reinert Center for Teaching Excellence ‡KISS Institute for Practical Robotics Introduction The gender gap in engineering, science, and technology has been well documented [e.g., 1, 2], and a variety of programs at the k-12 level have been created with the intent of both increasing girl’s interest in these areas and their consideration of them for careers [e.g., 3, 4, 5]. With the development of robotics platforms that are both accessible for k-12 students and reasonably affordable, robotics projects have become a focus for these programs [e.g., 6, 7, 8]. While there is evidence that shows robotics projects are engaging educational tools [8, 9], a question that remains open is how effective they are in reducing the gender gap. Over the past year, an in-depth study of participants in a robotics educational program was conducted to determine if such programs have a positive impact on girls’ self-perception of their achievement in science, technology, engineering, and math areas (STEM), and whether this translates into career choices. To examine social and cultural issues, this study applied a long-standing model in motivation theory, Wigfield and Eccles’s [10] expectancy-value theory, to examine a variety of factors that surround girls’ perceptions of their achievement. Expectancy-value theory considers that individuals’ choices are directly related to their “belief about how well they will do on an activity and the extent to which they value the activity” [5, p. -

College4Kids Summer Career Academies 2014

College4Kids Summer Career Academies 2014 at Piedmont Virginia Community College. For Rising 3rd-9th Graders • June 16-Aug. 15, 2014 • www.pvcc.edu/academies. Explore your interests with new friends. Have fun while you learn! Enjoy hands-on, project-based learning! “Great teacher. Best computer class yet.” – Computer Science student. For Rising 6th-9th Graders. Acting for Film: Lights! Camera! Action! Want to be a film actor? You’ll have a blast in this workshop learning script analysis, experiencing moment-to-moment acting techniques, performing for the camera and establishing a confident performance through the pursuit of dramatic intention. You’ll be assigned a scene from a Hollywood movie to develop a character, work with scene partners and perform on camera. Robert Wray is a teacher, playwright and actor, with appearances in numerous films and New York City theater productions. M-F 7/7-7/11 8:45-11:45 a.m. $125. Building a Computer From Parts: Can you count to two? If yes, then you can build a complete computer system from parts and load the operating system. Learn about computer system fundamentals including the binary number system and logic gates, the elements of any computer system and operations at a basic level. M-F 6/16-6/20 12:30-3:30 p.m. $189; M-F 7/14-7/18 12:30-3:30 p.m. $189. Architecture: If you could design your own building, what would it look like? Dream big, then design it in detail! Create architectural drawings by hand, build a physical 3-D model and then create a virtual model with Google SketchUp. Computer Numerical Control (CNC): Learn to Manufacture! -

Intelligent Robot Systems Based on PDA for Home Automation Systems in Ubiquitous

Intelligent Robot Systems based on PDA for Home Automation Systems in Ubiquitous. In-Kyu Sa*, Ho Seok Ahn**, Yun Seok Ahn***, Seon-Kyu Sa**** and Jin Young Choi**. Samsung Electronics Co.*, Seoul National University**, MyongJi University***, Mokwon University****, Republic of Korea. 1. Introduction Koreans occasionally introduce their country with phrases like, ‘The best internet penetration in the world’, or ‘internet power’. A huge infra-structure for the internet has been built in recent years. The internet is becoming a general tool for anyone to exploit anytime and anywhere. Additionally, concern about the silver industry, which is related to the life of elderly people, has increased continuously by extending the average life span. In this respect, application of robots also has increased concern about advantages of autonomous robots such as convenience of robots or help from autonomous robots. This increase in concern has caused companies to launch products containing built-in intelligent environments and many research institutes have increased studies on home automation projects. In this book chapter, we address autonomous systems by designing fusion systems including intelligent mobility and home automation. We created home oriented robots which can be used in a real home environment and developed user friendly external design of robots to enhance user convenience. We feel we have solved some difficulties of the real home environment by fusing PDA based systems and home servers. -

Robots in Education: New Trends and Challenges from the Japanese Market

Themes in Science & Technology Education, 6(1), 51-62, 2013 Robots in education: New trends and challenges from the Japanese market Fransiska Basoeki1, Fabio Dalla Libera2, Emanuele Menegatti2 , Michele Moro2 [email protected], [email protected], [email protected], [email protected] 1 Department of System Innovation, Osaka University, Suita, Osaka, 565-0871 Japan 2 Department of Information Engineering, University of Padova, Italy Abstract. The paper introduces and compares the use of current robotics kits developed by different companies in Japan for education purposes. These kits are targeted to a large audience: from primary school students, to university students and also up to adult lifelong learning. We selected company and kits that are most successful in the Japanese market. Unfortunately, most information regarding the technical specifications, the practical usages, and the actual educational activities carried out with these kits are currently available in Japanese only. The main motivation behind this paper is to give non-Japanese speakers interested in educational robotics an overview of the use of educational kits in Japan. The paper is completed by a short description of a new pseudo-natural language we propose for effectively programming one of the presented robots, the educational humanoid Robovie-X. Keywords: educational robot kits, humanoid robots, wheeled robots, education Introduction With the advance of technology, robotics have been successfully used and integrated into different sectors of our life. Industrial robot arms almost completely replaced the manual workload. Service robots, such as Roomba, serve as home helpers in the households, dusting the house automatically. Also in the field of entertainment, many robots have also been developed, the robot dog AIBO provides a successful example of this. -

Surveillance Robot Controlled Using an Android App

Surveillance Robot controlled using an Android app. Project Report submitted in partial fulfillment of the requirements for the degree of Bachelor of Engineering by Shaikh Shoeb Maroof Nasima (Roll No.12CO92), Ansari Asgar Ali Shamshul Haque Shakina (Roll No.12CO106), Khan Sufiyan Liyaqat Ali Kalimunnisa (Roll No.12CO81), Mir Ibrahim Salim Farzana (Roll No.12CO82). Supervisor: Prof. Kalpana R. Bodke. Co-Supervisor: Prof. Amer Syed. Department of Computer Engineering, School of Engineering and Technology, Anjuman-I-Islam’s Kalsekar Technical Campus, Plot No. 2 3, Sector -16, Near Thana Naka, Khanda Gaon, New Panvel, Navi Mumbai. 410206. Academic Year: 2014-2015. CERTIFICATE Department of Computer Engineering, School of Engineering and Technology, Anjuman-I-Islam’s Kalsekar Technical Campus, Khanda Gaon, New Panvel, Navi Mumbai. 410206. This is to certify that the project entitled “Surveillance Robot controlled using an Android app” is a bonafide work of Shaikh Shoeb Maroof Nasima (12CO92), Ansari Asgar Ali Shamshul Haque Shakina (12CO106), Khan Sufiyan Liyaqat Ali Kalimunnisa (12CO81), Mir Ibrahim Salim Farzana (12CO82) submitted to the University of Mumbai in partial fulfillment of the requirement for the award of the degree of “Bachelor of Engineering” in Department of Computer Engineering. Prof. Kalpana R. Bodke, Supervisor/Guide. Prof. Amer Syed, Co-Supervisor/Guide. Prof. Tabrez Khan, Head of Department. Dr. Abdul Razak Honnutagi, Director. Project Approval for Bachelor of Engineering This project I entitled Surveillance robot controlled using an android application by Shaikh Shoeb Maroof Nasima, Ansari Asgar Ali Shamshul Haque Shakina, Mir Ibrahim Salim Farzana, Khan Sufiyan Liyaqat Ali Kalimunnisa is approved for the degree of Bachelor of Engineering in Department of Computer Engineering. -

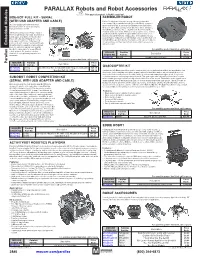

PARALLAX Robots and Robot Accessories

PARALLAX Robots and Robot Accessories. This page of product is RoHS compliant. BOE-BOT FULL KIT - SERIAL: The ever-popular Boe-Bot® Robot Full Kit (serial version) is our most complete reprogrammable robot kit. We're particularly proud of Andy Lindsay's Robotics with the Boe-Bot text. The Robotics text includes 41 activities for the Boe-Bot Robot with structured PBASIC 2.5 source code support and bonus challenges with solutions in each chapter. Starting with basic movement and proceeding to sensor-based projects, customers quickly learn how the Boe-Bot is expandable for many different robotic projects. No previous robotics, electronics or programming experience is necessary. For quantities greater than listed, call for quote. SCRIBBLER ROBOT (WITH USB ADAPTER AND CABLE): Perfect for beginners age eight and up, this reprogrammable robot comes fully assembled including a built-in BASIC Stamp® 2 microcontroller brain. It arrives pre-programmed with eight demo modes, including light-seeking, object detection, object avoidance, line-following, and more. Place a marker in its Pen Port and the Scribbler draws as it drives. Write your own programs in two formats: graphically with the Scribbler Program Maker GUI software, or as PBASIC text with the BASIC Stamp Editor. The Scribbler is a fully programmable, intelligent robot with multiple sensor systems that let it interact with people and objects. It navigates on its own as it explores its surroundings, and then reports back about what it senses using light and sound. For quantities greater than listed, call for quote. Mouser Stock No.: 619-28136; Parallax Part No.: 28136; Description: Scribbler Robot (serial); Price Each: 113.74. -

POVERTY ALLEVIATION: a Role for Technology and Infrastructure?

Fondazione per la Collaborazione tra i Popoli / Foundation for World Wide Cooperation. President: Romano Prodi. POVERTY ALLEVIATION: A Role for Technology and Infrastructure? CONCEPT NOTE. ENERGY; CONNECTIVITY (Communication and learning); HEALTH & FOOD. Rome, May 2015. The goal of the conference is twofold: to assess the results of technology-based poverty alleviation projects and to explore the social and political effects of this technology. In particular, we want to start floating an idea that may be out of the ‘mainstream’ of political thinking. We all agree that peace and security are crucial to work for poverty alleviation. The common approach of international aid agencies is to build institutional and governance reform. While this is indispensable, it should not be the only focus. While rushing to create multi-party, parliamentary systems, independent judiciaries and free press, we should not forget the human factor, i.e. the need to build trust and communication among different individuals who will need, quite simply, to work together in order to make those institutions function. Today, in the twenty-first century, some of the most exciting tools available for addressing these issues are technology and innovation. Even with the best of governance and a visionary leadership, if there is no inclusive development, a country cannot move forward. Throughout history technology has been a powerful instrument for economic and social development. Technology played a critical role in reducing poverty in vast areas of the world in the past and can play today a crucial function in the battle against poverty. -

Using Fluid Power Workshops to Increase Stem Interest in K-12 Students

Paper ID #10577. Using fluid power workshops to increase STEM interest in K-12 students. Dr. Jose M Garcia, Purdue University (Statewide Technology), Assistant Professor, Engineering Technology. Mr. Yury Alexandrovich Kuleshov, Purdue University, West Lafayette. Dr. John H. Lumkes: Dr. John Lumkes is an associate professor in agricultural and biological engineering at Purdue University. He earned a BS in engineering from Calvin College, an MS in engineering from the University of Michigan, and a PhD in mechanical engineering from the University of Wisconsin-Madison. His research focus is in the area of machine systems and fluid power. He is the advisor of a Global Design Team operating in Bangang, Cameroon, concentrating on affordable, sustainable utility transportation for rural villages in Africa. © American Society for Engineering Education, 2014. 1. Abstract This study addresses the issue of using robotics in K-12 STEM education. The authors applied intrinsic motivation theory to measure participant perceptions during a series of robotic workshops for K-12 students at Purdue University. A robotic excavator arm using fluid power components was developed and tested as a tool to generate interest in STEM careers. Eighteen workshops were held with a total number of 451 participants. Immediately after the workshop, participants were provided with a questionnaire that included both quantitative and qualitative questions. Fourteen of the questions are quantitative, where a participant would characterize their after-workshop experience using a 1-to-7 Likert scale. According to the intrinsic motivation theory it was hypothesized that participant perceptions should differ depending on their gender, race, and age. -

Down-Line Loading on Hero Robot

University of Wollongong Research Online. Department of Computing Science Working Paper Series, Faculty of Engineering and Information Sciences, 1984. Down-line loading on hero robot. John Fulcher, University of Wollongong, [email protected] Follow this and additional works at: https://ro.uow.edu.au/compsciwp Recommended Citation: Fulcher, John, Down-line loading on hero robot, Department of Computing Science, University of Wollongong, Working Paper 84-13, 1984, 39p. https://ro.uow.edu.au/compsciwp/9 Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Down-line loading on Hero robot. John Fulcher, Department of Computing Science, University of Wollongong. ABSTRACT Expansion of ET-18 (Hero 1.0) Educational Robot in order to provide down-line loading capability from the Unix time-shared operating system is described. This expansion has also enabled up-line saving of programs from Hero to Unix. These facilities are implemented in EPROM form (2716) in the optional ROM socket on Hero's CPU board. The program stored in EPROM is essentially the same as the Utility EPROM used on the expanded ET-3400 Microprocessor Trainers in the Department's Microcomputer Laboratory, with minor modifications to run on Hero. As a direct result of incorporating these facilities into Hero, the Unix down-line load program 'dll' has been upgraded (to allow for up-line save), and an r-file for the Unix general-purpose cross-assembler 'mac' has been written to allow for the use of Robot Interpreter commands (Hero utilizes unused M6800 op.