Open Research Online Oro.Open.Ac.Uk

Recommended publications

Download the Report

Oregon Cultural Trust fy2011 annual report. Contents: Funds: fy2011 permanent fund, revenue and expenditures; A network of cultural coalitions fosters cultural participation; Vital collaborators – five statewide cultural agencies; Cultural Development Grants; Oregonians drive culture; Over 11,000 individuals contributed to the Trust in fy2011. Cover photos, clockwise from top left: Dancer Jonathan Krebs of BodyVox Dance; artist Scott Wayne Indiana’s Horse Project on the streets of Portland; the Museum of Contemporary Craft, Portland; the historic Astoria Column. Photographs by Tatiana Wills. Oregon Cultural Trust board of directors: Norm Smith, Chair, Roseburg; Lyn Hennion, Vice Chair, Jacksonville; Walter Frankel, Secretary/Treasurer, Corvallis; Pamela Hulse Andrews, Bend; Kathy Deggendorfer, Sisters; Nick Fish, Portland; Jon Kruse, Portland; Heidi McBride, Portland; Bob Speltz, Portland; John Tess, Portland; Lee Weinstein, The Dalles; Rep. Margaret Doherty, House District 35, Tigard; Senator Jackie Dingfelder, Senate District 23, Portland. Special advisors: Howard Lavine, Portland; Charlie Walker, Neskowin; Virginia Willard, Portland. December 2011. To the supporters and partners of the Oregon Cultural Trust: Culture continues to make a difference in Oregon – activating communities, stimulating the economy and inspiring us. The Cultural Trust is an important statewide partner to Oregon’s cultural groups, artists and scholars, and cultural coalitions in every county of our vast state. We are pleased to share a summary of our Fiscal Year 2011 (July 1, 2010 – June 30, 2011) activity – full of accomplishment. The Cultural Trust’s work is possible only with your support and we are pleased to report on your investments in Oregon culture. -

Film, Television and Video Productions Featuring Brass Bands

Film, Television and Video productions featuring brass bands Gavin Holman, October 2019 Over the years the brass bands in the UK, and elsewhere, have appeared numerous times on screen, whether in feature films or on television programmes. In most cases they are small appearances fulfilling the role of a “local” band in the background or supporting a musical event in the plot of the drama. At other times bands have a more central role in the production, featuring in a documentary or being a major part of the activity (e.g. Brassed Off, or the few situation comedies with bands as their main topic). Bands have been used to provide music in various long-running television programmes; one example is the 40 or more appearances of Chalk Farm Salvation Army Band on the Christmas Blue Peter shows on BBC1. Bands have taken part in game shows, provided the backdrop for and focus of various commercial advertisements, played bands of the past in historical dramas, and more. This listing of 450 entries is a second attempt to document these appearances on the large and small screen – an original list had been part of the original Brass Band Bibliography in the IBEW, but was dropped in the early 2000s. Some overseas bands are included. Where the details of the broadcast can be determined (or remembered) these have been listed, but in some cases all that is known is that a particular band appeared on a certain show at some point in time - a little vague to say the least, but I hope that we can add detail in future as more information comes to light. -

Ofcom Content Sanctions Committee

Ofcom Content Sanctions Committee Consideration of sanctions against The British Broadcasting Corporation (“the BBC”) in respect of its service BBC 1. For Breaches of the Ofcom Broadcasting Code (“the Code”) of: Rule 2.11: “Competitions should be conducted fairly, prizes should be described accurately and rules should be clear and appropriately made known.” Relating to the following conduct: Faking the winner of a viewer competition, in the live transmission of Comic Relief 2007 On 17 March 2007 between 00:10 and 01:30. Decision To impose a financial penalty (payable to HM Paymaster General) of £45,000. 1 Summary 1.1 For the reasons set out in full in the Decision, under powers delegated from the Ofcom Board to Ofcom’s Content Sanctions Committee (“the Committee”), the Committee decided to impose statutory sanctions on the BBC in light of the serious nature of its failure to ensure compliance with the Ofcom Broadcasting Code (“the Code”). 1.2 This adjudication under the Code relates to the broadcast of Comic Relief 2007 (“Comic Relief”) on BBC1 on 17 March 2007 between 00:10 and 01:30. 1.3 Comic Relief is a charity, regulated by the Charity Commission. In alternate years it holds “Red Nose Day”, which includes a live ‘telethon’ programme shown on BBC1, also known as Comic Relief. Comic Relief was the eleventh programme in a series that began on BBC1 in 1988. The programme started at 19:00 on Friday 16 March 2007 and ended at 03:30 on Saturday 17 March. As with previous Comic Relief programmes it was produced by the BBC with a largely freelance workforce and in partnership with Comic Relief, its longstanding charity partner. -

Annual Report on the BBC 2019/20

Ofcom’s Annual Report on the BBC 2019/20 Published 25 November 2020 Welsh translation available: Adroddiad Blynyddol Ofcom ar y BBC Raising awareness of online harms Contents: Overview; The ongoing impact of Covid-19; Looking ahead; Performance assessment; Public Purpose 1: News and current affairs; Public Purpose 2: Supporting learning for people of all ages; Public Purpose 3: Creative, high quality and distinctive output and services; Public Purpose 4: Reflecting, representing and serving the UK’s diverse communities; The BBC’s impact on competition; The BBC’s content standards; Overview of our duties. 1 Overview This is our third -

I Am Writing to You to Express My Deep Concern About the Recent

I am writing to you to express my deep concern about the recent news from the BBC that they have applied to OFCOM to ask if they can reduce news output on the CBBC channel – by axing the main afternoon edition of the much loved Newsround. I am appalled that the BBC would even think this is a good decision. As far as I understand, they say that fewer children are watching Newsround and more are getting their news online, from the Newsround website and other sources. I cannot understand this reasoning. This is the same reasoning they applied to the removal of BBC3 as a linear service – and we all know that the end result of that was the BBC reached even fewer young people. To say more children are watching things online is a cop out. Why are children spending more time watching online? The answer is because they are poorly catered for by linear services. Don’t children spend enough time online as it is? Do we really want to encourage them to spend more time online? Newsround has been a fixture in the CBBC schedule for over 40 years. Surely, this is what the BBC should be spending their money on – a daily news programme for children aged 7-15. CBBC broadcasts for 14 hours a day - is it really too much to ask that they provide 7 minutes of news in the afternoon / evening? The CBBC channel narrowed its focus to 7-12 year olds in 2013. Some of the continuity presenters can hardly string a legible sentence together and others would be more suited to CBeebies. -

2 BBC Children in Need: the Best Bits 3 We Beat the All Blacks 4 Gower 5 Coming Home: Gethin Jones 6 Scrum V Live: Edinburgh V Ospreys 7 Pobol Y Cwm

Week/Wythnos 47 November/Tachwedd 17-23, 2012 Pages/Tudalennau: 2 BBC Children in Need: the Best Bits 3 We Beat The All Blacks 4 Gower 5 Coming Home: Gethin Jones 6 Scrum V Live: Edinburgh v Ospreys 7 Pobol y Cwm Places of interest: Anglesey 2 Barry 5 Cardiff 2 Gower 4 Llanelli 3 Pontyberem 5 Swansea 4, 6 Tonypandy 2 Follow us on Twitter to keep up with all the latest news from BBC Cymru Wales @BBCWalesPress Dilynwch ni ar Twitter am y newyddion diweddaraf gan BBC Cymru Wales @BBCWalesPress Pictures are available at www.bbcpictures.com / Lluniau ar gael yn www.bbcpictures.com NOTE TO EDITORS: All details correct at time of going to press, but programmes are liable to change. Please check with BBC Cymru Wales Communications before publishing. NODYN I OLYGYDDION: Mae’r manylion hyn yn gywir wrth fynd i’r wasg, ond mae rhaglenni yn gallu newid. Cyn cyhoeddi gwybodaeth, cysylltwch â’r Adran Gyfathrebu. BBC CHILDREN IN NEED: THE BEST BITS Saturday, November 17, BBC One Wales, 5.30pm bbc.co.uk/pudsey Welsh television personality Gethin Jones will look back at the best bits of this year’s BBC Children in Need appeal in Wales, with a highlights programme on BBC One Wales. Highlights include a performance from Tonypandy’s own West End star Sophie Evans, who will sing with the BBC National Orchestra of Wales. Sophie will perform songs including Over the Rainbow from The Wizard of Oz and Good Morning Baltimore from the musical Hairspray. The Orchestra and Sophie Evans will be joined by Côr Ieuenctid Môn (Anglesey Youth Choir), and a choir of school children from Cardiff and Tonypandy - who will link up with choirs from around the UK for a special performance. -

M5 Mcd Cocaine.Pdf

For many centuries, natives of South America have made offerings to Mama Coca, the Goddess of the coca plant in exchange for a good harvest. Today, local consumers regularly make large offerings of cash to a less traditional deity called ‘Papa Dealer’. For many centuries, natives of South America have used the leaves of the coca plant as a medicine, a food, and an all-purpose means of getting through the day. Rich in thiamine, riboflavin and vitamin C, coca leaves are believed to tone the muscles, aid concentration, breathing in the mountainous regions, and exertion in the heavy labour of the Andean tin mines. Mama Coca is the Goddess of the Coca Plant, and South Americans still make offerings of coca leaves to Mama Coca, in exchange for a good harvest. Cocaine hydrochloride is a white crystalline powder, derived from the coca plant, and today, local consumers regularly make large offers of cash to a less traditional deity called ‘Papa Dealer’, in exchange for a bag of said product. Unlike coca leaves, cocaine hydrochloride has no known nutritional value whatsoever. Why do people take cocaine? For the same reasons that people take any drug. It’s exciting, it’s sexy, it’s enjoyable and it’s slightly dangerous. For a while, at least. The primary effect is an overwhelming sense of confidence and well being. Think of James Cagney on top of that gas tank, wasting coppers left and right. ‘Top of the world, ma!’ Who on earth doesn’t want to feel absolutely certain that, as long as the coke lasts, they’re wittier, smarter and cooler than anyone else? There’s an important caveat here though. -

Doctor Who

Doctor Who This article is about the television series. For other uses, see Doctor Who (disambiguation). Doctor Who Genre Science fiction drama Created by • Sydney Newman • C. E. Webber • Donald Wilson Written by Various Directed by Various Starring Various Doctors (as of 2014, Peter Capaldi) Various companions (as of 2014, Jenna Coleman) Theme music composer • Ron Grainer • Delia Derbyshire Opening theme Doctor Who theme music Composer(s) Various composers (as of 2005, Murray Gold) Country of origin United Kingdom No. of seasons 26 (1963–89) plus one TV film (1996) No. of series 7 (2005–present) No. of episodes 800 (97 missing) (List of episodes) Production Executive producer(s) Various (as of 2014, Steven Moffat and Brian Minchin) Camera setup Single/multiple-camera hybrid Running time Regular episodes: • 25 minutes (1963–84, 1986–89) • 45 minutes (1985, 2005–present) Specials: Various: 50–75 minutes Broadcast Original channel BBC One (1963–1989, 1996, 2005–present) BBC One HD (2010–present) BBC HD (2007–10) Picture format • 405-line Black-and-white (1963–67) • 625-line Black-and-white (1968–69) • 625-line PAL (1970–89) • 525-line NTSC (1996) • 576i 16:9 DTV (2005–08) • 1080i HDTV (2009–present) Audio format Monaural (1963–87) Stereo (1988–89; 1996; 2005–08) 5.1 Surround Sound (2009–present) Original run Classic series: 23 November 1963 – 6 December 1989 Television film: 12 May 1996 Revived series: 26 March 2005 – present Chronology Related shows • K-9 and Company (1981) • Torchwood (2006–11) • The Sarah Jane Adventures (2007–11) • K-9 (2009–10) • Doctor Who Confidential (2005–11) • Totally Doctor Who (2006–07) External links Doctor Who at the BBC Doctor Who is a British science-fiction television programme produced by the BBC. -

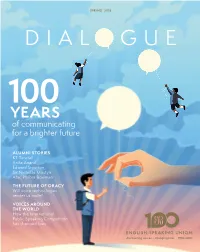

DIALOGUE 100 YEARS of Communicating for a Brighter Future

SPRING 2018 DIALOGUE 100 YEARS of communicating for a brighter future ALUMNI STORIES KT Tunstall Anita Anand Edward Stourton Sir Nicholas Mostyn Abie Philbin Bowman THE FUTURE OF ORACY Will voice technologies render us mute? VOICES AROUND THE WORLD How the International Public Speaking Competition has changed lives DISCOVER DEBATING HELPING STUDENTS DISCOVER THEIR VOICE Do your students have trouble communicating their ideas? Do they find it difficult to express how they feel, or struggle to understand the viewpoints of others? If so, our Discover Debating programme can help. Offered as a two- or three-term curriculum, it encourages confidence about speaking up and teaches important active listening skills. Research shows oracy interventions at younger ages are particularly effective FROM THE CHAIRMAN A centenary message from our Chairman LORD PAUL BOATENG One hundred years ago, after a devastating war, our founders sought to make a better life for all by promoting effective communication in the English language, and encouraging global dialogue through increasing personal connectivity. I learnt early on the power of language. My father was a lawyer and subsequently an activist in the struggle for independence of what was then the Gold Coast. from the society of which we all need to be a part. This plays into another challenge, too. The world is changing. There has never been a greater need for global dialogue and understanding. Technology purports to make communication easier (see page 16), but there is more to communication than posting something on the internet or sending a tweet. True communication is not just expressing -

Future of Childrens Television

An independent report on The Future of Children’s Television Programming Deliberative research summary Prepared by Opinion Leader Publication date: 2nd October 2007 1 Contents 1. Executive summary 2. Introduction 3. The role of children's television 4. Satisfaction with children’s television 5. Public Service Broadcasting 6. Availability of children's programmes and channels 7. Range of children’s programme genres 8. New programmes and repeats 9. Country of origin of children’s programmes 10. Ideal futures for the provision of children’s programmes 11. Appendices 2 Executive summary Background Ofcom initiated the review of children’s television programming in response to a number of consumer and market changes. These include an increase in the range of media available to many children and a growing number of dedicated children’s channels, as well as changes in the way children consume media. As a result, traditional commercial public service broadcasters are facing significant pressures on their ability to fund original programming for children. These changes are occurring in the context of a new framework for the regulation of children’s programming, set out in the Communications Act 2003. Since the Act, ITV1, which had historically played a role in delivering a strong alternative voice to the BBC in terms of children’s programmes, has significantly reduced its commitments to children’s programming. This development, together with the other consumer and market changes outlined above, has led many to ask how public service children’s programming can continue to be delivered in the future. The Communications Act requires Ofcom to report on the fulfilment of the public service broadcasters’ public service remit at least once every five years and to make recommendations with a view to maintaining and strengthening the quality of public service broadcasting in the future. -

All in Your Stride... Mediacityuk Area Presenters, and the Memorial to the First-Ever Blue Peter Dog, Petra, Which Was Flown in by Helicopter

All in your stride... MediaCityUK area. 1 START/FINISH On The Green outside The Studios, Piazza, MediaCityUK. The digital creative world that is MediaCityUK stands on the site of Manchester Racecourse, which was located here for over 30 years, 1867-1902. Buffalo Bill’s Wild West show played here to sell-out audiences in the winter of 1888. 2 Turn right, walk along The Green between Quay House and Bridge House, and cross the footbridge over the Manchester Ship Canal. 9 Continue along Huron Basin to the mini-roundabout at The Lowry. The Lowry opened in 2000 as Salford’s Millennium Project. It was planned primarily to display Salford Council’s wonderful paintings by local artist, L S Lowry – the largest publicly-owned collection of Lowry paintings in the world. The shape of The Lowry building loosely resembles a ship and evokes the heritage of this area. 10 Cross The Quays mini-roundabout, when you come to the Metrolink tramlines, cross with care and walk through The Green, bearing left past the Blue Peter Garden, to Piazza and the MediaCityUK Studios. The Blue Peter Garden, well-known nationally by Blue Peter viewers of all generations, was moved here with BBC Children’s TV, when that department transferred to Salford from London. The garden contains footprints in cement of various Blue Peter presenters, and the memorial to the first-ever Blue Peter dog, Petra, which was flown in by helicopter.

Lorraine Heggessey Media Executive Media Masters – July 9, 2020 Listen to the Podcast Online, Visit

Lorraine Heggessey Media Executive Media Masters – July 9, 2020 Listen to the podcast online, visit www.mediamasters.fm Welcome to Media Masters, a series of one-to-one interviews with people at the top of the media game. Today I'm joined down the line by Lorraine Heggessey. Educated at Durham University and initially starting in newspaper journalism, she began her TV career joining the BBC in 1979 as a news trainee. After five years, she left for a stint in the commercial sector, working on ITV's This Week and various programs for Channel 4. She returned to the BBC in 1993, working in the science department, editing QED and executive producing Animal Hospital and The Human Body. In 1997, she became head of children's BBC, memorably firing Blue Peter presenter, Richard Bacon, for taking cocaine. In 2000 Lorraine was appointed the first female controller of BBC One, commissioning Strictly Come Dancing and bringing back Dr. Who. In 2005 she left the Beeb and was appointed Chief Executive of Talkback Thames, responsible for over 800 hours of tele a year, including The X Factor and Britain's Got Talent. In 2017 she left the broadcast world and became Chief Executive of The Royal Foundation, the charity for the Duke and Duchess of Cambridge and Prince Harry. She moved on two years later when the Sussexes set up their own charitable initiative. Today she works as a media consultant, public speaker, advisor to Channel 4's Growth Fund, and is also chair of the Grierson Trust, which helps young people from diverse backgrounds get into documentary making.