Best Practices: Adoption of Symmetric Multiprocessing Using VxWorks and Intel® Multicore Processors

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Wind River VxWorks Platforms 3.8

Wind River VxWorks Platforms 3.8

Table of Contents: Platforms Available in VxWorks Edition; New in VxWorks Platforms 3.8; VxWorks Platforms Features; VxWorks Real-Time Operating System; VxWorks 6.x Compatibility; State-of-the-Art Memory Protection; VxBus Framework; Core Dump File Generation and Analysis; Build System; Command-Line Project and Build System; Workbench Debugger; VxWorks Simulator; Workbench VxWorks Source Build Configuration; Kernel Configurator; Host Shell; Kernel Shell; Run-Time Analysis Tools; System Viewer

The market for secure, intelligent, connected devices is constantly expanding. Embedded devices are becoming more complex to meet market demands. Internet connectivity allows new levels of remote management but also calls for increased levels of security. More powerful processors are being considered to drive intelligence and higher functionality into devices. Because real-time and performance requirements are nonnegotiable, manufacturers are cautious about incorporating new technologies into proven systems. To -

Wind River Linux Subscription and Services Offerings

WIND RIVER LINUX SUBSCRIPTION AND SERVICES OFFERINGS The industry’s most advanced embedded Linux development platform, with a comprehensive suite of products, tools, and lifecycle services to help our customers build and support intelligent edge devices Wind River® Linux enables you to build and deploy robust, reliable, and secure Linux-based edge devices and systems without the risk and development effort associated with roll-your-own (RYO) in-house efforts. Let Wind River keep your code base up to date, track and fix defects, apply security patches, customize your runtime to adhere to strict market specifications and certifications, facilitate your IP and export compliance, and significantly reduce your costs. Wind River is the global leader in the embedded software industry, with decades of expertise, more than 15 years as an active contributor and committed champion of open source, and a proven track record of helping customers build and deploy use case–optimized devices and systems. Wind River Linux is running on hundreds of millions of deployed devices worldwide, and the Wind River Linux suite of products and services offers you a high degree of confidence and flexibility to prototype, develop, and move to real deployment. Table 1. Comparison of Wind River Linux Delivery Release Options Freely Available Long Term Support (LTS) Continuous Delivery (CD) Annually, with Annually, with predictable Rolling Ongoing, with every-3-weeks Frequency community Cumulative Patch Layer (RCPL) cadence release cadence maintenance 3 weeks, until next -

Mac Labs. More Creativity

Mac labs. More creativity. More engagement. More learning.

Every Mac comes ready for 21st-century learning. Creativity and innovation are critical skills in the modern workplace, which makes them critical skills to teach today’s students. Apple offers comprehensive lab solutions for your school or university that will creatively engage students and motivate them to take an interest in their own learning. The iLife ’09 suite of applications comes standard on every Mac, so students can immediately begin creating podcasts, editing videos, composing music, producing photo essays and more.

Mac or PC? Have both. Now one lab really can solve all your computing needs. Every Mac is powered by an Intel processor and features Mac OS X – the world’s most advanced operating system. Mac OS X comes with an amazing dual-boot feature called Boot Camp that lets students run Windows XP or Vista natively …

Mac OS X Server creates the ultimate lab environment. Mac OS X Server includes simplified tools for creating wikis and blogs, so students and staff can set up and manage them with little or no help from IT. The Spotlight Server feature makes it easy for students collaborating on projects to find and view content stored anywhere on the network. Of course, access controls are built in, so they only get the search results they’re authorised to see. Mac OS X Server also allows for real-time streaming of audio and video content, which lets everyone get right to work instead of waiting for downloads – saving major storage across your network.

Launch careers from your Mac lab. As an Apple Authorised Training Centre for Education, your school can build a bridge between students and real-world careers. -

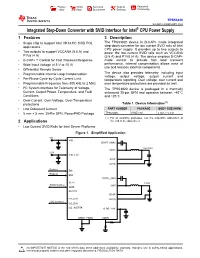

Integrated Step-Down Converter with SVID Interface for Intel® CPU

TPS53820, SLUSE33 – February 2020. Integrated Step-Down Converter with SVID Interface for Intel® CPU Power Supply

1 Features
• Single chip to support Intel VR13.HC SVID POL applications
• Two outputs to support VCCANA (5.5 A) and P1V8 (4 A)
• D-CAP+™ Control for Fast Transient Response
• Wide Input Voltage (4.5 V to 15 V)
• Differential Remote Sense
• Programmable Internal Loop Compensation
• Per-Phase Cycle-by-Cycle Current Limit
• Programmable Frequency from 800 kHz to 2 MHz
• I²C System Interface for Telemetry of Voltage, Current, Output Power, Temperature, and Fault Conditions
• Over-Current, Over-Voltage, Over-Temperature protections
• Low Quiescent Current
• 5 mm × 5 mm, 35-Pin QFN, PowerPAD Package

3 Description
The TPS53820 device is D-CAP+ mode integrated step-down converter for low current SVID rails of Intel CPU power supply. It provides up to two outputs to power the low current SVID rails such as VCCANA (5.5 A) and P1V8 (4 A). The device employs D-CAP+ mode control to provide fast load transient performance. Internal compensation allows ease of use and reduces external components. The device also provides telemetry, including input voltage, output voltage, output current and temperature reporting. Over voltage, over current and over temperature protections are provided as well. The TPS53820 device is packaged in a thermally enhanced 35-pin QFN and operates between –40°C and 125°C.

Table 1. Device Information (1)
PART NUMBER: TPS53820; PACKAGE: RWZ (35); BODY SIZE (NOM): 5 mm × 5 mm
(1) For all available packages, see the orderable addendum at the end of the data sheet.

2 Applications -

Introduction to Intel® FPGA IP Cores

Introduction to Intel® FPGA IP Cores. Updated for Intel® Quartus® Prime Design Suite: 20.3. UG-01056 | 2020.11.09

Contents
1. Introduction to Intel® FPGA IP Cores
1.1. IP Catalog and Parameter Editor
1.1.1. The Parameter Editor
1.2. Installing and Licensing Intel FPGA IP Cores
1.2.1. Intel FPGA IP Evaluation Mode
1.2.2. Checking the IP License Status
1.2.3. Intel FPGA IP Versioning
1.2.4. Adding IP to IP Catalog
1.3. Best Practices for Intel FPGA IP
1.4. IP General Settings
1.5. Generating IP Cores (Intel Quartus Prime Pro Edition)
1.5.1. IP Core Generation Output (Intel Quartus Prime Pro Edition)
1.5.2. Scripting IP Core Generation -

Introducing the New 11th Gen Intel® Core™ Desktop Processors

Product Brief: 11th Gen Intel® Core™ Desktop Processors

Introducing the New 11th Gen Intel® Core™ Desktop Processors

The 11th Gen Intel® Core™ desktop processor family puts you in control of your compute experience. It features an innovative new architecture for reimagined performance, immersive display and graphics for incredible visuals, and a range of options and technologies for enhanced tuning. When these advances come together, you have everything you need for fast-paced professional work, elite gaming, inspired creativity, and extreme tuning. The 11th Gen Intel® Core™ desktop processor family gives you the power to perform, compete, excel, and power your greatest contributions.

Reimagined Performance

11th Gen Intel® Core™ desktop processors are intelligently engineered to push the boundaries of performance. The new processor core architecture transforms hardware and software efficiency and takes advantage of Intel® Deep Learning Boost to accelerate AI performance. Key platform improvements include memory support up to DDR4-3200, up to 20 CPU PCIe 4.0 lanes,¹ integrated USB 3.2 Gen 2x2 (20G), and Intel® Optane™ memory H20 with SSD support.² Together, these technologies bring the power and the intelligence you need to supercharge productivity, stay in the creative flow, and game at the highest level.

Experience rich, stunning, seamless visuals with the high-performance graphics on 11th Gen Intel® Core™ desktop -

Intel® Omni-Path Architecture (Intel® OPA) for Machine Learning

The Intel® Omni-Path Architecture (OPA) for Machine Learning. Sponsored by Intel. Srini Chari, Ph.D., MBA and M. R. Pamidi Ph.D. December 2017. www.cabotpartners.com

Executive Summary

Machine Learning (ML), a major component of Artificial Intelligence (AI), is rapidly evolving and significantly improving growth, profits and operational efficiencies in virtually every industry. This is being driven – in large part – by continuing improvements in High Performance Computing (HPC) systems and related innovations in software and algorithms to harness these HPC systems. However, there are several barriers to implement Machine Learning (particularly Deep Learning – DL, a subset of ML) at scale:

• It is hard for HPC systems to perform and scale to handle the massive growth of the volume, velocity and variety of data that must be processed.
• Implementing DL requires deploying several technologies: applications, frameworks, libraries, development tools and reliable HPC processors, fabrics and storage. This is hard, laborious and very time-consuming.
• Training followed by Inference are two separate ML steps. Training traditionally took days/weeks, whereas Inference was near real-time. Increasingly, to make more accurate inferences, faster re-Training on new data is required. So, Training must now be done in a few hours. This requires novel parallel computing methods and large-scale high-performance systems/fabrics.

To help clients overcome these barriers and unleash AI/ML innovation, Intel provides a comprehensive ML solution stack with multiple technology options. Intel’s pioneering research in parallel ML algorithms and the Intel® Omni-Path Architecture (OPA) fabric minimize communications overhead and improve ML computational efficiency at scale. -

Intel® Arria® 10 Device Overview

Intel® Arria® 10 Device Overview. A10-OVERVIEW | 2020.10.20

Contents
Intel® Arria® 10 Device Overview
Key Advantages of Intel Arria 10 Devices
Summary of Intel Arria 10 Features
Intel Arria 10 Device Variants and Packages
Intel Arria 10 GX
Intel Arria 10 GT
Intel Arria 10 SX
I/O Vertical Migration for Intel Arria 10 Devices
Adaptive Logic Module
Variable-Precision DSP Block
Embedded Memory Blocks
Types of Embedded Memory
Embedded Memory Capacity in -

Multiprocessing Contents

Multiprocessing

Contents
1 Multiprocessing
1.1 Pre-history
1.2 Key topics
1.2.1 Processor symmetry
1.2.2 Instruction and data streams
1.2.3 Processor coupling
1.2.4 Multiprocessor Communication Architecture
1.3 Flynn’s taxonomy
1.3.1 SISD multiprocessing
1.3.2 SIMD multiprocessing
1.3.3 MISD multiprocessing
1.3.4 MIMD multiprocessing
1.4 See also
1.5 References
2 Computer multitasking
2.1 Multiprogramming
2.2 Cooperative multitasking
2.3 Preemptive multitasking
2.4 Real time
2.5 Multithreading
2.6 Memory protection
2.7 Memory swapping
2.8 Programming
2.9 See also
2.10 References -

Multiprocessing and Scalability

Multiprocessing and Scalability. A.R. Hurson, Computer Science and Engineering, The Pennsylvania State University. Fall 2004.

Large-scale multiprocessor systems have long held the promise of substantially higher performance than traditional uni-processor systems. However, due to a number of difficult problems, the potential of these machines has been difficult to realize. This is because of:

• Advances in technology: the rate of increase in performance of uni-processors.
• Complexity of multiprocessor system design: this drastically affected the cost and implementation cycle.
• Programmability of multiprocessor systems: the design complexity of parallel algorithms and parallel programs.

Programming a parallel machine is more difficult than programming a sequential one. In addition, it takes much more effort to port an existing sequential program to a parallel machine than to a newly developed sequential machine. The lack of good parallel programming environments and standard parallel languages has further aggravated this issue.

As a result, the absolute performance of many early concurrent machines was not significantly better than that of available or soon-to-be-available uni-processors.

Recently, there has been an increased interest in large-scale or massively parallel processing systems. This interest stems from many factors, including: Advances in integrated technology. Very -

Design of a Message Passing Interface for Multiprocessing with Atmel Microcontrollers

DESIGN OF A MESSAGE PASSING INTERFACE FOR MULTIPROCESSING WITH ATMEL MICROCONTROLLERS. A Design Project Report Presented to the Engineering Division of the Graduate School of Cornell University in Partial Fulfillment of the Requirements of the Degree of Master of Engineering (Electrical) by Kalim Moghul. Project Advisor: Dr. Bruce R. Land. Degree Date: May 2006.

Abstract. Master of Electrical Engineering Program, Cornell University, Design Project Report. Project Title: Design of a Message Passing Interface for Multiprocessing with Atmel Microcontrollers. Author: Kalim Moghul. Abstract: Microcontrollers are versatile integrated circuits typically incorporating a microprocessor, memory, and I/O ports on a single chip. These self-contained units are central to embedded systems design where low-cost, dedicated processors are often preferred over general-purpose processors. Embedded designs may require several microcontrollers, each with a specific task. Efficient communication is central to such systems. The focus of this project is the design of a communication layer and application programming interface for exchanging messages among microcontrollers. In order to demonstrate the library, a small-scale cluster computer is constructed using Atmel ATmega32 microcontrollers as processing nodes and an ATmega16 microcontroller for message routing. The communication library is integrated into aOS, a preemptive multitasking real-time operating system for Atmel microcontrollers. Report Approved by Project Advisor:__________________________________ Date:___________

Executive Summary. Microcontrollers are versatile integrated circuits typically incorporating a microprocessor, memory, and I/O ports on a single chip. These self-contained units are central to embedded systems design where low-cost, dedicated processors are often preferred over general-purpose processors. Some embedded designs may incorporate several microcontrollers, each with a specific task. -

4-Phase, 140-A Reference Design for Intel® Stratix® 10 GX FPGAs Using

TI Designs: PMP20176. Four-Phase, 140-A Reference Design for Intel® Stratix® 10 GX FPGAs Using TPS53647

Description
This reference design focuses on providing a compact, high-performance, multiphase solution suitable for powering Intel® Stratix® 10 GX FPGAs with a specific focus on the 1SG280-1IV variant. Integrated PMBus™ allows for easy output voltage setting and telemetry of key design parameters. The design enables programming, configuration, smart VID adjustment, and control of the power supply, while providing monitoring of input and output voltage, current, power, and temperature. TI's Fusion Digital Power™ Designer is used for programming, monitoring, validation, and characterization of the FPGA power design.

Features
• All Ceramic Output Capacitors
• D-CAP+™ Modulator for Superior Current-Sharing Capabilities and Transient Response
• Peak Efficiency of 91.5% at 400 kHz, VIN = 12 V, VOUT = 0.9 V, IOUT = 60 A
• Excellent Thermal Performance Under No Airflow Conditions
• Overvoltage, Overcurrent, and Overtemperature Protection
• PMBus Compatibility for Output Voltage Setting and Telemetry for VIN, VOUT, IOUT, and Temperature
• PMBus and Pinstrapping Programming Options

Resources
• PMP20176: Design Folder
• TPS53647: Product Folder
• CSD95472Q5MC: Product Folder
• Fusion Digital Power Designer: Product Folder

Applications
• FPGA Core Rail Power
• Ethernet Switches
• Firewalls and Routers
• Telecom and Base Band Units
• Test and Measurement

[Block diagram: TPS53647 four-phase controller, four CSD95472Q5MC smart power stages, Stratix® 10 GX core rail, onboard load generator, Intel® load slammers]

Copyright © 2017, Texas Instruments Incorporated. An IMPORTANT NOTICE at the end of this TI reference design addresses authorized use, intellectual property matters and other important disclaimers and information.