Kindergarten Readiness Assessment South Carolina Technical Report For

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

Technical Reference Manual for the Standardization of Geographical Names United Nations Group of Experts on Geographical Names

ST/ESA/STAT/SER.M/87 Department of Economic and Social Affairs Statistics Division Technical reference manual for the standardization of geographical names United Nations Group of Experts on Geographical Names United Nations New York, 2007 The Department of Economic and Social Affairs of the United Nations Secretariat is a vital interface between global policies in the economic, social and environmental spheres and national action. The Department works in three main interlinked areas: (i) it compiles, generates and analyses a wide range of economic, social and environmental data and information on which Member States of the United Nations draw to review common problems and to take stock of policy options; (ii) it facilitates the negotiations of Member States in many intergovernmental bodies on joint courses of action to address ongoing or emerging global challenges; and (iii) it advises interested Governments on the ways and means of translating policy frameworks developed in United Nations conferences and summits into programmes at the country level and, through technical assistance, helps build national capacities. NOTE The designations employed and the presentation of material in the present publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. The term “country” as used in the text of this publication also refers, as appropriate, to territories or areas. Symbols of United Nations documents are composed of capital letters combined with figures. ST/ESA/STAT/SER.M/87 UNITED NATIONS PUBLICATION Sales No. -

A Global Leader in Pre-K–12 Education Technology

A global leader in pre-K–12 education technology. ©Copyright 2020 Renaissance Learning Inc. All rights reserved. Assessing for Kindergarten Readiness, Isabel Turner, July 14, 2021. Our mission: “To accelerate learning for all children and adults of all ability levels and ethnic and social backgrounds, worldwide.” Agenda: (1) Defining Kindergarten Readiness: purpose for screening; (2) K Readiness Assessment: preparing your students, supporting ELCs, student and admin experience; (3) Support and Resources. Defining Kindergarten Readiness. Who takes the test? • All public school and charter school K • All public Pre-K • All Collaborative Pre-K • School 500 K students. When do they test? • Pre-Test in Fall: July 22 – September 24 (test within first 30 school days) • Post-Test in Spring: March 21, 2022 – April 29, 2022 • Key Dates Document. Metrics • Kindergarten: Fall – SS 530; Spring – SS 681 • Pre-K: Spring – SS 498. Purpose for Universal Screening. Star items are on the Star scale (300 to 900). Learning progression skills are on the Star scale: student performance is reported as a Scaled Score (SS) between 300 and 900 (675 is marked on the slide). Basics of Star Early Literacy • Computer Adaptive • Designed for Pre-K through grade 3 non-readers • Assess within 3 domains (including early numeracy) • 27 items • Scores most closely align with a student’s age • Preferences are available -

Important Papers KRA Days and the First Days

We are very happy to welcome you to Marysville Schools and our Kindergarten program at Raymond Elementary! This is a wonderful time for you and your kindergartner! Whether this is your first child entering kindergarten or your last, you should find the following letter filled with information to make those first days and weeks a bit easier. Our goal is for you to feel comfortable and have many of your questions answered before the first day of school. We are pleased to introduce our new kindergarten teacher, Mrs. Jess Gompf. Mrs. Gompf was a substitute teacher for the district last year and she also has experience teaching kindergarten at a private school. Her passion for students, strong work ethic and knowledge of kindergarten learning standards made her a standout during the interview process! Our kindergartners will attend school on “red” days again this year. Please remember that the Red Class attends school on Mondays/Thursdays and alternating Wednesdays. You will want to refer to your Kindergarten Calendar for specific days and more information regarding our school calendar. We are including a copy of this calendar and it can also be found on the Marysville Schools website at www.marysville.k12.oh.us. Our families who are choosing the All Day/Every Day option will be attending Edgewood Elementary this year. We would also like to wish Mrs. Weiser the best of luck as she continues her career at Navin Elementary this year. Mrs. Weiser made the tough decision to move to Navin when an All Day/Every Day class became available. -

Cataloging Service Bulletin 004, Spring 1979

LIBRARY OF CONGRESS/WASHINGTON CATALOGING SERVICE BULLETIN PROCESSING SERVICES Number 4, Spring 1979 Editor: Robert M. Hiatt CONTENTS GENERAL Correspondence Addressed to the Library of Congress Indexes DESCRIPTIVE CATALOGING Correspondence of Interest to Other Librarians Rule Interpretations Revised Corporate Name Headings Cataloging of Videorecordings IFLA Draft Recommendations SUBJECT HEADINGS Changing Subject Headings and Closing the Catalogs Policy on Policy Statements Multiples in LCSH "Compends" Subscriptions to and additional copies of Cataloging Service Bulletin are available upon request and at no charge from the Cataloging Distribution Service, Library of Congress, Building 159, Navy Yard Annex, Washington, D.C. 20541. Library of Congress Catalog Card Number 78-51400 ISSN 0160-8029 Key title: Cataloging service bulletin CONTENTS (Cont'd) LC CLASSIFICATION Classifying Works on Library Resources Erratum DECIMAL CLASSIFICATION Dewey 19 GENERAL Correspondence Addressed to the Library of Congress The Library of Congress welcomes inquiries regarding cataloging matters. In order to expedite replies, please write directly to the LC officer responsible for the area of the inquiry, as indicated below. Replies will be returned as soon as practicable. This is a revision of the list that appeared in Cataloging Service, bulletin 119. At the Library of Congress most cataloging is divided administratively into descriptive cataloging and subject cataloging. The term descriptive cataloging refers to the choice and form of the main entry heading, bibliographic description, and added entries (secondary entries numbered with roman numerals), and subject cataloging refers to subject headings (secondary entries numbered with arabic numerals) and the LC classification system (including cuttering). -

1 Symbols (2286)

1 Symbols (2286) USV Symbol Macro(s) Description 0009 \textHT <control> 000A \textLF <control> 000D \textCR <control> 0022 ” \textquotedbl QUOTATION MARK 0023 # \texthash NUMBER SIGN \textnumbersign 0024 $ \textdollar DOLLAR SIGN 0025 % \textpercent PERCENT SIGN 0026 & \textampersand AMPERSAND 0027 ’ \textquotesingle APOSTROPHE 0028 ( \textparenleft LEFT PARENTHESIS 0029 ) \textparenright RIGHT PARENTHESIS 002A * \textasteriskcentered ASTERISK 002B + \textMVPlus PLUS SIGN 002C , \textMVComma COMMA 002D - \textMVMinus HYPHEN-MINUS 002E . \textMVPeriod FULL STOP 002F / \textMVDivision SOLIDUS 0030 0 \textMVZero DIGIT ZERO 0031 1 \textMVOne DIGIT ONE 0032 2 \textMVTwo DIGIT TWO 0033 3 \textMVThree DIGIT THREE 0034 4 \textMVFour DIGIT FOUR 0035 5 \textMVFive DIGIT FIVE 0036 6 \textMVSix DIGIT SIX 0037 7 \textMVSeven DIGIT SEVEN 0038 8 \textMVEight DIGIT EIGHT 0039 9 \textMVNine DIGIT NINE 003C < \textless LESS-THAN SIGN 003D = \textequals EQUALS SIGN 003E > \textgreater GREATER-THAN SIGN 0040 @ \textMVAt COMMERCIAL AT 005C \ \textbackslash REVERSE SOLIDUS 005E ^ \textasciicircum CIRCUMFLEX ACCENT 005F _ \textunderscore LOW LINE 0060 ‘ \textasciigrave GRAVE ACCENT 0067 g \textg LATIN SMALL LETTER G 007B { \textbraceleft LEFT CURLY BRACKET 007C | \textbar VERTICAL LINE 007D } \textbraceright RIGHT CURLY BRACKET 007E ~ \textasciitilde TILDE 00A0 \nobreakspace NO-BREAK SPACE 00A1 ¡ \textexclamdown INVERTED EXCLAMATION MARK 00A2 ¢ \textcent CENT SIGN 00A3 £ \textsterling POUND SIGN 00A4 ¤ \textcurrency CURRENCY SIGN 00A5 ¥ \textyen YEN SIGN 00A6 -

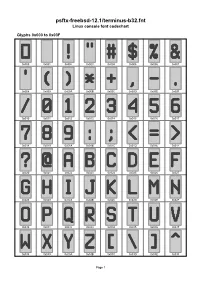

psftx-freebsd-12.1/terminus-b32.fnt Linux Console Font Codechart

psftx-freebsd-12.1/terminus-b32.fnt Linux console font codechart. Glyphs 0x000 to 0x03F (page 1); glyphs 0x040 to 0x07F (page 2); glyphs 0x080 to 0x0BF (page 3); glyphs 0x0C0 to 0x0FF -

The Brill Typeface User Guide & Complete List of Characters

The Brill Typeface User Guide & Complete List of Characters Version 2.06, October 31, 2014 Pim Rietbroek Preamble Few typefaces – if any – allow the user to access every Latin character, every IPA character, every diacritic, and to have these combine in a typographically satisfactory manner, in a range of styles (roman, italic, and more); even fewer add full support for Greek, both modern and ancient, with specialised characters that papyrologists and epigraphers need; not to mention coverage of the Slavic languages in the Cyrillic range. The Brill typeface aims to do just that, and to be a tool for all scholars in the humanities; for Brill’s authors and editors; for Brill’s staff and service providers; and finally, for anyone in need of this tool, as long as it is not used for any commercial gain.* There are several fonts in different styles, each of which has the same set of characters as all the others. The Unicode Standard is rigorously adhered to: there is no dependence on the Private Use Area (PUA), as it happens frequently in other fonts with regard to characters carrying rare diacritics or combinations of diacritics. Instead, all alphabetic characters can carry any diacritic or combination of diacritics, even stacked, with automatic correct positioning. This is made possible by the inclusion of all of Unicode’s combining characters and by the application of extensive OpenType Glyph Positioning programming. Credits The Brill fonts are an original design by John Hudson of Tiro Typeworks. Alice Savoie contributed to Brill bold and bold italic. The black-letter (‘Fraktur’) range of characters was made by Karsten Lücke. -

A Generalization of the Farkas and Kra Partition Theorem for Modulus 7

A GENERALIZATION OF THE FARKAS AND KRA PARTITION THEOREM FOR MODULUS 7 S. OLE WARNAAR Dedicated to George Andrews on the occasion of his 65th birthday Abstract. We prove generalizations of some partition theorems of Farkas and Kra. 1. The Farkas and Kra partition theorem and its generalization In a recent talk given at the University of Melbourne, Hershel Farkas asked for a(nother) proof of the following elegant partition theorem. Theorem 1.1 (Farkas and Kra [2],[3, Chapter 7]). Consider the positive integers such that multiples of 7 occur in two copies, say $7k$ and $\overline{7k}$. Let $E(n)$ be the number of partitions of the even integer $2n$ into distinct even parts and let $O(n)$ be the number of partitions of the odd integer $2n + 1$ into distinct odd parts. Then $E(n) = O(n)$. For example, $E(9) = O(9) = 9$, with the following admissible partitions of 18 and 19: $(18)$, $(16, 2)$, $(14, 4)$, $(\overline{14}, 4)$, $(12, 6)$, $(12, 4, 2)$, $(10, 8)$, $(10, 6, 2)$, $(8, 6, 4)$ and $(19)$, $(15, 3, 1)$, $(13, 5, 1)$, $(11, 7, 1)$, $(11, \overline{7}, 1)$, $(11, 5, 3)$, $(9, 7, 3)$, $(9, \overline{7}, 3)$, $(7, \overline{7}, 5)$. Farkas and Kra proved their theorem as follows. It is not difficult to see that the generating function identity underlying Theorem 1.1 is $$(-q; q^2)_\infty (-q^7; q^{14})_\infty - (q; q^2)_\infty (q^7; q^{14})_\infty = 2q\,(-q^2; q^2)_\infty (-q^{14}; q^{14})_\infty, \tag{1}$$ where $(a; q)_\infty = \prod_{n \ge 0} (1 - a q^n)$. -
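
The statement of Theorem 1.1 can be checked numerically for small n. The following is a minimal sketch in Python, not taken from the paper: the helper names `count_distinct_partitions`, `E`, and `O` are chosen here for illustration, and the two copies of each multiple of 7 are modelled as two separate items in a subset-sum count.

```python
def count_distinct_partitions(total, parity):
    """Count partitions of `total` into distinct parts of one parity
    (0 = even parts, 1 = odd parts), where every multiple of 7 is
    available in two distinguishable copies (7k and its barred twin)."""
    parts = []
    for p in range(1, total + 1):
        if p % 2 == parity:
            parts.append(p)
            if p % 7 == 0:
                parts.append(p)  # the second, barred copy of a multiple of 7

    # 0/1 subset-sum count: each available part is used at most once.
    ways = [0] * (total + 1)
    ways[0] = 1
    for p in parts:
        for s in range(total, p - 1, -1):
            ways[s] += ways[s - p]
    return ways[total]


def E(n):
    """Partitions of 2n into distinct even parts."""
    return count_distinct_partitions(2 * n, 0)


def O(n):
    """Partitions of 2n + 1 into distinct odd parts."""
    return count_distinct_partitions(2 * n + 1, 1)


if __name__ == "__main__":
    print(E(9), O(9))  # 9 9, matching the example in the theorem
    print(all(E(n) == O(n) for n in range(50)))
```

A brute-force check like this only confirms the identity for the values tested; the proof rests on the generating function identity (1).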

On Circulant Matrices Irwin Kra and Santiago R

On Circulant Matrices Irwin Kra and Santiago R. Simanca Some mathematical topics—circulant matrices, in particular—are pure gems that cry out to be admired and studied with different techniques or perspectives in mind. Our work on this subject was originally motivated by the apparent need of the first author to derive a specific result, in the spirit of Proposition 24, to be applied in his investigation of theta constant identities [9]. Although progress on that front eliminated the need for such a theorem, the search for it continued and was stimulated by enlightening conversations with Yum-Tong Siu during a visit to Vietnam. Upon the first author’s return to the U.S., a visit by Paul Fuhrmann brought to his attention a vast literature on the subject, including the monograph [4]. Conversations in the Stony Brook mathematics common room at- [...] years, the authors’ interest was rekindled by the casual discovery by S. Simanca that these matrices are connected with algebraic geometry over the mythical field of one element. Circulant matrices are prevalent in many parts of mathematics (see, for example, [8]). We point the reader to the elegant treatment given in [4, §5.2] and to the monograph [1] devoted to the subject. These matrices appear naturally in areas of mathematics where the roots of unity play a role, and some of the reasons for this will unfurl in our presentation. However ubiquitous they are, many facts about these matrices can be proven using only basic linear algebra. This makes the area quite accessible to undergraduates looking for research problems or mathematics teachers searching for topics of unique interest to present to their students. -

Multilingualism, the Needs of the Institutions of the European Community

COMMISSION OF THE EUROPEAN COMMUNITIES, TRANSLATION SERVICE, Informatics SdT-02. Brussels, 30 July 1992. VERSION 4. (92) D/466. MULTILINGUALISM: The needs of the Institutions of the European Community. Provisional address: rue de la Loi 200, B-1049 Brussels, Belgium. Telephone: direct line 295.00.94; switchboard 299.11.11. Telex: COMEU B21877. Telegraphic address: COMEUR Bruxelles. Fax: 295.89.33. Author: P. Alevantis, Revisor: Dorothy Senez, Document: D:\ALE\DOC\MUL9206.wp, Produced with WORDPERFECT for WINDOWS v. 5.1. Multilingualism V.4 - page 2 this page is left blank TABLE OF CONTENTS 0. INTRODUCTION 1. LANGUAGES 2. CHARACTER RÉPERTOIRE 3. ORDERING 4. CODING 5. KEYBOARDS ANNEXES 0. DEFINITIONS 1. LANGUAGES 2. CHARACTER RÉPERTOIRE 3. ADDITIONAL INFORMATION CONCERNING ORDERING 4. LIST OF KEYBOARDS REFERENCES 0. INTRODUCTION The Institutions of the European Community produce documents in all 9 official languages of the Community (French, English, German, Italian, Dutch, Danish, Greek, Spanish and Portuguese). The need to handle all these languages at the same time is a political obligation which stems from the Treaties and cannot be questioned. The creation of the European Economic Space, which links the European Economic Community with the countries of the European Free Trade Association (EFTA), together with the continuing improvement in collaboration with the countries of Central and Eastern Europe, obliges the European Institutions to plan for the regular production of documents in European languages other than the 9 official ones on a medium-term basis (i.e. -

The Unicode Standard, Version 3.0, Issued by the Unicode Consortium and Published by Addison-Wesley

The Unicode Standard Version 3.0 The Unicode Consortium ADDISON–WESLEY An Imprint of Addison Wesley Longman, Inc. Reading, Massachusetts · Harlow, England · Menlo Park, California Berkeley, California · Don Mills, Ontario · Sydney Bonn · Amsterdam · Tokyo · Mexico City Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Addison-Wesley was aware of a trademark claim, the designations have been printed in initial capital letters. However, not all words in initial capital letters are trademark designations. The authors and publisher have taken care in preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode®, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. If these files have been purchased on computer-readable media, the sole remedy for any claim will be exchange of defective media within ninety days of receipt. Dai Kan-Wa Jiten used as the source of reference Kanji codes was written by Tetsuji Morohashi and published by Taishukan Shoten. ISBN 0-201-61633-5 Copyright © 1991-2000 by Unicode, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of the publisher or Unicode, Inc. -

Predicting the Window Period of Stroke by Ocular Risk Factors

Journal of Clinical and Experimental Ophthalmology ISSN: 2155-9570 Short Communication Predicting the Window Period of Stroke by Ocular Risk Factors Sanjoy Chowdhury* Department of Ophthalmology, Toho University Sakura Medical Center, Chiba, India ABSTRACT Aim: To predict the window period of stroke (CVA) by analyzing ocular risk factors. Method: A retrospective study was performed in all consenting patients with diagnosis of stroke made by the department of neurology. Conclusion: Ocular risk factors can predict imminent stroke. HTN retinopathy and diabetic retinopathy are the long-term risk factors, whereas BRAO, CRAO and CRVO are short-term risk factors. Hence early prediction can help in early management, thus reducing the occurrence of stroke. Keywords: Diabetic Retinopathy; Bacterial identification; HTN retinopathy; Hypertensive retinopathy INTRODUCTION Stroke is a major health problem characterized by sudden onset of focal and neurological signs and symptoms caused by cerebrovascular origin by rapidly developing focal or global disturbances of cerebral function lasting >24hr [1]. There is increasing evidence that small-vessel disease is a systemic [...] and might act as surrogate markers for cerebral small-vessel diseases [6]. In the last decade there have been several studies which have consistently shown that retinal micro vascular signs are predictors of clinical stroke events [7], stroke death [8] and are associated with sub-clinical measures of cerebral disease [9]. In this study we aimed to predict the window period of stroke by the above ocular risk factors [10].