Boosting Shared-Memory Supercomputing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Inside Intel® Core™ Microarchitecture Setting New Standards for Energy-Efficient Performance

White Paper: Inside Intel® Core™ Microarchitecture, Setting New Standards for Energy-Efficient Performance. Ofri Wechsler, Intel Fellow, Mobility Group; Director, Mobility Microprocessor Architecture, Intel Corporation.

Contents: Introduction; Intel® Core™ Microarchitecture Design Goals; Delivering Energy-Efficient Performance; Intel® Core™ Microarchitecture Innovations; Intel® Wide Dynamic Execution; Intel® Intelligent Power Capability; Intel® Advanced Smart Cache; Intel® Smart Memory Access; Intel® Advanced Digital Media Boost; Intel® Core™ Microarchitecture and Software; Summary; Learn More; Author Biographies.

Introduction: The Intel® Core™ microarchitecture is a new foundation for Intel® architecture-based desktop, mobile, and mainstream server multi-core processors. This state-of-the-art multi-core optimized and power-efficient microarchitecture is designed to deliver increased performance and performance-per-watt, thus increasing overall energy efficiency. This new microarchitecture extends the energy efficient philosophy first delivered in Intel's mobile microarchitecture found in the Intel® Pentium® M processor, and greatly enhances it with many new and leading edge microarchitectural innovations as well as existing Intel NetBurst® microarchitecture features. What's more, it incorporates many new and significant innovations designed to optimize the power, performance, and scalability of multi-core processors.

The Intel Core microarchitecture shows Intel's continued innovation by delivering both greater energy efficiency and compute capability required for the new workloads and usage models now making their way across computing. With its higher performance and low power, the new Intel Core microarchitecture will be the basis for many new solutions and form factors. In the home, these include higher performing, ultra-quiet, sleek and low-power computer designs, and new advances in more sophisticated, user-friendly entertainment systems.

Data Sampling on MDS-Resistant 10th Generation Intel Core (Ice Lake)

Data Sampling on MDS-resistant 10th Generation Intel Core (Ice Lake). Daniel Moghimi, Worcester Polytechnic Institute, Worcester, MA, USA.

Abstract: Microarchitectural Data Sampling (MDS) is a set of hardware vulnerabilities in Intel CPUs that allows an attacker to leak bytes of data from memory loads and stores across various security boundaries. On affected CPUs, some of these vulnerabilities were patched via microcode updates. Additionally, Intel announced that the newest microarchitectures, namely Cascade Lake and Ice Lake, were not affected by MDS. While Cascade Lake turned out to be vulnerable to the ZombieLoad v2 MDS attack (also known as TAA), Ice Lake was not affected by this attack. In this technical report, we show a variant of MSBDS (CVE-2018-12126), an MDS attack, also known as Fallout, that works on Ice Lake CPUs. This variant was automatically [...]

[...] the store buffer. MSBDS has been shown in attacks on the kernel space as well as on SGX [1]. In addition to the leakage component, MSBDS can also be turned around easily to transiently inject values into a victim, an attack technique known as Load Value Injection (LVI) [14].

To mitigate these vulnerabilities, Intel released software workarounds and microcode updates for affected CPUs [4]. One of the most recent microarchitectures, Ice Lake, is reported to be unaffected by MDS attacks [6,8]. Intel has also explicitly listed Ice Lake processors as not being vulnerable to LVI-SB [14], which exploits MSBDS for Load Value Injection [7].

To better analyze the MDS vulnerabilities, and potentially find new variants, Moghimi et al.

8th Generation Intel® Core™ Processors Product Brief

PRODUCT BRIEF 8TH GENERATION INTEL® CORE™ PROCESSORS Mobile U-Series: Peak Performance on the Go

The new 8th generation Intel® Core™ Processor U-series, for sleek notebooks and 2 in 1s, elevates your computing experience with an astounding 40 percent leap in productivity performance over 7th Gen PCs, brilliant 4K Ultra HD entertainment, and easier, more convenient ways to interact with your PC. With 10-hour battery life and robust I/O support, Intel's first quad-core U-series processors enable portable, powerhouse thin and light PCs, so you can accomplish more on the go.

Extraordinary Performance and Responsiveness: With Intel's latest power-efficient microarchitecture, advanced process technology, and silicon optimizations, the 8th generation Intel Core processor (U-series) is Intel's fastest 15W processor, with up to 40 percent greater productivity than 7th Gen processors and 2X more productivity vs. comparable 5-year-old processors.

• Get fast and responsive web browsing with Intel® Speed Shift Technology.

• Intel® Turbo Boost Technology 2.0 lets you work more productively by dynamically controlling power and speed across cores and graphics, boosting performance precisely when it is needed, while saving energy when it counts.

• With up to four cores, 8th generation Intel Core Processor U-series with Intel® Hyper-Threading Technology (Intel® HT Technology) supports up to eight threads, making everyday content creation a compelling experience on 2 in 1s and ultra-thin clamshells.

• For those on the go, PCs enabled with Microsoft Windows* Modern Standby wake instantly at the push of a button, so you don't have to wait for your system to start up.

6th Gen Intel® Core™ Desktop Processor Product Brief

Product Brief 6th Gen Intel® Core™ Processors for Desktops: S-series

LOOKING FOR AN AMAZING PROCESSOR for your next desktop PC? Look no further than 6th Gen Intel® Core™ processors. With amazing performance and stunning visuals, PCs powered by 6th Gen Intel Core processors will help take things to the next level and transform how you use a PC. The performance of 6th Gen Intel Core processors enables great user experiences today and in the future, including no passwords and more natural user interfaces. When paired with Intel® RealSense™ technology and Windows* 10, 6th Gen Intel Core processors can help remove the hassle of remembering and typing in passwords.

RESPONSIVE PERFORMANCE: THAT EXTRA BURST OF PERFORMANCE

New architecture and design in 6th Gen Intel Core processors for desktops bring:

• Support for DDR4 RAM memory technology in mainstream platforms, allowing systems to have up to 64 GB of memory and higher transfer speeds at lower power when compared to DDR3 (DDR4 speed 2133 MT/s at 1.2 V vs. DDR3 speed 1600 MT/s at 1.5 V).

• 6th Gen Intel Core i7 and Core i5 processors come with Intel® Turbo Boost 2.0 Technology, which gives you that extra burst of performance for those jobs that require a bit more frequency.

• Intel® Hyper-Threading Technology allows each processor core to work on two tasks at the same time, improving multitasking, speeding up the workflow, and accomplishing more in less time. With the Intel Core i7 processor you can have up to 8 threads running at the same time.

Introducing the New 11th Gen Intel® Core™ Desktop Processors

Product Brief 11th Gen Intel® Core™ Desktop Processors: Introducing the New 11th Gen Intel® Core™ Desktop Processors

The 11th Gen Intel® Core™ desktop processor family puts you in control of your compute experience. It features an innovative new architecture for reimagined performance, immersive display and graphics for incredible visuals, and a range of options and technologies for enhanced tuning. When these advances come together, you have everything you need for fast-paced professional work, elite gaming, inspired creativity, and extreme tuning. The 11th Gen Intel® Core™ desktop processor family gives you the power to perform, compete, excel, and power your greatest contributions.

PERFORMANCE: Reimagined Performance. 11th Gen Intel® Core™ desktop processors are intelligently engineered to push the boundaries of performance. The new processor core architecture transforms hardware and software efficiency and takes advantage of Intel® Deep Learning Boost to accelerate AI performance. Key platform improvements include memory support up to DDR4-3200, up to 20 CPU PCIe 4.0 lanes, integrated USB 3.2 Gen 2x2 (20G), and Intel® Optane™ memory H20 with SSD support. Together, these technologies bring the power and the intelligence you need to supercharge productivity, stay in the creative flow, and game at the highest level.

Experience rich, stunning, seamless visuals with the high-performance graphics on 11th Gen Intel® Core™ desktop

HP 250 Notebook PC

HP 250 Notebook PC: Our value-priced notebook PC with powerful essentials ideal for everyday computing on the go. HP recommends Windows.

Built for mobile use: For professionals on the move, the HP 250 features a durable chassis that helps protect against the rigors of the day. Carry the HP 250 from meeting to meeting. Enjoy great views on the 15.6-inch diagonal HD display, without sacrificing portability.

Designed for productivity: Connect with colleagues online and email from the office or from your favorite hotspots with Wi-Fi CERTIFIED™ WLAN. From trainings to sales presentations, view high-quality multimedia and audio right from your notebook with preinstalled software and the built-in Altec Lansing speaker. Make an impact with presentations when you connect to larger HD displays through the HDMI port.

Powered for business: Tackle projects with the power of the Intel® third generation Core™ i3 or i5 dual-core processors. Develop stunning visuals without slowing down your notebook, thanks to Intel's integrated graphics. HP, a world leader in PCs, brings you Windows 8 Pro, designed to fit the needs of business.

SPECIFICATIONS

| Specification | Details |
| --- | --- |
| Operating system | Preinstalled: Windows 8 64; Windows 8 Pro 64; Ubuntu Linux; FreeDOS |
| Processor | 3rd Generation Intel® Core™ i5 & i3; 2nd Generation Intel® Core™ i3; Intel® Pentium®; Intel® Celeron® |
| Chipset | Mobile Intel® HM70 Express (Intel® Pentium® and Celeron® processors); Mobile Intel® HM75 Express (Intel® Core™ i3 and i5 processor) |
| Memory | DDR3 SDRAM (1600 MHz) |

Product Change Notification 109134

Product Change Notification 109134 - 00 Information in this document is provided in connection with Intel products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel’s Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Should you have any issues with the timeline or content of this change, please contact the Intel Representative(s) for your geographic location listed below. No response from customers will be deemed as acceptance of the change and the change will be implemented pursuant to the key milestones set forth in this attached PCN. Americas Contact: [email protected] Asia Pacific Contact: [email protected] Europe Email: [email protected] Japan Email: [email protected] Copyright © Intel Corporation 2009. Other names and brands may be claimed as the property of others. Celeron, Centrino, Intel, the Intel logo, Intel Core, Intel NetBurst, Intel NetMerge, Intel NetStructure, Intel SingleDriver, Intel SpeedStep, Intel StrataFlash, Intel Viiv, Intel XScale, Itanium, MMX, Paragon, PDCharm, Pentium, and Xeon are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. -

Intel Core i7-7700K Processor

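Intel Core i7-7700K Processor

| Section | Specification | Value |
| --- | --- | --- |
| | Product Collection | 7th Generation Intel® Core™ i7 Processors |
| | Code Name | Products formerly Kaby Lake |
| | Vertical Segment | Desktop |
| | Processor Number | i7-7700K |
| | Status | Launched |
| | Launch Date | Q1'17 |
| | Lithography | 14 nm |
| | Recommended Customer Price | $339.00 - $350.00 |
| Performance | # of Cores | 4 |
| Performance | # of Threads | 8 |
| Performance | Processor Base Frequency | 4.20 GHz |
| Performance | Max Turbo Frequency | 4.50 GHz |
| Performance | Cache | 8 MB SmartCache |
| Performance | Bus Speed | 8 GT/s DMI3 |
| Performance | # of QPI Links | 0 |
| Performance | TDP | 91 W |
| Supplemental Information | Embedded Options Available | No |
| Supplemental Information | Conflict Free | Yes |
| Memory Specifications | Max Memory Size (dependent on memory type) | 64 GB |
| Memory Specifications | Memory Types | DDR4-2133/2400, DDR3L-1333/1600 @ 1.35V |
| Memory Specifications | Max # of Memory Channels | 2 |
| Memory Specifications | ECC Memory Supported ‡ | No |
| Graphics Specifications | Processor Graphics ‡ | Intel® HD Graphics 630 |
| Graphics Specifications | Graphics Base Frequency | 350 MHz |
| Graphics Specifications | Graphics Max Dynamic Frequency | 1.15 GHz |
| Graphics Specifications | Graphics Video Max Memory | 64 GB |
| Graphics Specifications | 4K Support | Yes, at 60Hz |
| Graphics Specifications | Max Resolution (HDMI 1.4) ‡ | 4096x2304@24Hz |
| Graphics Specifications | Max Resolution (DP) ‡ | 4096x2304@60Hz |
| Graphics Specifications | Max Resolution (eDP - Integrated Flat Panel) ‡ | 4096x2304@60Hz |
| Graphics Specifications | DirectX* Support | 12 |
| Graphics Specifications | OpenGL* Support | 4.4 |
| Graphics Specifications | Intel® Quick Sync Video | Yes |
| Graphics Specifications | Intel® InTru™ 3D Technology | Yes |
| Graphics Specifications | Intel® Clear Video HD Technology | Yes |
| Graphics Specifications | Intel® Clear Video Technology | Yes |
| Graphics Specifications | # of Displays Supported ‡ | 3 |
| Graphics Specifications | Device ID | 0x5912 |
| Expansion Options | Scalability | 1S Only |
| Expansion Options | PCI Express Revision | 3.0 |
| Expansion Options | PCI Express Configurations ‡ | Up to 1x16, 2x8, 1x8+2x4 |
| Expansion Options | Max # of PCI Express Lanes | 16 |
| Package Specifications | Sockets Supported | FCLGA1151 |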

Inside Intel® Core™ Microarchitecture and Smart Memory Access: An In-Depth Look at Intel Innovations for Accelerating Execution of Memory-Related Instructions

White Paper: Inside Intel® Core™ Microarchitecture and Smart Memory Access. An In-Depth Look at Intel Innovations for Accelerating Execution of Memory-Related Instructions. Jack Doweck, Intel Principal Engineer, Merom Lead Architect, Intel Corporation.

White Paper: Intel Smart Memory Access and the Energy-Efficient Performance of the Intel Core Microarchitecture

Contents: Introduction; The Five Major Ingredients of Intel® Core™ Microarchitecture; Intel® Wide Dynamic Execution; Intel® Advanced Digital Media Boost; Intel® Intelligent Power Capability; Intel® Advanced Smart Cache; Intel® Smart Memory Access; How Intel Smart Memory Access Improves Execution Throughput; Memory Disambiguation; Predictor Lookup; Load Dispatch; Prediction Verification; Watchdog Mechanism; Instruction Pointer-Based (IP) Prefetcher to Level 1 Data Cache; Traffic Control and Resource Allocation; Prefetch Monitor; Summary; Author's Bio; Learn More; References.

Introduction: The Intel® Core™ microarchitecture is a new foundation for Intel® architecture-based desktop, mobile, and mainstream server multi-core processors. This state-of-the-art, power-efficient multi-core microarchitecture delivers increased performance and performance per watt, thus increasing overall energy efficiency. Intel Core microarchitecture extends the energy-efficient philosophy first delivered in Intel's mobile microarchitecture (Intel® Pentium® M processor), and greatly enhances it with many leading edge microarchitectural advancements, as well as some improvements on the best of Intel NetBurst® microarchitecture.

8th Generation Intel® Core™ Desktop Processors: Prepare to Be Amazed with the 8th Generation Intel® Core™ Desktop Processor Family

PRODUCT BRIEF 8TH GENERATION INTEL® CORE™ DESKTOP PROCESSORS: Prepare to be Amazed with the 8th Generation Intel® Core™ Desktop Processor Family

Be ready for amazing experiences in gaming, VR, and entertainment wherever your computing takes you with the 8th Generation Intel® Core™ processor family. This new generation of processors extends all the capabilities users have come to love in our desktop platforms with advanced innovations that deliver exciting new features to immerse you in incredible experiences on a variety of form factors.

Exceptional Platform Performance: The 8th Generation Intel Core processors redefine mainstream desktop PC performance with up to six cores for more processing power (that's two more cores than the previous generation Intel Core processor family), Intel® Turbo Boost 2.0 technology to increase the maximum turbo frequency up to 4.7 GHz, and up to 12 MB of cache memory. Intel® Hyper-Threading Technology delivers up to 12-way multitasking support in the latest generation of Intel Core processors. For the enthusiast, the unlocked 8th Generation Intel® Core™ i7-8700K processor provides you the opportunity to tweak the platform performance to its fullest potential and enjoy great gaming and VR experiences.

The new 8th Generation Intel Core processor family delivers:

• An impressive portfolio of standard and unlocked systems for a broad range of usages and performance levels.

• New system acceleration when paired with Intel® Optane™ memory to deliver amazing system responsiveness.

• Intel Turbo Boost 2.0 technology to give you that extra burst of performance when you need it.

• Intel Hyper-Threading technology, which allows each processor core to work on two tasks at the same time, improving multitasking, speeding up workflows, and accomplishing more in less time.

7th and 8th Generation Intel® Core™ Processor Family

7th and 8th Generation Intel® Core™ Processor Family Specification Update

Supporting 7th Generation Intel Core™ Processor Families based on Y/U/H/S-Processor Line, Y/U With iHDCP2.2-Processor Line, Intel Pentium Processors, Intel Celeron Processor and Intel Xeon E3-1200 v6 Processors. Supporting Intel Core™ X-Series Processor Families: 7740X, 7640X. Supporting 8th Generation Intel Core™ Processor Family for U 4-Core Platforms, formerly known as Kaby Lake-R, and Y 2-Cores, formerly known as Amber Lake Platform.

November 2019. Revision 011. Document Number: 334663-011.

You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at Intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

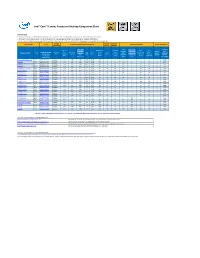

Intel® Core™ X-Series Processors Desktop Comparison Chart

Intel® Core™ X-series Processors Desktop Comparison Chart

INSTRUCTIONS

1. If the column heading is underlined, clicking on that will take you to a web site or document where you can get more information on that term.
2. Clicking on the individual processor number will take you to an Intel database that contains an expanded set of processor specifications.
3. Clicking on the individual box order code will take you to an Intel database that will show which Intel® Desktop Boards are compatible with that processor.

Column groups: Processor Name; Ordering; Package Specification; Frequency/Turbo/Cache/Cores/Threads/TDP; Processor Graphics; Security & Reliability; Advanced Technologies; Memory Specifications.

| Processor Number | Launch Year | Box Order Number (click to link to compatible motherboards) | Socket | Base Frequency (GHz) | Max Turbo Frequency (GHz) | Intel® Turbo Boost Max Technology 3.0 Frequency ‡ (GHz) | Cache (MB) | Cores/Threads | TDP (Thermal Design Power) (Watts) | Processor Graphics | Intel® AES New Instructions (AES) | Intel® Advanced Vector Extensions (AVX) | # of AVX-512 FMA (Fused Multiply Add) Units | Intel® Virtualization Technology (VT-x) | Intel® Optane™ Memory Supported | Max # of Memory Channels | Maximum DDR4 Memory Speed (MHz) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| i9-10980XE Extreme Edition | Q4'19 | BX8069510980XE | LGA2066 | 3.00 | 4.60 | 4.80 | 24.75 | 18/36 | 165 | No | Yes | Yes | 2 | Yes | Yes | 4 | 2933 |
| i9-10940X | Q4'19 | BX8069510940X | LGA2066 | 3.30 | 4.60 | 4.80 | 19.25 | 14/28 | 165 | No | Yes | Yes | 2 | Yes | Yes | 4 | 2933 |
| i9-10920X | Q4'19 | BX8069510920X | LGA2066 | 3.50 | 4.60 | 4.80 | 19.25 | 12/24 | 165 | No | Yes | Yes | 2 | Yes | Yes | 4 | 2933 |
| i9-10900X | | | | | | | | | | | | | | | | | |