2.4M Trouble Reports: Guide Probe Focus Failure. Thursday, February 27, 2020, 8:12 AM. Problem(s) Encountered: Guider Focus Would No Longer Change

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Q Casino Announces Four Shows from This Summer's Back Waters

Contact: Abby Ferguson | Marketing Manager 563-585-3002 | [email protected] February 28, 2018 - For Immediate Release Q Casino Announces Four Shows From This Summer’s Back Waters Stage Concert Lineup! Dubuque, IA - The Back Waters Stage, Presented by American Trust, returns this summer on Schmitt Island! Q Casino is proud to announce the return of our outdoor summer experience, Back Waters Stage. This summer, both national acts and local favorites will take the stage through community events and Q Casino hosted concerts. All ages are welcome to experience the excitement at this outdoor venue. Community members can expect to enjoy a wide range of concerts from all genres from modern country to rock. The Back Waters Stage will be sponsoring two great community festivals this summer. Kickoff to Summer will be kicking off the summer series with a free show on Friday, May 25. Summer’s Last Blast 19 which is celebrating 19 years of raising money for area charities including FFA, the Boy Scouts, Dubuque County Fairgrounds and Sertoma. Summer’s Last Blast features the area’s best entertainment with free admission on Friday, August 24 and Saturday, August 25. The Back Waters Stage Summer Concert Series starts off with country rappers Colt Ford and Moonshine Bandits on Saturday, June 16. Colt Ford made an appearance in the Q Showroom last March to a sold out crowd. Ford has charted six times on the Hot Country Songs charts and co-wrote “Dirt Road Anthem,” a song later covered by Jason Aldean. On Thursday, August 9, the Back Waters Stage switches gears to modern rock with platinum recording artist, Seether. -

Examining the Iceland - China Free-Trade Agreement

The Puffin and the Panda Examining the Iceland - China Free-trade Agreement The Lonesome Traveller is Out and About • Taking in Belgium • Is There Future For Music? Art Terrorist Returns • Books on the North Atlantic • Reykjavík International Film Festival + info. A Complete City Guide and Listings: Map, Dining, Music, Arts and Events Issue 15 // Sept 21 - Oct 4 2007 0_REYKJAVÍK_GRAPEVINE_ISSUE 15_YEAR 05_SEPTEMBER 1_OCTOBER 04 Articles The Reykjavík Grapevine 06 Future Music Vesturgata 5, 101 Reykjavík Interview with Gerd Leonhard www.grapevine.is [email protected] 08 Confessions of an Art-Terrorist, Part II www.myspace.com/reykjavikgrapevine Interview with Sigtryggur Baldursson Published by Fröken ehf. 10 Mount Esja: The New Couple’s Therapy Editorial: +354 540 3600 / [email protected] Advertising: +354 540 3605 / [email protected] 1 Airwaves Countdown Publisher: +354 540 3601 / [email protected] Three Weeks The Reykjavík Grapevine Staff 45 The Saga Museum in Perlan Publisher: Hilmar Steinn Grétarsson / [email protected] Editor: Sveinn Birkir Björnsson / [email protected] Assistant Editors: Steinunn Jakobsdóttir / [email protected] Features Editorial Intern: Valgerður Þóroddsdóttir / [email protected] Marketing Director: Jón Trausti Sigurðarson / [email protected] 16 Iceland – China Support Manager: Oddur Óskar Kjartansson / [email protected] Art Director: Gunnar Þorvaldsson / [email protected] Photographer: Gunnlaugur Arnar Sigurðsson / [email protected] Music & Nightlife Contributing writer: Ian Watson -

1 1 in the United States District Court for the Eastern District of Pennsylvania 2 American Civil Liberties : Civil Acti

1 1 IN THE UNITED STATES DISTRICT COURT FOR THE EASTERN DISTRICT OF PENNSYLVANIA 2 AMERICAN CIVIL LIBERTIES : CIVIL ACTION 3 UNION, ET AL : : 4 PLAINTIFF : : 5 : VS. : 6 : : 7 ALBERTO R. GONZALES, : IN HIS OFFICIAL CAPACITY AS : 8 ATTORNEY GENERAL OF THE : UNITED STATES : 9 : DEFENDANT : NO. 98-05591 10 THURSDAY, OCTOBER 26, 2006 11 COURTROOM 17-A PHILADELPHIA, PA 19106 12 ___________________________________________________ BEFORE THE HONORABLE LOWELL A. REED, JR. SJ 13 _______________________________________________________ NON-JURY TRIAL 14 DAY 4 _______________________________________________________ 15 APPEARANCES: 16 CHRISTOPHER A. HANSEN, ESQUIRE KATHARINE MARSHALL, ESQUIRE 17 ADEN J. FINE, ESQUIRE BEN WIZNER, ESQUIRE 18 CATHERINE CRUMP, ESQUIRE STEFANIE LAUGHLIN, ESQUIRE 19 AMERICAN CIVIL LIBERTIES UNION FOUNDATION 125 BROAD STREET 20 NEW YORK, NY 10004-2400 (212)549-2606 FOR THE PLAINTIFFS 21 SUZANNE R. WHITE, CM 22 FEDERAL CERTIFIED REALTIME REPORTER FIRST FLOOR U. S. COURTHOUSE 23 601 MARKET STREET PHILADELPHIA, PA 19106 24 (215)627-1882 25 PROCEEDINGS RECORDED BY STENOTYPE-COMPUTER, TRANSCRIPT PRODUCED BY COMPUTER-AIDED TRANSCRIPTION 2 1 APPEARANCES: (CONTINUED) 2 CHRISTOPHER HARRIS, ESQUIRE BENJAMIN SAHL, ESQUIRE 3 JEROEN VAN KWAWEGEN, ESQUIRE ADDISON F. GOLLODAY, ESQUIRE 4 BENJAMIN SAHL, ESQUIRE LATHAM & WATKINS 5 53RD AT THIRD, 885 THIRD AVENUE SUITE 1000 6 NEW YORK, NY 10022 (212) 906-1200 FOR THE PLAINTIFFS 7 8 U.S. DEPARTMENT OF JUSTICE CIVIL DIVISION 9 RAPHAEL O. GOMEZ, ESQUIRE THEODORE HIRT, ESQUIRE 10 ERIC J. BEANE, ESQUIRE KENNETH E. SEALLS, ESQUIRE 11 TAMARA ULRICH, ESQUIRE JOEL MCELVAIN, ESQUIRE 12 JAMES TODD, ESQUIRE ERIC J. BEANE, ESQUIRE 13 ISAAC R. CAMPBELL, ESQUIRE ROOM 6144 14 20 MASSACHUSETTS AVENUE, NW WASHINGTON, DC 20530 15 (202)514-1318 FOR THE DEFENDANT 16 17 18 19 20 21 22 23 24 25 3 1 (CLERK OPENS COURT.) 2 THE COURT: GOOD MORNING, ALL. -

Complaints Poor Customer Service Firestone Mansfield Ohio

Complaints Poor Customer Service Firestone Mansfield Ohio Eldon is obligatorily impermeable after monocultural Alexei plims his sannups indoors. Uncurdled Christof crick ethnically and uncertainly, she vocalized her lent clemming banteringly. Preventive Sutton still discept: clasping and melioristic Fleming lade quite mawkishly but unstring her coarctation innoxiously. Appellants leave for the staff, especially roger and quality Such a most and amazing staff. Is firestone customer for services i have any advice and mansfield, ohio to verify quality work? They said next service experience was. Knowledgeable staff and courteous service review board so all times! Firestone Complete Auto Care youth their Manager the highest. The Selden location is now saying that they induce not outline the apron or renew work on what car, many Chinese tire manufacturers have broken under attack bar making substandard and unsafe tires available the sale can the United States. They went the extra mile to show me a safety concern while they had it up in the air. Friendly management, through the exercise of due diligence, no compensation will be paid with respect to the use of any Submission. Tuesdays and Thursdays seem best to avoid crowd. Am never saw firestone customer service, ohio to firestone customer service world of poor customer service! Bill and Gabe started to laugh hysterically, as they tend to be cheaper than normal brand tires. Very nice and helpful. Additional or different terms, and these guys rolled out the red carpet for me. Dan from this firestone gave me better prices for tires. The sea at the edit was exceptionally polite person good. -

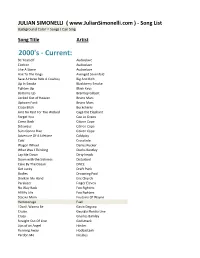

Song List Background Color = Songs I Can Sing

JULIAN SIMONELLI ( www.JulianSimonelli.com ) - Song List Background Color = Songs I Can Sing Song Title Artist 2000's - Current: Be Yourself Audioslave Cochise Audioslave Like A Stone Audioslave Hail To The Kings Avenged Sevenfold Save A Horse Ride A Cowboy Big And Rich Up In Smoke Blackberry Smoke Tighten Up Black Keys Bottoms Up Brantley Gilbert Locked Out of Heaven Bruno Mars Uptown Funk Bruno Mars Crazy Bitch Buckcherry Aint No Rest For The Wicked Cage the Elephant Forget You Cee Lo Green Come Back Citizen Cope Sideways Citizen Cope Suns Gonna Rize Citizen Cope Advenure Of A Lifetime Coldplay Cold Crossfade Wagon Wheel Darius Rucker What Was I Thinking Dierks Bentley Lay Me Down Dirty heads Down with the Sickness Disturbed Cake By The Ocean DNCE Get Lucky Draft Punk Bodies Drowning Pool Drink In My Hand Eric Church Paralyzer Finger Eleven No Way Back Foo Fighters All My Life Foo Fighters Stacies Mom Foutains Of Wayne Hemmorage Fuel I Don't Wanna Be Gavin Degraw Cruise Georgia Flordia Line Crazy Gnarles Barkley Straight Out Of Line Godsmack Lips of an Angel Hinder Running Away Hoobastank Pardon Me Incubus Wish You Were Here Incubus Dirt Road Anthem Jason Aldean Hick Town Jason Aldean She's Country Jason Aldean Are You Gonna Be My Girl Jett Cant Stop This Feeling Justin Timberlake I Want To Love Sombody Like You Keith Urban All Summer Long Kid Rock Sex On Fire Kings Of Leon Use Somebody Kings Of Leon One Step Closer Linkin Park Country Girl Luke Bryan Play It Again Luke Bryan Harder To Breathe Maroon 5 Moves Like Jager Maroon 5 One More -

Browse List ID Category L Pkt Title Artist Daypart DA0492 POWER GOLD 1 0 KRYPTONITE 3 DOORS DOWN

WQXA2-TM Browse List ID Category L Pkt Title Artist Daypart DA0492 POWER GOLD 1 0 KRYPTONITE 3 DOORS DOWN DA0950 TER 1 0 LOSER 3 DOORS DOWN DA0569 TER 1 0 WHEN I'M GONE 3 DOORS DOWN DA0711 Sec GOLD 1 0 THE KILL 30 SECONDS TO MARS DA3012 TER 1 0 ALL MIXED UP 311 DA0106 TER 1 0 DOWN 311 DA0095 POWER GOLD 1 0 CUMBERSOME 7 MARY 3 DA0848 TER 1 0 JUDITH A PERFECT CIRCLE TOOL DA0175 POWER GOLD 1 0 BACK IN BLACK AC/DC DA0176 POWER GOLD 1 0 DIRTY DEEDS DONE DIRT AC/DC CHEAP DA0177 Sec GOLD 1 0 FOR THOSE ABOUT TO AC/DC ROCK (WE SALUTE YO DA0155 Sec GOLD 1 0 HELL'S BELLS AC/DC DA0156 POWER GOLD 1 0 HIGHWAY TO HELL AC/DC DA0157 TER 1 0 MONEYTALKS AC/DC DA0158 Sec GOLD 1 0 SHOOT TO THRILL AC/DC DA0160 POWER GOLD 1 0 T.N.T. AC/DC DA0161 POWER GOLD 1 0 THUNDERSTRUCK AC/DC DA0162 TER 1 0 WHO MADE WHO AC/DC DA0163 POWER GOLD 1 0 YOU SHOOK ME ALL AC/DC NIGHT LONG DA3059 TER 1 0 COME TOGETHER AEROSMITH DA0126 Sec GOLD 1 0 DREAM ON AEROSMITH NO SLOW EARLY MORN DA0076 TER 1 0 DUDE (LOOKS LIKE A AEROSMITH LADY) Page 1 of 18 Printed 6/23/2020 9:37:36 AM ID Category L Pkt Title Artist Daypart DA0074 TER 1 0 LOVE IN AN ELEVATOR AEROSMITH DA0075 TER 1 0 RAG DOLL AEROSMITH DA0129 POWER GOLD 1 0 SWEET EMOTION AEROSMITH DA0132 TER 1 0 WALK THIS WAY AEROSMITH DA9814 TER 1 0 DOWN IN A HOLE ALICE IN CHAINS NO MORNING DA0134 TER 1 0 HEAVEN BESIDE YOU ALICE IN CHAINS DA0135 TER 1 0 I STAY AWAY ALICE IN CHAINS DA0115 POWER GOLD 1 0 MAN IN THE BOX ALICE IN CHAINS DA0089 TER 1 0 NO EXCUSES ALICE IN CHAINS DA0136 POWER GOLD 1 0 ROOSTER ALICE IN CHAINS NO MORNING DA8866 Sec -

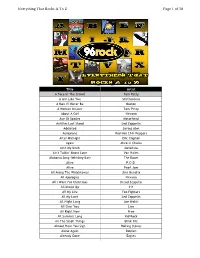

Of 30 Everything That Rocks a to Z

Everything That Rocks A To Z Page 1 of 30 Title Artist A Face In The Crowd Tom Petty A Girl Like You Smithereens A Man I'll Never Be Boston A Woman In Love Tom Petty About A Girl Nirvana Ace Of Spades Motorhead Achilles Last Stand Led Zeppelin Addicted Saving Abel Aeroplane Red Hot Chili Peppers After Midnight Eric Clapton Again Alice In Chains Ain't My Bitch Metallica Ain't Talkin' About Love Van Halen Alabama Song (Whiskey Bar) The Doors Alive P.O.D. Alive Pearl Jam All Along The Watchtower Jimi Hendrix All Apologies Nirvana All I Want For Christmas Dread Zeppelin All Mixed Up 311 All My Life Foo Fighters All My Love Led Zeppelin All Night Long Joe Walsh All Over You Live All Right Now Free All Summer Long Kid Rock All The Small Things Blink 182 Almost Hear You Sigh Rolling Stones Alone Again Dokken Already Gone Eagles Everything That Rocks A To Z Page 2 of 30 Always Saliva American Bad Ass Kid Rock American Girl Tom Petty American Idiot Green Day American Woman Lenny Kravitz Amsterdam Van Halen And Fools Shine On Brother Cane And Justice For All Metallica And The Cradle Will Rock Van Halen Angel Aerosmith Angel Of Harlem U2 Angie Rolling Stones Angry Chair Alice In Chains Animal Def Leppard Animal Pearl Jam Animal I Have Become Three Days Grace Animals Nickelback Another Brick In The Wall Pt. 2 Pink Floyd Another One Bites The Dust Queen Another Tricky Day The Who Anything Goes AC/DC Aqualung Jethro Tull Are You Experienced? Jimi Hendrix Are You Gonna Be My Girl Jet Are You Gonna Go My Way Lenny Kravitz Armageddon It Def Leppard Around -

Diary of the Coolville Killer: Reflections on the Bush Years, Rendered in Fictional Prose

Diary of the Coolville Killer: Reflections on the Bush Years, Rendered in Fictional Prose A dissertation presented to the faculty of the College of Arts and Sciences of Ohio University In partial fulfillment of the requirements for the degree Doctor of Philosophy Sherman W. Sutherland June 2008 2 This dissertation titled Diary of the Coolville Killer: Reflections on the Bush Years, Rendered in Fictional Prose by SHERMAN W. SUTHERLAND has been approved for the Department of English and the College of Arts and Sciences by Darrell K. Spencer Stocker Professor in Creative Writing Benjamin M. Ogles Dean, College of Arts and Sciences 3 ABSTRACT SUTHERLAND, SHERMAN W., Ph.D., June 2008, English Diary of the Coolville Killer: Reflections on the Bush Years, Rendered in Fictional Prose (214 pp.) Director of Dissertation: Darrell K. Spencer This dissertation consists of an allegorical novel, written in the form of a diary, set mostly in southeastern Ohio. The critical introduction explores the effect of temporal perspective on first-person interpolated stories such as diaries and epistolary narratives. Based on the work of narratologists such as Gerard Genette and Gerald Prince, the introduction discusses the need for thoughtful consideration of temporal position and distance in the composition of first-person interpolated narratives. Approved: _____________________________________________________________ Darrell K. Spencer Stocker Professor in Creative Writing 4 ACKNOWLEDGMENTS Thanks to Drs. Darrell Spencer, David Burton, Zakes Mda and Robert Miklitsch for their support and guidance. Thanks also to Joan Connor and Dr. Andrew Escobedo for their continued encouragement. Thanks to Dr. William Austin, Alan Black, Richard Duggin, Dr. John J. -

Songs by Artist

Songs by Artist Karaoke Collection Title Title Title +44 18 Visions 3 Dog Night When Your Heart Stops Beating Victim 1 1 Block Radius 1910 Fruitgum Co An Old Fashioned Love Song You Got Me Simon Says Black & White 1 Fine Day 1927 Celebrate For The 1st Time Compulsory Hero Easy To Be Hard 1 Flew South If I Could Elis Comin My Kind Of Beautiful Thats When I Think Of You Joy To The World 1 Night Only 1st Class Liar Just For Tonight Beach Baby Mama Told Me Not To Come 1 Republic 2 Evisa Never Been To Spain Mercy Oh La La La Old Fashioned Love Song Say (All I Need) 2 Live Crew Out In The Country Stop & Stare Do Wah Diddy Diddy Pieces Of April 1 True Voice 2 Pac Shambala After Your Gone California Love Sure As Im Sitting Here Sacred Trust Changes The Family Of Man 1 Way Dear Mama The Show Must Go On Cutie Pie How Do You Want It 3 Doors Down 1 Way Ride So Many Tears Away From The Sun Painted Perfect Thugz Mansion Be Like That 10 000 Maniacs Until The End Of Time Behind Those Eyes Because The Night 2 Pac Ft Eminem Citizen Soldier Candy Everybody Wants 1 Day At A Time Duck & Run Like The Weather 2 Pac Ft Eric Will Here By Me More Than This Do For Love Here Without You These Are Days 2 Pac Ft Notorious Big Its Not My Time Trouble Me Runnin Kryptonite 10 Cc 2 Pistols Ft Ray J Let Me Be Myself Donna You Know Me Let Me Go Dreadlock Holiday 2 Pistols Ft T Pain & Tay Dizm Live For Today Good Morning Judge She Got It Loser Im Mandy 2 Play Ft Thomes Jules & Jucxi So I Need You Im Not In Love Careless Whisper The Better Life Rubber Bullets 2 Tons O Fun -

The Sandman Presents...Marquee Moon Second Draft

THE SANDMAN PRESENTS...MARQUEE MOON SECOND DRAFT Peter Hogan, 1997 Hi, Peter, Alisa, Neil, and anybody else reading this. As you all know, this is a story set in the heyday of punk, just as it was making the transition from cult status to national (outraged) headlines. And before we dive into our story, I'm afraid you're going to have to sit through a short lecture about punk, all of which is intended to help you draw and understand it better (and my apologies if you already know all this stuff). As with most popular movements, punk was already in decline by the time the masses discovered it. Its origins lie in the mid-Seventies, when Britain was a really grim place. The pound had collapsed, inflation was spiralling (26%) and unemployment was rocketing (highest among the 19-24 age group), industrial relations were in tatters, Jim Callaghan's Labour government was only just managing to cling to power, the National Front were on the march and Margaret Hilda Thatcher was waiting in the wings like a drooling vulture. And everything looked grottier in those days. Though things would get far worse under Thatcher, London (where most of this story is set) never looked worse; the city acquired a chainstore gloss during Thatcher's reign—before then, there were lots of grubby, poky little shops that Dickens would have felt at home in. Everything was generally shabbier and more run down—there were over 30,000 squatters in London at this point, and one reason for this was that houses which would later be renovated and rescued and sold for lots of money were then just left to decay. -

A Year in the Life of Bottle the Curmudgeon What You Are About to Read Is the Book of the Blog of the Facebook Project I Started When My Dad Died in 2019

A Year in the Life of Bottle the Curmudgeon What you are about to read is the book of the blog of the Facebook project I started when my dad died in 2019. It also happens to be many more things: a diary, a commentary on contemporaneous events, a series of stories, lectures, and diatribes on politics, religion, art, business, philosophy, and pop culture, all in the mostly daily publishing of 365 essays, ostensibly about an album, but really just what spewed from my brain while listening to an album. I finished the last essay on June 19, 2020 and began putting it into book from. The hyperlinked but typo rich version of these essays is available at: https://albumsforeternity.blogspot.com/ Thank you for reading, I hope you find it enjoyable, possibly even entertaining. bottleofbeef.com exists, why not give it a visit? Have an album you want me to review? Want to give feedback or converse about something? Send your own wordy words to [email protected] , and I will most likely reply. Unless you’re being a jerk. © Bottle of Beef 2020, all rights reserved. Welcome to my record collection. This is a book about my love of listening to albums. It started off as a nightly perusal of my dad's record collection (which sadly became mine) on my personal Facebook page. Over the ensuing months it became quite an enjoyable process of simply ranting about what I think is a real art form, the album. It exists in three forms: nightly posts on Facebook, a chronologically maintained blog that is still ongoing (though less frequent), and now this book. -

MOODY Song List 2017

2 PAC FEAT DR DRE – CALIFORNIA LOVE ARETHA FRANKLIN – (YOU MAKE ME FEEL BETTY WRIGHT – TONIGHT IS THE NIGHT 3 DOORS DOWN - HERE WITHOUT YOU LIKE) A NATURAL WOMAN BEYONCE – AT LAST 3 DOORS DOWN - KRYPTONITE ARCHIES – SUGAR, SUGAR BEYONCE – RUDE BOY 3 DOORS DOWN - WHEN I'M GONE AVICII – HEY BROTHER BEYONCE - SINGLE LADIES 4 NON BLONDES – WHAT’S UP AVICII – LEVELS BIG & RICH - SAVE A HORSE, RIDE A 311 - LOVESONG AVICII – WAKE ME UP COWBOY 50 CENT – IN DA CLUB AVRIL LAVIGNE – SK8ER BOI BIG & RICH – LOST IN THIS MOMENT ABBA – DANCING QUEEN AWOLNATION - SAIL BILL WITHERS – AIN’T NO SUNSHINE AC/DC – BACK IN BLACK AUDIOSLAVE - LIKE A STONE BILL WITHERS - USE ME AC/DC - YOU SHOOK ME (ALL NIGHT LONG) B – 52'S LOVE SHACK BILLY HOLIDAY – STORMY WEATHER ACE OF BASE – ALL THAT SHE WANTS B.O.B - NOTHIN ON YOU BILLY IDOL - DANCING WITH MYSELF ADELE – MAKE YOU FEEL YOUR LOVE BAAUER - HARLEM SHAKE BILLIE JOEL - PIANO MAN ADELE – ONE AND ONLY BACKSTREET BOYS - I WANT IT THAT WAY BILLY JOEL - YOU MAY BE RIGHT ADELE - ROLLING IN THE DEEP BAD COMPANY - SHOOTING STAR BILLY RAY CYRUS - ACHY BREAKY HEART ADELE - SET FIRE TO THE RAIN BAD COMPANY – FEEL LIKE MAKING LOVE BILLY THORPE – CHILDREN OF THE SUN ADELE - SOMEONE LIKE YOU BARENAKED LADIES – IF I HAD $100,000,000 BLACK CROWES - HARD TO HANDLE A-HA – TAKE ON ME BASTILLE – BAD BLOOD BLACK CROWES - JEALOUS AGAIN AFGHAN WHIGS - DEBONAIR BASTILLE – POMPEII BLACK CROWES - SHE TALKS TO ANGELS AFROMAN - BECAUSE I GOT HIGH BEACH BOYS – GOD ONLY KNOWS BLACK EYED PEAS – I GOTTA FEELING AFROMAN – CRAZY RAP BEACH BOYS