Scenario-Based Development and Verification of Domain-Specific Languages

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


January 14, 2000

CFASPP Continuing Florida Aviation System Planning Process ________________________________________________________________________________________________ NORTHWEST FLORIDA REGION GREG DONOVAN, A.A.E., CHAIR May 7, 2008 Dear Committee Members, Advisors, and Participants, Subject: NORTHWEST FLORIDA REGION CFASPP Committee Meeting Date/Time: Thursday, June 5, 2008 at 10:00 AM (Central Standard Time). Location: Okaloosa Regional Airport, Terminal Conference Room. CFASPP Round 2008-2 is set for May 20th -June 5th at various locations around the State. The Round will conclude on July 19th with a Statewide Committee Meeting at the Breakers Resort in Palm Beach immediately prior the FAC Annual Conference. By the time the regional meetings are held for this Round, the Florida Legislative Session for 2008 will have come to an end signaling the beginning of a new set of challenges for airport sponsors. Aviation Planning has become even more difficult in this day and age with the continuing uncertainty brought about by budget adjustments at all levels of government. As a result, I would like to cordially invite you to join your fellow airport community members at our next regional meeting on June 5, 2008 as we continue our discussions on the direction of aviation in Florida. As we noted during the last round, the meeting agenda and handout material are now being distributed to you in electronic form. In order to ensure continued delivery of your meeting materials, please be sure that you check and if necessary update your contact information on the attendance roster at the meeting. If you should have any questions or concerns prior to the meeting, please feel free to let me know or you may contact Dan Afghani, CFASPP Administrator via e-mail at [email protected]. -

Florida Statewide Aviation Economic Impact Study

Florida Department of Transportation Statewide Aviation Economic Impact Study, Technical Report 2019

Contents
1. Overview
1.1 Background
1.2 Study Purpose
1.3 Communicating Results
1.4 Florida’s Airports
1.5 Study Conventions
1.5.1 Study Terminology
1.6 Report Organization
2. Summary of Findings
2.1 FDOT District Results

Business Climate

SPECIAL REPORT Research Florida p. 34 Business FLORIDA Your 2015 Florida Opportunity Guide Quality of Life Business 6 Reasons Climate to Choose Infrastructure Global Access WorkforceFloridA Market PLUS: Targeted Opportunities Industry Sectors Official publication of Regional Opportunities $6.95 { } 30+ Airlines with over 375 Flights Daily Among the Top 10 35 Million Annual Passengers U.S. Airports for Low Fares 200 Acre Foreign Trade Zone % International Arrival Self-Processing Kiosks 25 (U.S., Canada, and Visa Waiver Country Travelers) More Domestic Service than any other Airport in Florida Close Proximity to Downtown, Convention Center, Medical City, Tourist Areas, Universities and Port Canaveral Future Direct-Rail Service from MCO to West Palm Beach, Non-stop Service to 75 Domestic & Ft. Lauderdale and Miami City Centers 39 International Cities Diversity, Innovation and Opportunity Land Here. BOOK ONLINE | OrlandoAirports.net /flymco @fly2mco MCO_FLTrend_8.125x10.75_F.indd 1 8/8/14 10:22 AM FLTR Economic Dev Jax 5/14_Layout 1 5/19/14 10:33 AM Page 1 New optimism. Strategic vision. Shareholder Michael Cavendish, Jacksonville From Jacksonville to Miami and Tampa to Tallahassee, the revitalization of Florida’s economy is inspiring a new optimism. Working with Florida business leaders, Gunster attorneys strive to deliver strategic value for our clients with a range of services, including mergers and acquisitions, land use and real estate law, complex commercial litigation, estate planning and business counseling. Our goal is to help clients navigate new opportunities while achieving growth. We are committed to our communities and optimistic about their future. With 11 Florida offices, Gunster is statewide and state wise. -

Final Packet

REQUEST FOR QUALIFICATIONS On-Call General Engineering Consultant Services City of Naples Airport Authority 160 Aviation Drive North Naples, FL 34104 RFQ Issue Date: January 11, 2019 RFQ Submittal Date: February 11, 2019 1 On-Call GEC RFQ 686773.1 12/26/2018 ADVERTISEMENT Request for Qualifications January 11, 2019 On Call General Engineering Consultant In accordance with Florida Statute 287.055, Title 49, United States Code, section 47105(d), Title 49, Code of Federal Regulations (CFR) Part 18, and FAA Advisory Circular 150/5100-14e, the City of Naples Airport Authority (NAA) invites the submission of Letters of Interest and Statements of Qualifications from all interested and qualified parties with demonstrated expertise in ON CALL GENERAL ENGINEERING CONSULTANT SERVICES at Naples Airport. A copy of the detailed Request for Qualifications and instructions for submittal may be obtained from the Naples Airport Authority online at https://flynaples.com/doing-business-with-the-authority/open-bids/ beginning January 10, 2019. Responses are due no later than 2:00 p.m., February 11, 2019. The NAA reserves the right to accept or reject any or all proposals and to waive any formalities or irregularities in the best interest of the Authority and is not liable for any costs incurred by the responding parties. All Respondents must be licensed in accordance with Florida Laws. The Authority recognizes fair and open competition as a basic tenet of public procurement. Respondents doing business with the Authority are prohibited from discriminating on the basis of race, color, creed, national origin, handicap, age or sex. The NAA has a progressive Disadvantaged, Minority, and Women-Owned Business Enterprises Program in place and encourages Disadvantaged, Minority, and Women-Owned Business Enterprises to participate in its RFQ process. -

Airport Dir Txt.Indd

2007 Florida Airport Directory ________________________________________ A Guide to Florida's Public and Private Airports Published By Aviation Office Florida Department of Transportation January 2007 INTRODUCTION The airport data in this directory came from annual public airport inspection and licensing records, private airport registration, and data provided by airport owners and managers. In matters of navigation, landing, and other critical flight decisions, we urge you to refer to the latest information available from sources such as the: • Aeronautical Information Manual • National Oceanic and Atmospheric Administration • Federal Aviation Administration Flight Service • Airport Facility Directory (AFD) • Airport operators The Florida Department of Transportation makes no warranty, expressed or implied, as to the accuracy of information and expressly disclaims liability for the accuracy thereof. Please address questions, requests for assistance, corrections, or changes to the address below: Aviation Office Department of Transportation 605 Suwannee Street, MS 46 Tallahassee, Florida 32399-0450 Phone: (850) 414-4500 Fax: (850) 414-4508 E-mail: [email protected] Website: www.dot.state.fl.us/aviation/ This publication is not intended for use in flight operations. Printing by PRIDE ENTERPRISES Graphics Division Blountstown, Florida Cover photo courtesy of Cecil Field Florida Department of Transportation 2007 Airport Directory Aviation Office CONTENTS List of Public Airports ........................................................ -

Regional Airport Overview

Arcadia General Aviation Airport

Arcadia Municipal Airport is located on the southeast side of Arcadia, south of State Route 70, west of State Route 31, and is accessible from Airport Road. Arcadia is located approximately 30 miles northeast of the Port Charlotte/Punta Gorda area, via US Route 17.

Existing Facilities

Arcadia Municipal is served by two runways. Runway 13/31 is 2,780 feet long by 140 feet wide, and Runway 05/23 is 3,700 feet long by 75 feet wide. Runway 13/31 is turf and has no lighting. There is a displaced threshold and a parallel taxiway that is 140 feet wide with no lighting. There are no navigational aids. The overall turf condition is excellent. Runway 05/23 is an asphalt runway with MIRL lighting and a parallel taxiway that is 50 feet wide with MITL lighting. The overall pavement condition is good.

The airport has a general aviation terminal building to serve pilots and passengers. The building covers 3,000 square feet. There are 20 on-airport auto parking spaces to serve the terminal. There are 15 tie-down spaces and 20 T-hangars. The T-hangars are 100 percent filled, and the tie-down spaces are 50 percent filled.

Current and Forecast Demand

GENERAL AVIATION

The largest plane that uses the airport on a regular basis is a Piper Navajo or Beech Super King Air aircraft. The current Airport Reference Code (ARC) as defined by the FAA Advisory Circular 150/5300-13 for the airport is B-II.

3 Airport System and Classifications

3 Airport System and Classifications 3.1 Introduction One of the first steps in the system planning process is to identify the airports to be included in the system. States utilize different methods for defining the airports considered for inclusion in their state aviation transportation networks. Inclusion criteria could relate to ownership and use, while the impact of inclusion could affect funding eligibility or regulatory impacts. Once system airports are identified, further stratification or classification of facilities helps organize the system according to the types of users served and role in the statewide system. 3.2 Aviation Facilities in Florida A considerable number of aviation facilities are located in Florida. According to Federal Aviation Administration (FAA) airport facilities data as of August 2017, there were 768 aviation facilities located throughout the state. These facilities primarily consist of airports and heliports; however, approximately 10 percent of the aviation facilities consists of gliderports, seaplane bases, and ultralight facilities. These facilities are made up of airports with different ownership and uses across six categories (see Figure 3-1): 1. Publicly-owned, public-use. Of all aviation facilities located throughout Florida, 104 are publicly-owned and operated as public-use facilities. These facilities are owned by counties or municipalities or through the establishment of a special district (airport authority). A significant number of these facilities were originally military installations that were transferred from the U.S. government to local agencies. Many of these airport owners have accepted state and/or federal grant funding for new construction, maintenance, or other airport improvements. 
The acceptance and use of these grants comes with certain assurances, one of which requires that the airport continues to be operated as an airport for a specified duration as determined by the FAA, typically 20 years after the acceptance and use of grant funding.

A Handbook of State Funding Information for Florida Airports Prepared By: the Florida Department of Transportation – Aviation Office 2020 - 2021 Table of Contents

Florida Aviation Project Handbook A Handbook of State Funding Information for Florida Airports Prepared by: The Florida Department of Transportation – Aviation Office 2020 - 2021 Table of Contents 1. The Florida Aviation Project Handbook Overview...............................4 The Florida Aviation Database and the Joint Automated Capital Improvement Programs ..................................................21 FDOT Organizational Structure ....................................................5 Public Transportation Grant Agreements...........................................22 FDOT Districts . 5 Public Transportation Grant Agreement Compliance .................................22 FDOT’s Aviation Office . 5 Construction Projects . 22 Planning Projects . 23 2. The Florida Aviation Grant Program Overview .................................6 Land Acquisition Projects . 23 Introduction....................................................................6 Project Invoicing . 23 Source of Funding for the Florida Aviation Grant Program . .6 Protecting the State’s Investment in our Aviation System .............................23 Airports Eligible for Funding ......................................................7 Grant Assurances . 24 Projects Eligible for Funding ......................................................7 Specific (Special) Appropriations . 25 Airport Planning Processes .......................................................7 Florida Aviation System Plan (FASP) . 7 3. The Strategic Intermodal System ...........................................26 -

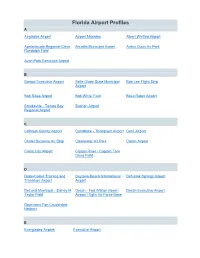

Airport Profiles from FASP 2035 Update

Florida Airport Profiles A Airglades Airport Airport Manatee Albert Whitted Airport Apalachicola Regional-Cleve Arcadia Municipal Airport Arthur Dunn Air Park Randolph Field Avon Park Executive Airport B Bartow Executive Airport Belle Glade State Municipal Bob Lee Flight Strip Airport Bob Sikes Airport Bob White Field Boca Raton Airport Brooksville - Tampa Bay Buchan Airport Regional Airport C Calhoun County Airport Carrabelle - Thompson Airport Cecil Airport Chalet Suzanne Air Strip Clearwater Air Park Costin Airport Cross City Airport Crystal River - Captain Tom Davis Field D Dade-Collier Training and Daytona Beach International Defuniak Springs Airport Transition Airport Airport DeLand Municipal - Sidney H Destin - Fort Walton Beach Destin Executive Airport Taylor Field Airport / Eglin Air Force Base Downtown Fort Lauderdale Heliport E Everglades Airpark Executive Airport F Ferguson Airport Fernandina Beach Municipal Flagler Executive Airport Airport Flying Ten Airport Fort Lauderdale Executive Fort Lauderdale/Hollywood Airport International Airport Fort Walton Beach Airport G Gainesville Regional Airport George T Lewis Airport H Halifax River Sea Plane Base Herlong Recreational Airport Hilliard Airpark I Immokalee Regional Airport Indiantown Airport Inverness Airport J Jack Browns Seaplane Base Jacksonville Executive At Craig Jacksonville International Airport Airport K Key West International Airport Keystone Heights Airport Kissimmee Gateway Airport L La Belle Municipal Airport Lake City Gateway Airport Lake Wales Municipal Airport -

An Economic Analysis of Potential Changes to the Aircraft Sales and Use Tax

State of Florida
An Economic Analysis of Potential Changes to the Aircraft Sales and Use Tax
February 2009
Prepared by: The Legislative Office of Economic and Demographic Research

Table of Contents
Analysis Summary
Aviation in Florida
Sales and Use Tax on Aircraft
Proposed Legislative Changes
Economic Analysis / Cost-Benefit
Appendices
A. Sales and Use Tax on Aircraft Owners and Purchasers, FDOR
B. Revenue Estimating Conference Impact Analysis Aircraft Sales and Use Tax, FDOR
C. Technical Summary For the Florida Airports Economic Impact Study, FASP 2000, Wilbur Smith Associates, Inc., August 2000
D. HB 1379, Representatives Poppell, Attkisson, Glorioso, McKeel, and Seiler, 2008 Legislative Session
E. Should New Aircraft Owners Avoid Florida, The Aero-News Network, Monday, May 21, 2007
F. Florida Dept. of Revenue Threatens to Tax Shuttle Landings, The Aero-News Network, Tuesday, April 1, 2008
G. Other Florida Sales and Use Tax Exemptions Related to Aircraft
H. Cross State Comparison of Sales and Use Tax Exemptions

ANALYSIS SUMMARY

A wide body of economic literature stresses that the retail sales tax should apply to all final sales to consuming households within the state, excluding only the purchase of business inputs or other items for resale. Common practice has varied significantly from the ideal. Today, many business inputs are taxed, exemptions exist for various purposes, and some of the tax burden is “exported” to out-of-state consumers. The retail sale of aircraft follows the state’s general provisions related to the sales tax.
Florida law provides that every sale, admission charge, storage, or rental is taxable unless the transaction is specifically exempt.1 The state's general sales tax rate is 6%.

Time Building and Discover Florida List of Airports in Florida

Time Building and Discover Florida

List of Airports in Florida

Commercial Service – Primary airports

| City served | FAA | IATA | ICAO | Airport name | Role |
|---|---|---|---|---|---|
| Daytona Beach | DAB | DAB | KDAB | Daytona Beach International Airport | P-N |
| Fort Lauderdale | FLL | FLL | KFLL | Fort Lauderdale–Hollywood International Airport | P-L |
| Fort Myers | RSW | RSW | KRSW | Southwest Florida International Airport | P-M |
| Fort Walton Beach | VPS | VPS | KVPS | Destin–Fort Walton Beach Airport / Eglin Air Force Base | P-S |
| Gainesville | GNV | GNV | KGNV | Gainesville Regional Airport | P-N |
| Jacksonville | JAX | JAX | KJAX | Jacksonville International Airport | P-M |
| Key West | EYW | EYW | KEYW | Key West International Airport | P-S |
| Melbourne | MLB | MLB | KMLB | Orlando Melbourne International Airport | P-N |
| Miami | MIA | MIA | KMIA | Miami International Airport | P-L |
| Orlando | MCO | MCO | KMCO | Orlando International Airport | P-L |
| Panama City Beach | ECP | ECP | KECP | Northwest Florida Beaches International Airport [nb 1] | P-S |
| Pensacola | PNS | PNS | KPNS | Pensacola International Airport | P-S |
| Punta Gorda | PGD | PGD | KPGD | Punta Gorda Airport | P-S |
| Sanford | SFB | SFB | KSFB | Orlando Sanford International Airport | P-S |
| Sarasota | SRQ | SRQ | KSRQ | Sarasota–Bradenton International Airport | P-S |
| St. Augustine | SGJ | UST | KSGJ | Northeast Florida Regional Airport | P-N |
| St. Petersburg | PIE | PIE | KPIE | St. Pete–Clearwater International Airport | P-S |
| Tallahassee | TLH | TLH | KTLH | Tallahassee International Airport | P-N |
| Tampa | TPA | TPA | KTPA | Tampa International Airport | P-L |
| West Palm Beach | PBI | PBI | KPBI | Palm Beach International Airport | P-M |

Reliever airports

| City served | FAA | IATA | ICAO | Airport name | Role |
|---|---|---|---|---|---|
| Boca Raton | BCT | BCT | KBCT | Boca Raton Airport | R |
| Clearwater | CLW | CLW | KCLW | Clearwater Air Park | R |

DeLand DE KDE DeLand Municipal Airport (Sidney H.

Office of Freight, Logistics & Passenger Operations (FLP)

Office of Freight, Logistics & Passenger Operations (FLP) Organization & Activities Guide 2017
Florida Department of Transportation

TABLE OF CONTENTS

OFFICE OF FREIGHT, LOGISTICS AND PASSENGER OPERATIONS OVERVIEW
Office Of Freight, Logistics and Passenger Operations

AVIATION AND SPACEPORTS OFFICE
Aviation System
Spaceports System
Aviation and Spaceports Staff
Aviation & Spaceports Office Activities
15 Key Areas of Responsibility
Aviation Grants