3D User Interface System Control Techniques Overview

Recommended publications

Toga Documentation Release 0.2.15

Toga Documentation Release 0.2.15 Russell Keith-Magee Aug 14, 2017 Contents: 1 Table of contents (1.1 Tutorial, 1.2 How-to guides, 1.3 Reference, 1.4 Background); 2 Community (2.1 Tutorials, 2.2 How-to Guides, 2.3 Reference, 2.4 Background, 2.5 About the project). Toga is a Python native, OS native, cross platform GUI toolkit. Toga consists of a library of base components with a shared interface to simplify platform-agnostic GUI development. Toga is available on Mac OS, Windows, Linux (GTK), and mobile platforms such as Android and iOS. Table of contents. Tutorial: Get started with a hands-on introduction to pytest for beginners. How-to guides: Guides and recipes for common problems and tasks. Reference: Technical reference - commands, modules, classes, methods. Background: Explanation and discussion of key topics and concepts. Community. Toga is part of the BeeWare suite. You can talk to the community through: • @pybeeware on Twitter -

Basic Computer Lesson

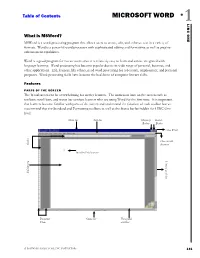

MICROSOFT WORD, LINC ONE. What is MSWord? MSWord is a word-processing program that allows users to create, edit, and enhance text in a variety of formats. Word is a powerful word processor with sophisticated editing and formatting as well as graphic-enhancement capabilities. Word is a good program for novice users since it is relatively easy to learn and can be integrated with language learning. Word processing has become popular due to its wide range of personal, business, and other applications. ESL learners, like others, need word processing for job search, employment, and personal purposes. Word-processing skills have become the backbone of computer literacy skills. Features: PARTS OF THE SCREEN. The Word screen can be overwhelming for novice learners. The numerous bars on the screen such as toolbars, scroll bars, and status bar confuse learners who are using Word for the first time. It is important that learners become familiar with parts of the screen and understand the function of each toolbar but we recommend that the Standard and Formatting toolbars as well as the Status bar be hidden for LINC One level. [Figure: parts of the Word screen, labelled: Menu bar, Title bar, Minimize button, Restore button, Close Word, Close current document, Rulers, Insertion point (cursor), Vertical scroll bar, Editing area, Document views, Status bar, Horizontal scroll bar.] A Software Guide for LINC Instructors. Hiding Standard toolbar, Formatting toolbar, and Status bar: • To hide the Standard toolbar, click View | Toolbars on the Menu bar. Check off Standard. • To hide the Formatting toolbar, click View | Toolbars on the Menu bar. -

The BIAS Soundscape Planning Tool for Underwater Continuous Low Frequency Sound

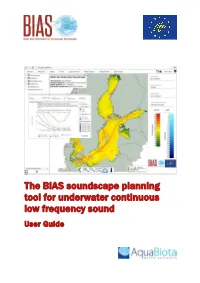

The BIAS soundscape planning tool for underwater continuous low frequency sound: User Guide. BIAS - Baltic Sea Information on the Acoustic Soundscape. The EU LIFE+ project Baltic Sea Information on the Acoustic Soundscape (BIAS) started in September 2012 for supporting a regional implementation of underwater noise in the Baltic Sea, in line with the EU roadmap for the Marine Strategy Framework Directive (MSFD) and the general recognition that a regional handling of Descriptor 11 is advantageous, or even necessary, for regions such as the Baltic Sea. BIAS was directed exclusively towards the MSFD descriptor criteria 11.2 Continuous low frequency sound and aimed at the establishment of a regional implementation plan for this sound category with regional standards, methodologies, and tools allowing for cross-border handling of acoustic data and the associated results. The project was the first one to include all phases of implementation of a joint monitoring programme across national borders. One year of sound measurements was performed in 2014 by six nations at 36 locations across the Baltic Sea. The measurements, as well as the post-processing of the measurement data, were subject to standard field procedures, quality control and signal processing routines, all established within BIAS based on the recommendations by the Technical Subgroup on Underwater Noise (TSG-Noise). The measured data were used to model soundscape maps for low-frequency continuous noise in the project area, providing the first views of the Baltic Sea soundscape and its variation on a monthly basis. In parallel, a GIS-based online soundscape planning tool was designed for handling and visualizing both the measured data and the modelled soundscape maps. -

Design of a Graphical User Interface Decision Support System for a Vegetated Treatment System

DESIGN OF A GRAPHICAL USER INTERFACE DECISION SUPPORT SYSTEM FOR A VEGETATED TREATMENT SYSTEM 1S.R. Burckhard, 2M. Narayanan, 1V.R. Schaefer, 3P.A. Kulakow, and 4B.A. Leven 1Department of Civil and Environmental Engineering, South Dakota State University, Brookings, SD 57007; Phone: (605)688-5316; Fax: (605)688-5878. 2Department of Computing and Information Sciences, Kansas State University, Manhattan, KS 66506; Phone: (785)532-6350; Fax: (785)532-5985. 3Agronomy Department, Kansas State University, Manhattan, KS 66506; Phone: (785)532-7239; Fax: (785)532-6094. 4Great Plains/Rocky Mountain Hazardous Substance Research Center, Kansas State University, Manhattan, KS 66506; Phone: (785)532-0780; Fax: (785)532-5985. ABSTRACT The use of vegetation in remediating contaminated soils and sediments has been researched for a number of years. Positive laboratory results have led to the use of vegetation at field sites. The design process involved with field sites and the associated decision processes are being developed. As part of this development, a computer-based graphical user interface decision support system was designed for use by practicing environmental professionals. The steps involved in designing the graphical user interface, incorporation of the contaminant degradation model, and development of the decision support system are presented. Key words: phytoremediation, simulation INTRODUCTION Vegetation has been shown to increase the degradation of petroleum and organic contaminants in contaminated soils. Laboratory experiments have shown promising results, which has led to the deployment of vegetation in field trials. The design of field trials is different than the design of a treatment system. In a field trial, the type of vegetation, use of amendments, placement and division of plots, and monitoring requirements are geared toward producing statistically measurable results. -

Microtemporality: at the Time When Loading-In-Progress

Microtemporality: At The Time When Loading-in-progress Winnie Soon School of Communication and Culture, Aarhus University [email protected]

Abstract. Loading images and webpages, waiting for social media feeds and streaming videos and multimedia contents have become a mundane activity in contemporary culture. In many situations nowadays, users encounter a distinctive spinning icon during the loading, waiting and streaming of data content. A graphically animated logo called throbber tells users something is loading-in-progress, but nothing more. This article investigates the process of data buffering that takes place behind a running throbber. Through artistic practice, an experimental project called The Spinning Wheel of Life explores the temporal and computational complexity of buffering. The article draws upon Wolfgang Ernst’s concept of “microtemporality,” in which microscopic temporality is expressed through operational micro events. [1] Microtemporality relates to the nature of signals and communications, mathematics, digital computation and

[...] which data processing and code inter-actions are operated in real-time. The notion of inter-actions mainly draws references from the notion of "interaction" from Computer Science and the notion of "intra-actions" from Philosophy. [3][4][5] The term code inter-actions highlights the operational process of things happen within, and across, machines through different technical substrates, and hence produce agency.

This article is informed by artistic practice, including close reading of a throbber and its operational logics of data buffering, as well as making and coding of a throbber. These approaches, following the tradition of artistic research, allow the artist/researcher to think in, through and with art. [7] Such mode of inquiry questions the invisibility of computational culture. -

Menus Overview

Menus overview. This section provides a brief overview of the menus in Dreamweaver. The File menu and Edit menu contain the standard menu items for File and Edit menus, such as New, Open, Save, Cut, Copy, and Paste. The File menu also contains various other commands for viewing or acting on the current document, such as Preview in Browser and Print Code. The Edit menu includes selection and searching commands, such as Select Parent Tag and Find and Replace, and provides access to the Keyboard Shortcut Editor and the Tag Library Editor. The Edit menu also provides access to Preferences, except on the Macintosh in Mac OS X, where Preferences are in the Dreamweaver menu. The View menu allows you to see various views of your document (such as Design view and Code view) and to show and hide various kinds of page elements and various Dreamweaver tools. The Insert menu provides an alternative to the Insert bar for inserting objects into your document. The Modify menu allows you to change properties of the selected page element or item. Using this menu, you can edit tag attributes, change tables and table elements, and perform various actions for library items and templates. The Text menu allows you to easily format text. The Commands menu provides access to a variety of commands, including one to format code according to your formatting preferences, one to create a photo album, and one to optimize an image using Macromedia Fireworks. -

Developing GUI Applications. Architectural Patterns Revisited

Developing GUI Applications: Architectural Patterns Revisited. A Survey on MVC, HMVC, and PAC Patterns. Alexandros Karagkasidis [email protected] Abstract. Developing large and complex GUI applications is a rather difficult task. Developers have to address various common software engineering problems and GUI-specific issues. To help the developers, a number of patterns have been proposed by the software community. At the architecture and higher design level, the Model-View-Controller (with its variants) and the Presentation-Abstraction-Control are two well-known patterns, which specify the structure of a GUI application. However, when applying these patterns in practice, problems arise, which are mostly addressed to an insufficient extent in the existing literature (if at all). So, the developers have to find their own solutions. In this paper, we revisit the Model-View-Controller, Hierarchical Model-View-Controller, and Presentation-Abstraction-Control patterns. We first set up a general context in which these patterns are applied. We then identify four typical problems that usually arise when developing GUI applications and discuss how they can be addressed by each of the patterns, based on our own experience and investigation of the available literature. We hope that this paper will help GUI developers to address the identified issues, when applying these patterns. 1 Introduction Developing a large and complex graphical user interface (GUI) application for displaying, working with, and managing complex business data and processes is a rather difficult task. A common problem is tackling system complexity. For instance, one could really get lost in large number of visual components comprising the GUI, not to mention the need to handle user input or track all the relationships between the GUI and the business logic components. -
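
As a rough illustration of the Model-View-Controller structure named in the excerpt above, the following is a minimal Python sketch, not taken from the paper. All class and method names (`CounterModel`, `CounterView`, `CounterController`, `subscribe`, `handle_input`) are hypothetical, and the view records strings in a list instead of drawing real widgets.

```python
class CounterModel:
    """Model: holds application state and notifies observers on change."""

    def __init__(self):
        self.count = 0
        self._observers = []

    def subscribe(self, callback):
        # Views register a callback; the model never imports view code.
        self._observers.append(callback)

    def increment(self):
        self.count += 1
        for notify in self._observers:
            notify(self)


class CounterView:
    """View: renders the model; here it only records what it would display."""

    def __init__(self, model):
        self.rendered = []
        model.subscribe(self.render)

    def render(self, model):
        self.rendered.append(f"count = {model.count}")


class CounterController:
    """Controller: translates user input into operations on the model."""

    def __init__(self, model):
        self._model = model

    def handle_input(self, command):
        if command == "increment":
            self._model.increment()


model = CounterModel()
view = CounterView(model)
controller = CounterController(model)
controller.handle_input("increment")
controller.handle_input("increment")
print(view.rendered)
```

The point of the split is that user input only reaches the model through the controller, and the view only learns about changes through the model's notifications, so the relationships between GUI and business logic stay in one place.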

SSRVM Content Creation Template Use This to Create the Lesson for Each Topic

SSRVM Content Creation Template. Use this to create the lesson for each topic. Finally merge it into the main document. Title: Elements of a Window: Tool Bar, Scroll bar. Contributors: Usha Viswanathan. Std: 1. Reviewers: Names of those who reviewed and/or modified this content. Submission Date: Date of sending the content to SSRVM Academic Council. Approval Date: Date of inclusion into SSRVM curriculum. REF No: Internal reference no. Brief Description: This topic gives an idea about the title bar, scroll bar and the tool bar of a window. Goal: To familiarise the kid with different elements like the toolbar, titlebar and the scrollbar of a window. Pre-requisites: The kid should know how to open an application by double clicking the application icon. Learning Outcome: To recognise the toolbar and scrollbar of a window. Duration: Two hours. References: Detailed Description: Attached page Nos. 2, 3, 4. Lesson Plan: Attached page No. 5, 6. Evaluation: Attached page No. 6. Worksheet: Attached page No. 7, 8. Other Notes: Detailed Description: Tool Bar and Scroll Bars. Many programs and applications run within windows that can be opened, minimized, resized and closed (See figure (a)). The top bar of the window is called the Title Bar. This horizontal area at the top of a window identifies the window. The title bar also acts as a handle for dragging the window. figure(a) Tool Bar: A toolbar is a set of tools that are grouped together into an area on the main window of an application. Typically, the tools are arranged into a horizontal strip called a bar, hence the term toolbar. -

Raginʼ CMS Beginner Guide

Raginʼ CMS Beginner Guide. Raginʼ CMS is the official content management system for the University of Louisiana at Lafayette. This guide provides you with the tools you need to develop the basic structure of your website and begin migrating content. For more information, visit http://web.louisiana/edu. Contents: Get started; Log in; Change your password; Screen overview; Create your main menu links/pages (buckets); Reorder the links in the main menu; Add a secondary menu link in a main menu topic; Edit page text -

MxManagementCenter: A New Milestone in Video Management

MOBOTIX Tutorial (EN). Security-Vision-Systems. MxManagementCenter: A New Milestone in Video Management. V1.2_EN_06/2016. Innovations – Made in Germany. The German company MOBOTIX AG is known as the leading pioneer in network camera technology and its decentralized concept has made high-resolution video systems cost-efficient. MOBOTIX AG • D-67722 Langmeil • Phone: +49 6302 9816-103 • Fax: +49 6302 9816-190 • [email protected] www.mobotix.com MOBOTIX Seminars: MOBOTIX offers inexpensive seminars that include a workshop and practical exercises. For more information, visit www.mobotix.com > Seminars. Copyright Information: All rights reserved. MOBOTIX, the MX logo, MxManagementCenter, MxControlCenter, MxEasy and MxPEG are trademarks of MOBOTIX AG registered in the European Union, the U.S.A., and other countries. Microsoft, Windows and Windows Server are registered trademarks of Microsoft Corporation. Apple, the Apple logo, Macintosh, OS X, iOS, Bonjour, the Bonjour logo, the Bonjour icon, iPod and iTunes are trademarks of Apple Inc. registered in the U.S.A. and other countries. iPhone, iPad, iPad mini and iPod touch are Apple Inc. trademarks. All other marks and names mentioned herein are trademarks or registered trademarks of the respective owners. Copyright © 1999-2016, MOBOTIX AG, Langmeil, Germany. Information subject to change without notice! Contents: 1 Basics; 1.1 General Structure of the Views; 1.2 Camera Groups; 1.3 Camera -

Chapter SW 1: Fundamentals of Software Use

Chapter SW 1 FUNDAMENTALS OF SOFTWARE USE. If you go out in the computer marketplace today, you will find thousands of different software packages. They do different things, such as word processing, graphics, e-mail, calendars, compressing disks or files, or controlling a computer. Even though the software does different tasks, software packages exhibit similarities in the way that they work. Systems & Applications Software: • Systems Software (enables applications to run): Operating System, Language Translators, Utility Programs • Applications Software. In the introduction we learned that software falls into two categories. Systems software consists of programs that enable applications software to run. Systems software consists of three different types. Operating systems manage the system and the running of other programs. Language translators translate a programming language into machine language. Utility programs are tools to provide some functions that the operating system doesn’t provide. An example might be the Windows disk defragmentation program or Windows Explorer. Software Suites: • Software Suites: MS/Office, Corel WordPerfect Office • Integrated Software Programs: MS/Works, Claris Works, Corel PerfectWork. One type of application program is the office oriented program. Many times office oriented programs of different types are bundled together in a suite. The most popular suite is MS/Office with 80% or more of the suite market. Suites are the primary way that office programs are sold to business. Related to these are programs which compress a suite into a smaller, less comprehensive package with fewer functions. How an office suite is organized: In a suite, there are multiple components or applications that are bundled together. -

User Interface Toolkits Graphical Libraries

Master Informatique - Université Paris-Sud. User interface toolkits. Michel Beaudouin-Lafon, Laboratoire de Recherche en Informatique, Université Paris-Sud / CNRS, [email protected] http://insitu.lri.fr (c) 2011, Michel Beaudouin-Lafon.

Outline: Software layers; Graphical libraries; Window systems; User interface toolkits; Applications frameworks; Interface builders.

Software layers: Application; Framework; User interface toolkit; Window system; Graphical library; Operating system; Device drivers.

Output devices: Bitmap screens (CRT, LCD, Plasma, …). Spatial resolution: about 100dpi. Color resolution (« color depth »): B&W, grey levels, color table, direct color (R G B). Temporal resolution: 10 to 100 frames per second. Bandwidth: 25 img/s * 1000x1000 pixels * 3 bytes/pixel = 75 Mb/s. GPU: Graphics Processing Unit.

Input devices: 2D input devices (Mouse, Tablet, Joystick, Trackball, Touch screen). Type of user control: position, motion, force, …; linear, circular, … Mapping of input dimensions: position, speed, acceleration; transfer function (gain). Motor space vs. visual space: separate or identical. Other input devices: Keyboards, Button boxes, Sliders; 3D position and orientation sensors.

Graphical libraries: Drawing model: Direct drawing (painter’s algorithm); Structured drawing: scene graph, edit the data structure. Graphical objects are defined by: their geometry; their graphical attributes (color, texture, gradient, transparency, lighting). Graphical libraries: Direct drawing: Xlib, Java2D, OpenGL. Structured drawing: Inventor (3D),
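
The bandwidth figure quoted in the slide excerpt above can be checked with a few lines of Python. This is only a sketch of the arithmetic; the function and constant names are illustrative and not from the slides. Because the slide multiplies by 3 bytes per pixel, the product comes out in bytes per second.

```python
# Figures taken from the slide: 25 images/s, 1000x1000 pixels, 3 bytes/pixel.
FRAMES_PER_SECOND = 25
WIDTH_PX, HEIGHT_PX = 1000, 1000
BYTES_PER_PIXEL = 3  # one byte each for the R, G and B channels


def raw_bandwidth_bytes_per_second(fps, width, height, bytes_per_pixel):
    """Uncompressed data rate needed to refresh every pixel of every frame."""
    return fps * width * height * bytes_per_pixel


bandwidth = raw_bandwidth_bytes_per_second(
    FRAMES_PER_SECOND, WIDTH_PX, HEIGHT_PX, BYTES_PER_PIXEL
)
print(f"{bandwidth / 1_000_000:.0f} million bytes per second")
```

With these inputs the product is 75,000,000 bytes per second, which matches the slide's 75 if "Mb" is read as megabytes rather than megabits.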