Exterior Algebra with Differential Forms on Manifolds Md

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

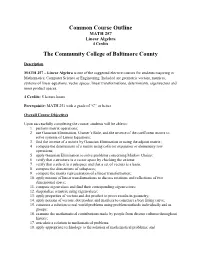

Common Course Outline MATH 257 Linear Algebra 4 Credits

Common Course Outline MATH 257 Linear Algebra 4 Credits The Community College of Baltimore County

Description MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours

Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives Upon successfully completing the course, students will be able to:

1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16. -

Topology and Physics 2019 - Lecture 2

Topology and Physics 2019 - lecture 2 Marcel Vonk February 12, 2019

2.1 Maxwell theory in differential form notation

Maxwell's theory of electrodynamics is a great example of the usefulness of differential forms. A nice reference on this topic, though somewhat outdated when it comes to notation, is [1]. For notational simplicity, we will work in units where the speed of light, the vacuum permittivity and the vacuum permeability are all equal to 1: $c = \epsilon_0 = \mu_0 = 1$.

2.1.1 The dual field strength

In three dimensional space, Maxwell's electrodynamics describes the physics of the electric and magnetic fields $\vec{E}$ and $\vec{B}$. These are three-dimensional vector fields, but the beauty of the theory becomes much more obvious if we (a) use a four-dimensional relativistic formulation, and (b) write it in terms of differential forms. For example, let us look at Maxwell's two source-free, homogeneous equations:

$$\nabla \cdot B = 0, \qquad \partial_t B + \nabla \times E = 0. \tag{2.1}$$

That these equations have a relativistic flavor becomes clear if we write them out in components and organize the terms somewhat suggestively:

$$\begin{aligned}
0 + \partial_x B^x + \partial_y B^y + \partial_z B^z &= 0 \\
-\partial_t B^x + 0 - \partial_y E^z + \partial_z E^y &= 0 \\
-\partial_t B^y + \partial_x E^z + 0 - \partial_z E^x &= 0 \\
-\partial_t B^z - \partial_x E^y + \partial_y E^x + 0 &= 0
\end{aligned} \tag{2.2}$$

Note that we also multiplied the last three equations by $-1$ to clarify the structure. All in all, we see that we have four equations (one for each space-time coordinate) which each contain terms in which the four coordinate derivatives act. Therefore, we may be tempted to write our set of equations in more "relativistic" notation as

$$\partial_\mu \hat{F}^{\mu\nu} = 0 \tag{2.3}$$

with $\hat{F}^{\mu\nu}$ the coordinates of an antisymmetric two-tensor (i. -

Connections on Bundles Md

Dhaka Univ. J. Sci. 60(2): 191-195, 2012 (July) Connections on Bundles Md. Showkat Ali, Md. Mirazul Islam, Farzana Nasrin, Md. Abu Hanif Sarkar and Tanzia Zerin Khan Department of Mathematics, University of Dhaka, Dhaka 1000, Bangladesh, Email: [email protected] Received on 25.05.2011. Accepted for publication on 15.12.2011.

Abstract This paper is a survey of the basic theory of connection on bundles. A connection on tangent bundle , is called an affine connection on an -dimensional smooth manifold . By the general discussion of affine connection on vector bundles that necessarily exists on which is compatible with tensors.

I. Introduction In order to differentiate sections of a vector bundle [5] or vector fields on a manifold we need to introduce a structure called the connection on a vector bundle. For example, an affine connection is a structure attached to a differentiable manifold so that we can differentiate its tensor fields. We first introduce the general theorem of connections on vector bundles. Then we study the tangent bundle. is a -dimensional vector bundle determine intrinsically by the differentiable structure [8] of an -dimensional smooth manifold . II.

= < , > (2) where <, > represents the pairing between and ∗. Then is a section of , called the absolute differential quotient or the covariant derivative of the section along .

Theorem 1. A connection always exists on a vector bundle.

Proof. Choose a coordinate covering { }∈ of . Since vector bundles are trivial locally, we may assume that there is local frame field for any . By the local structure of connections, we need only construct a × matrix on each such that the matrices satisfy -

LP THEORY of DIFFERENTIAL FORMS on MANIFOLDS This

TRANSACTIONS OF THE AMERICAN MATHEMATICAL SOCIETY Volume 347, Number 6, June 1995 $L^p$ THEORY OF DIFFERENTIAL FORMS ON MANIFOLDS CHAD SCOTT

Abstract. In this paper, we establish a Hodge-type decomposition for the $L^p$ space of differential forms on closed (i.e., compact, oriented, smooth) Riemannian manifolds. Critical to the proof of this result is establishing an $L^p$ estimate which contains, as a special case, the $L^2$ result referred to by Morrey as Gaffney's inequality. This inequality helps us show the equivalence of the usual definition of Sobolev space with a more geometric formulation which we provide in the case of differential forms on manifolds. We also prove the $L^p$ boundedness of Green's operator which we use in developing the $L^p$ theory of the Hodge decomposition. For the calculus of variations, we rigorously verify that the spaces of exact and coexact forms are closed in the $L^p$ norm. For nonlinear analysis, we demonstrate the existence and uniqueness of a solution to the $A$-harmonic equation.

1. Introduction This paper contributes primarily to the development of the $L^p$ theory of differential forms on manifolds. The reader should be aware that for the duration of this paper, manifold will refer only to those which are Riemannian, compact, oriented, $C^\infty$ smooth and without boundary. For $p = 2$, the $L^p$ theory is well understood and the $L^2$-Hodge decomposition can be found in [M]. However, in the case $p \neq 2$, the $L^p$ theory has yet to be fully developed. Recent applications of the $L^p$ theory of differential forms on $\mathbb{R}^n$ to both quasiconformal mappings and nonlinear elasticity continue to motivate interest in this subject. -

21. Orthonormal Bases

21. Orthonormal Bases The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

• Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$.

• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$.

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else. -
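The inner and outer products of the standard basis vectors described in this excerpt can be checked numerically. The following is a minimal sketch in plain Python; the helper names `standard_basis`, `dot` and `outer` are illustrative choices and do not come from the excerpt.

```python
def standard_basis(n):
    """Return e_1, ..., e_n as lists; e_i has a 1 in position i and 0 elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def dot(v, w):
    """Inner product v^T w of two vectors of equal length."""
    return sum(a * b for a, b in zip(v, w))


def outer(v, w):
    """Outer product v w^T, returned as a list of rows."""
    return [[a * b for b in w] for a in v]


n = 3
basis = standard_basis(n)

# Gram matrix of the basis: entry (i, j) is e_i^T e_j, the Kronecker delta.
gram = [[dot(ei, ej) for ej in basis] for ei in basis]

# Pi_i = e_i e_i^T: a single 1 in the i-th diagonal position.
projections = [outer(e, e) for e in basis]
```

Here `gram` coincides with the identity matrix, matching the remark that the Kronecker delta gives its entries, and `projections[0]` is the matrix written as $\Pi_1$ above (for $n = 3$).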

NOTES on DIFFERENTIAL FORMS. PART 3: TENSORS 1. What Is A

NOTES ON DIFFERENTIAL FORMS. PART 3: TENSORS

1. What is a tensor?

Let $V$ be a finite-dimensional vector space. It could be $\mathbb{R}^n$, it could be the tangent space to a manifold at a point, or it could just be an abstract vector space. A $k$-tensor is a map $T : V \times \cdots \times V \to \mathbb{R}$ (where there are $k$ factors of $V$) that is linear in each factor. That is, for fixed $\vec{v}_2, \ldots, \vec{v}_k$, $T(\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_{k-1}, \vec{v}_k)$ is a linear function of $\vec{v}_1$, and for fixed $\vec{v}_1, \vec{v}_3, \ldots, \vec{v}_k$, $T(\vec{v}_1, \ldots, \vec{v}_k)$ is a linear function of $\vec{v}_2$, and so on. The space of $k$-tensors on $V$ is denoted $T^k(V^*)$.

Examples:

• If $V = \mathbb{R}^n$, then the inner product $P(\vec{v}, \vec{w}) = \vec{v} \cdot \vec{w}$ is a 2-tensor. For fixed $\vec{v}$ it's linear in $\vec{w}$, and for fixed $\vec{w}$ it's linear in $\vec{v}$.

• If $V = \mathbb{R}^n$, $D(\vec{v}_1, \ldots, \vec{v}_n) = \det \begin{pmatrix} \vec{v}_1 & \cdots & \vec{v}_n \end{pmatrix}$ is an $n$-tensor.

• If $V = \mathbb{R}^n$, $\mathit{Three}(\vec{v}) = $ "the 3rd entry of $\vec{v}$" is a 1-tensor.

• A 0-tensor is just a number. It requires no inputs at all to generate an output.

Note that the definition of tensor says nothing about how things behave when you rotate vectors or permute their order. The inner product $P$ stays the same when you swap the two vectors, but the determinant $D$ changes sign when you swap two vectors. Both are tensors. For a 1-tensor like $\mathit{Three}$, permuting the order of entries doesn't even make sense!

Let $\{\vec{b}_1, \ldots, \vec{b}_n\}$ be a basis for $V$. -
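The tensor examples in this excerpt (inner product, determinant, third entry) can be spot-checked on sample vectors. The sketch below is plain Python restricted to $\mathbb{R}^2$ for the two-argument examples; the function names are hypothetical, and a check on one sample illustrates linearity in a slot without proving it.

```python
def add(v, w):
    """Componentwise sum of two vectors."""
    return [a + b for a, b in zip(v, w)]


def scale(c, v):
    """Scalar multiple c * v."""
    return [c * a for a in v]


def inner(v, w):
    """The 2-tensor P(v, w) = v . w."""
    return sum(a * b for a, b in zip(v, w))


def det2(v, w):
    """The 2-tensor D(v, w): determinant of the 2x2 matrix with columns v, w."""
    return v[0] * w[1] - v[1] * w[0]


def third_entry(v):
    """The 1-tensor Three(v): the 3rd entry of v."""
    return v[2]


def linear_in_first_slot(T, u, v, w, c):
    """Spot-check T(c*u + v, w) == c*T(u, w) + T(v, w) for one sample."""
    return T(add(scale(c, u), v), w) == c * T(u, w) + T(v, w)


u, v, w, c = [1, 2], [3, -1], [2, 5], 4
both_linear = linear_in_first_slot(inner, u, v, w, c) and linear_in_first_slot(det2, u, v, w, c)
inner_is_symmetric = inner(u, w) == inner(w, u)     # P is unchanged by a swap
det_is_antisymmetric = det2(u, w) == -det2(w, u)    # D changes sign under a swap
```

Integer inputs keep the comparisons exact; with floating-point vectors a tolerance would be needed instead of `==`.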

Killing Spinor-Valued Forms and the Cone Construction

ARCHIVUM MATHEMATICUM (BRNO) Tomus 52 (2016), 341–355 KILLING SPINOR-VALUED FORMS AND THE CONE CONSTRUCTION Petr Somberg and Petr Zima

Abstract. On a pseudo-Riemannian manifold M we introduce a system of partial differential Killing type equations for spinor-valued differential forms, and study their basic properties. We discuss the relationship between solutions of Killing equations on M and parallel fields on the metric cone over M for spinor-valued forms.

1. Introduction The subject of the present article are the systems of over-determined partial differential equations for spinor-valued differential forms, classified as a type of Killing equations. The solution spaces of these systems of PDE’s are termed Killing spinor-valued differential forms. A central question in geometry asks for pseudo-Riemannian manifolds admitting non-trivial solutions of Killing type equations, namely how the properties of Killing spinor-valued forms relate to the underlying geometric structure for which they can occur. Killing spinor-valued forms are closely related to Killing spinors and Killing forms with Killing vectors as a special example. Killing spinors are both twistor spinors and eigenspinors for the Dirac operator, and real Killing spinors realize the limit case in the eigenvalue estimates for the Dirac operator on compact Riemannian spin manifolds of positive scalar curvature. There is a classification of complete simply connected Riemannian manifolds equipped with real Killing spinors, leading to the construction of manifolds with the exceptional holonomy groups G2 and Spin(7), see [8], [1]. Killing vector fields on a pseudo-Riemannian manifold are the infinitesimal generators of isometries, hence they influence its geometrical properties. -

1.2 Topological Tensor Calculus

PH211 Physical Mathematics Fall 2019

1.2 Topological tensor calculus

1.2.1 Tensor fields

Finite displacements in Euclidean space can be represented by arrows and have a natural vector space structure, but finite displacements in more general curved spaces, such as on the surface of a sphere, do not. However, an infinitesimal neighborhood of a point in a smooth curved space looks like an infinitesimal neighborhood of Euclidean space, and infinitesimal displacements $d\vec{x}$ retain the vector space structure of displacements in Euclidean space. An infinitesimal neighborhood of a point can be infinitely rescaled to generate a finite vector space, called the tangent space, at the point. A vector lives in the tangent space of a point. Note that vectors do not stretch from one point to another, and vectors at different points live in different tangent spaces and so cannot be added.

Figure 1.2.1: A vector in the tangent space of a point.

For example, rescaling the infinitesimal displacement $d\vec{x}$ by dividing it by the infinitesimal scalar $dt$ gives the velocity

$$\vec{v} = \frac{d\vec{x}}{dt} \tag{1.2.1}$$

which is a vector. Similarly, we can picture the covector $\nabla\phi$ as the infinitesimal contours of $\phi$ in a neighborhood of a point, infinitely rescaled to generate a finite covector in the point's cotangent space. More generally, infinitely rescaling the neighborhood of a point generates the tensor space and its algebra at the point. The tensor space contains the tangent and cotangent spaces as vector subspaces. A tensor field is something that takes tensor values at every point in a space. -

Vector Bundles on Projective Space

Vector Bundles on Projective Space Takumi Murayama December 1, 2013

1 Preliminaries on vector bundles

Let $X$ be a (quasi-projective) variety over $k$. We follow [Sha13, Chap. 6, §1.2].

Definition. A family of vector spaces over $X$ is a morphism of varieties $\pi : E \to X$ such that for each $x \in X$, the fiber $E_x := \pi^{-1}(x)$ is isomorphic to a vector space $\mathbb{A}^r_{k(x)}$. A morphism of a family of vector spaces $\pi : E \to X$ and $\pi' : E' \to X$ is a morphism $f : E \to E'$ such that the following diagram commutes:

$$\begin{array}{ccc} E & \xrightarrow{\;f\;} & E' \\ {\scriptstyle \pi}\searrow & & \swarrow{\scriptstyle \pi'} \\ & X & \end{array}$$

and the map $f_x : E_x \to E'_x$ is linear over $k(x)$. $f$ is an isomorphism if $f_x$ is an isomorphism for all $x$.

A vector bundle is a family of vector spaces that is locally trivial, i.e., for each $x \in X$, there exists a neighborhood $U \ni x$ such that there is an isomorphism $\varphi : \pi^{-1}(U) \xrightarrow{\sim} U \times \mathbb{A}^r$ that is an isomorphism of families of vector spaces by the following diagram:

$$\begin{array}{ccc} \pi^{-1}(U) & \xrightarrow[\;\sim\;]{\;\varphi\;} & U \times \mathbb{A}^r \\ {\scriptstyle \pi}\searrow & & \swarrow{\scriptstyle \mathrm{pr}_1} \\ & U & \end{array} \tag{1.1}$$

where $\mathrm{pr}_1$ denotes the first projection. We call $\pi^{-1}(U) \to U$ the restriction of the vector bundle $\pi : E \to X$ onto $U$, denoted by $E|_U$. $r$ is locally constant, hence is constant on every irreducible component of $X$. If it is constant everywhere on $X$, we call $r$ the rank of the vector bundle.

The following lemma tells us how local trivializations of a vector bundle glue together on the entire space $X$. -

Determinants Math 122 Calculus III D Joyce, Fall 2012

Determinants Math 122 Calculus III D Joyce, Fall 2012

What they are. A determinant is a value associated to a square array of numbers, that square array being called a square matrix. For example, here are determinants of a general 2 × 2 matrix and a general 3 × 3 matrix.

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$

$$\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - afh - bdi.$$

The determinant of a matrix $A$ is usually denoted $|A|$ or $\det(A)$. You can think of the rows of the determinant as being vectors. For the 3 × 3 matrix above, the vectors are $u = (a, b, c)$, $v = (d, e, f)$, and $w = (g, h, i)$. Then the determinant is a value associated to $n$ vectors in $\mathbb{R}^n$.

There's a general definition for $n \times n$ determinants. It's a particular signed sum of products of $n$ entries in the matrix where each product is of one entry in each row and column. The two ways you can choose one entry in each row and column of the 2 × 2 matrix give you the two products $ad$ and $bc$. There are six ways of choosing one entry in each row and column in a 3 × 3 matrix, and generally, there are $n!$ ways in an $n \times n$ matrix. Thus, the determinant of a 4 × 4 matrix is the signed sum of 24, which is 4!, terms. In this general definition, half the terms are taken positively and half negatively. In class, we briefly saw how the signs are determined by permutations. -
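The general definition described in this excerpt, a signed sum over all ways of choosing one entry per row and column, can be written out directly. This is a minimal sketch in plain Python; it assumes the standard convention that the sign of a term is the parity of its permutation (computed here by counting inversions), and the names `sign` and `det` are illustrative. It runs over all $n!$ terms, so it is meant for small matrices only.

```python
from itertools import permutations


def sign(perm):
    """Sign of a permutation: +1 for an even number of inversions, -1 for odd."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def det(matrix):
    """Determinant as a signed sum of n! products, one entry per row and column."""
    n = len(matrix)
    total = 0
    for perm in permutations(range(n)):
        product = 1
        for row, col in enumerate(perm):
            product *= matrix[row][col]  # pick column perm[row] in this row
        total += sign(perm) * product
    return total


small = det([[1, 2], [3, 4]])                    # ad - bc = 1*4 - 2*3
larger = det([[1, 2, 3], [4, 5, 6], [7, 8, 10]])  # six signed terms
terms_in_4x4 = len(list(permutations(range(4))))  # 4! choices for a 4 x 4 matrix
```

For the 3 × 3 input, the six terms are exactly $aei + bfg + cdh - ceg - afh - bdi$ with the entries substituted.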

Matrices and Tensors

APPENDIX MATRICES AND TENSORS

A.1. INTRODUCTION AND RATIONALE

The purpose of this appendix is to present the notation and most of the mathematical techniques that are used in the body of the text. The audience is assumed to have been through several years of college-level mathematics, which included the differential and integral calculus, differential equations, functions of several variables, partial derivatives, and an introduction to linear algebra. Matrices are reviewed briefly, and determinants, vectors, and tensors of order two are described. The application of this linear algebra to material that appears in undergraduate engineering courses on mechanics is illustrated by discussions of concepts like the area and mass moments of inertia, Mohr’s circles, and the vector cross and triple scalar products. The notation, as far as possible, will be a matrix notation that is easily entered into existing symbolic computational programs like Maple, Mathematica, Matlab, and Mathcad. The desire to represent the components of three-dimensional fourth-order tensors that appear in anisotropic elasticity as the components of six-dimensional second-order tensors and thus represent these components in matrices of tensor components in six dimensions leads to the nontraditional part of this appendix. This is also one of the nontraditional aspects in the text of the book, but a minor one. This is described in §A.11, along with the rationale for this approach.

A.2. DEFINITION OF SQUARE, COLUMN, AND ROW MATRICES

An r-by-c matrix, M, is a rectangular array of numbers consisting of r rows and c columns: -

28. Exterior Powers

28. Exterior powers

28.1 Desiderata
28.2 Definitions, uniqueness, existence
28.3 Some elementary facts
28.4 Exterior powers $\bigwedge^i f$ of maps
28.5 Exterior powers of free modules
28.6 Determinants revisited
28.7 Minors of matrices
28.8 Uniqueness in the structure theorem
28.9 Cartan's lemma
28.10 Cayley-Hamilton Theorem
28.11 Worked examples

While many of the arguments here have analogues for tensor products, it is worthwhile to repeat these arguments with the relevant variations, both for practice, and to be sensitive to the differences.

1. Desiderata

Again, we review missing items in our development of linear algebra. We are missing a development of determinants of matrices whose entries may be in commutative rings, rather than fields. We would like an intrinsic definition of determinants of endomorphisms, rather than one that depends upon a choice of coordinates, even if we eventually prove that the determinant is independent of the coordinates. We anticipate that Artin's axiomatization of determinants of matrices should be mirrored in much of what we do here. We want a direct and natural proof of the Cayley-Hamilton theorem. Linear algebra over fields is insufficient, since the introduction of the indeterminate x in the definition of the characteristic polynomial takes us outside the class of vector spaces over fields. We want to give a conceptual proof for the uniqueness part of the structure theorem for finitely-generated modules over principal ideal domains. Multi-linear algebra over fields is surely insufficient for this.

2. Definitions, uniqueness, existence

Let R be a commutative ring with 1.