Risk of Human System Integration Architecture

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Soyuz TMA-11 / Expedition 16 Mission Manual

Soyuz TMA-11 / Expedition 16 Mission Manual. SOYUZ TMA-11 – EXPEDITION 16, by Philippe VOLVERT. CONTENTS: I. Crew presentation; II. Mission presentation; III. The Soyuz spacecraft; IV. Previous ISS crews; V. Launch timeline; VI. Docking procedures; VII. Return procedures; VIII. Schedule; IX. Sources. Note that all times in this document are given in GMT. I. CREW PRESENTATION. Expedition 15 crew. Fyodor YURCHIKHIN (ISS commander). Place and date of birth: 03/01/1959; Batumi (Georgia). Family status: married, 2 children. Education: graduate degree in economics from the Moscow Service State University. Professional status: engineer, with RKKE since 1993. Roskosmos: selected on 28/07/1997 (RKKE-13). Previous flights: STS-112 (07/10/2002 to 18/10/2002), totalling 10 days 19 h 58 min. Oleg KOTOV (flight engineer). Place and date of birth: 27/10/1965; Simferopol (Ukraine). Family status: married, 2 children. Education: doctorate in medicine from the Sergei M. Kirov Military Medicine Academy. Professional status: Colonel, Russian Air Force, working at the cosmonaut training center, TsPK. Roskosmos: selected on 09/02/1996 (RKKE-12). Previous flights: none. Clayton Conrad ANDERSON (ISS flight engineer). Place and date of birth: 23/02/1959; Omaha (Nebraska). Family status: married, 2 children. Education: bachelor's degree in physics from Hastings College, master's degree in aerospace engineering from Iowa State University. Professional status: director of the emergency operations center at NASA. NASA: selected on 04/06/1998 (Group). Previous flights: none. Expedition 16 / Soyuz TMA-11 crew. Peggy A. -

Maintenance Strategy and Its Importance in Rocket Launching System-An Indian Prospective

ISSN (Online): 2319-8753, ISSN (Print): 2347-6710. International Journal of Innovative Research in Science, Engineering and Technology (An ISO 3297: 2007 Certified Organization), Vol. 5, Issue 5, May 2016. Maintenance Strategy and its Importance in Rocket Launching System-An Indian Prospective. N Gayathri (1), Amit Suhane (2). (1) M. Tech Research Scholar, Mechanical Engineering, M.A.N.I.T, Bhopal, M.P. India. (2) Assistant Professor, Mechanical Engineering, M.A.N.I.T, Bhopal, M.P. India. ABSTRACT: The present paper mainly describes the maintenance strategies followed at rocket launching systems in India. A launch pad is an above-ground platform from which a rocket or space vehicle is vertically launched. The potential for advanced rocket launch vehicles to meet the challenging operational and performance demands of space transportation in the early 21st century is examined. Space transportation requirements from recent studies, underscoring the need for growth in capacity for an increasing diversity of space activities and the need for significant reductions in operational costs, are reviewed. Maintenance strategy concepts based on moderate levels of evolutionary advanced technology are described. The vehicles provide a broad range of attractive concept alternatives with the potential to meet demanding operational and maintenance goals and the flexibility to satisfy a variety of vehicle architecture, mission, vehicle concept, and technology options. KEY WORDS: Preventive Maintenance, Rocket Launch Pad. I. INTRODUCTION A. ROCKET LAUNCH PAD A launch pad is an above-ground platform from which a rocket or space vehicle is vertically launched. A launch complex is a facility that includes, and provides the required support for, one or more launch pads. -

Mechanisms for Space Applications

Mechanisms for space applications. M. Meftah a,*, A. Irbah a, R. Le Letty b, M. Barré c, S. Pasquarella d, M. Bokaie e, A. Bataille b, and G. Poiet a. a CNRS/INSU, LATMOS-IPSL, Université Versailles St-Quentin, Guyancourt, France; b Cedrat Technologies SA, 15 Ch. de Malacher, 38246 Meylan, France; c Thales Avionics Electrical Motors, 78700 Conflans Sainte-Honorine, France; d Vincent Associates, 803 Linden Ave., Rochester, NY 14625, United States; e TiNi Aerospace, 2505 Kerner Blvd., San Rafael, CA 94901, United States. ABSTRACT All space instruments contain mechanisms or moving mechanical assemblies that must move (sliding, rolling, rotating, or spinning), and their successful operation is usually mission-critical. Generally, mechanisms are not redundant and therefore represent potential single point failure modes. Several space missions have suffered anomalies or failures due to problems in applying space mechanisms technology. Mechanisms require a specific qualification through a dedicated test campaign. This paper covers the design, development, testing, production, and in-flight experience of the PICARD/SODISM mechanisms. PICARD is a space mission dedicated to the study of the Sun. The PICARD satellite was successfully launched on June 15, 2010 on a DNEPR launcher from Dombarovskiy Cosmodrome, near Yasny (Russia). SODISM (SOlar Diameter Imager and Surface Mapper) is an 11 cm Ritchey-Chretien imaging telescope, taking solar images at five wavelengths. SODISM uses several mechanisms (a system to unlock the door at the entrance of the instrument, a system to open/close the door using a stepper motor, two filter wheels using a stepper motor, and a mechanical shutter). For the fine pointing, SODISM uses three piezoelectric devices acting on the primary mirror of the telescope. -

15th Annual FAA Commercial Space Transportation Conference: Speakers

15TH ANNUAL FAA COMMERCIAL SPACE TRANSPORTATION CONFERENCE SPEAKERS. COMMERCIAL SPACE TRANSPORTATION, http://www.faa.gov/go/ast, 15-16 FEBRUARY 2012, HQ-12-0163.INDD

John R. Allen: Dr. John R. Allen serves as the Program Executive for Crew Health and Safety at NASA Headquarters, Washington DC, where he oversees the space medicine activities conducted at the Johnson Space Center, Houston, Texas. Dr. Allen received a B.A. in Speech Communication from the University of Maryland (1975), an M.A. in Audiology/Speech Pathology from The Catholic University of America (1977), and a Ph.D. in Audiology and Bioacoustics from Baylor College of Medicine (1996). Upon completion of his Master’s degree, he worked for the Easter Seals Treatment Center in Rockville, Maryland as an audiologist and speech-language pathologist and received certification in both areas. He joined the US Air Force in 1980, serving as Chief, Audiology at Andrews AFB, Maryland, and at the Wiesbaden Medical Center, Germany, and as Chief, Otolaryngology Services at the Aeromedical Consultation Service, Brooks AFB, Texas, where ...

Christine Anderson: Christine Anderson is the Executive Director of the New Mexico Spaceport Authority. She is responsible for the development and operation of the first purpose-built commercial spaceport, Spaceport America. She is a recently retired Air Force civilian with 30 years of service. She was a member of the Senior Executive Service, the civilian equivalent of the military rank of General officer. Anderson was the founding Director of the Space Vehicles Directorate at the Air Force Research Laboratory, Kirtland Air Force Base, New Mexico. She also served as the Director of the Space Technology Directorate at the Air Force Phillips Laboratory at Kirtland, and as the Director of the Military Satellite Communications Joint Program Office at the Air Force Space and Missile Systems Center in Los Angeles, where she directed the development, acquisition and execution of a $50 billion portfolio. -

Final EA for the Launch and Reentry of Spaceshiptwo Reusable Suborbital Rockets at the Mojave Air and Space Port

Final Environmental Assessment for the Launch and Reentry of SpaceShipTwo Reusable Suborbital Rockets at the Mojave Air and Space Port May 2012 HQ-121575 DEPARTMENT OF TRANSPORTATION Federal Aviation Administration Office of Commercial Space Transportation; Finding of No Significant Impact AGENCY: Federal Aviation Administration (FAA) ACTION: Finding of No Significant Impact (FONSI) SUMMARY: The FAA Office of Commercial Space Transportation (AST) prepared a Final Environmental Assessment (EA) in accordance with the National Environmental Policy Act of 1969, as amended (NEPA; 42 United States Code 4321 et seq.), Council on Environmental Quality NEPA implementing regulations (40 Code of Federal Regulations parts 1500 to 1508), and FAA Order 1050.1E, Change 1, Environmental Impacts: Policies and Procedures, to evaluate the potential environmental impacts of issuing experimental permits and/or launch licenses to operate SpaceShipTwo reusable suborbital rockets and WhiteKnightTwo carrier aircraft at the Mojave Air and Space Port in Mojave, California. After reviewing and analyzing currently available data and information on existing conditions and the potential impacts of the Proposed Action, the FAA has determined that issuing experimental permits and/or launch licenses to operate SpaceShipTwo and WhiteKnightTwo at the Mojave Air and Space Port would not significantly impact the quality of the human environment. Therefore, preparation of an Environmental Impact Statement is not required, and the FAA is issuing this FONSI. The FAA made this determination in accordance with all applicable environmental laws. The Final EA is incorporated by reference in this FONSI. 
FOR A COPY OF THE EA AND FONSI: Visit the following internet address: http://www.faa.gov/about/office_org/headquarters_offices/ast/environmental/review/permits/ or contact Daniel Czelusniak, Environmental Program Lead, Federal Aviation Administration, 800 Independence Ave., SW, Suite 325, Washington, DC 20591; email [email protected]; or phone (202) 267-5924. -

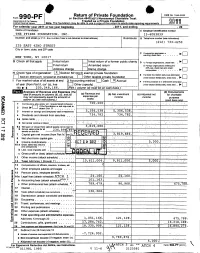

Return of Private Foundation

Form 990-PF, Return of Private Foundation or Section 4947(a)(1) Nonexempt Charitable Trust Treated as a Private Foundation. OMB No. 1545-0052. Department of the Treasury, Internal Revenue Service. Note: The foundation may be able to use a copy of this return to satisfy state reporting requirements. For calendar year 2011. Name of foundation: THE PFIZER FOUNDATION, INC. A. Employer identification number: 13-6083839. Number and street: 235 EAST 42ND STREET. City or town, state, and ZIP code: NEW YORK, NY 10017. B. Telephone number: (212) 733-4250. H. Type of organization: Section 501(c)(3) exempt private foundation (checked). J. Accounting method: Accrual (checked). Part I, Analysis of Revenue and Expenses, columns: (a) Revenue and expenses per books; (b) Net investment income; (c) Adjusted net income; (d) Disbursements for charitable purposes (cash basis only). Line 1: Contributions, gifts, grants, etc. -

Solar Radius Determined from PICARD/SODISM Observations and Extremely Weak Wavelength Dependence in the Visible and the Near-Infrared

A&A 616, A64 (2018), https://doi.org/10.1051/0004-6361/201832159, © ESO 2018, Astronomy & Astrophysics. Solar radius determined from PICARD/SODISM observations and extremely weak wavelength dependence in the visible and the near-infrared. M. Meftah (1), T. Corbard (2), A. Hauchecorne (1), F. Morand (2), R. Ikhlef (2), B. Chauvineau (2), C. Renaud (2), A. Sarkissian (1), and L. Damé (1). (1) Université Paris Saclay, Sorbonne Université, UVSQ, CNRS, LATMOS, 11 Boulevard d’Alembert, 78280 Guyancourt, France, e-mail: [email protected] (2) Université de la Côte d’Azur, Observatoire de la Côte d’Azur (OCA), CNRS, Boulevard de l’Observatoire, 06304 Nice, France. Received 23 October 2017 / Accepted 9 May 2018. ABSTRACT Context. In 2015, the International Astronomical Union (IAU) passed Resolution B3, which defined a set of nominal conversion constants for stellar and planetary astronomy. Resolution B3 defined a new value of the nominal solar radius (RN = 695 700 km) that is different from the canonical value used until now (695 990 km). The nominal solar radius is consistent with helioseismic estimates. Recent results obtained from ground-based instruments, balloon flights, or space-based instruments highlight solar radius values that are significantly different. These results are related to the direct measurements of the photospheric solar radius, which are mainly based on the inflection point position methods. The discrepancy between the seismic radius and the photospheric solar radius can be explained by the difference between the height at disk center and the inflection point of the intensity profile on the solar limb. At 535.7 nm (photosphere), there may be a difference of 330 km between the two definitions of the solar radius. -

U.S. Human Space Flight Safety Record As of July 20, 2021

U.S. Human Space Flight Safety Record (As of 20 July 2021)

| Launch Type | Total # of People on Space Flight | Total # People Died or Seriously Injured [3] | Total # of Human Space Flights | Total # of Catastrophic Failures [6] |
| --- | --- | --- | --- | --- |
| Orbital (Total) | 927 [1] | 17 | 166 [4] | 3 |
| Suborbital (Total) | 233 [2] | 3 | 213 [5] | 2 |
| Total | 1160 | 20 | 379 | 5 |

§ 460.45(c) An operator must inform each space flight participant of the safety record of all launch or reentry vehicles that have carried one or more persons on board, including both U.S. government and private sector vehicles. This information must include: (1) The total number of people who have been on a suborbital or orbital space flight and the total number of people who have died or been seriously injured on these flights; and (2) The total number of launches and reentries conducted with people on board and the number of catastrophic failures of those launches and reentries. Federal Aviation Administration, AST Commercial Space Transportation.

Footnotes

1. People on orbital space flights include Mercury (Atlas) (4), Gemini (20), Apollo (36), Skylab (9), Space Shuttle (852), and Crew Dragon (6).
   a. Occupants are counted even if they flew on only the launch or reentry portion. The Space Shuttle launched 817 humans and picked up 35 humans from MIR and the International Space Station.
   b. Occupants are counted once reentry is complete.
2. People on suborbital space flights include X-15 (169), M2 (24), Mercury (Redstone) (2), SpaceShipOne (5), SpaceShipTwo (29), and New Shepard System (4).
   a. Only occupants on the rocket-powered space bound vehicles are counted per safety record criterion #11. -

Virgin Galactic: The First Ten Years

Virgin Galactic: The First Ten Years. Other Springer-Praxis books of related interest by Erik Seedhouse: Tourists in Space: A Practical Guide, 2008, ISBN: 978-0-387-74643-2; Lunar Outpost: The Challenges of Establishing a Human Settlement on the Moon, 2008, ISBN: 978-0-387-09746-6; Martian Outpost: The Challenges of Establishing a Human Settlement on Mars, 2009, ISBN: 978-0-387-98190-1; The New Space Race: China vs. the United States, 2009, ISBN: 978-1-4419-0879-7; Prepare for Launch: The Astronaut Training Process, 2010, ISBN: 978-1-4419-1349-4; Ocean Outpost: The Future of Humans Living Underwater, 2010, ISBN: 978-1-4419-6356-7; Trailblazing Medicine: Sustaining Explorers During Interplanetary Missions, 2011, ISBN: 978-1-4419-7828-8; Interplanetary Outpost: The Human and Technological Challenges of Exploring the Outer Planets, 2012, ISBN: 978-1-4419-9747-0; Astronauts for Hire: The Emergence of a Commercial Astronaut Corps, 2012, ISBN: 978-1-4614-0519-1; Pulling G: Human Responses to High and Low Gravity, 2013, ISBN: 978-1-4614-3029-2; SpaceX: Making Commercial Spaceflight a Reality, 2013, ISBN: 978-1-4614-5513-4; Suborbital: Industry at the Edge of Space, 2014, ISBN: 978-3-319-03484-3; Tourists in Space: A Practical Guide, Second Edition, 2014, ISBN: 978-3-319-05037-9. Erik Seedhouse, Virgin Galactic: The First Ten Years. Erik Seedhouse, Astronaut Instructor, Sandefjord, Vestfold, Norway. SPRINGER-PRAXIS BOOKS IN SPACE EXPLORATION. ISBN 978-3-319-09261-4, ISBN 978-3-319-09262-1 (eBook), DOI 10.1007/978-3-319-09262-1. Springer Cham Heidelberg New York Dordrecht London. Library of Congress Control Number: 2014957708. © Springer International Publishing Switzerland 2015. This work is subject to copyright. -

Expedition 16 Adding International Science

EXPEDITION 16: ADDING INTERNATIONAL SCIENCE

The most complex phase of assembly since the International Space Station was first occupied seven years ago began when the Expedition 16 crew arrived at the orbiting outpost. During this ambitious six-month endeavor, an unprecedented three Space Shuttle crews will visit the Station delivering critical new components: the American-built “Harmony” node, the European Space Agency’s “Columbus” laboratory and Japanese “Kibo” element.

NASA Astronaut Peggy Whitson, the first woman commander of the ISS, and Russian Cosmonaut Yuri Malenchenko were launched aboard the Soyuz TMA-11 spacecraft from the Baikonur Cosmodrome in Kazakhstan on October 10. The two veterans of earlier missions aboard the ISS were accompanied by Dr. Sheikh Muzaphar Shukor, an orthopedic surgeon and the first Malaysian to fly in space.

Two days after launch, the Soyuz docked to the Space Station, joining Expedition 15 Commander Fyodor Yurchikhin, Oleg Kotov, both of Russia, and NASA Flight Engineer Clayton Anderson. Shukor spent nine days on the ISS, returning to Earth in the Soyuz TMA-10 on October 21 with Yurchikhin and Kotov, who had been aboard the station since April 9.

Image caption: The International Space Station is seen by the crew of STS-118 as Space Shuttle Endeavour moves away.

CREW PROFILE

Peggy Whitson (Ph. D.), Expedition 16 Commander. Born: February 9, 1960, Mount Ayr, Iowa. Education: Graduated with a bachelor's degree in biology/chemistry from Iowa Wesleyan College, 1981, and a doctorate in biochemistry from Rice University, 1985. Experience: Selected as an astronaut in 1996, Whitson served as a Science Officer during Expedition 5. -

Listening Patterns – 2 About the Study Creating the Format Groups

SRG Public Radio Profile. The Public Radio Format Study: Listening Patterns. A Six-Year Analysis of Performance and Change By Station Format. By Thomas J. Thomas and Theresa R. Clifford, December 2005. STATION RESOURCE GROUP, 6935 Laurel Avenue, Takoma Park, MD 20912, 301.270.2617, www.srg.org. Each week the 393 public radio organizations supported by the Corporation for Public Broadcasting reach some 27 million listeners. Most analyses of public radio listening examine the performance of individual stations within this large mix, the contributions of specific national programs, or aggregate numbers for the system as a whole. This report takes a different approach. Through an extensive, multi-year study of 228 stations that generate about 80% of public radio’s audience, we review patterns of listening to groups of stations categorized by the formats that they present. We find that stations that pursue different format strategies – news, classical, jazz, AAA, and the principal combinations of these – have experienced significantly different patterns of audience growth in recent years and important differences in key audience behaviors such as loyalty and time spent listening. This quantitative study complements qualitative research that the Station Resource Group, in partnership with Public Radio Program Directors, and others have pursued on the values and benefits listeners perceive in different formats and format combinations. Key findings of The Public Radio Format Study include: • In a time of relentless news cycles and a near abandonment of news by many commercial stations, public radio’s news and information stations have seen a 55% increase in their average audience from Spring 1999 to Fall 2004. -

Highlights in Space 2010

International Astronautical Federation Committee on Space Research International Institute of Space Law 94 bis, Avenue de Suffren c/o CNES 94 bis, Avenue de Suffren UNITED NATIONS 75015 Paris, France 2 place Maurice Quentin 75015 Paris, France Tel: +33 1 45 67 42 60 Fax: +33 1 42 73 21 20 Tel. +33 1 44 76 75 10 E-mail: [email protected] E-mail: [email protected] Fax. +33 1 44 76 74 37 URL: www.iislweb.com OFFICE FOR OUTER SPACE AFFAIRS URL: www.iafastro.com E-mail: [email protected] URL: http://cosparhq.cnes.fr Highlights in Space 2010 Prepared in cooperation with the International Astronautical Federation, the Committee on Space Research and the International Institute of Space Law The United Nations Office for Outer Space Affairs is responsible for promoting international cooperation in the peaceful uses of outer space and assisting developing countries in using space science and technology. United Nations Office for Outer Space Affairs P. O. Box 500, 1400 Vienna, Austria Tel: (+43-1) 26060-4950 Fax: (+43-1) 26060-5830 E-mail: [email protected] URL: www.unoosa.org United Nations publication Printed in Austria USD 15 Sales No. E.11.I.3 ISBN 978-92-1-101236-1 ST/SPACE/57 V.11-80239, January 2011, 775 UNITED NATIONS OFFICE FOR OUTER SPACE AFFAIRS UNITED NATIONS OFFICE AT VIENNA Highlights in Space 2010 Prepared in cooperation with the International Astronautical Federation, the Committee on Space Research and the International Institute of Space Law Progress in space science, technology and applications, international cooperation and space law UNITED NATIONS New York, 2011 United Nations publication Sales No.