How to Conduct Meta-Analysis: a Basic Tutorial

Recommended publications

-

Adaptive Clinical Trials: an Introduction

Adaptive clinical trials: an introduction What are the advantages and disadvantages of adaptive clinical trial designs? How and why were Introduction adaptive clinical Adaptive clinical trial design is trials developed? becoming a hot topic in healthcare research, with some researchers In 2004, the FDA published a report arguing that adaptive trials have the on the problems faced by the potential to get new drugs to market scientific community in developing quicker. In this article, we explain new medical treatments.2 The what adaptive trials are and why they report highlighted that the pace of were developed, and we explore both innovation in biomedical science is the advantages of adaptive designs outstripping the rate of advances and the concerns being raised by in the available technologies and some in the healthcare community. tools for evaluating new treatments. Outdated tools are being used to assess new treatments and there What are adaptive is a critical need to improve the effectiveness and efficiency of clinical trials? clinical trials.2 The current process for developing Adaptive clinical trials enable new treatments is expensive, takes researchers to change an aspect a long time and in some cases, the of a trial design at an interim development process has to be assessment, while controlling stopped after significant amounts the rate of type 1 errors.1 Interim of time and resources have been assessments can help to determine invested.2 In 2006, the FDA published whether a trial design is the most a “Critical Path Opportunities -

Receiver Operating Characteristic (ROC) Curve: Practical Review for Radiologists

Receiver Operating Characteristic (ROC) Curve: Practical Review for Radiologists Seong Ho Park, MD1 The receiver operating characteristic (ROC) curve, which is defined as a plot of Jin Mo Goo, MD1 test sensitivity as the y coordinate versus its 1-specificity or false positive rate Chan-Hee Jo, PhD2 (FPR) as the x coordinate, is an effective method of evaluating the performance of diagnostic tests. The purpose of this article is to provide a nonmathematical introduction to ROC analysis. Important concepts involved in the correct use and interpretation of this analysis, such as smooth and empirical ROC curves, para- metric and nonparametric methods, the area under the ROC curve and its 95% confidence interval, the sensitivity at a particular FPR, and the use of a partial area under the ROC curve are discussed. Various considerations concerning the collection of data in radiological ROC studies are briefly discussed. An introduc- tion to the software frequently used for performing ROC analyses is also present- ed. he receiver operating characteristic (ROC) curve, which is defined as a Index terms: plot of test sensitivity as the y coordinate versus its 1-specificity or false Diagnostic radiology positive rate (FPR) as the x coordinate, is an effective method of evaluat- Receiver operating characteristic T (ROC) curve ing the quality or performance of diagnostic tests, and is widely used in radiology to Software reviews evaluate the performance of many radiological tests. Although one does not necessar- Statistical analysis ily need to understand the complicated mathematical equations and theories of ROC analysis, understanding the key concepts of ROC analysis is a prerequisite for the correct use and interpretation of the results that it provides. -

Quasi-Experimental Studies in the Fields of Infection Control and Antibiotic Resistance, Ten Years Later: a Systematic Review

HHS Public Access Author manuscript Author ManuscriptAuthor Manuscript Author Infect Control Manuscript Author Hosp Epidemiol Manuscript Author . Author manuscript; available in PMC 2019 November 12. Published in final edited form as: Infect Control Hosp Epidemiol. 2018 February ; 39(2): 170–176. doi:10.1017/ice.2017.296. Quasi-experimental Studies in the Fields of Infection Control and Antibiotic Resistance, Ten Years Later: A Systematic Review Rotana Alsaggaf, MS, Lyndsay M. O’Hara, PhD, MPH, Kristen A. Stafford, PhD, MPH, Surbhi Leekha, MBBS, MPH, Anthony D. Harris, MD, MPH, CDC Prevention Epicenters Program Department of Epidemiology and Public Health, University of Maryland School of Medicine, Baltimore, Maryland. Abstract OBJECTIVE.—A systematic review of quasi-experimental studies in the field of infectious diseases was published in 2005. The aim of this study was to assess improvements in the design and reporting of quasi-experiments 10 years after the initial review. We also aimed to report the statistical methods used to analyze quasi-experimental data. DESIGN.—Systematic review of articles published from January 1, 2013, to December 31, 2014, in 4 major infectious disease journals. METHODS.—Quasi-experimental studies focused on infection control and antibiotic resistance were identified and classified based on 4 criteria: (1) type of quasi-experimental design used, (2) justification of the use of the design, (3) use of correct nomenclature to describe the design, and (4) statistical methods used. RESULTS.—Of 2,600 articles, 173 (7%) featured a quasi-experimental design, compared to 73 of 2,320 articles (3%) in the previous review (P<.01). Moreover, 21 articles (12%) utilized a study design with a control group; 6 (3.5%) justified the use of a quasi-experimental design; and 68 (39%) identified their design using the correct nomenclature. -

Epidemiology and Biostatistics (EPBI) 1

Epidemiology and Biostatistics (EPBI) 1 Epidemiology and Biostatistics (EPBI) Courses EPBI 2219. Biostatistics and Public Health. 3 Credit Hours. This course is designed to provide students with a solid background in applied biostatistics in the field of public health. Specifically, the course includes an introduction to the application of biostatistics and a discussion of key statistical tests. Appropriate techniques to measure the extent of disease, the development of disease, and comparisons between groups in terms of the extent and development of disease are discussed. Techniques for summarizing data collected in samples are presented along with limited discussion of probability theory. Procedures for estimation and hypothesis testing are presented for means, for proportions, and for comparisons of means and proportions in two or more groups. Multivariable statistical methods are introduced but not covered extensively in this undergraduate course. Public Health majors, minors or students studying in the Public Health concentration must complete this course with a C or better. Level Registration Restrictions: May not be enrolled in one of the following Levels: Graduate. Repeatability: This course may not be repeated for additional credits. EPBI 2301. Public Health without Borders. 3 Credit Hours. Public Health without Borders is a course that will introduce you to the world of disease detectives to solve public health challenges in glocal (i.e., global and local) communities. You will learn about conducting disease investigations to support public health actions relevant to affected populations. You will discover what it takes to become a field epidemiologist through hands-on activities focused on promoting health and preventing disease in diverse populations across the globe. -

A Guide to Systematic Review and Meta-Analysis of Prediction Model Performance

RESEARCH METHODS AND REPORTING A guide to systematic review and meta-analysis of prediction model performance Thomas P A Debray,1,2 Johanna A A G Damen,1,2 Kym I E Snell,3 Joie Ensor,3 Lotty Hooft,1,2 Johannes B Reitsma,1,2 Richard D Riley,3 Karel G M Moons1,2 1Cochrane Netherlands, Validation of prediction models is diagnostic test accuracy studies. Compared to therapeu- University Medical Center tic intervention and diagnostic test accuracy studies, Utrecht, PO Box 85500 Str highly recommended and increasingly there is limited guidance on the conduct of systematic 6.131, 3508 GA Utrecht, Netherlands common in the literature. A systematic reviews and meta-analysis of primary prognosis studies. 2Julius Center for Health review of validation studies is therefore A common aim of primary prognostic studies con- Sciences and Primary Care, cerns the development of so-called prognostic predic- University Medical Center helpful, with meta-analysis needed to tion models or indices. These models estimate the Utrecht, PO Box 85500 Str 6.131, 3508 GA Utrecht, summarise the predictive performance individualised probability or risk that a certain condi- Netherlands of the model being validated across tion will occur in the future by combining information 3Research Institute for Primary from multiple prognostic factors from an individual. Care and Health Sciences, Keele different settings and populations. This Unfortunately, there is often conflicting evidence about University, Staffordshire, UK article provides guidance for the predictive performance of developed prognostic Correspondence to: T P A Debray [email protected] researchers systematically reviewing prediction models. For this reason, there is a growing Additional material is published demand for evidence synthesis of (external validation) online only. -

Biostatistics (EHCA 5367)

The University of Texas at Tyler Executive Health Care Administration MPA Program Spring 2019 COURSE NUMBER EHCA 5367 COURSE TITLE Biostatistics INSTRUCTOR Thomas Ross, PhD INSTRUCTOR INFORMATION email - [email protected] – this one is checked once a week [email protected] – this one is checked Monday thru Friday Phone - 828.262.7479 REQUIRED TEXT: Wayne Daniel and Chad Cross, Biostatistics, 10th ed., Wiley, 2013, ISBN: 978-1- 118-30279-8 COURSE DESCRIPTION This course is designed to teach students the application of statistical methods to the decision-making process in health services administration. Emphasis is placed on understanding the principles in the meaning and use of statistical analyses. Topics discussed include a review of descriptive statistics, the standard normal distribution, sampling distributions, t-tests, ANOVA, simple and multiple regression, and chi-square analysis. Students will use Microsoft Excel or other appropriate computer software in the completion of assignments. COURSE LEARNING OBJECTIVES Upon completion of this course, the student will be able to: 1. Use descriptive statistics and graphs to describe data 2. Understand basic principles of probability, sampling distributions, and binomial, Poisson, and normal distributions 3. Formulate research questions and hypotheses 4. Run and interpret t-tests and analyses of variance (ANOVA) 5. Run and interpret regression analyses 6. Run and interpret chi-square analyses GRADING POLICY Students will be graded on homework, midterm, and final exam, see weights below. ATTENDANCE/MAKE UP POLICY All students are expected to attend all of the on-campus sessions. Students are expected to participate in all online discussions in a substantive manner. If circumstances arise that a student is unable to attend a portion of the on-campus session or any of the online discussions, special arrangements must be made with the instructor in advance for make-up activity. -

Biostatistics

Biostatistics As one of the nation’s premier centers of biostatistical research, the Department of Biostatistics is dedicated to addressing high priority public health and biomedical issues and driving scientific discovery through specialized analytic techniques and catalyzing interdisciplinary research. Our world-renowned experts are engaged in developing novel statistical methods to break new ground in current research frontiers. The Department has become a leader in mission the analysis and interpretation of complex data in genetics, brain science, clinical trials, and personalized medicine. Our mission is to improve disease prevention, In these and other areas, our faculty play key roles in diagnosis, treatment, and the public’s health by public health, mental health, social science, and biomedical developing new theoretical results and applied research as collaborators and conveners. Current research statistical methods, collaborating with scientists in is wide-ranging. designing informative experiments and analyzing their data to weigh evidence and obtain valid Examples of our work: findings, and educating the next generation of biostatisticians through an innovative and relevant • Researching efficient statistical methods using large-scale curriculum, hands-on experience, and exposure biomarker data for disease risk prediction, informing to the latest in research findings and methods. clinical trial designs, discovery of personalized treatment regimes, and guiding new and more effective interventions history • Analyzing data to -

Biostatistics (BIOSTAT) 1

Biostatistics (BIOSTAT) 1 This course covers practical aspects of conducting a population- BIOSTATISTICS (BIOSTAT) based research study. Concepts include determining a study budget, setting a timeline, identifying study team members, setting a strategy BIOSTAT 301-0 Introduction to Epidemiology (1 Unit) for recruitment and retention, developing a data collection protocol This course introduces epidemiology and its uses for population health and monitoring data collection to ensure quality control and quality research. Concepts include measures of disease occurrence, common assurance. Students will demonstrate these skills by engaging in a sources and types of data, important study designs, sources of error in quarter-long group project to draft a Manual of Operations for a new epidemiologic studies and epidemiologic methods. "mock" population study. BIOSTAT 302-0 Introduction to Biostatistics (1 Unit) BIOSTAT 429-0 Systematic Review and Meta-Analysis in the Medical This course introduces principles of biostatistics and applications Sciences (1 Unit) of statistical methods in health and medical research. Concepts This course covers statistical methods for meta-analysis. Concepts include descriptive statistics, basic probability, probability distributions, include fixed-effects and random-effects models, measures of estimation, hypothesis testing, correlation and simple linear regression. heterogeneity, prediction intervals, meta regression, power assessment, BIOSTAT 303-0 Probability (1 Unit) subgroup analysis and assessment of publication -

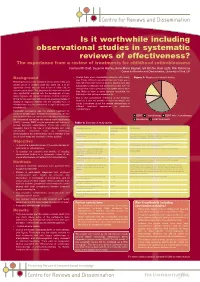

Is It Worthwhile Including Observational Studies in Systematic Reviews of Effectiveness?

CRD_mcdaid05_Poster.qxd 13/6/05 5:12 pm Page 1 Is it worthwhile including observational studies in systematic reviews of effectiveness? The experience from a review of treatments for childhood retinoblastoma Catriona Mc Daid, Suzanne Hartley, Anne-Marie Bagnall, Gill Ritchie, Kate Light, Rob Riemsma Centre for Reviews and Dissemination, University of York, UK Background • Overall there were considerable problems with quality Figure 1: Mapping of included studies (see Table). Without randomised allocation there was a Retinoblastoma is a rare malignant tumour of the retina and high risk of selection bias in all studies. Studies were also usually occurs in children under two years old. It is an susceptible to detection and performance bias, with the aggressive tumour that can lead to loss of vision and, in retrospective studies particularly susceptible as they were extreme cases, death. The prognoses for vision and survival less likely to have a study protocol specifying the have significantly improved with the development of more intervention and outcome assessments. timely diagnosis and improved treatment methods. Important • Due to the considerable limitations of the evidence clinical factors associated with prognosis are age and stage of identified, it was not possible to make meaningful and disease at diagnosis. Patients with the hereditary form of robust conclusions about the relative effectiveness of retinoblastoma may be predisposed to significant long-term different treatment approaches for childhood complications. retinoblastoma. Historically, enucleation was the standard treatment for unilateral retinoblastoma. In bilateral retinoblastoma, the eye ■ ■ ■ with the most advanced tumour was commonly removed and EBRT Chemotherapy EBRT with Chemotherapy the contralateral eye treated with external beam radiotherapy ■ Enucleation ■ Local Treatments (EBRT). -

How to Get Started with a Systematic Review in Epidemiology: an Introductory Guide for Early Career Researchers

Denison et al. Archives of Public Health 2013, 71:21 http://www.archpublichealth.com/content/71/1/21 ARCHIVES OF PUBLIC HEALTH METHODOLOGY Open Access How to get started with a systematic review in epidemiology: an introductory guide for early career researchers Hayley J Denison1*†, Richard M Dodds1,2†, Georgia Ntani1†, Rachel Cooper3†, Cyrus Cooper1†, Avan Aihie Sayer1,2† and Janis Baird1† Abstract Background: Systematic review is a powerful research tool which aims to identify and synthesize all evidence relevant to a research question. The approach taken is much like that used in a scientific experiment, with high priority given to the transparency and reproducibility of the methods used and to handling all evidence in a consistent manner. Early career researchers may find themselves in a position where they decide to undertake a systematic review, for example it may form part or all of a PhD thesis. Those with no prior experience of systematic review may need considerable support and direction getting started with such a project. Here we set out in simple terms how to get started with a systematic review. Discussion: Advice is given on matters such as developing a review protocol, searching using databases and other methods, data extraction, risk of bias assessment and data synthesis including meta-analysis. Signposts to further information and useful resources are also given. Conclusion: A well-conducted systematic review benefits the scientific field by providing a summary of existing evidence and highlighting unanswered questions. For the individual, undertaking a systematic review is also a great opportunity to improve skills in critical appraisal and in synthesising evidence. -

Guidelines for Reporting Meta-Epidemiological Methodology Research

EBM Primer Evid Based Med: first published as 10.1136/ebmed-2017-110713 on 12 July 2017. Downloaded from Guidelines for reporting meta-epidemiological methodology research Mohammad Hassan Murad, Zhen Wang 10.1136/ebmed-2017-110713 Abstract The goal is generally broad but often focuses on exam- Published research should be reported to evidence users ining the impact of certain characteristics of clinical studies on the observed effect, describing the distribu- Evidence-Based Practice with clarity and transparency that facilitate optimal tion of research evidence in a specific setting, exam- Center, Mayo Clinic, Rochester, appraisal and use of evidence and allow replication Minnesota, USA by other researchers. Guidelines for such reporting ining heterogeneity and exploring its causes, identifying are available for several types of studies but not for and describing plausible biases and providing empirical meta-epidemiological methodology studies. Meta- evidence for hypothesised associations. Unlike classic Correspondence to: epidemiological studies adopt a systematic review epidemiology, the unit of analysis for meta-epidemio- Dr Mohammad Hassan Murad, or meta-analysis approach to examine the impact logical studies is a study, not a patient. The outcomes Evidence-based Practice Center, of certain characteristics of clinical studies on the of meta-epidemiological studies are usually not clinical Mayo Clinic, 200 First Street 6–8 observed effect and provide empirical evidence for outcomes. SW, Rochester, MN 55905, USA; hypothesised associations. The unit of analysis in meta- In this guide, we adapt the items used in the PRISMA murad. mohammad@ mayo. edu 9 epidemiological studies is a study, not a patient. The statement for reporting systematic reviews and outcomes of meta-epidemiological studies are usually meta-analysis to fit the setting of meta- epidemiological not clinical outcomes. -

Adaptive Designs in Clinical Trials: Why Use Them, and How to Run and Report Them Philip Pallmann1* , Alun W

Pallmann et al. BMC Medicine (2018) 16:29 https://doi.org/10.1186/s12916-018-1017-7 CORRESPONDENCE Open Access Adaptive designs in clinical trials: why use them, and how to run and report them Philip Pallmann1* , Alun W. Bedding2, Babak Choodari-Oskooei3, Munyaradzi Dimairo4,LauraFlight5, Lisa V. Hampson1,6, Jane Holmes7, Adrian P. Mander8, Lang’o Odondi7, Matthew R. Sydes3,SofíaS.Villar8, James M. S. Wason8,9, Christopher J. Weir10, Graham M. Wheeler8,11, Christina Yap12 and Thomas Jaki1 Abstract Adaptive designs can make clinical trials more flexible by utilising results accumulating in the trial to modify the trial’s course in accordance with pre-specified rules. Trials with an adaptive design are often more efficient, informative and ethical than trials with a traditional fixed design since they often make better use of resources such as time and money, and might require fewer participants. Adaptive designs can be applied across all phases of clinical research, from early-phase dose escalation to confirmatory trials. The pace of the uptake of adaptive designs in clinical research, however, has remained well behind that of the statistical literature introducing new methods and highlighting their potential advantages. We speculate that one factor contributing to this is that the full range of adaptations available to trial designs, as well as their goals, advantages and limitations, remains unfamiliar to many parts of the clinical community. Additionally, the term adaptive design has been misleadingly used as an all-encompassing label to refer to certain methods that could be deemed controversial or that have been inadequately implemented. 
We believe that even if the planning and analysis of a trial is undertaken by an expert statistician, it is essential that the investigators understand the implications of using an adaptive design, for example, what the practical challenges are, what can (and cannot) be inferred from the results of such a trial, and how to report and communicate the results.