1 Elementary Set Theory

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Mathematics 144 Set Theory Fall 2012 Version

MATHEMATICS 144 SET THEORY FALL 2012 VERSION

Table of Contents

I. General considerations: 1. Overview of the course; 2. Historical background and motivation; 3. Selected problems

II. Basic concepts: 1. Topics from logic; 2. Notation and first steps; 3. Simple examples

III. Constructions in set theory: 1. Boolean algebra operations; 2. Ordered pairs and Cartesian products; 3. Larger constructions; 4. A convenient assumption

IV. Relations and functions: 1. Binary relations; 2. Partial and linear orderings; 3. Functions; 4. Composite and inverse function; 5. Constructions involving functions; 6. Order types

V. Number systems and set theory: 1. The Natural Numbers and Integers; 2. Finite induction -

The Matroid Theorem

CMPSCI611: The Matroid Theorem, Lecture 5

We first review our definitions: A subset system is a set E together with a set of subsets of E, called I, such that I is closed under inclusion. This means that if X ⊆ Y and Y ∈ I, then X ∈ I. The optimization problem for a subset system (E, I) has as input a positive weight for each element of E. Its output is a set X ∈ I such that X has at least as much total weight as any other set in I. A subset system is a matroid if it satisfies the exchange property: If i and i′ are sets in I and i has fewer elements than i′, then there exists an element e ∈ i′ \ i such that i ∪ {e} ∈ I.

The Generic Greedy Algorithm. Given any finite subset system (E, I), we find a set in I as follows:

• Set X to ∅.
• Sort the elements of E by weight, heaviest first.
• For each element of E in this order, add it to X iff the result is in I.
• Return X.

Today we prove:

Theorem: For any subset system (E, I), the greedy algorithm solves the optimization problem for (E, I) if and only if (E, I) is a matroid.

Proof: We will show first that if (E, I) is a matroid, then the greedy algorithm is correct. Assume that (E, I) satisfies the exchange property. -
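The generic greedy algorithm in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `greedy`, `brute_force_best`, `weights`, and `is_independent` are hypothetical, and the two small subset systems are invented examples, not taken from the lecture. The first is a uniform matroid (every set of at most two elements is independent). The second is closed under inclusion but fails the exchange property, so greedy misses the optimum.

```python
from itertools import combinations


def greedy(elements, weights, is_independent):
    """Generic greedy algorithm on a finite subset system (E, I)."""
    chosen = set()
    # Visit the elements heaviest first.
    for e in sorted(elements, key=lambda x: weights[x], reverse=True):
        # Add e iff the enlarged set is still in I.
        if is_independent(chosen | {e}):
            chosen.add(e)
    return chosen


def brute_force_best(elements, weights, is_independent):
    """Exhaustive search for a maximum-weight independent set (tiny inputs only)."""
    best, best_weight = set(), 0
    items = list(elements)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            candidate = set(combo)
            total = sum(weights[e] for e in candidate)
            if is_independent(candidate) and total > best_weight:
                best, best_weight = candidate, total
    return best


# Hypothetical example 1: a uniform matroid, independent = at most 2 elements.
uniform_weights = {"a": 4, "b": 3, "c": 2, "d": 1}
uniform_result = greedy(uniform_weights, uniform_weights, lambda s: len(s) <= 2)

# Hypothetical example 2: I = all subsets of {a} and all subsets of {b, c}.
# I is closed under inclusion, but {a} cannot be extended from {b, c},
# so the exchange property fails.
bad_weights = {"a": 3, "b": 2, "c": 2}


def bad_independent(s):
    return s <= {"a"} or s <= {"b", "c"}


bad_greedy = greedy(bad_weights, bad_weights, bad_independent)
bad_optimum = brute_force_best(bad_weights, bad_weights, bad_independent)
```

In the second example greedy commits to the heaviest element `a` (weight 3) and can add nothing further, while `{b, c}` has weight 4. That matches the "only if" direction of the theorem: a subset system that is not a matroid admits weights on which greedy fails.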

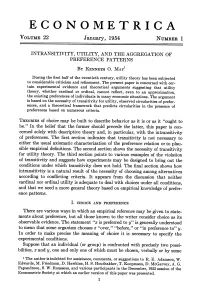

Intransitivity, Utility, and the Aggregation of Preference Patterns

ECONOMETRICA VOLUME 22 January, 1954 NUMBER 1 INTRANSITIVITY, UTILITY, AND THE AGGREGATION OF PREFERENCE PATTERNS BY KENNETH O. MAY During the first half of the twentieth century, utility theory has been subjected to considerable criticism and refinement. The present paper is concerned with certain experimental evidence and theoretical arguments suggesting that utility theory, whether cardinal or ordinal, cannot reflect, even to an approximation, the existing preferences of individuals in many economic situations. The argument is based on the necessity of transitivity for utility, observed circularities of preferences, and a theoretical framework that predicts circularities in the presence of preferences based on numerous criteria. THEORIES of choice may be built to describe behavior as it is or as it "ought to be." In the belief that the former should precede the latter, this paper is concerned solely with descriptive theory and, in particular, with the intransitivity of preferences. The first section indicates that transitivity is not necessary to either the usual axiomatic characterization of the preference relation or to plausible empirical definitions. The second section shows the necessity of transitivity for utility theory. The third section points to various examples of the violation of transitivity and suggests how experiments may be designed to bring out the conditions under which transitivity does not hold. The final section shows how intransitivity is a natural result of the necessity of choosing among alternatives according to conflicting criteria. It appears from the discussion that neither cardinal nor ordinal utility is adequate to deal with choices under all conditions, and that we need a more general theory based on empirical knowledge of preference patterns. -

Determinacy in Linear Rational Expectations Models

Journal of Mathematical Economics 40 (2004) 815–830 Determinacy in linear rational expectations models Stéphane Gauthier∗ CREST, Laboratoire de Macroéconomie (Timbre J-360), 15 bd Gabriel Péri, 92245 Malakoff Cedex, France Received 15 February 2002; received in revised form 5 June 2003; accepted 17 July 2003 Available online 21 January 2004 Abstract The purpose of this paper is to assess the relevance of rational expectations solutions to the class of linear univariate models where both the number of leads in expectations and the number of lags in predetermined variables are arbitrary. It recommends to rule out all the solutions that would fail to be locally unique, or equivalently, locally determinate. So far, this determinacy criterion has been applied to particular solutions, in general some steady state or periodic cycle. However solutions to linear models with rational expectations typically do not conform to such simple dynamic patterns but express instead the current state of the economic system as a linear difference equation of lagged states. The innovation of this paper is to apply the determinacy criterion to the sets of coefficients of these linear difference equations. Its main result shows that only one set of such coefficients, or the corresponding solution, is locally determinate. This solution is commonly referred to as the fundamental one in the literature. In particular, in the saddle point configuration, it coincides with the saddle stable (pure forward) equilibrium trajectory. © 2004 Published by Elsevier B.V. JEL classification: C32; E32 Keywords: Rational expectations; Selection; Determinacy; Saddle point property 1. Introduction The rational expectations hypothesis is commonly justified by the fact that individual forecasts are based on the relevant theory of the economic system. -

A Taste of Set Theory for Philosophers

Journal of the Indian Council of Philosophical Research, Vol. XXVII, No. 2. A Special Issue on "Logic and Philosophy Today", 143-163, 2010. Reprinted in "Logic and Philosophy Today" (edited by A. Gupta and J. v. Benthem), College Publications vol 29, 141-162, 2011. A taste of set theory for philosophers. Jouko Väänänen∗, Department of Mathematics and Statistics, University of Helsinki, and Institute for Logic, Language and Computation, University of Amsterdam. November 17, 2010. Contents: 1 Introduction; 2 Elementary set theory; 3 Cardinal and ordinal numbers (3.1 Equipollence; 3.2 Countable sets; 3.3 Ordinals; 3.4 Cardinals); 4 Axiomatic set theory; 5 Axiom of Choice; 6 Independence results; 7 Some recent work (7.1 Descriptive Set Theory; 7.2 Non well-founded set theory; 7.3 Constructive set theory); 8 Historical Remarks and Further Reading. ∗Research partially supported by grant 40734 of the Academy of Finland and by the EUROCORES LogICCC LINT programme. 1 Introduction. Originally set theory was a theory of infinity, an attempt to understand infinity in exact terms. Later it became a universal language for mathematics and an attempt to give a foundation for all of mathematics, and thereby to all sciences that are based on mathematics. -

Natural Numerosities of Sets of Tuples

TRANSACTIONS OF THE AMERICAN MATHEMATICAL SOCIETY Volume 367, Number 1, January 2015, Pages 275–292 S 0002-9947(2014)06136-9 Article electronically published on July 2, 2014 NATURAL NUMEROSITIES OF SETS OF TUPLES MARCO FORTI AND GIUSEPPE MORANA ROCCASALVO Abstract. We consider a notion of “numerosity” for sets of tuples of natural numbers that satisfies the five common notions of Euclid’s Elements, so it can agree with cardinality only for finite sets. By suitably axiomatizing such a notion, we show that, contrasting to cardinal arithmetic, the natural “Cantorian” definitions of order relation and arithmetical operations provide a very good algebraic structure. In fact, numerosities can be taken as the non-negative part of a discretely ordered ring, namely the quotient of a formal power series ring modulo a suitable (“gauge”) ideal. In particular, special numerosities, called “natural”, can be identified with the semiring of hypernatural numbers of appropriate ultrapowers of N. Introduction. In this paper we consider a notion of “equinumerosity” on sets of tuples of natural numbers, i.e. an equivalence relation, finer than equicardinality, that satisfies the celebrated five Euclidean common notions about magnitudines ([8]), including the principle that “the whole is greater than the part”. This notion preserves the basic properties of equipotency for finite sets. In particular, the natural Cantorian definitions endow the corresponding numerosities with a structure of a discretely ordered semiring, where 0 is the size of the empty set, 1 is the size of every singleton, and greater numerosity corresponds to supersets. This idea of “Euclidean” numerosity has been recently investigated by V. -

Chapter 1 Logic and Set Theory

Chapter 1 Logic and Set Theory. To criticize mathematics for its abstraction is to miss the point entirely. Abstraction is what makes mathematics work. If you concentrate too closely on too limited an application of a mathematical idea, you rob the mathematician of his most important tools: analogy, generality, and simplicity. – Ian Stewart, Does God Play Dice? The Mathematics of Chaos. In mathematics, a proof is a demonstration that, assuming certain axioms, some statement is necessarily true. That is, a proof is a logical argument, not an empirical one. One must demonstrate that a proposition is true in all cases before it is considered a theorem of mathematics. An unproven proposition for which there is some sort of empirical evidence is known as a conjecture. Mathematical logic is the framework upon which rigorous proofs are built. It is the study of the principles and criteria of valid inference and demonstrations. Logicians have analyzed set theory in great detail, formulating a collection of axioms that affords a broad enough and strong enough foundation for mathematical reasoning. The standard form of axiomatic set theory is denoted ZFC and it consists of the Zermelo-Fraenkel (ZF) axioms combined with the axiom of choice (C). Each of the axioms included in this theory expresses a property of sets that is widely accepted by mathematicians. It is unfortunately true that careless use of set theory can lead to contradictions. Avoiding such contradictions was one of the original motivations for the axiomatization of set theory. A rigorous analysis of set theory belongs to the foundations of mathematics and mathematical logic. -

Problem Set 1. The Axiom of Foundation

PROBLEM SET 1. THE AXIOM OF FOUNDATION

Early on in the book (page 6) it is indicated that throughout the formal development ‘set’ is going to mean ‘pure set’, or set whose elements, elements of elements, and so on, are all sets and not items of any other kind such as chairs or tables. This convention applies also to these problems.

1. A set y is called an epsilon-minimal element of a set x if y ∈ x, but there is no z ∈ x such that z ∈ y, or equivalently x ∩ y = ∅. The axiom of foundation, also called the axiom of regularity, asserts that any set that has any element at all (any nonempty set) has an epsilon-minimal element. Show that this axiom implies the following:
(a) There is no set x such that x ∈ x.
(b) There are no sets x and y such that x ∈ y and y ∈ x.
(c) There are no sets x and y and z such that x ∈ y and y ∈ z and z ∈ x.

2. In the book the axiom of foundation or regularity is considered only in a late chapter, and until that point no use is made of it in proofs. But some results earlier in the book become significantly easier to prove if one does use it. Show, for example, how to use it to give an easy proof of the existence for any sets x and y of a set x* such that x* and y are disjoint (have empty intersection) and there is a bijection (one-to-one onto function) from x to x*, a result called the exchange principle. -
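The definition of an epsilon-minimal element in problem 1 can be tried out on small pure sets. The following is a minimal sketch, assuming finite pure sets are modelled as nested Python `frozenset` values; the helper name `epsilon_minimal_elements` and the sample sets `empty`, `one`, and `two` are hypothetical and not part of the problem set. It only illustrates the definition and is not a solution to the problems.

```python
def epsilon_minimal_elements(x):
    """Return every y in x whose intersection with x is empty."""
    return {y for y in x if not (x & y)}


# Small pure sets built only from the empty set.
empty = frozenset()                # ∅
one = frozenset({empty})           # {∅}
two = frozenset({empty, one})      # {∅, {∅}}

# In two, the element ∅ shares no member with two, but {∅} does (namely ∅).
minimal_in_two = epsilon_minimal_elements(two)

# In {{∅}}, the single element {∅} is epsilon-minimal, because ∅ is not
# a member of {{∅}}.
singleton_of_one = frozenset({one})
minimal_in_singleton = epsilon_minimal_elements(singleton_of_one)
```

A frozenset must be built from elements that already exist, so a value of this kind cannot contain itself. The model therefore never produces the situation ruled out in part (a).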

The Axiom of Choice and Its Implications

THE AXIOM OF CHOICE AND ITS IMPLICATIONS KEVIN BARNUM Abstract. In this paper we will look at the Axiom of Choice and some of the various implications it has. These implications include a number of equivalent statements, and also some less accepted ideas. The proofs discussed will give us an idea of why the Axiom of Choice is so powerful, but also so controversial. Contents: 1. Introduction; 2. The Axiom of Choice and Its Equivalents (2.1. The Axiom of Choice and its Well-known Equivalents; 2.2. Some Other Less Well-known Equivalents of the Axiom of Choice); 3. Applications of the Axiom of Choice (3.1. Equivalence Between The Axiom of Choice and the Claim that Every Vector Space has a Basis; 3.2. Some More Applications of the Axiom of Choice); 4. Controversial Results; Acknowledgments; References. 1. Introduction. The Axiom of Choice states that for any family of nonempty disjoint sets, there exists a set that consists of exactly one element from each element of the family. It seems strange at first that such an innocuous sounding idea can be so powerful and controversial, but it certainly is both. To understand why, we will start by looking at some statements that are equivalent to the axiom of choice. Many of these equivalences are very useful, and we devote much time to one, namely, that every vector space has a basis. We go on from there to see a few more applications of the Axiom of Choice and its equivalents, and finish by looking at some of the reasons why the Axiom of Choice is so controversial. -

Formal Construction of a Set Theory in Coq

Saarland University, Faculty of Natural Sciences and Technology I, Department of Computer Science. Masters Thesis: Formal Construction of a Set Theory in Coq, submitted by Jonas Kaiser on November 23, 2012. Supervisor: Prof. Dr. Gert Smolka. Advisor: Dr. Chad E. Brown. Reviewers: Prof. Dr. Gert Smolka, Dr. Chad E. Brown. Eidesstattliche Erklärung: Ich erkläre hiermit an Eides Statt, dass ich die vorliegende Arbeit selbstständig verfasst und keine anderen als die angegebenen Quellen und Hilfsmittel verwendet habe. Statement in Lieu of an Oath: I hereby confirm that I have written this thesis on my own and that I have not used any other media or materials than the ones referred to in this thesis. Einverständniserklärung: Ich bin damit einverstanden, dass meine (bestandene) Arbeit in beiden Versionen in die Bibliothek der Informatik aufgenommen und damit veröffentlicht wird. Declaration of Consent: I agree to make both versions of my thesis (with a passing grade) accessible to the public by having them added to the library of the Computer Science Department. Saarbrücken, (Datum/Date) (Unterschrift/Signature). Acknowledgements: First of all I would like to express my sincerest gratitude towards my advisor, Chad Brown, who supported me throughout this work. His extensive knowledge and insights opened my eyes to the beauty of axiomatic set theory and foundational mathematics. We spent many hours discussing the minute details of the various constructions and he taught me the importance of mathematical rigour. Equally important was the support of my supervisor, Prof. Smolka, who first introduced me to the topic and was there whenever a question arose. -

Equivalents to the Axiom of Choice and Their Uses

EQUIVALENTS TO THE AXIOM OF CHOICE AND THEIR USES. A Thesis Presented to The Faculty of the Department of Mathematics, California State University, Los Angeles, In Partial Fulfillment of the Requirements for the Degree Master of Science in Mathematics. By James Szufu Yang. © 2015 James Szufu Yang. ALL RIGHTS RESERVED. The thesis of James Szufu Yang is approved. Mike Krebs, Ph.D. Kristin Webster, Ph.D. Michael Hoffman, Ph.D., Committee Chair. Grant Fraser, Ph.D., Department Chair. California State University, Los Angeles, June 2015. ABSTRACT: Equivalents to the Axiom of Choice and Their Uses, by James Szufu Yang. In set theory, the Axiom of Choice (AC) was formulated in 1904 by Ernst Zermelo. It is an addition to the older Zermelo-Fraenkel (ZF) set theory. We call it Zermelo-Fraenkel set theory with the Axiom of Choice and abbreviate it as ZFC. This paper starts with an introduction to the foundations of ZFC set theory, which includes the Zermelo-Fraenkel axioms, partially ordered sets (posets), the Cartesian product, the Axiom of Choice, and their related proofs. It then introduces several equivalent forms of the Axiom of Choice and proves that they are all equivalent. In the end, equivalents to the Axiom of Choice are used to prove a few fundamental theorems in set theory, linear analysis, and abstract algebra. This paper is concluded by a brief review of the work in it, followed by a few points of interest for further study in mathematics and/or set theory. ACKNOWLEDGMENTS: Between the two department requirements to complete a master's degree in mathematics, the comprehensive exams and a thesis, I really wanted to experience doing research and writing a serious academic paper. -

Frege and the Logic of Sense and Reference

FREGE AND THE LOGIC OF SENSE AND REFERENCE. Kevin C. Klement. Routledge, New York & London. Published in 2002 by Routledge, 29 West 35th Street, New York, NY 10001. Published in Great Britain by Routledge, 11 New Fetter Lane, London EC4P 4EE. Routledge is an imprint of the Taylor & Francis Group. Printed in the United States of America on acid-free paper. Copyright © 2002 by Kevin C. Klement. All rights reserved. No part of this book may be reprinted or reproduced or utilized in any form or by any electronic, mechanical or other means, now known or hereafter invented, including photocopying and recording, or in any information storage or retrieval system, without permission in writing from the publisher. 10 9 8 7 6 5 4 3 2 1 Library of Congress Cataloging-in-Publication Data: Klement, Kevin C., 1974– Frege and the logic of sense and reference / by Kevin Klement. p. cm — (Studies in philosophy) Includes bibliographical references and index. ISBN 0-415-93790-6 1. Frege, Gottlob, 1848–1925. 2. Sense (Philosophy) 3. Reference (Philosophy) I. Title II. Studies in philosophy (New York, N. Y.) B3245.F24 K54 2001 12'.68'092—dc21 2001048169 Contents: Preface; Abbreviations; 1. The Need for a Logical Calculus for the Theory of Sinn and Bedeutung (Introduction; Frege’s Project: Logicism and the Notion of Begriffsschrift; The Theory of Sinn and Bedeutung; The Limitations of the Begriffsschrift; Filling the Gap); 2. The Logic of the Grundgesetze (Logical Language and the Content of Logic; Functionality and Predication; Quantifiers and Gothic Letters; Roman Letters: An Alternative Notation for Generality; Value-Ranges and Extensions of Concepts; The Syntactic Rules of the Begriffsschrift; The Axiomatization of Frege’s System; Responses to the Paradox); 3.