A Robust Void-Finding Algorithm Using Computational Geometry and Parallelization Techniques

Recommended publications

Demolition Hubble's Law, Big Bang the Basis of "Modern" and Ecclesiastical Cosmology

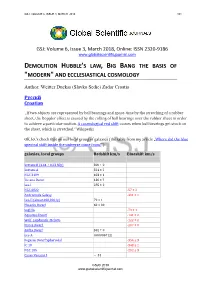

GSJ: Volume 6, Issue 3, March 2018, Online: ISSN 2320-9186, www.globalscientificjournal.com

DEMOLITION HUBBLE'S LAW, BIG BANG THE BASIS OF "MODERN" AND ECCLESIASTICAL COSMOLOGY

Author: Weitter Duckss (Slavko Sedic), Zadar, Croatia

"If two objects are represented by ball bearings and space-time by the stretching of a rubber sheet, the Doppler effect is caused by the rolling of ball bearings over the rubber sheet in order to achieve a particular motion. A cosmological red shift occurs when ball bearings get stuck on the sheet, which is stretched." (Wikipedia)

OK, let's check that against our Local Group of galaxies (table from my article "Where did the blue spectral shift inside the universe come from?"):

| Galaxy (local group member) | Redshift (km/s) | Blueshift (km/s) |
|---|---|---|
| Sextans B (4.44 ± 0.23 Mly) | 300 ± 0 | |
| Sextans A | 324 ± 2 | |
| NGC 3109 | 403 ± 1 | |
| Tucana Dwarf | 130 ± ? | |
| Leo I | 285 ± 2 | |
| NGC 6822 | | -57 ± 2 |
| Andromeda Galaxy | | -301 ± 1 |
| Leo II (about 690,000 ly) | 79 ± 1 | |
| Phoenix Dwarf | 60 ± 30 | |
| SagDIG | | -79 ± 1 |
| Aquarius Dwarf | | -141 ± 2 |
| Wolf–Lundmark–Melotte | | -122 ± 2 |
| Pisces Dwarf | | -287 ± 0 |
| Antlia Dwarf | 362 ± 0 | |
| Leo A | 0.000067 (z) | |
| Pegasus Dwarf Spheroidal | | -354 ± 3 |
| IC 10 | | -348 ± 1 |
| NGC 185 | | -202 ± 3 |
| Canes Venatici I | ~31 | |
| Andromeda III | | -351 ± 9 |
| Andromeda II | | -188 ± 3 |
| Triangulum Galaxy | | -179 ± 3 |
| Messier 110 | | -241 ± 3 |
| NGC 147 (2.53 ± 0.11 Mly) | | -193 ± 3 |
| Small Magellanic Cloud | 0.000527 (z) | |
| Large Magellanic Cloud | - | - |
| M32 | | -200 ± 6 |
| NGC 205 | | -241 ± 3 |
| IC 1613 | | -234 ± 1 |
| Carina Dwarf | 230 ± 60 | |
| Sextans Dwarf | 224 ± 2 | |
| Ursa Minor Dwarf (200 ± 30 kly) | | -247 ± 1 |
| Draco Dwarf | | -292 ± 21 |
| Cassiopeia Dwarf | | -307 ± 2 |
| Ursa Major II Dwarf | | -116 |
| Leo IV | 130 | |
| Leo V (585 kly) | 173 | |
| Leo T | | -60 |
| Bootes II | | -120 |
| Pegasus Dwarf | | -183 ± 0 |
| Sculptor Dwarf | 110 ± 1 | |

Etc.
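Most entries in the table are radial velocities in km/s, but Leo A (0.000067) and the Small Magellanic Cloud (0.000527) are given as redshifts z. A minimal sketch, assuming the low-redshift approximation v ≈ cz (valid only for |z| ≪ 1, which holds for every Local Group entry), converts between the two so the entries can be compared; the function names are hypothetical. Negative velocities correspond to blueshifts.

```python
# Speed of light in km/s (exact by SI definition).
C_KM_S = 299_792.458


def redshift_to_velocity(z: float) -> float:
    """Radial velocity in km/s from redshift z, using the low-z approximation v = c*z.

    Only valid for |z| << 1; negative values correspond to blueshifts.
    """
    return C_KM_S * z


def velocity_to_redshift(v_km_s: float) -> float:
    """Inverse of redshift_to_velocity under the same low-z approximation."""
    return v_km_s / C_KM_S


if __name__ == "__main__":
    # The two table entries that are quoted as z rather than km/s.
    for name, z in [("Leo A", 0.000067), ("Small Magellanic Cloud", 0.000527)]:
        print(f"{name}: z = {z} -> v ~ {redshift_to_velocity(z):.1f} km/s")
```

Under this approximation the two entries correspond to roughly 20 km/s and 158 km/s, which puts them on the same scale as the other velocities listed.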

Zerohack Zer0pwn Youranonnews Yevgeniy Anikin Yes Men

Zerohack Zer0Pwn YourAnonNews Yevgeniy Anikin Yes Men YamaTough Xtreme x-Leader xenu xen0nymous www.oem.com.mx www.nytimes.com/pages/world/asia/index.html www.informador.com.mx www.futuregov.asia www.cronica.com.mx www.asiapacificsecuritymagazine.com Worm Wolfy Withdrawal* WillyFoReal Wikileaks IRC 88.80.16.13/9999 IRC Channel WikiLeaks WiiSpellWhy whitekidney Wells Fargo weed WallRoad w0rmware Vulnerability Vladislav Khorokhorin Visa Inc. Virus Virgin Islands "Viewpointe Archive Services, LLC" Versability Verizon Venezuela Vegas Vatican City USB US Trust US Bankcorp Uruguay Uran0n unusedcrayon United Kingdom UnicormCr3w unfittoprint unelected.org UndisclosedAnon Ukraine UGNazi ua_musti_1905 U.S. Bankcorp TYLER Turkey trosec113 Trojan Horse Trojan Trivette TriCk Tribalzer0 Transnistria transaction Traitor traffic court Tradecraft Trade Secrets "Total System Services, Inc." Topiary Top Secret Tom Stracener TibitXimer Thumb Drive Thomson Reuters TheWikiBoat thepeoplescause the_infecti0n The Unknowns The UnderTaker The Syrian electronic army The Jokerhack Thailand ThaCosmo th3j35t3r testeux1 TEST Telecomix TehWongZ Teddy Bigglesworth TeaMp0isoN TeamHav0k Team Ghost Shell Team Digi7al tdl4 taxes TARP tango down Tampa Tammy Shapiro Taiwan Tabu T0x1c t0wN T.A.R.P. Syrian Electronic Army syndiv Symantec Corporation Switzerland Swingers Club SWIFT Sweden Swan SwaggSec Swagg Security "SunGard Data Systems, Inc." Stuxnet Stringer Streamroller Stole* Sterlok SteelAnne st0rm SQLi Spyware Spying Spydevilz Spy Camera Sposed Spook Spoofing Splendide

Galaxy and Mass Assembly (GAMA): the Bright Void Galaxy Population

MNRAS 000, 1–22 (2015), Preprint 10 March 2021
Galaxy And Mass Assembly (GAMA): The Bright Void Galaxy Population in the Optical and Mid-IR
S. J. Penny,1,2,3⋆ M. J. I. Brown,1,2 K. A. Pimbblet,4,1,2 M. E. Cluver,5 D. J. Croton,6 M. S. Owers,7,8 R. Lange,9 M. Alpaslan,10 I. Baldry,11 J. Bland-Hawthorn,12 S. Brough,7 S. P. Driver,9,13 B. W. Holwerda,14 A. M. Hopkins,7 T. H. Jarrett,15 D. Heath Jones,8 L. S. Kelvin,16 M. A. Lara-López,17 J. Liske,18 A. R. López-Sánchez,7,8 J. Loveday,19 M. Meyer,9 P. Norberg,20 A. S. G. Robotham,9 and M. Rodrigues21
1 School of Physics, Monash University, Clayton, Victoria 3800, Australia; 2 Monash Centre for Astrophysics, Monash University, Clayton, Victoria 3800, Australia; 3 Institute of Cosmology and Gravitation, University of Portsmouth, Dennis Sciama Building, Burnaby Road, Portsmouth, PO1 3FX, UK; 4 Department of Physics and Mathematics, University of Hull, Cottingham Road, Kingston-upon-Hull HU6 7RX, UK; 5 University of the Western Cape, Robert Sobukwe Road, Bellville, 7535, South Africa; 6 Centre for Astrophysics and Supercomputing, Swinburne University of Technology, Hawthorn, Victoria 3122, Australia; 7 Australian Astronomical Observatory, PO Box 915, North Ryde, NSW 1670, Australia; 8 Department of Physics and Astronomy, Macquarie University, NSW 2109, Australia; 9 ICRAR, The University of Western Australia, 35 Stirling Highway, Crawley, WA 6009, Australia; 10 NASA Ames Research Centre, N232, Moffett Field, Mountain View, CA 94035, United States; 11 Astrophysics Research

The W. M. Keck Observatory Scientific Strategic Plan

The W. M. Keck Observatory Scientific Strategic Plan
A. Kinney (Keck Chief Scientist), S. Kulkarni (COO Director, Caltech), C. Max (UCO Director, UCSC), H. Lewis (Keck Observatory Director), J. Cohen (SSC Co-Chair, Caltech), C. L. Martin (SSC Co-Chair, UCSB), C. Beichman (Exo-Planet TG, NExScI/NASA), D. R. Ciardi (TMT TG, NExScI/NASA), E. Kirby (Subaru TG, Caltech), J. Rhodes (WFIRST/Euclid TG, JPL/NASA), A. Shapley (JWST TG, UCLA), C. Steidel (TMT TG, Caltech), S. Wright (AO TG, UCSD), R. Campbell (Keck Observatory, Observing Support and AO Operations Lead)

1. Overview and Summary of Recommendations
The W. M. Keck Observatory (Keck Observatory), with its twin 10-m telescopes, has had a glorious history of transformative discoveries, instrumental advances, and education for young scientists since the start of science operations in 1993. This document presents our strategic plan and vision for the next five (5) years to ensure the continuation of this great tradition during a challenging period of fiscal constraints, the imminent launch of powerful new space telescopes, and the rise of Time Domain Astronomy (TDA). Our overarching goal is to maximize the scientific impact of the twin Keck telescopes and to continue on this great trajectory of discoveries. In pursuit of this goal, we must continue our development of new capabilities, as well as new modes of operation and observing. We must maintain our existing instruments, telescopes, and infrastructure to ensure the most efficient possible use of precious telescope time, all within the larger context of the Keck user community and the enormous scientific opportunities afforded by upcoming space telescopes and large survey programs.

The 2dF Galaxy Redshift Survey: Wiener Reconstruction of the Cosmic Web

Mon. Not. R. Astron. Soc. 000, 000–000 (0000), printed 9 November 2018
The 2dF Galaxy Redshift Survey: Wiener Reconstruction of the Cosmic Web
Pirin Erdoğdu1,2, Ofer Lahav1, Saleem Zaroubi3, George Efstathiou1, Steve Moody, John A. Peacock12, Matthew Colless17, Ivan K. Baldry9, Carlton M. Baugh16, Joss Bland-Hawthorn7, Terry Bridges7, Russell Cannon7, Shaun Cole16, Chris Collins4, Warrick Couch5, Gavin Dalton6,15, Roberto De Propris17, Simon P. Driver17, Richard S. Ellis8, Carlos S. Frenk16, Karl Glazebrook9, Carole Jackson17, Ian Lewis6, Stuart Lumsden10, Steve Maddox11, Darren Madgwick13, Peder Norberg14, Bruce A. Peterson17, Will Sutherland12, Keith Taylor8 (The 2dFGRS Team)
1 Institute of Astronomy, Madingley Road, Cambridge CB3 0HA, UK; 2 Department of Physics, Middle East Technical University, 06531, Ankara, Turkey; 3 Max Planck Institut für Astrophysik, Karl-Schwarzschild-Straße 1, 85741 Garching, Germany; 4 Astrophysics Research Institute, Liverpool John Moores University, Twelve Quays House, Birkenhead, L14 1LD, UK; 5 Department of Astrophysics, University of New South Wales, Sydney, NSW 2052, Australia; 6 Department of Physics, University of Oxford, Keble Road, Oxford OX1 3RH, UK; 7 Anglo-Australian Observatory, P.O. Box 296, Epping, NSW 2111, Australia; 8 Department of Astronomy, California Institute of Technology, Pasadena, CA 91025, USA; 9 Department of Physics & Astronomy, Johns Hopkins University, Baltimore, MD 21118-2686, USA; 10 Department of Physics, University of Leeds, Woodhouse Lane, Leeds, LS2 9JT, UK; 11 School

Cover Illustration by J. E. Mullat: The Big Bang and the Big Crunch

Cover Illustration by J. E. Mullat
THE BIG BANG AND THE BIG CRUNCH
From Public Domain: designed by Luke Mastin
OBSERVATIONS THAT SEEM TO CONTRADICT THE BIG BANG MODEL WHILE AT THE SAME TIME SUPPORT AN ALTERNATIVE COSMOLOGY
Forrest W. Noble, Timothy M. Cooper, The Pantheory Research Organization, Cerritos, California 90703 USA
HUBBLE-INDEPENDENT PROCEDURE CALCULATING DISTANCES TO COSMOLOGICAL OBJECTS
Joseph E. Mullat
Project and Technical Editor: J. E. Mullat, Copenhagen 2019
ISBN-13 978-8740-40-411-1
Private Publishing Platform, Byvej 269, 2650 Hvidovre, Denmark

The Postulate
COSMOLOGY THAT CONTRADICTS THE BIG BANG THEORY
The Standard and The Alternative Cosmological Models, Distances Calculation to Galaxies without Hubble Constant

For the alternative cosmological models discussed in the book, distances to galaxies are calculated without using the Hubble constant. This process is mentioned in the second narrative and described in detail in the third. According to the third narrative, when the energy density of space in the universe decreases and the universe expands, new space is created by a gravitational transition from dark energy. Although the Universe develops on the basis of this postulate about the appearance of new space, it is assumed that matter arises from such a gravitational transition into both new dark space and visible/baryonic matter. It is somewhat unimportant how we describe dark energy, whether we call it ether or gravitons, or, vice versa, turn ether into dark energy. It should be clear to everyone that this renaming does not change the essence of this gravitational transition.

A Robust Public Catalogue of Voids and Superclusters in the SDSS Data Release 7 Galaxy Surveys

MNRAS (2014) doi:10.1093/mnras/stu349
A robust public catalogue of voids and superclusters in the SDSS Data Release 7 galaxy surveys
Seshadri Nadathur1,2 and Shaun Hotchkiss2,3
1 Fakultät für Physik, Universität Bielefeld, Postfach 100131, D-33501 Bielefeld, Germany; 2 Department of Physics, University of Helsinki and Helsinki Institute of Physics, PO Box 64, FIN-00014, University of Helsinki, Finland; 3 Department of Physics and Astronomy, University of Sussex, Brighton BN1 9QH, UK
Accepted 2014 February 20. Received 2014 February 20; in original form 2013 October 15

ABSTRACT
The study of the interesting cosmological properties of voids in the Universe depends on the efficient and robust identification of such voids in galaxy redshift surveys. Recently, Sutter et al. have published a public catalogue of voids in the Sloan Digital Sky Survey Data Release 7 main galaxy and luminous red galaxy samples, using the void-finding algorithm ZOBOV, which is based on the watershed transform. We examine the properties of this catalogue and show that it suffers from several problems and inconsistencies, including the identification of some extremely overdense regions as voids. As a result, cosmological results obtained using this catalogue need to be reconsidered. We provide instead an alternative, self-consistent, public catalogue of voids in the same galaxy data, obtained from using an improved version of the same watershed transform algorithm. We provide a more robust method of dealing with survey boundaries and masks, as well as with a radially varying selection function, which means that our method can be applied to any other survey. We discuss some basic properties of the voids thus discovered, and describe how further information may be obtained from the catalogue.
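The watershed idea behind ZOBOV can be illustrated with a minimal sketch of its zone-finding step: given a density for each particle and a list of its neighbours (in ZOBOV these come from the Voronoi tessellation; here they are simply supplied as inputs), each particle is linked to its lowest-density neighbour and followed downhill until a local minimum is reached, and all particles draining to the same minimum form one zone. This is an illustration under stated assumptions only: it does not merge zones into voids, handle survey boundaries, masks, or selection functions, and the function name `watershed_zones` is hypothetical.

```python
from typing import Dict, List


def watershed_zones(density: Dict[int, float],
                    neighbours: Dict[int, List[int]]) -> Dict[int, int]:
    """Map each particle to the local density minimum it drains to (its zone)."""
    zone: Dict[int, int] = {}
    for start in density:
        path: List[int] = []
        node = start
        while node not in zone:
            path.append(node)
            # The particle itself is listed first, so on ties it stays put and the
            # walk only ever moves to strictly lower density (so it cannot cycle).
            nxt = min([node] + neighbours[node], key=lambda j: density[j])
            if nxt == node:
                zone[node] = node  # local minimum: it seeds its own zone
                break
            node = nxt
        # Every particle visited on the way down joins the zone that was reached.
        for p in path:
            zone[p] = zone[node]
    return zone


if __name__ == "__main__":
    # Six particles on a chain; neighbours are i - 1 and i + 1.
    dens = {0: 3.0, 1: 1.0, 2: 2.0, 3: 4.0, 4: 0.5, 5: 5.0}
    nbrs = {i: [j for j in (i - 1, i + 1) if j in dens] for i in dens}
    print(sorted(watershed_zones(dens, nbrs).items()))
    # [(0, 1), (1, 1), (2, 1), (3, 4), (4, 4), (5, 4)]
```

Caching each particle's zone as soon as it is known means every particle is walked at most once, so the pass is linear in the number of neighbour links.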

Chronology of the Universe - Wikipedia

7/1/2018 Chronology of the universe - Wikipedia

Chronology of the universe

The chronology of the universe describes the history and future of the universe according to Big Bang cosmology. The earliest stages of the universe's existence are estimated as taking place 13.8 billion years ago, with an uncertainty of around 21 million years at the 68% confidence level.[1]

[Figure: Diagram of evolution of the (observable part) of the universe from the Big Bang (left) to the present]

[Figure: Nature timeline]

Contents: Outline; A more detailed summary; Very early universe; Planck epoch; Grand unification epoch; Electroweak epoch; Inflationary epoch and the metric expansion of space; Baryogenesis; Supersymmetry breaking (speculative); Electroweak symmetry breaking; Early universe; The quark epoch; Hadron epoch; Neutrino decoupling and cosmic neutrino background; Possible formation of primordial black holes; Lepton epoch; Photon epoch; Nucleosynthesis of light elements; Matter domination; Recombination, photon decoupling, and the cosmic microwave background (CMB); The Dark Ages and large-scale structure emergence; Dark Ages; Habitable epoch

https://en.wikipedia.org/wiki/Chronology_of_the_universe

The Full Appendices with All References

Breakthrough Listen Exotica Catalog References

APPENDIX A. THE PROTOTYPE SAMPLE

A.1. Minor bodies
We classify Solar System minor bodies according to both orbital family and composition, with a small number of additional subtypes. Minor bodies of specific compositions might be selected by ETIs for mining (cf. Papagiannis 1978). From a SETI perspective, orbital families might be targeted by ETI probes to provide a unique vantage point over bodies like the Earth, or because they are dynamically stable for long periods of time and could accumulate a large number of artifacts (e.g., Benford 2019). In some cases there is a large overlap between spectral and orbital groups (as in DeMeo & Carry 2014), as with the E-belt and E-type asteroids, for which we use the same Prototype. For asteroids, our spectral-type system is largely taken from Tholen (1984) (see also Tedesco et al. 1989). We selected the types Tholen (1984) considered most significant, adding those unique to one or a few members. Some intermediate classes that blend into larger "complexes" in the more recent Bus & Binzel (2002) taxonomy were omitted. In choosing the Prototypes, we were guided by the classifications of Tholen (1984), Tedesco et al. (1989), and Bus & Binzel (2002). The comet orbital classifications were informed by Levison (1996). "Distant minor bodies", adapting the "distant objects" term used by the Minor Planet Center,1 refer to outer Solar System bodies beyond the Jupiter Trojans that are not comets. The spectral type system is that of Barucci et al. (2005) and Fulchignoni et al. (2008), with the latter guiding our Prototype selection.

The Dark Energy Survey Collaboration, 2015) Using DES Data as Well as Combined Analyses Focusing on Smaller Scales (Park et al., 2015) Have Been Presented Elsewhere

WARNING. Access to the contents of this doctoral thesis is conditional on acceptance of the terms of use set by the following Creative Commons license: https://creativecommons.org/licenses/?lang=en

Dark Energy properties from the combination of large-scale structure and weak gravitational lensing in the Dark Energy Survey
Author: Carles Sánchez Alonso
Departament de Física, Universitat Autònoma de Barcelona
A thesis submitted for the degree of Philosophiae Doctor (PhD)
Day of defense: 28 September 2017
Director & Tutor: Dr. Ramon Miquel Pascual, IFAE & ICREA, Edifici Cn, UAB, 08193 Bellaterra (Barcelona), Spain

CONTENTS
Introduction
I Preliminaries
1 Cosmological framework
1.1 The smooth universe
1.1.1 The field equations
1.1.2 The FLRW metric
1.1.3 The Friedmann equations
1.2 Fundamental observations
1.2.1 The expansion of the universe: Hubble's law
1.2.2 The Cosmic Microwave Background
1.2.3 The abundance of primordial elements
1.3 Distances in the universe
1.3.1 Comoving distance
1.3.2 Angular diameter distance
1.3.3 Luminosity distance
1.4 The accelerating universe
1.5 The Large-Scale Structure of the Universe
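The three distance measures in section 1.3 are closely related. In a spatially flat FLRW model, the comoving distance is D_C = (c/H0) ∫ dz'/E(z') from 0 to z, with E(z) = sqrt(Ωm (1+z)^3 + ΩΛ), and the other two follow as D_A = D_C/(1+z) and D_L = (1+z) D_C. A minimal numerical sketch, assuming flat ΛCDM with illustrative values H0 = 70 km/s/Mpc and Ωm = 0.3 (not taken from the thesis) and neglecting radiation, evaluates the integral with Simpson's rule; the function names are hypothetical.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s


def comoving_distance(z, H0=70.0, omega_m=0.3, n=1000):
    """Line-of-sight comoving distance in Mpc for flat LCDM (radiation neglected).

    D_C = (c / H0) * integral_0^z dz' / E(z'),  E(z) = sqrt(Om (1+z)^3 + OL),
    evaluated with composite Simpson's rule on n (even) intervals.
    """
    omega_l = 1.0 - omega_m  # flatness: Omega_Lambda = 1 - Omega_m

    def inv_e(zp):
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    if n % 2:
        n += 1  # Simpson's rule needs an even number of intervals
    h = z / n
    total = inv_e(0.0) + inv_e(z)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * inv_e(k * h)
    return (C_KM_S / H0) * total * h / 3.0


def angular_diameter_distance(z, **kwargs):
    """D_A = D_C / (1 + z) in a flat universe."""
    return comoving_distance(z, **kwargs) / (1.0 + z)


def luminosity_distance(z, **kwargs):
    """D_L = (1 + z) * D_C in a flat universe."""
    return comoving_distance(z, **kwargs) * (1.0 + z)


if __name__ == "__main__":
    for z in (0.1, 0.5, 1.0):
        print(f"z = {z}: D_C = {comoving_distance(z):.0f} Mpc, "
              f"D_A = {angular_diameter_distance(z):.0f} Mpc, "
              f"D_L = {luminosity_distance(z):.0f} Mpc")
```

A useful check is the matter-only (Einstein–de Sitter) case, Ωm = 1, where the integral has the closed form D_C = (2c/H0)(1 - 1/sqrt(1+z)); at z = 3 this equals exactly c/H0.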

Defining Cosmological Voids in the Millennium Simulation Using The

Defining Cosmological Voids in the Millennium Simulation Using the Parameter-free ZOBOV Algorithm
Frances Mei Hardin
2009 NSF/REU Program, Physics Department, University of Notre Dame
Advisor: Dr. Peter Garnavich, Professor of Physics, University of Notre Dame

Abstract
The Zones Bordering On Voidness (ZOBOV) parameter-free void-finding algorithm is applied to data from the 2005 N-body Millennium Simulation. Voids can be used as a first-order distance estimator for cosmic expansion because they are affected only by dark matter and relatively small quantities of ordinary (baryonic) matter. ZOBOV defines voids as local density minima and their surrounding depressions, with densities estimated from a Voronoi tessellation of the particles. Rather than using parameters to select a set of voids automatically, ZOBOV's analysis of the Millennium data reports the probability that each void arises from Poisson fluctuations, because there is currently no conventional statistical significance level shared across void-finding algorithms in astrophysics. Designating a significance level makes the output void sample more specific, but the appropriate level depends on the goals of the experiment. The voids defined by ZOBOV in this paper use a default density threshold of 0.2: voids whose minimum density exceeds 0.2 times the mean density are excluded. Running ZOBOV found 58,715 voids in the full 9-million-particle data set, 6,451 voids in ~1 million particles, 1,884 voids in ~300,000 particles, and 483 voids in ~80,000 particles.

Introduction
The mass distribution of the Universe forms a "cosmic web" dominated by filaments that extend from galaxy clusters, sheets of filaments that form complex walls, and underdense voids that occupy most of the volume (Bond, Kofman, & Pogosyan, 1996).
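The Voronoi density estimate and the 0.2 density threshold can be illustrated in one dimension, where a particle's Voronoi cell is simply half the gap to each neighbour and its density is the inverse of that cell length. The following is a minimal sketch under that simplifying assumption (a periodic 1D box rather than the 3D tessellation ZOBOV actually computes): it returns local density minima whose density is below 0.2 times the mean, mirroring the default cut described above. The function names are hypothetical, and the sketch does not grow zones or compute Poisson probabilities.

```python
from typing import List, Sequence, Tuple


def voronoi_densities_1d(positions: Sequence[float], box: float) -> Tuple[List[float], List[int]]:
    """Density (1 / Voronoi cell length) of each point on a periodic line of length `box`.

    Returns the densities in the input order and the indices sorted by position.
    """
    n = len(positions)
    order = sorted(range(n), key=lambda i: positions[i])
    xs = [positions[i] for i in order]
    dens = [0.0] * n
    for k, i in enumerate(order):
        # Gaps to the previous and next point, wrapping around the periodic box.
        left = xs[k] - xs[k - 1] if k > 0 else xs[0] - xs[-1] + box
        right = xs[k + 1] - xs[k] if k < n - 1 else xs[0] + box - xs[-1]
        cell = 0.5 * (left + right)  # 1D Voronoi cell: half of each neighbouring gap
        dens[i] = 1.0 / cell
    return dens, order


def void_seeds(positions: Sequence[float], box: float,
               threshold: float = 0.2) -> List[Tuple[int, float]]:
    """Local density minima whose density is below `threshold` times the mean.

    Returns (particle index, density / mean density) pairs.
    """
    n = len(positions)
    dens, order = voronoi_densities_1d(positions, box)
    mean = n / box
    seeds = []
    for k, i in enumerate(order):
        prev_i = order[k - 1]  # k - 1 == -1 wraps to the last point
        next_i = order[(k + 1) % n]
        rel = dens[i] / mean
        if dens[i] < dens[prev_i] and dens[i] < dens[next_i] and rel < threshold:
            seeds.append((i, rel))
    return seeds


if __name__ == "__main__":
    # A dense clump of 19 particles plus one isolated particle in a box of length 100.
    pos = [0.5 * j for j in range(19)] + [55.0]
    for i, rel in void_seeds(pos, 100.0):
        print(f"particle {i}: density = {rel:.3f} x mean")  # particle 19: density = 0.110 x mean
```

The strict inequalities mean that plateaus of equal density, such as the evenly spaced clump, produce no seeds; the Voronoi cells always tile the box exactly, so the inverse densities sum to the box length.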

UCLA Electronic Theses and Dissertations

UCLA Electronic Theses and Dissertations
Title: Galaxies at the Epoch of Reionization
Permalink: https://escholarship.org/uc/item/39t4d9qg
Author: Mason, Charlotte
Publication Date: 2018
Peer reviewed | Thesis/dissertation

UNIVERSITY OF CALIFORNIA
Los Angeles
Galaxies at the Epoch of Cosmic Reionization
A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Astronomy by Charlotte Ann Mason, 2018
© Copyright by Charlotte Ann Mason 2018

ABSTRACT OF THE DISSERTATION
Galaxies at the Epoch of Cosmic Reionization
by Charlotte Ann Mason
Doctor of Philosophy in Astronomy
University of California, Los Angeles, 2018
Professor Tommaso L. Treu, Chair

The reionization of intergalactic hydrogen in the universe's first billion years was likely driven by the first stars and galaxies, so its history encodes information about their properties. But the timeline of reionization is not well measured, and it is still unclear whether galaxies alone can produce the required ionizing photons. In my thesis I have focused on two ways to use galaxies at our current observational frontiers to constrain reionization and high-redshift galaxy evolution. One tool is the UV luminosity function (LF), which traces the evolution of star-forming galaxies and their ionizing photons. Accurately measuring LFs and understanding their evolution are important for understanding the connections between galaxies and their dark matter halos. I developed a simple, but powerful, semi-analytic model for LF evolution assuming star formation is driven by dark matter accretion. This has proved remarkably consistent with observations, and implies that the majority of star formation at high redshifts occurs in low-mass galaxies.