Defining Cosmological Voids in the Millennium Simulation Using The

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Gravitational Waves from Resolvable Massive Black Hole Binary Systems

Mon. Not. R. Astron. Soc. 000, 000–000 (0000) Printed 22 October 2018 (MN LATEX style file v2.2) Gravitational waves from resolvable massive black hole binary systems and observations with Pulsar Timing Arrays A. Sesana1, A. Vecchio2 and M. Volonteri3 1Center for Gravitational Wave Physics, The Pennsylvania State University, University Park, PA 16802, USA 2School of Physics and Astronomy, University of Birmingham, Edgbaston, Birmingham, B15 2TT, UK 3Dept. of Astronomy, University of Michigan, Ann Arbor, MI 48109, USA Received – ABSTRACT Massive black holes are key components of the assembly and evolution of cosmic structures and a number of surveys are currently on-going or planned to probe the demographics of these objects and to gain insight into the relevant physical processes. Pulsar Timing Arrays (PTAs) currently provide the only means to observe gravitational radiation from massive black hole binary systems with masses ≳ 10^7 M⊙. The whole cosmic population produces a stochastic background that could be detectable with upcoming Pulsar Timing Arrays. Sources sufficiently close and/or massive generate gravitational radiation that significantly exceeds the level of the background and could be individually resolved. We consider a wide range of massive black hole binary assembly scenarios, we investigate the distribution of the main physical parameters of the sources, such as masses and redshift, and explore the consequences for Pulsar Timing Arrays observations. Depending on the specific massive black hole population model, we estimate that on average at least one resolvable source produces timing residuals in the range ∼5–50 ns. Pulsar Timing Arrays, and in particular the future Square Kilometre Array (SKA), can plausibly detect these unique systems, although the events are likely to be rare.

The IllustrisTNG Simulations: Public Data Release

Nelson et al. Computational Astrophysics and Cosmology (2019) 6:2 https://doi.org/10.1186/s40668-019-0028-x RESEARCH Open Access The IllustrisTNG simulations: public data release Dylan Nelson1*, Volker Springel1,6,7, Annalisa Pillepich2, Vicente Rodriguez-Gomez8, Paul Torrey9,5, Shy Genel4, Mark Vogelsberger5, Ruediger Pakmor1,6, Federico Marinacci10,5, Rainer Weinberger3, Luke Kelley11, Mark Lovell12,13, Benedikt Diemer3 and Lars Hernquist3 Abstract We present the full public release of all data from the TNG100 and TNG300 simulations of the IllustrisTNG project. IllustrisTNG is a suite of large volume, cosmological, gravo-magnetohydrodynamical simulations run with the moving-mesh code AREPO. TNG includes a comprehensive model for galaxy formation physics, and each TNG simulation self-consistently solves for the coupled evolution of dark matter, cosmic gas, luminous stars, and supermassive black holes from early time to the present day, z = 0. Each of the flagship runs (TNG50, TNG100, and TNG300) are accompanied by halo/subhalo catalogs, merger trees, lower-resolution and dark-matter only counterparts, all available with 100 snapshots. We discuss scientific and numerical cautions and caveats relevant when using TNG. The data volume now directly accessible online is ∼750 TB, including 1200 full volume snapshots and ∼80,000 high time-resolution subbox snapshots. This will increase to ∼1.1 PB with the future release of TNG50. Data access and analysis examples are available in IDL, Python, and Matlab. We describe improvements and new functionality in the web-based API, including on-demand visualization and analysis of galaxies and halos, exploratory plotting of scaling relations and other relationships between galactic and halo properties, and a new JupyterLab interface.

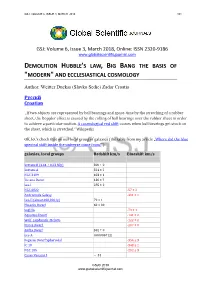

And Ecclesiastical Cosmology

GSJ: Volume 6, Issue 3, March 2018, Online: ISSN 2320-9186 www.globalscientificjournal.com DEMOLITION HUBBLE'S LAW, BIG BANG THE BASIS OF "MODERN" AND ECCLESIASTICAL COSMOLOGY Author: Weitter Duckss (Slavko Sedic) Zadar Croatia "If two objects are represented by ball bearings and space-time by the stretching of a rubber sheet, the Doppler effect is caused by the rolling of ball bearings over the rubber sheet in order to achieve a particular motion. A cosmological red shift occurs when ball bearings get stuck on the sheet, which is stretched." Wikipedia OK, let's check that on our local group of galaxies (the table from my article "Where did the blue spectral shift inside the universe come from?"). Table columns: galaxies, local groups; Redshift km/s; Blueshift km/s. Sextans B (4.44 ± 0.23 Mly): 300 ± 0; Sextans A: 324 ± 2; NGC 3109: 403 ± 1; Tucana Dwarf: 130 ± ?; Leo I: 285 ± 2; NGC 6822: -57 ± 2; Andromeda Galaxy: -301 ± 1; Leo II (about 690,000 ly): 79 ± 1; Phoenix Dwarf: 60 ± 30; SagDIG: -79 ± 1; Aquarius Dwarf: -141 ± 2; Wolf–Lundmark–Melotte: -122 ± 2; Pisces Dwarf: -287 ± 0; Antlia Dwarf: 362 ± 0; Leo A: 0.000067 (z); Pegasus Dwarf Spheroidal: -354 ± 3; IC 10: -348 ± 1; NGC 185: -202 ± 3; Canes Venatici I: ~ 31; Andromeda III: -351 ± 9; Andromeda II: -188 ± 3; Triangulum Galaxy: -179 ± 3; Messier 110: -241 ± 3; NGC 147 (2.53 ± 0.11 Mly): -193 ± 3; Small Magellanic Cloud: 0.000527; Large Magellanic Cloud: - -; M32: -200 ± 6; NGC 205: -241 ± 3; IC 1613: -234 ± 1; Carina Dwarf: 230 ± 60; Sextans Dwarf: 224 ± 2; Ursa Minor Dwarf (200 ± 30 kly): -247 ± 1; Draco Dwarf: -292 ± 21; Cassiopeia Dwarf: -307 ± 2; Ursa Major II Dwarf: -116; Leo IV: 130; Leo V (585 kly): 173; Leo T: -60; Bootes II: -120; Pegasus Dwarf: -183 ± 0; Sculptor Dwarf: 110 ± 1; etc.

Machine Learning and Cosmological Simulations I: Semi-Analytical Models

MNRAS 000, 1–18 (2015) Preprint 20 August 2018 Compiled using MNRAS LATEX style file v3.0 Machine Learning and Cosmological Simulations I: Semi-Analytical Models Harshil M. Kamdar1,2⋆, Matthew J. Turk2,4 and Robert J. Brunner2,3,4,5 1Department of Physics, University of Illinois, Urbana, IL 61801 USA 2Department of Astronomy, University of Illinois, Urbana, IL 61801 USA 3Department of Statistics, University of Illinois, Champaign, IL 61820 USA 4National Center for Supercomputing Applications, Urbana, IL 61801 USA 5Beckman Institute For Advanced Science and Technology, University of Illinois, Urbana, IL, 61801 USA Accepted 2015 October 1. Received 2015 September 30; in original form 2015 July 2 ABSTRACT We present a new exploratory framework to model galaxy formation and evolution in a hierarchical universe by using machine learning (ML). Our motivations are two-fold: (1) presenting a new, promising technique to study galaxy formation, and (2) quantitatively analyzing the extent of the influence of dark matter halo properties on galaxies in the backdrop of semi-analytical models (SAMs). We use the influential Millennium Simulation and the corresponding Munich SAM to train and test various sophisticated machine learning algorithms (k-Nearest Neighbors, decision trees, random forests and extremely randomized trees). By using only essential dark matter halo physical properties for haloes of M > 10^12 M⊙ and a partial merger tree, our model predicts the hot gas mass, cold gas mass, bulge mass, total stellar mass, black hole mass and cooling radius at z = 0 for each central galaxy in a dark matter halo for the Millennium run. Our results provide a unique and powerful phenomenological framework to explore the galaxy-halo connection that is built upon SAMs and demonstrably place ML as a promising and a computationally efficient tool to study small-scale structure formation.

Zerohack Zer0pwn Youranonnews Yevgeniy Anikin Yes Men

Zerohack Zer0Pwn YourAnonNews Yevgeniy Anikin Yes Men YamaTough Xtreme x-Leader xenu xen0nymous www.oem.com.mx www.nytimes.com/pages/world/asia/index.html www.informador.com.mx www.futuregov.asia www.cronica.com.mx www.asiapacificsecuritymagazine.com Worm Wolfy Withdrawal* WillyFoReal Wikileaks IRC 88.80.16.13/9999 IRC Channel WikiLeaks WiiSpellWhy whitekidney Wells Fargo weed WallRoad w0rmware Vulnerability Vladislav Khorokhorin Visa Inc. Virus Virgin Islands "Viewpointe Archive Services, LLC" Versability Verizon Venezuela Vegas Vatican City USB US Trust US Bankcorp Uruguay Uran0n unusedcrayon United Kingdom UnicormCr3w unfittoprint unelected.org UndisclosedAnon Ukraine UGNazi ua_musti_1905 U.S. Bankcorp TYLER Turkey trosec113 Trojan Horse Trojan Trivette TriCk Tribalzer0 Transnistria transaction Traitor traffic court Tradecraft Trade Secrets "Total System Services, Inc." Topiary Top Secret Tom Stracener TibitXimer Thumb Drive Thomson Reuters TheWikiBoat thepeoplescause the_infecti0n The Unknowns The UnderTaker The Syrian electronic army The Jokerhack Thailand ThaCosmo th3j35t3r testeux1 TEST Telecomix TehWongZ Teddy Bigglesworth TeaMp0isoN TeamHav0k Team Ghost Shell Team Digi7al tdl4 taxes TARP tango down Tampa Tammy Shapiro Taiwan Tabu T0x1c t0wN T.A.R.P. Syrian Electronic Army syndiv Symantec Corporation Switzerland Swingers Club SWIFT Sweden Swan SwaggSec Swagg Security "SunGard Data Systems, Inc." Stuxnet Stringer Streamroller Stole* Sterlok SteelAnne st0rm SQLi Spyware Spying Spydevilz Spy Camera Sposed Spook Spoofing Splendide -

VirtU - The Virtual Universe

VirtU - The Virtual Universe comprising the Theoretical Virtual Observatory & the Theory/Observations Interface A collaborative e-science proposal between Carlos Frenk University of Durham Ofer Lahav University College London Nicholas Walton Cambridge University Carlton Baugh University of Durham Greg Bryan University of Oxford Shaun Cole University of Durham Jean-Christophe Desplat Edinburgh Parallel Computing Centre Gavin Dalton University of Oxford George Efstathiou Cambridge University Nick Holliman University of Durham Adrian Jenkins University of Durham Andrew King University of Leicester Simon Morris University of Durham Jerry Ostriker Cambridge University John Peacock University of Edinburgh Frazer Pearce University of Nottingham Jim Pringle Cambridge University Chris Rudge University of Leicester Joe Silk University of Oxford Chris Simpson University of Durham Linda Smith University College London Iain Stewart University of Durham Tom Theuns University of Durham Peter Thomas University of Sussex Gavin Pringle Edinburgh Parallel Computing Centre Jie Xu University of Durham Sukyoung Yi University of Oxford Overseas collaborators: Joerg Colberg University of Pittsburgh Hugh Couchman McMaster University August Evrard University of Michigan Guinevere Kauffmann Max-Planck Institut für Astrophysik Volker Springel Max-Planck Institut für Astrophysik Rob Thacker McMaster University Simon White Max-Planck Institut für Astrophysik 1 Executive Summary 1. We propose to construct a Virtual Universe (VirtU) consisting of the "Theoretical Virtual

Galaxy and Mass Assembly (GAMA): the Bright Void Galaxy Population

MNRAS 000, 1–22 (2015) Preprint 10 March 2021 Compiled using MNRAS LATEX style file v3.0 Galaxy And Mass Assembly (GAMA): The Bright Void Galaxy Population in the Optical and Mid-IR S. J. Penny,1,2,3⋆ M. J. I. Brown,1,2 K. A. Pimbblet,4,1,2 M. E. Cluver,5 D. J. Croton,6 M. S. Owers,7,8 R. Lange,9 M. Alpaslan,10 I. Baldry,11 J. Bland-Hawthorn,12 S. Brough,7 S. P. Driver,9,13 B. W. Holwerda,14 A. M. Hopkins,7 T. H. Jarrett,15 D. Heath Jones,8 L. S. Kelvin16 M. A. Lara-López,17 J. Liske,18 A. R. López-Sánchez,7,8 J. Loveday,19 M. Meyer,9 P. Norberg,20 A. S. G. Robotham,9 and M. Rodrigues21 1School of Physics, Monash University, Clayton, Victoria 3800, Australia 2Monash Centre for Astrophysics, Monash University, Clayton, Victoria 3800, Australia 3Institute of Cosmology and Gravitation, University of Portsmouth, Dennis Sciama Building, Burnaby Road, Portsmouth, PO1 3FX, UK 4 Department of Physics and Mathematics, University of Hull, Cottingham Road, Kingston-upon-Hull HU6 7RX, UK 5 University of the Western Cape, Robert Sobukwe Road, Bellville, 7535, South Africa 6 Centre for Astrophysics and Supercomputing, Swinburne University of Technology, Hawthorn, Victoria 3122, Australia 7 Australian Astronomical Observatory, PO Box 915, North Ryde, NSW 1670, Australia 8 Department of Physics and Astronomy, Macquarie University, NSW 2109, Australia 9 ICRAR, The University of Western Australia, 35 Stirling Highway, Crawley, WA 6009, Australia 10 NASA Ames Research Centre, N232, Moffett Field, Mountain View, CA 94035, United States 11 Astrophysics Research -

Mocking the Universe: the Jubilee Cosmological Simulations

Mocking the Universe: The Jubilee cosmological simulations. Deep galaxy surveys, large-scale structure and dark energy. Spanish participation in future projects, Valencia, 29-30 March 2012. JUBILEE: Juropa Hubble Volume Simulation Project. Participants: P.I: S. Gottlöber (AIP) I. I. Iliev (Sussex) II. G. Yepes (UAM) III. J.M. Diego (IFCA) IV. E. Martínez González (IFCA). Why do we need large particle simulations? Large Volume Galaxy Surveys: DES, KIDS, BOSS, JPAS, LSST, BigBOSS, Euclid. They will probe 10-100 Gpc^3 volumes. • Need to resolve halos hosting the faintest galaxies of these surveys to produce realistic mock catalogues. Higher z surveys imply smaller galaxies and smaller halos -> more mass resolution. • Fundamental tool to compare clustering properties of galaxies with theoretical predictions from cosmological models at few % level. Not possible only with linear theory. Must do the full non-linear evolution for scales 100+ Mpc (BAO, zero crossing). [Figure labels: Zero crossing of CF, 130 Mpc, Prada et al 2012; BAO in BOSS, 100 Mpc scale.] Galaxy Biases: Large mass resolution is needed if internal sub-structure of dm halos has to be properly resolved to map halos to galaxies, e.g. using the Halo Abundance Matching technique (e.g. Trujillo-Gómez et al 2011, Nuza et al 2012). We will need trillion+ particle simulations. Large Volume Galaxy Surveys, a typical example: BOSS (z=0.1..0.7). Box size to host BOSS survey: 3.5 h^-1 Gpc. BOSS completed down to galaxies with Vcir > 350 km/s -> Mvir ~5x10^12 Msun. To properly resolve the peak of the Vrot in a dark matter halo we need a minimum of 500-1000 particles.

Numerical Cosmology: Recreating the Universe in a Supercomputer The

March 2006 The Alexandria Lectures Numerical Cosmology: Recreating the Universe in a Supercomputer Simon White Max Planck Institute for Astrophysics The Three-fold Way to Astrophysical Truth OBSERVATION SIMULATION THEORY INGREDIENTS FOR SIMULATIONS ● The physical contents of the Universe Ordinary (baryonic) matter – protons, neutrons, electrons Radiation (photons, neutrinos...) Dark Matter Dark Energy ● The Laws of Physics General Relativity Electromagnetism Standard model of particle physics Thermodynamics ● Initial and boundary conditions Global cosmological context Creation of “initial” structure ● Astrophysical phenomenology (“subgrid” physics) Star formation and evolution The COBE satellite (1989 - 1993) ● Three instruments Far Infrared Absolute Spectroph. Differential Microwave Radiom. Diffuse InfraRed Background Exp Spectrum of the Cosmic Microwave Background Data from COBE/FIRAS a near-perfect black body! What do we learn from the COBE spectrum? ● The microwave background radiation looks like thermal radiation from a `Planckian black-body'. This determines its temperature T = 2.73K ● In the past the Universe was hot and almost without structure -- Void and without form -- At that time it was nearly in thermal equilibrium ● There has been no substantial heating of the Universe since a few months after the Big Bang itself. COBE's temperature map of the entire sky T = 2.728 K T = 0.1 K COBE's temperature map of the

The Millennium Simulation: Cosmic Evolution in a Supercomputer Simon White Max Planck Institute for Astrophysics the COBE Satellite (1989 - 1993)

The Millennium Simulation: cosmic evolution in a supercomputer Simon White Max Planck Institute for Astrophysics The COBE satellite (1989 - 1993) ● Two instruments made maps of the whole sky in microwaves and in infrared radiation ● One instrument took a precise spectrum of the sky in microwaves COBE's temperature map of the entire sky T = 2.728 K T = 0.1 K COBE's temperature map of the entire sky T = 2.728 K T = 0.0034 K COBE's temperature map of the entire sky T = 2.728 K T = 0.00002 K Structure in the COBE map ● One side of the sky is `hot', the other is `cold' the Earth's motion through the Cosmos V = 600 km/s Milky Way ● Radiation from hot gas and dust in our own Milky Way ● Structure in the Microwave Background itself Structure in the Microwave Background Where is the structure? In the cosmic “clouds”, 40 billion light years away What are we seeing? Weak gravito-sound waves in the clouds When do we see these clouds? When the Universe was 400,000 years old, and was 1,000 times smaller and 1,000 times hotter than today How big are the structures? At least a billion light-years across (in the COBE maps) When were they made? During inflation, perhaps 10^-30 sec. after the Big Bang What did they turn into? Everything we see in the present Universe The WMAP Satellite at Lagrange-Point L2 The WMAP of the whole CMB sky Bennett et al 2003 What can we learn from these structures? The pattern of the structures is influenced by several things: --the Geometry of the Universe finite or infinite eternal or doomed to end --the Content of the Universe:

The W. M. Keck Observatory Scientific Strategic Plan

The W. M. Keck Observatory Scientific Strategic Plan A.Kinney (Keck Chief Scientist), S.Kulkarni (COO Director, Caltech),C.Max (UCO Director, UCSC), H. Lewis, (Keck Observatory Director), J.Cohen (SSC Co-Chair, Caltech), C. L.Martin (SSC Co-Chair, UCSB), C. Beichman (Exo-Planet TG, NExScI/NASA), D.R.Ciardi (TMT TG, NExScI/NASA), E.Kirby (Subaru TG. Caltech), J.Rhodes (WFIRST/Euclid TG, JPL/NASA), A. Shapley (JWST TG, UCLA), C. Steidel (TMT TG, Caltech), S. Wright (AO TG, UCSD), R. Campbell (Keck Observatory, Observing Support and AO Operations Lead) Ethan Tweedie Photography 1. Overview and Summary of Recommendations The W. M. Keck Observatory (Keck Observatory), with its twin 10-m telescopes, has had a glorious history of transformative discoveries, instrumental advances, and education for young scientists since the start of science operations in 1993. This document presents our strategic plan and vision for the next five (5) years to ensure the continuation of this great tradition during a challenging period of fiscal constraints, the imminent launch of powerful new space telescopes, and the rise of Time Domain Astronomy (TDA). Our overarching goal is to maximize the scientific impact of the twin Keck telescopes, and to continue on this great trajectory of discoveries. In pursuit of this goal, we must continue our development of new capabilities, as well as new modes of operation and observing. We must maintain our existing instruments, telescopes, and infrastructure to ensure the most efficient possible use of precious telescope time, all within the larger context of the Keck user community and the enormous scientific opportunities afforded by upcoming space telescopes and large survey programs. -

Exploring the Millennium Run-Scalable Rendering of Large

Exploring the Millennium Run - Scalable Rendering of Large-Scale Cosmological Datasets Roland Fraedrich, Jens Schneider, and Rüdiger Westermann Fig. 1. Visualizations of the Millennium Simulation with more than 10 billion particles at different scales and a screen space error below one pixel. On a 1200x800 viewport the average frame rate is 11 fps. Abstract: In this paper we investigate scalability limitations in the visualization of large-scale particle-based cosmological simulations, and we present methods to reduce these limitations on current PC architectures. To minimize the amount of data to be streamed from disk to the graphics subsystem, we propose a visually continuous level-of-detail (LOD) particle representation based on a hierarchical quantization scheme for particle coordinates and rules for generating coarse particle distributions. Given the maximal world space error per level, our LOD selection technique guarantees a sub-pixel screen space error during rendering. A brick-based page-tree allows to further reduce the number of disk seek operations to be performed. Additional particle quantities like density, velocity dispersion, and radius are compressed at no visible loss using vector quantization of logarithmically encoded floating point values. By fine-grain view-frustum culling and presence acceleration in a geometry shader the required geometry throughput on the GPU can be significantly reduced. We validate the quality and scalability of our method by presenting visualizations of a particle-based cosmological dark-matter simulation exceeding 10 billion elements. Index Terms: Particle Visualization, Scalability, Cosmology. 1 INTRODUCTION Particle-based cosmological simulations play an eminent role in reproducing the large-scale structure of the Universe. By today, for the largest available cosmological simulations an in-