The Dark Energy Survey Collaboration, 2015) Using DES Data As Well As Combined Analyses Focusing on Smaller Scales (Park Et Al., 2015) Have Been Presented Elsewhere

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Dark Energy and Dark Matter As Inertial Effects Introduction

Dark Energy and Dark Matter as Inertial Effects. Serkan Zorba, Department of Physics and Astronomy, Whittier College, 13406 Philadelphia Street, Whittier, CA 90608 [email protected] arXiv:1210.3021 [physics.gen-ph] 20 Oct 2012

ABSTRACT A disk-shaped universe (encompassing the observable universe) rotating globally with an angular speed equal to the Hubble constant is postulated. It is shown that dark energy and dark matter are cosmic inertial effects resulting from such a cosmic rotation, corresponding to centrifugal (dark energy), and a combination of centrifugal and the Coriolis forces (dark matter), respectively. The physics and the cosmological and galactic parameters obtained from the model closely match those attributed to dark energy and dark matter in the standard Λ-CDM model. PACS: 95.36.+x, 95.35.+d, 98.80.-k, 04.20.Cv

Introduction The two most outstanding unsolved problems of modern cosmology today are the problems of dark energy and dark matter. Together these two problems imply that about a whopping 96% of the energy content of the universe is simply unaccounted for within the reigning paradigm of modern cosmology. The dark energy problem has been around only for about two decades, while the dark matter problem has gone unsolved for about 90 years. Various ideas have been put forward, including some fantastic ones such as the presence of ghostly fields and particles. Some ideas even suggest the breakdown of the standard Newton-Einstein gravity for the relevant scales. Although some progress has been made, particularly in the area of dark matter with the nonstandard gravity theories, the problems still stand unresolved.

Report from the Dark Energy Task Force (DETF)

Fermi National Accelerator Laboratory, Fermilab Particle Astrophysics Center, P.O. Box 500 - MS209, Batavia, Illinois 60510. June 6, 2006. Dr. Garth Illingworth, Chair, Astronomy and Astrophysics Advisory Committee. Dr. Mel Shochet, Chair, High Energy Physics Advisory Panel.

Dear Garth, Dear Mel, I am pleased to transmit to you the report of the Dark Energy Task Force. The report is a comprehensive study of the dark energy issue, perhaps the most compelling of all outstanding problems in physical science. In the Report, we outline the crucial need for a vigorous program to explore dark energy as fully as possible since it challenges our understanding of fundamental physical laws and the nature of the cosmos. We recommend that program elements include

1. Prompt critical evaluation of the benefits, costs, and risks of proposed long-term projects.
2. Commitment to a program combining observational techniques from one or more of these projects that will lead to a dramatic improvement in our understanding of dark energy. (A quantitative measure for that improvement is presented in the report.)
3. Immediately expanded support for long-term projects judged to be the most promising components of the long-term program.
4. Expanded support for ancillary measurements required for the long-term program and for projects that will improve our understanding and reduction of the dominant systematic measurement errors.
5. An immediate start for nearer term projects designed to advance our knowledge of dark energy and to develop the observational and analytical techniques that will be needed for the long-term program.

Sincerely yours, on behalf of the Dark Energy Task Force, Edward Kolb, Director, Particle Astrophysics Center, Fermi National Accelerator Laboratory; Professor of Astronomy and Astrophysics, The University of Chicago.

REPORT OF THE DARK ENERGY TASK FORCE Dark energy appears to be the dominant component of the physical Universe, yet there is no persuasive theoretical explanation for its existence or magnitude.

Fine-Tuning, Complexity, and Life in the Multiverse*

Fine-Tuning, Complexity, and Life in the Multiverse* Mario Livio, Dept. of Physics and Astronomy, University of Nevada, Las Vegas, NV 89154, USA. E-mail: [email protected] and Martin J. Rees, Institute of Astronomy, University of Cambridge, Cambridge CB3 0HA, UK. E-mail: [email protected] (*Chapter for the book Consolidation of Fine Tuning.)

Abstract The physical processes that determine the properties of our everyday world, and of the wider cosmos, are determined by some key numbers: the ‘constants’ of micro-physics and the parameters that describe the expanding universe in which we have emerged. We identify various steps in the emergence of stars, planets and life that are dependent on these fundamental numbers, and explore how these steps might have been changed, or completely prevented, if the numbers were different. We then outline some cosmological models where physical reality is vastly more extensive than the ‘universe’ that astronomers observe (perhaps even involving many ‘big bangs’), which could perhaps encompass domains governed by different physics. Although the concept of a multiverse is still speculative, we argue that attempts to determine whether it exists constitute a genuinely scientific endeavor. If we indeed inhabit a multiverse, then we may have to accept that there can be no explanation other than anthropic reasoning for some features of our world.

1 Introduction At their fundamental level, phenomena in our universe can be described by certain laws (the so-called “laws of nature”) and by the values of some three dozen parameters (e.g., [1]). Those parameters specify such physical quantities as the coupling constants of the weak and strong interactions in the Standard Model of particle physics, and the dark energy density, the baryon mass per photon, and the spatial curvature in cosmology.

Galaxy Redshifts: from Dozens to Millions

GALAXY REDSHIFTS: FROM DOZENS TO MILLIONS. Chris Impey, University of Arizona

The Expanding Universe

• Evolution of the scale factor from GR; metric assumes homogeneity and isotropy (~FLRW).
• Cosmological redshift fundamentally differs from a Doppler shift.
• Galaxies (and other objects) are used as space-time markers.

Expansion History and Contents. Science is Seeing. The expansion history since the big bang and the formation of large scale structure are driven by dark matter and dark energy.

Observing Galaxies. Galaxy Observables:

• Redshift (z)
• Magnitude (color, SED)
• Angular size (morphology)

All other quantities (size, luminosity, mass) derive from knowing a distance, which relates to redshift via a cosmological model. The observables are poor distance indicators. Other astrophysical luminosity predictors are required.

(Cowie) ~90% of the volume or look-back time is probed by deep field; ~90% of the galaxies in the volume are counted.

• Number of galaxies in observable universe: about 100 billion
• Number of stars in the observable universe: about 10^22

Heading for 100 Million (Baldry/Eisenstein)

Multiplex Advantage: Cannon (1910), Gemini/GMOS (2010). Photography: Sluggish, Spacious, Reliable. CCD Imaging: Speedy, Cramped, Finicky.

100 Years of Measuring Galaxy Redshifts

| Who | When | # Galaxies | Size | Time | Mag |
| --- | --- | --- | --- | --- | --- |
| Scheiner | 1899 | 1 (M31) | 0.3m | 7.5h | V = 3.4 |
| Slipher | 1914 | 15 | 0.6m | ~5h | V ~ 8 |
| Slipher | 1917 | 25 | 0.6m | ~5h | V ~ 8 |
| Hubble/Slipher | 1929 | 46 | 1.5m | ~6h | V ~ 10 |
| Hum/May/San | 1956 | 800 | 5m | ~2h | V = 11.6 |
| Photographic | 1960s | 1 | ~4m | ~2.5h | V ~ 15 |
| Image Intensifier | 1970s | | | | |
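The preview above notes that size, luminosity, and mass all derive from a distance, which relates to redshift via a cosmological model. As a rough illustration of that dependence, here is a minimal sketch that integrates the line-of-sight comoving distance for a flat ΛCDM model. The parameter values (H0 = 70 km/s/Mpc, Ωm = 0.3), the function name, and the trapezoid-rule integration are assumptions chosen for illustration; none of them come from the slides.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def comoving_distance_mpc(z, h0=70.0, omega_m=0.3, steps=10_000):
    """Line-of-sight comoving distance in Mpc for a flat LCDM model.

    h0 (km/s/Mpc) and omega_m are illustrative assumptions, not values
    taken from the slides. Radiation is neglected.
    """
    omega_l = 1.0 - omega_m  # flatness: Omega_m + Omega_Lambda = 1

    def inv_e(zp):
        # 1 / E(z), where E(z) = H(z) / H0
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    # Composite trapezoid rule for the integral of 1/E(z') from 0 to z
    dz = z / steps
    total = 0.5 * (inv_e(0.0) + inv_e(z))
    for i in range(1, steps):
        total += inv_e(i * dz)
    return (C_KM_S / h0) * total * dz


if __name__ == "__main__":
    for z in (0.01, 0.1, 1.0):
        print(f"z = {z}: D_C = {comoving_distance_mpc(z):.1f} Mpc")
```

At small redshift the result approaches the Hubble-law estimate cz/H0, while at z of order 1 the choice of cosmological model changes the answer noticeably, which is why the observables alone are poor distance indicators.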

New Constraints on Cosmic Reionization

New Constraints on Cosmic Reionization. Brant Robertson, UCSC. Exploring the Universe with JWST, ESA / ESTEC, The Netherlands, October 12, 2015

Brief History of the Observable Universe. [Figure: timeline with axes "Redshift" (1100, 12, 8, 6, 1) and "Billions of years ago" (13.8, 13.5, 13.4, 12.9, 8). Adapted from Robertson et al. Nature, 468, 49 (2010).]

Observational Facilities Over the Next Decade. [Figure: the same timeline annotated with Planck, Future 21cm, Current 21cm, LSST, Thirty Meter Telescope, WFIRST, ALMA, Hubble, Chandra, Fermi, and James Webb Space Telescope. Adapted from Robertson et al. Nature, 468, 49 (2010).] Observations with JWST, WFIRST, TMT/E-ELT, LSST, ALMA, and 21-cm will drive astronomical discoveries over the next decade.

1. What can we learn about Cosmic Dawn? Was it a dramatic event in a narrow period of time or did the birth of galaxies happen gradually?
2. Can we be sure light from early galaxies caused cosmic reionization? We have some guide on when reionization occurred from studying the thermal glow of the Big Bang (microwave background), but what were the sources of ionizing photons?
3.

Cosmology Slides

Inhomogeneous cosmologies. George Ellis, University of Cape Town. 27th Texas Symposium on Relativistic Astrophysics, Dallas, Texas, Thursday 12th December, 9h00-9h45

• The universe is inhomogeneous on all scales except the largest
• This conclusion has often been resisted by theorists who have said it could not be so (e.g. walls and large scale motions)
• Most of the universe is almost empty space, punctuated by very small very high density objects (e.g. solar system)
• Very non-linear: δρ/ρ = 10^30 in this room.

Models in cosmology

• Static: Einstein (1917), de Sitter (1917)
• Spatially homogeneous and isotropic, evolving: Friedmann (1922), Lemaitre (1927), Robertson-Walker, Tolman, Guth
• Spatially homogeneous anisotropic (Bianchi/Kantowski-Sachs) models: Gödel, Schücking, Thorne, Misner, Collins and Hawking, Wainwright, …
• Perturbed FLRW: Lifschitz, Hawking, Sachs and Wolfe, Peebles, Bardeen, Ellis and Bruni: structure formation (linear), CMB anisotropies, lensing

Spherically symmetric inhomogeneous:

• LTB: Lemaître, Tolman, Bondi, Silk, Krasinski, Celerier, Bolejko, …
• Szekeres (no symmetries): Sussman, Hellaby, Ishak, …
• Swiss cheese: Einstein and Strauss, Schücking, Kantowski, Dyer, …
• Lindquist and Wheeler: Perreira, Clifton, …
• Black holes: Schwarzschild, Kerr

The key observational point is that we can only observe on the past light cone (Hoyle, Schücking, Sachs). See the diagrams of our past light cone by Mark Whittle (Virginia).

Expand the spatial distances to see the causal structure: light cones at ±45°. [Figure labels: Observable, Geo Data, Start of universe, Particle Horizon (Rindler), Spacelike singularity (Penrose).]

The cosmological principle. The CP is the foundational assumption that the Universe obeys a cosmological law: It is necessarily spatially homogeneous and isotropic (Milne 1935, Bondi 1960). Thus a priori: geometry is Robertson-Walker. Weaker form: the Copernican Principle: We do not live in a special place (Weinberg 1973).


Arxiv:1707.01004V1 [Astro-Ph.CO] 4 Jul 2017

International Journal of Modern Physics E, © World Scientific Publishing Company. Primordial Nucleosynthesis. Alain Coc, Centre de Sciences Nucléaires et de Sciences de la Matière (CSNSM), CNRS/IN2P3, Univ. Paris-Sud, Université Paris–Saclay, Bâtiment 104, F–91405 Orsay Campus, France [email protected] Elisabeth Vangioni, Institut d’Astrophysique de Paris, UMR-7095 du CNRS, Université Pierre et Marie Curie, 98 bis bd Arago, 75014 Paris (France), Sorbonne Universités, Institut Lagrange de Paris, 98 bis bd Arago, 75014 Paris (France) [email protected] Received July 5, 2017.

Primordial nucleosynthesis, or big bang nucleosynthesis (BBN), is one of the three evidences for the big bang model, together with the expansion of the universe and the Cosmic Microwave Background. There is a good global agreement over a range of nine orders of magnitude between abundances of ⁴He, D, ³He and ⁷Li deduced from observations, and calculated in primordial nucleosynthesis. However, there remains a yet-unexplained discrepancy of a factor ≈3, between the calculated and observed lithium primordial abundances, that has not been reduced, neither by recent nuclear physics experiments, nor by new observations. The precision in deuterium observations in cosmological clouds has recently improved dramatically, so that nuclear cross sections involved in deuterium BBN need to be known with similar precision. We will shortly discuss nuclear aspects related to BBN of Li and D, BBN with non-standard neutron sources, and finally, improved sensitivity studies using Monte Carlo that can be used in other sites of nucleosynthesis.

Aspects of Spatially Homogeneous and Isotropic Cosmology

Faculty of Technology and Science, Department of Physics and Electrical Engineering. Mikael Isaksson. Aspects of Spatially Homogeneous and Isotropic Cosmology. Degree Project of 15 credit points, Physics Program. Date/Term: 02-04-11. Supervisor: Prof. Claes Uggla. Examiner: Prof. Jürgen Fuchs. Karlstads universitet, 651 88 Karlstad. Tfn 054-700 10 00, Fax 054-700 14 60, [email protected] www.kau.se

Abstract In this thesis, after a general introduction, we first review some differential geometry to provide the mathematical background needed to derive the key equations in cosmology. Then we consider the Robertson-Walker geometry and its relationship to cosmography, i.e., how one makes measurements in cosmology. We finally connect the Robertson-Walker geometry to Einstein's field equation to obtain so-called cosmological Friedmann-Lemaître models. These models are subsequently studied by means of potential diagrams.

Contents

1 Introduction
2 Differential geometry prerequisites
3 Cosmography
3.1 Robertson-Walker geometry
3.2 Concepts and measurements in cosmography
4 Friedmann-Lemaître dynamics
5 Bibliography

1 Introduction Cosmology comes from the Greek word kosmos, 'universe' and logia, 'study', and is the study of the large-scale structure, origin, and evolution of the universe, that is, of the universe taken as a whole [1]. Even though the word cosmology is relatively recent (first used in 1730 in Christian Wolff's Cosmologia Generalis), the study of the universe has a long history involving science, philosophy, esotericism, and religion. Cosmologies in their earliest form were concerned with what is now known as celestial mechanics (the study of the heavens).

Dark Energy and CMB

Dark Energy and CMB. Conveners: S. Dodelson and K. Honscheid. Topical Conveners: K. Abazajian, J. Carlstrom, D. Huterer, B. Jain, A. Kim, D. Kirkby, A. Lee, N. Padmanabhan, J. Rhodes, D. Weinberg. arXiv:1309.5386v2 [astro-ph.CO] 24 Sep 2013

Abstract The American Physical Society's Division of Particles and Fields initiated a long-term planning exercise over 2012-13, with the goal of developing the community's long term aspirations. The sub-group "Dark Energy and CMB" prepared a series of papers explaining and highlighting the physics that will be studied with large galaxy surveys and cosmic microwave background experiments. This paper summarizes the findings of the other papers, all of which have been submitted jointly to the arXiv.

1 Cosmology and New Physics Maps of the Universe when it was 400,000 years old from observations of the cosmic microwave background and over the last ten billion years from galaxy surveys point to a compelling cosmological model. This model requires a very early epoch of accelerated expansion, inflation, during which the seeds of structure were planted via quantum mechanical fluctuations. These seeds began to grow via gravitational instability during the epoch in which dark matter dominated the energy density of the universe, transforming small perturbations laid down during inflation into nonlinear structures such as million light-year sized clusters, galaxies, stars, planets, and people. Over the past few billion years, we have entered a new phase, during which the expansion of the Universe is accelerating, presumably driven by yet another substance, dark energy. Cosmologists have historically turned to fundamental physics to understand the early Universe, successfully explaining phenomena as diverse as the formation of the light elements, the process of electron-positron annihilation, and the production of cosmic neutrinos.

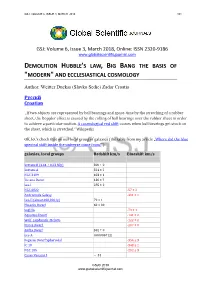

And Ecclesiastical Cosmology

GSJ: Volume 6, Issue 3, March 2018, Online: ISSN 2320-9186 www.globalscientificjournal.com

DEMOLITION HUBBLE'S LAW, BIG BANG THE BASIS OF "MODERN" AND ECCLESIASTICAL COSMOLOGY. Author: Weitter Duckss (Slavko Sedic), Zadar, Croatia

"If two objects are represented by ball bearings and space-time by the stretching of a rubber sheet, the Doppler effect is caused by the rolling of ball bearings over the rubber sheet in order to achieve a particular motion. A cosmological red shift occurs when ball bearings get stuck on the sheet, which is stretched." (Wikipedia)

OK, let's check that on our local group of galaxies (the table from my article "Where did the blue spectral shift inside the universe come from?"):

| Galaxies, local groups | Redshift km/s | Blueshift km/s |
| --- | --- | --- |
| Sextans B (4.44 ± 0.23 Mly) | 300 ± 0 | |
| Sextans A | 324 ± 2 | |
| NGC 3109 | 403 ± 1 | |
| Tucana Dwarf | 130 ± ? | |
| Leo I | 285 ± 2 | |
| NGC 6822 | | -57 ± 2 |
| Andromeda Galaxy | | -301 ± 1 |
| Leo II (about 690,000 ly) | 79 ± 1 | |
| Phoenix Dwarf | 60 ± 30 | |
| SagDIG | | -79 ± 1 |
| Aquarius Dwarf | | -141 ± 2 |
| Wolf–Lundmark–Melotte | | -122 ± 2 |
| Pisces Dwarf | | -287 ± 0 |
| Antlia Dwarf | 362 ± 0 | |
| Leo A | 0.000067 (z) | |
| Pegasus Dwarf Spheroidal | | -354 ± 3 |
| IC 10 | | -348 ± 1 |
| NGC 185 | | -202 ± 3 |
| Canes Venatici I | ~ 31 | |
| Andromeda III | | -351 ± 9 |
| Andromeda II | | -188 ± 3 |
| Triangulum Galaxy | | -179 ± 3 |
| Messier 110 | | -241 ± 3 |
| NGC 147 (2.53 ± 0.11 Mly) | | -193 ± 3 |
| Small Magellanic Cloud | 0.000527 | |
| Large Magellanic Cloud | - | - |
| M32 | | -200 ± 6 |
| NGC 205 | | -241 ± 3 |
| IC 1613 | | -234 ± 1 |
| Carina Dwarf | 230 ± 60 | |
| Sextans Dwarf | 224 ± 2 | |
| Ursa Minor Dwarf (200 ± 30 kly) | | -247 ± 1 |
| Draco Dwarf | | -292 ± 21 |
| Cassiopeia Dwarf | | -307 ± 2 |
| Ursa Major II Dwarf | | -116 |
| Leo IV | 130 | |
| Leo V (585 kly) | 173 | |
| Leo T | | -60 |
| Bootes II | | -120 |
| Pegasus Dwarf | | -183 ± 0 |
| Sculptor Dwarf | 110 ± 1 | |

Etc.

Structure Formation in a CDM Universe

Structure formation in a CDM Universe. Thomas Quinn, University of Washington; Greg Stinson, Charlotte Christensen, Alyson Brooks, Ferah Munshi, Fabio Governato, Andrew Pontzen, Chris Brook, James Wadsley

Microwave Background Fluctuations (image courtesy NASA/WMAP). Large Scale Clustering. A well constrained cosmology. Contents of the Universe. Can it make one of these?

Structure formation issues

● The substructure problem
● The angular momentum problem
● The cusp problem

Light vs CDM structure: Stars, Gas, Dark Matter. The CDM Substructure Problem (Moore et al. 1998). Substructure down to 100 pc (Stadel et al. 2009). Consequences for direct detection (Afshordi et al. 2010). Warm Dark Matter: cold, warm, hot. Constant Core Mass (Strigari et al. 2008). Light vs Mass: number density vs log(halo or galaxy mass). Simulations of Galaxy formation (Guo et al. 2010).

Origin of Galaxy Spins

● Torques on the collapsing galaxy (Peebles, 1969; Ryden, 1988): λ ≡ L E^(1/2) / (G M^(5/2)) ≈ 0.09 for galaxies

Distribution of Halo Spins: f(λ), with λ = L E^(1/2) / (G M^(5/2)) (Gardner, 2001). Angular momentum Problem: too few low-J baryons (Van den Bosch 01, Bullock 01). Core/Cusps in Dwarfs (Moore 1994). Warm DM doesn't help (Moore et al. 1999).

Dwarf simulated to z=0: stellar mass = 5e8 M_sun, M_i = -16.8, g - r = 0.53, V_rot = 55 km/s, R_d = 1 kpc, M_HI/M_* = 2.5, f_b = 0.3 f_b,cosmic. i band image. Dwarf Light Profile. Rotation Curve.

Resolution effects. Low resolution: bad. Low resolution star formation: worse. Inner Profile Slopes vs Mass (Governato, Zolotov et al. 2012). Constant Core Masses. Angular Momentum: outflows preferentially remove low J baryons. Simulation Results: Resolution and H2 (Munshi, in prep.)
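The dimensionless spin parameter quoted on the slides, λ ≡ L E^(1/2) / (G M^(5/2)), can be evaluated directly once the total angular momentum L, energy E, and mass M of a halo are known. The sketch below is only an illustration: the function name, the unit system, and the use of |E| (bound systems have negative total energy, so the absolute value is assumed here) are choices made for this example rather than anything stated in the slides.

```python
import math

# Gravitational constant in kpc (km/s)^2 / Msun (approximate value).
G_KPC_KMS2_MSUN = 4.30091e-6


def spin_parameter(ang_mom, energy, mass, g=G_KPC_KMS2_MSUN):
    """Dimensionless spin parameter lambda = L |E|^(1/2) / (G M^(5/2)).

    Assumed units (hypothetical, chosen so that lambda is dimensionless):
      ang_mom : Msun * kpc * km/s
      energy  : Msun * (km/s)^2; the absolute value is taken
      mass    : Msun
    """
    return ang_mom * math.sqrt(abs(energy)) / (g * mass ** 2.5)


if __name__ == "__main__":
    # Unit check with G = 1: L = 1, E = -4, M = 1 gives lambda = 2.
    print(spin_parameter(1.0, -4.0, 1.0, g=1.0))
```

Because λ is dimensionless, any consistent unit system works as long as G is expressed in the same units; passing `g=1.0` is a convenient way to check the arithmetic in simulation units.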

Methods of Measuring Astronomical Distances

LASER INTERFEROMETER GRAVITATIONAL WAVE OBSERVATORY (LIGO), LIGO SCIENTIFIC COLLABORATION. Technical Note LIGO-T1200427-v1, 2012/09/02. Methods of Measuring Astronomical Distances. Sarah Gossan and Christian Ott

California Institute of Technology, LIGO Project, MS 18-34, Pasadena, CA 91125. Phone (626) 395-2129, Fax (626) 304-9834, E-mail: [email protected]

Massachusetts Institute of Technology, LIGO Project, Room NW22-295, Cambridge, MA 02139. Phone (617) 253-4824, Fax (617) 253-7014, E-mail: [email protected]

LIGO Hanford Observatory, Route 10, Mile Marker 2, Richland, WA 99352. Phone (509) 372-8106, Fax (509) 372-8137, E-mail: [email protected]

LIGO Livingston Observatory, 19100 LIGO Lane, Livingston, LA 70754. Phone (225) 686-3100, Fax (225) 686-7189, E-mail: [email protected]

http://www.ligo.org/

1 Introduction The determination of source distances, from solar system to cosmological scales, holds great importance for the purposes of all areas of astrophysics. Over all distance scales, there is not one method of measuring distances that works consistently, and as a result, distance scales must be built up step-by-step, using different methods that each work over limited ranges of the full distance required. Broadly, astronomical distance 'calibrators' can be categorised as primary, secondary or tertiary, with secondary calibrated themselves by primary, and tertiary by secondary, thus compounding any uncertainties in the distances measured with each rung ascended on the cosmological 'distance ladder'. Typically, primary calibrators can only be used for nearby stars and stellar clusters, whereas secondary and tertiary calibrators are employed for sources within and beyond the Virgo cluster respectively.
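To make the compounding of uncertainties along the distance ladder concrete, the following sketch combines the fractional uncertainty contributed at each rung. It assumes the rung errors are independent and small, so that they add in quadrature to first order; that assumption, the function name, and the example percentages are all hypothetical and are not taken from the technical note.

```python
import math


def compounded_fractional_error(rung_errors):
    """Combine fractional distance uncertainties from successive rungs.

    Assumes independent, small errors, so they add in quadrature.
    Correlated calibration errors would need a different treatment.
    """
    return math.sqrt(sum(e * e for e in rung_errors))


if __name__ == "__main__":
    # Hypothetical fractional errors for primary, secondary, and tertiary rungs.
    rungs = [0.03, 0.04, 0.12]
    for n in range(1, len(rungs) + 1):
        total = compounded_fractional_error(rungs[:n])
        print(f"after rung {n}: {100 * total:.1f}% fractional uncertainty")
```

Each rung can only increase the total, which is the sense in which a tertiary calibrator inherits the uncertainty of every calibrator below it.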