Wangth (2017-2020, CC BY-SA) ? (1996-2016)

Recommended publications

Storage Management: GEOM, UFS, ZFS

FreeBSD Study Group, Part 1. Storage Management: GEOM, UFS, ZFS. Hiroki Sato <[email protected]>, Tokyo Institute of Technology / FreeBSD Project. 2012/7/20 (c) Hiroki Sato

About the speaker: Hiroki Sato <[email protected]>
▶ Has worked on a variety of things in *BSD-related projects for about 10 years
▶ Kernel development, userland development, documentation translation, server hosting, and so on
▶ FreeBSD core team member (fourth term, since 2006) and release engineer (commit ratio across src/ports/doc is roughly 1:1:1)
▶ Organizer of AsiaBSDCon
▶ For technical consultation, or speaking and writing requests, contact [email protected]

Topics
▶ Storage management
  ▶ Basic knowledge first
▶ The GEOM framework
  ▶ Structure and concepts
  ▶ Usage
▶ File systems
  ▶ Understanding the principles and technical details
  ▶ Structure of UFS
  ▶ Structure of ZFS (next session)
▶ Real-world operation and concrete examples of GEOM, UFS, and ZFS (next session)

Review: storage devices in UNIX-like OSes
Diagram labels: storage device (hardware), kernel (software), userland processes.
Diagram labels: HDD; inside the kernel: device driver, GEOM subsystem, /dev/foo (devfs), buffer cache, VM subsystem, physio(), file system; userland application.

Review: storage devices in UNIX-like OSes
▶ How does a storage device appear?
▶ In UNIX-like OSes, resources are basically "files"
  ▶ /dev/ada0 (SATA, SAS HDD)
  ▶ /dev/da0 (SCSI HDD, USB mass storage class device)
▶ Special files (device nodes)

    # ls -al /dev/ada*
    crw-r----- 1 root operator 0, 75 Jul 19 23:46 /dev/ada0
    crw-r----- 1 root operator 0, 77 Jul 19 23:46 /dev/ada1
    crw-r----- 1 root operator 0, 79 Jul 19 23:46 /dev/ada1s1
    crw-r----- 1 root operator 0, 81 Jul 19 23:46 /dev/ada1s1a
    crw-r----- 1 root operator 0, 83 Jul 19 23:46 /dev/ada1s1b
    crw-r----- 1 root operator 0, 85 Jul 19 23:46 /dev/ada1s1d
    crw-r----- 1 root operator 0, 87 Jul 19 23:46 /dev/ada1s1e
    crw-r----- 1 root operator 0, 89

Porting to Another OS

Brno University of Technology, Faculty of Information Technology, Department of Information Systems. Porting of RedirFS on Other OS (RedirFS - Portace na jiné OS). Master's thesis. Author: Bc. Lukáš Czerner. Supervisor: Ing. Tomáš Kašpárek. Brno 2010.

Abstract

This thesis describes the preparation for porting, as well as the porting itself, of the RedirFS Linux kernel module to FreeBSD. It describes the basic differences between the Linux and FreeBSD kernels, and the differences in their implementations of the Virtual Filesystem (VFS) layer, the part of the kernel that is crucial for RedirFS. It then examines the possibilities and different approaches to implementing RedirFS functionality on FreeBSD, evaluates them, and proposes an ideal approach to the port. The following chapters introduce the required functionality of the new module together with its proposed architecture. Finally, the design and implementation of the new module are described in detail, so that the reader can clearly understand how the module implements the required functionality.

ZFS 2018 and Onward

ZFS: 2018 and Onward. By Allan Jude.

2018 is going to be a big year for not only FreeBSD, but for OpenZFS as well. OpenZFS is the collaboration of developers from IllumOS, FreeBSD, Linux, Mac OS X, many industry vendors, and a number of other projects to maintain and advance the open-source version of Sun's ZFS filesystem.

Improved Pool Import • 2018Q1
A new patch set changes the way pools are imported, such that they depend less on configuration data from the system. Instead, the possibly outdated configuration from the system is used to find the pool, and partially open it read-only. Then the correct on-disk configuration is loaded. This reduces the number of

RAID-Z Expansion • 2018Q4
The ability to add an additional disk to an existing RAID-Z VDEV to grow its size without changing its redundancy level. For example, a RAID-Z2 consisting of 6 disks could be expanded to contain 7 disks, increasing the available space. This is accomplished by reflowing the data across the disks, so as to not change an individual block's offset from the start of the VDEV. The new disk will appear as a new column on the right, and the data will be relocated to maintain the offset within the VDEV. Similar to reflowing a paragraph by making it wider, without changing the order of the words. In the end this means all of the new space shows up at the end of the disk, without any fragmentation.
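The paragraph-reflow analogy for RAID-Z expansion can be sketched as a toy model in Python. This is only an illustration under strong assumptions: it ignores parity, block sizes, and everything else a real RAID-Z VDEV tracks, and the function name `place` is hypothetical. It only shows that laying the same blocks out in the same order across one more column leaves all free space at the end.

```python
def place(blocks, width, rows):
    """Lay blocks out row by row across `width` disks; None marks free space.

    Toy model only: real RAID-Z also stores parity and variable-size blocks.
    """
    cells = list(blocks) + [None] * (rows * width - len(blocks))
    return [cells[r * width:(r + 1) * width] for r in range(rows)]


blocks = list(range(12))                 # 12 data blocks, numbered by logical offset
before = place(blocks, width=6, rows=2)  # 6 disks, completely full
after = place(blocks, width=7, rows=2)   # same data reflowed across 7 disks

# Flattening either layout yields the blocks in the same order, so each
# block keeps its offset from the start of the VDEV.
flat_after = [b for row in after for b in row]
```

In this model `after[1]` ends with two `None` cells: the space gained from the new disk appears as one contiguous run at the end rather than as holes between blocks.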

GELI(8) FreeBSD System Manager's Manual: geli

GELI(8) FreeBSD System Manager's Manual GELI(8)

NAME
    geli - control utility for cryptographic GEOM class

SYNOPSIS
    To compile GEOM ELI into your kernel, place the following lines in your kernel configuration file:

        device crypto
        options GEOM_ELI

    Alternately, to load the GEOM ELI module at boot time, place the following line in your loader.conf(5):

        geom_eli_load="YES"

    Usage of the geli(8) utility:

        geli init [-bPv] [-a algo] [-i iterations] [-K newkeyfile] [-l keylen] [-s sectorsize] prov
        geli label - an alias for init
        geli attach [-dpv] [-k keyfile] prov
        geli detach [-fl] prov ...
        geli stop - an alias for detach
        geli onetime [-d] [-a algo] [-l keylen] [-s sectorsize] prov ...
        geli setkey [-pPv] [-i iterations] [-k keyfile] [-K newkeyfile] [-n keyno] prov
        geli delkey [-afv] [-n keyno] prov
        geli kill [-av] [prov ...]
        geli backup [-v] prov file
        geli restore [-v] file prov
        geli clear [-v] prov ...
        geli dump [-v] prov ...
        geli list
        geli status
        geli load
        geli unload

DESCRIPTION
    The geli utility is used to configure encryption on GEOM providers.

    The following is a list of the most important features:
    • Utilizes the crypto(9) framework, so when there is crypto hardware available, geli will make use of it automatically.
    • Supports many cryptographic algorithms (currently AES, Blowfish and 3DES).
    • Can create a key from a couple of components (user entered passphrase, random bits from a file, etc.).
    • Allows to encrypt the root partition - the user will be asked for the passphrase before the root file system is mounted.
    • The passphrase of the user is strengthened with: B. Kaliski, PKCS #5: Password-Based Cryptography Specification, Version 2.0.
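PKCS #5 version 2.0, cited in the last feature above, specifies the PBKDF2 key derivation function. A minimal Python sketch of that kind of passphrase strengthening follows. The hash (HMAC-SHA512), the salt length, the iteration count, and the function name `strengthen` are assumptions chosen for illustration; the excerpt does not say which values geli itself uses.

```python
import hashlib
import os


def strengthen(passphrase: bytes, salt: bytes, iterations: int, keylen: int = 64) -> bytes:
    """Stretch a passphrase into key material with PBKDF2 (PKCS #5 v2.0).

    HMAC-SHA512 is an assumed choice for this sketch, not taken from the man page.
    """
    return hashlib.pbkdf2_hmac("sha512", passphrase, salt, iterations, dklen=keylen)


salt = os.urandom(64)  # assumed salt size; stored alongside the encrypted metadata
key = strengthen(b"example passphrase", salt, iterations=100_000)
```

A higher iteration count makes every passphrase guess proportionally more expensive for an attacker, which is the reason the synopsis exposes an `-i iterations` option on `geli init` and `geli setkey`.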

967-Malone.Pdf

Security through obscurity: a review of a few of FreeBSD's lesser-known security capabilities. By David Malone.

David is a system administrator at Trinity College, Dublin, a researcher in NUI Maynooth, and a committer on the FreeBSD project. He likes to express himself on technical matters, and so has a Ph.D. in mathematics and is the co-author of IPv6 Network Administration (O'Reilly, 2005). [email protected]

In this article I'm going to look at some less well known security features of FreeBSD. Some of these features are common to all the BSDs. Others are FreeBSD-specific or have been extended in some way in FreeBSD. The features that I will be discussing are available in FreeBSD 5.4.

First, I'll mention some features that I don't plan to cover. FreeBSD jails are like a more powerful version of chroot. Like chroot they are restricted to a subtree of the file system, but users (including root) in a jail are also restricted in terms of networking and system calls. It should be safe to give root access to a jail to an untrusted user, making a jail like virtual system, not unlike some applications of UML [1] or Xen [2] but without running separate kernels for each system. A lot has already been written about jails [3], so I won't dwell on them further here.

Another feature that I don't plan to spend much time on is ACLs. ACLs are an extension of the traditional UNIX permissions system to allow you to specify permissions for users and groups other than the file's owner and group.

FreeBSD Enterprise Storage (Polish BSD User Group, 2020/02/11)

FreeBSD Enterprise Storage. Polish BSD User Group, 2020/02/11. Sławomir Wojciech Wojtczak [email protected] vermaden.wordpress.com twitter.com/vermaden bsd.network/@vermaden https://is.gd/bsdstg

What is Enterprise Storage?
The wikipedia.org/wiki/enterprise_storage page tells nothing about enterprise. Actually just redirects to wikipedia.org/wiki/data_storage page. The other wikipedia.org/wiki/computer_data_storage page also does the same. The wikipedia.org/wiki/enterprise is just meta page with links.

Common Characteristics of Enterprise Storage
● Category that includes services/products designed for large organizations.
● Can handle large volumes of data and large numbers of simultaneous users.
● Involves centralized storage repositories such as SAN or NAS devices.
● Requires more time and experience/expertise to set up and operate.
● Generally costs more than consumer or small business storage devices.
● Generally offers higher reliability/availability/scalability.

EnterpriCe or EnterpriSe?
DuckDuckGo does not provide search results count.
Google search for enterprice word gives ~ 1 )00 000 results.
Google search for enterprise word gives ~ 1 000 000 000 results (1000 times more).
● Most dictionaries for enterprice word sends you to enterprise term.
● Given the PRICE of many enterprise solutions it could be enterPRICE ...
● ... or enterpri$e as well.

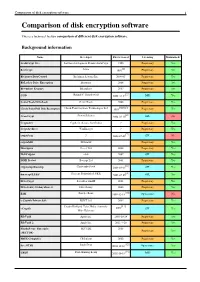

Comparison of Disk Encryption Software

Comparison of disk encryption software

This is a technical feature comparison of different disk encryption software.

Background information

| Name | Developer | First released | Licensing | Maintained? |
|---|---|---|---|---|
| ArchiCrypt Live | Softwaredevelopment Remus ArchiCrypt | 1998 | Proprietary | Yes |
| BestCrypt | Jetico | 1993 [1] | Proprietary | Yes |
| BitArmor DataControl | BitArmor Systems Inc. | 2008-05 | Proprietary | Yes |
| BitLocker Drive Encryption | Microsoft | 2006 | Proprietary | Yes |
| Bloombase Keyparc | Bloombase | 2007 | Proprietary | Yes |
| CGD | Roland C. Dowdeswell | 2002-10-04 [2] | BSD | Yes |
| CenterTools DriveLock | CenterTools | 2008 | Proprietary | Yes |
| Check Point Full Disk Encryption | Check Point Software Technologies Ltd | 1999 [3][4][5] | Proprietary | Yes |
| CrossCrypt | Steven Scherrer | 2004-02-10 [6] | GPL | No |
| Cryptainer | Cypherix (Secure-Soft India) | ? | Proprietary | Yes |
| CryptArchiver | WinEncrypt | ? | Proprietary | Yes |
| cryptoloop | ? | 2003-07-02 [7] | GPL | No |
| cryptoMill | SEAhawk | | Proprietary | Yes |
| Discryptor | Cosect Ltd. | 2008 | Proprietary | Yes |
| DiskCryptor | ntldr | 2007 | GPL | Yes |
| DISK Protect | Becrypt Ltd | 2001 | Proprietary | Yes |
| cryptsetup/dmsetup | Christophe Saout | 2004-03-11 [8] | GPL | Yes |
| dm-crypt/LUKS | Clemens Fruhwirth (LUKS) | 2005-02-05 [9] | GPL | Yes |
| DriveCrypt | SecurStar GmbH | 2001 | Proprietary | Yes |
| DriveSentry GoAnywhere 2 | DriveSentry | 2008 | Proprietary | Yes |
| E4M | Paul Le Roux | 1998-12-18 [10] | Open source | No |
| e-Capsule Private Safe | EISST Ltd. | 2005 | Proprietary | Yes |
| eCryptfs | Dustin Kirkland, Tyler Hicks (formerly Mike Halcrow) | 2005 [11] | GPL | Yes |
| FileVault | Apple Inc. | 2003-10-24 | Proprietary | Yes |
| FileVault 2 | Apple Inc. | 2011-7-20 | Proprietary | |

The Daemon's Dozen: Introducing FreeBSD 12.0

The Daemon's Dozen: Introducing FreeBSD 12.0. By Michael W Lucas.

We've all been eagerly awaiting FreeBSD 12.0 since about two hours after FreeBSD 11.0 escaped. Here I'll take a few looks at some new features, removed features, and forthcoming changes, starting with storage.

ZFS users are probably familiar with the beadm(8) package, the boot environment administration program. We've previously covered it in the FreeBSD Journal. FreeBSD now includes bectl(8), a boot environment control program. It retains much of the beadm(8) syntax, letting you create, activate, list, and destroy boot environments. Additionally, it lets you mount unused boot environments and create jails from those boot environments. I've used jails to troubleshoot problematic boot environments, so this is a welcome addition to the base system.

Many people have used GELI to encrypt disks. Doing hard drive encryption properly is tricky, but GELI handily reduces the risk of exposing confidential data when your laptop gets stolen. GELI was painful to use on systems with many hard drives, though. Each disk needed to be configured separately. With FreeBSD 12.0, GELI can now configure multiple disks simultaneously so long as they use the same encryption key, easing this annoyance for sysadmins who administer such machines. Also, in the GELI realm, the installer can now encrypt disks on hosts booting off of UEFI.

FreeBSD's GEOM system lets us stack storage transformations on top of one another in exactly the way you need for your application, hardware, and environment.

FreeNAS® 11.2-U3 User Guide

FreeNAS® 11.2-U3 User Guide, March 2019 Edition. FreeNAS® is © 2011-2019 iXsystems. FreeNAS® and the FreeNAS® logo are registered trademarks of iXsystems. FreeBSD® is a registered trademark of the FreeBSD Foundation. Written by users of the FreeNAS® network-attached storage operating system. Version 11.2. Copyright © 2011-2019 iXsystems (https://www.ixsystems.com/)

CONTENTS
Welcome
Typographic Conventions
1 Introduction
  1.1 New Features in 11.2
    1.1.1 RELEASE-U1
    1.1.2 U2
    1.1.3 U3
  1.2 Path and Name Lengths
  1.3 Hardware Recommendations
    1.3.1 RAM
    1.3.2 The Operating System Device
    1.3.3 Storage Disks and Controllers
    1.3.4 Network Interfaces
  1.4 Getting Started with ZFS
2 Installing and Upgrading
  2.1 Getting FreeNAS®
  2.2 Preparing the Media

Seven Ways to Increase Security in a New Freebsd Installation by MARIUSZ ZABORSKI

Seven Ways to Increase Security in a New FreeBSD Installation. By Mariusz Zaborski.

FreeBSD can be used as a desktop or as a server operating system. When setting up new boxes, it is crucial to choose the most secure configuration. We have a lot of data on our computers, and it all needs to be protected. This article describes seven configuration improvements that everyone should remember when configuring a new FreeBSD box.

1. Full Disk Encryption

With a fresh installation, it is important to decide about hard drive encryption, particularly since it may be challenging to add later. The purpose of disk encryption is to protect data stored on the system. We store a lot of personal and business data on our computers, and most of the time, we do not want that to be read by unauthorized people. Our laptop may be stolen, and if so, it should be assumed that a thief might gain access to almost every document, photo, and all pages logged in to through our web browser. Some people may also try to tamper with or read our data when we are far away from our computer. We recommend encrypting all systems, especially servers or personal computers. Encryption of hard disks is relatively inexpensive and the advantages are significant.

In FreeBSD, there are three main ways to encrypt storage:
• GBDE,
• ZFS native encryption,
• GELI.

Geom Based Disk Encryption (GBDE) is the oldest disk encryption mechanism in FreeBSD, but it is currently neglected by developers. GBDE supports only AES-CBC with a 128-bit key length and uses a separate key for each write, which may introduce some CPU overhead.

GELI: Disk Encryption in FreeBSD

GELI: Disk Encryption in FreeBSD. Michał Borysiak [email protected] November 15, 2018

Disk encryption facilities in FreeBSD
• GBDE (GEOM-based Disk Encryption)
  • FreeBSD 5, 2003
  • Poul-Henning Kamp
  • GEOM module in the kernel gbde(4)
  • User space tool gbde(8)
  • Creates new device with .bde suffix
• GELI (GEOM eli)
  • FreeBSD 6, 2005
  • Paweł Jakub Dawidek
  • GEOM module in the kernel
  • User space tool geli(8)
  • Creates new device with .eli suffix
• Operates on sector level
• New devices are created to allow plain text access to the data

The GEOM framework
• Standardized way to access storage layers
  • FreeBSD 5, 2003
  • Poul-Henning Kamp
• Set of GEOM classes
• Classes can be freely stackable in any order
• Abstraction of an I/O request transformation
• Transformations: striping, mirroring, partitioning, encryption
• Providers and consumers
• Auto discovery

GBDE
• Master key (2048 random bits) is located in a random place on the GEOM provider, and its location is stored in a lock file
• The lock file is encrypted using a user password and should be stored separately
• Up to 4 independent user secrets (lock sectors)
• Each sector is encrypted using AES-CBC-128 and a random sector key
• The sector key is encrypted using a key derived from the master key and the sector number
• Disk space overhead to store per-sector keys
• Non-atomic disk updates, since sector keys are stored separately from data
• Does not support mounting encrypted device in the / file system

GELI
• Simple sector-to-sector encryption
• To perform symmetric cryptography on sectors
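The GBDE points about per-sector keys can be illustrated with a short Python sketch. It is not GBDE's actual algorithm: the slides do not give the derivation function, so HMAC-SHA256 is used here purely as a stand-in, and the names `sector_kek` and `key_overhead`, the 512-byte sector size, and the 16-byte stored key are all assumptions made for illustration.

```python
import hashlib
import hmac
import os

MASTER_KEY = os.urandom(256)  # 2048 random bits, the master key size given on the slide


def sector_kek(master_key: bytes, sector_no: int) -> bytes:
    """Derive a 128-bit key-encrypting key from the master key and sector number.

    HMAC-SHA256 is a stand-in; the real GBDE derivation is not described here.
    """
    msg = sector_no.to_bytes(8, "little")
    return hmac.new(master_key, msg, hashlib.sha256).digest()[:16]


def key_overhead(sector_size: int = 512, key_size: int = 16) -> float:
    """Fraction of extra disk space if one key of `key_size` bytes is stored per sector."""
    return key_size / sector_size


kek0 = sector_kek(MASTER_KEY, 0)  # protects the random key of sector 0
kek1 = sector_kek(MASTER_KEY, 1)  # a different key for sector 1
```

Under these assumed sizes `key_overhead()` is 16 / 512, about 3% of the disk. Because each stored sector key lives apart from the data it protects, a write has to update two locations, which matches the slide's note on non-atomic disk updates.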

Talk: a Better Rescue Environment for BSD Systems

A Remote Rescue Environment for FreeBSD Systems. LinuxTag 2006; Wiesbaden, Germany. Friday May 5, 2006; 10:00-11:00. Adrian Steinmann, Webgroup Consulting AG, Zürich <[email protected]>

Agenda
• What is a Remote Rescue Environment
• Introduction to RAMdisks and their uses
• Compact Flash and "Small" Hardware
• Building and Deploying the Rescue RAMdisk
• Status of Work in Progress
• Demonstration and Questions & Answers

FreeBSD Rescue Environment: Traditional versus Something New

Traditional
‣ Serial console
‣ boot -s
‣ fsck -y /
‣ /rescue (statically linked)

New Idea
★ Serial console optional
★ ssh root@sickhost
★ RAMdisk root always clean
★ RAMdisk "mostly static"

"Single User Secure Shell"
The goal is to be able to login remotely onto a system with SSH even when the harddisk where the root filesystem resides is acting up!

RAMdisk to the Rescue
• Swap-backed filesystems (i.e., /tmp)
• Malloc-backed filesystems for read-write area in read-only environments (i.e., /var on compact flash or mfsroot on install CD)
• Create "disk images" to build custom distributions

    dd if=/dev/zero of=somebackingfile bs=1k count=5k
    mdconfig -a -t vnode -f somebackingfile -u 0
    bsdlabel -w md0 auto
    newfs md0c
    mount /dev/md0c /mnt

• Linux often boots using "initrd" (initial ramdisk)