FreeBSD Enterprise Storage. Polish BSD User Group. Welcome. 2020/02/11

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Copy on Write Based File Systems Performance Analysis and Implementation

Copy On Write Based File Systems Performance Analysis And Implementation Sakis Kasampalis Kongens Lyngby 2010 IMM-MSC-2010-63 Technical University of Denmark Department Of Informatics Building 321, DK-2800 Kongens Lyngby, Denmark Phone +45 45253351, Fax +45 45882673 [email protected] www.imm.dtu.dk Abstract In this work I am focusing on Copy On Write based file systems. Copy On Write is used on modern file systems for providing (1) metadata and data consistency using transactional semantics, (2) cheap and instant backups using snapshots and clones. This thesis is divided into two main parts. The first part focuses on the design and performance of Copy On Write based file systems. Recent efforts aiming at creating a Copy On Write based file system are ZFS, Btrfs, ext3cow, Hammer, and LLFS. My work focuses only on ZFS and Btrfs, since they support the most advanced features. The main goals of ZFS and Btrfs are to offer a scalable, fault tolerant, and easy to administrate file system. I evaluate the performance and scalability of ZFS and Btrfs. The evaluation includes studying their design and testing their performance and scalability against a set of recommended file system benchmarks. Most computers are already based on multi-core and multiple processor architectures. Because of that, the need for using concurrent programming models has increased. Transactions can be very helpful for supporting concurrent programming models, which ensure that system updates are consistent. Unfortunately, the majority of operating systems and file systems either do not support transactions at all, or they simply do not expose them to the users.

PDF, 32 Pages

Helge Meinhard / CERN V2.0 30 October 2015 HEPiX Fall 2015 at Brookhaven National Lab After 2004, the lab, located on Long Island in the State of New York, U.S.A., was host to a HEPiX workshop again. Access to the site was considerably easier for the registered participants than 11 years ago. The meeting took place in a very nice and comfortable seminar room well adapted to the size and style of meeting such as HEPiX. It was equipped with advanced (sometimes too advanced for the session chairs to master!) AV equipment and power sockets at each seat. Wireless networking worked flawlessly and with good bandwidth. The welcome reception on Monday at Wading River at the Long Island sound and the workshop dinner on Wednesday at the ocean coast in Patchogue showed more of the beauty of the rather natural region around the lab. For those interested, the hosts offered tours of the BNL RACF data centre as well as of the STAR and PHENIX experiments at RHIC. The meeting ran very smoothly thanks to an efficient and experienced team of local organisers headed by Tony Wong, who as North-American HEPiX co-chair also co-ordinated the workshop programme. Monday 12 October 2015 Welcome (Michael Ernst / BNL) On behalf of the lab, Michael welcomed the participants, expressing his gratitude to the audience to have accepted BNL's invitation. He emphasised the importance of computing for high-energy and nuclear physics. He then introduced the lab focusing on physics, chemistry, biology, material science etc. The total head count of BNL-paid people is close to 3'000.

Storage Management: GEOM, UFS, ZFS

FreeBSD Study Group. Part 1. Storage Management: GEOM, UFS, ZFS. Hiroki Sato <[email protected]>, Tokyo Institute of Technology / FreeBSD Project, 2012/7/20. (c) Hiroki Sato. 94 slides.

About the speaker: Hiroki Sato <[email protected]> ▶ Has been doing all sorts of work in *BSD-related projects for about 10 years ▶ Kernel development, userland development, documentation translation, server hosting, and so on ▶ FreeBSD Core Team member (fourth term, since 2006) and release engineer (commit ratio across src/ports/doc is roughly 1:1:1) ▶ Organizer of AsiaBSDCon ▶ For technical consultation or speaking and writing requests, contact [email protected]

Topics ▶ Storage management ▶ First, the basics ▶ The GEOM framework ▶ Structure and concepts ▶ How to use it ▶ File systems ▶ Learning the principles and technical details ▶ Structure of UFS ▶ Structure of ZFS (next session) ▶ Real-world operation and concrete examples of GEOM, UFS, and ZFS (next session)

Review: storage devices in UNIX-like OSes. [Diagram: storage device (hardware); kernel (software); userland processes]

Review: storage devices in UNIX-like OSes. [Diagram: HDD; kernel; device driver; GEOM subsystem; /dev/foo (devfs); buffer cache; VM subsystem; physio(); file system; userland application]

Review: storage devices in UNIX-like OSes ▶ How does a storage device appear? ▶ In UNIX-like OSes, resources are basically "files" ▶ /dev/ada0 (SATA, SAS HDD) ▶ /dev/da0 (SCSI HDD, USB mass storage class device) ▶ Special files (device nodes) # ls -al /dev/ada* crw-r----- 1 root operator 0, 75 Jul 19 23:46 /dev/ada0 crw-r----- 1 root operator 0, 77 Jul 19 23:46 /dev/ada1 crw-r----- 1 root operator 0, 79 Jul 19 23:46 /dev/ada1s1 crw-r----- 1 root operator 0, 81 Jul 19 23:46 /dev/ada1s1a crw-r----- 1 root operator 0, 83 Jul 19 23:46 /dev/ada1s1b crw-r----- 1 root operator 0, 85 Jul 19 23:46 /dev/ada1s1d crw-r----- 1 root operator 0, 87 Jul 19 23:46 /dev/ada1s1e crw-r----- 1 root operator 0, 89

Porting to Another OS

BRNO UNIVERSITY OF TECHNOLOGY (VYSOKÉ UČENÍ TECHNICKÉ V BRNĚ), FACULTY OF INFORMATION TECHNOLOGY, DEPARTMENT OF INFORMATION SYSTEMS. REDIRFS - PORTACE NA JINÉ OS (PORTING OF REDIRFS ON OTHER OS). MASTER'S THESIS. AUTHOR: Bc. LUKÁŠ CZERNER. SUPERVISOR: Ing. TOMÁŠ KAŠPÁREK. BRNO 2010.

Abstract: This thesis describes both the preparation for porting and the porting itself of the RedirFS Linux kernel module to the FreeBSD operating system. It describes the basic differences between the Linux and FreeBSD kernels, as well as the differences in the implementation of the Virtual Filesystem (VFS) layer, the part of the kernel that is crucial for RedirFS. It then examines the possibilities and different approaches to implementing the functionality of the Linux RedirFS on FreeBSD. These possibilities are evaluated and an ideal porting approach is proposed. The next chapters introduce the required functionality of the new module together with its proposed architecture. Finally, the design and implementation of the new module are described in detail, so that the reader can clearly understand how the module works and how it implements the required functionality.

FOSDEM 2017 Schedule

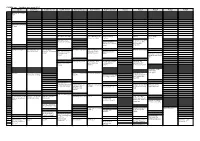

FOSDEM 2017 - Saturday 2017-02-04 (1/9)

Rooms: Janson, K.1.105 (La Fontaine), H.2215 (Ferrer), H.1301 (Cornil), H.1302 (Depage), H.1308 (Rolin), H.1309 (Van Rijn), H.2111, H.2213, H.2214, H.3227, H.3228, …

Sessions between 09:30 and 12:30, across all rooms: Welcome to FOSDEM 2017; Kubernetes on the road to GIFEE; Welcome to the Legal; Python Winding Itself Around Datacubes; MySQL & Friends Devroom; Opening; Intro to Graph … databases; Free/open source software and drones; Portability of containers across diverse HPC resources with Singularity; Optimizing MySQL without SQL or touching my.cnf; Welcome!; Software Heritage; The Veripeditus AR Game Framework; Let's talk about hardware: The POWER of open.; The State of OpenJDK; MSS - Software for planning research aircraft missions; The birth of HPC Cuba; Make your Corporate CLA easy to use, please!; Applying profilers to MySQL; Using graph databases in popular open source CMSs; Jockeying the Jigsaw; The power of duck typing and linear algebra; Instrumenting plugins for Performance Schema; Optimized and reproducible HPC Software deployment; Mixed License FOSS Projects; Incremental Graph Queries with openCypher; CloudABI; LoRaWAN for exploring the Internet of Things; Open J9 - The Next Free Java VM; It's time for datetime; Reproducible HPC Software Installation on Cray Systems with EasyBuild; sysbench 1.0: teaching an old dog new tricks; Making License Compliance Easy: Step by Open Source Step.; Diagnosing Issues in Java Apps using Thermostat and Byteman.; Webpush notifications for Kinto; Putting Your Jobs Under the Microscope using OGRT; Twitter Streaming Graph with Gephi; Introducing gh-ost.

Introduction to the World of FreeBSD

Introduction to the World of FreeBSD. Advanced course. Netstudent http://netstudent.polito.it E. Richiardone [email protected] May 2009 CC-by http://creativecommons.org/licenses/by/2.5/it/

The FreeBSD project - 1 · It is an open software project, partly funded · Its purpose is to maintain and develop the FreeBSD operating system · It first appeared on CD-ROM as FreeBSD 1.0 in 1993 · It derives from a patchkit for 386BSD and inherits code from the Berkeley version of UNIX (1977) · Legal problems slowed it down; release 2.0 followed in 1995 with royalty-free code · Since release 5.0 (2003) it has had the structure it has today · Available for x86 32-bit and 64-bit, ia64, MIPS, ppc, sparc... · The mascot (Beastie) was born in 1984

The FreeBSD project - 2 · Heir to 4.4BSD (it is the same people...) · Stable system; uniform development; very clear, tidy, and well-commented code · Well-maintained official documentation · Very permissive license, which often attracts companies for commercial projects: · occasionally outside parties contribute from-scratch implementations (e.g. Intel, GEOM, Atheros, NDISwrapper, ZFS) · sometimes they do not (e.g. Windows NT) · Simplification of many traditional UNIX features

What it is. The FreeBSD project includes: · A base system · Bootloader, kernel, modules, base libraries, base commands and utilities, traditional services · Complete sources in /usr/src (~500MB) · It is already fairly complete (e.g. ipfw, ppp, bind, ...) · A management system for additional software · Ports and packages · Documentation, support channels, development tools · e.g. Handbook,

File System Design Approaches

File System Design Approaches. Dr. Brijender Kahanwal, Department of Computer Science & Engineering, Galaxy Global Group of Institutions, Dinarpur, Ambala, Haryana, INDIA. [email protected]

Abstract—In this article, the file system development design approaches are discussed. The selection of the file system design approach is done according to the needs of the developers what are the needed requirements and specifications for the new design. It allowed us to identify where our proposal fitted in with relation to current and past file system development. Our experience with file system development is limited so the research served to identify the different techniques that can be used. The variety of file systems encountered show what an active area of research file system development is. The file systems may be from one of the two fundamental categories. In one category, the file system is developed in user space and runs as a user process. Another file system may be developed in the kernel space and runs as a privileged process.

The experience with file system development is limited so the research served to identify the different techniques that can be used. The variety of file systems encountered show what an active area of research file system development is. The file systems researched fell into one of the following four categories:

1. The file system is developed in user space and runs as a user process.
2. The file system is developed in the user space using FUSE (File system in USEr space) kernel module and runs as a user process.
3. The file system is developed in the kernel and runs as a

TrueNAS® 11.3-U5 User Guide

TrueNAS® 11.3-U5 User Guide

Note: Starting with version 12.0, FreeNAS and TrueNAS are unifying (https://www.ixsystems.com/blog/freenas-truenas-unification/) into “TrueNAS”. Documentation for TrueNAS 12.0 and later releases has been unified and moved to the TrueNAS Documentation Hub (https://www.truenas.com/docs/).

Warning: To avoid the potential for data loss, iXsystems must be contacted before replacing a controller or upgrading to High Availability.

Copyright iXsystems 2011-2020. TrueNAS® and the TrueNAS® logo are registered trademarks of iXsystems.

CONTENTS
Welcome
Typographic Conventions
1 Introduction
1.1 Contacting iXsystems
1.2 Path and Name Lengths
1.3 Using the Web Interface
1.3.1 Tables and Columns
1.3.2 Advanced Scheduler
1.3.3 Schedule Calendar
1.3.4 Changing TrueNAS® Settings
1.3.5 Web Interface Troubleshooting
1.3.6 Help Text
1.3.7 Humanized Fields
1.3.8 File Browser
2 Initial Setup
2.1 Hardware

ZFS 2018 and Onward

ZFS: 2018 and Onward. BY ALLAN JUDE

2018 is going to be a big year for not only FreeBSD, but for OpenZFS as well. OpenZFS is the collaboration of developers from IllumOS, FreeBSD, Linux, Mac OS X, many industry vendors, and a number of other projects to maintain and advance the open-source version of Sun’s ZFS filesystem.

Improved Pool Import • 2018Q1

A new patch set changes the way pools are imported, such that they depend less on configuration data from the system. Instead, the possibly outdated configuration from the system is used to find the pool, and partially open it read-only. Then the correct on-disk configuration is loaded. This reduces the number of

RAID-Z Expansion • 2018Q4

The ability to add an additional disk to an existing RAID-Z VDEV to grow its size without changing its redundancy level. For example, a RAID-Z2 consisting of 6 disks could be expanded to contain 7 disks, increasing the available space. This is accomplished by reflowing the data across the disks, so as to not change an individual block’s offset from the start of the VDEV. The new disk will appear as a new column on the right, and the data will be relocated to maintain the offset within the VDEV. Similar to reflowing a paragraph by making it wider, without changing the order of the words. In the end this means all of the new space shows up at the end of the disk, without any fragmentation.

TrueNAS Recommendations for Veeam® Backup & Replication™

TRUENAS RECOMMENDATIONS FOR VEEAM® BACKUP & REPLICATION™ [email protected]

CONTENTS
1. About this document
2. What is needed?
3. Certified hardware
4. Sizing considerations
5. Advantages for using TrueNAS with Veeam
6. Set up TrueNAS as a Veeam repository
7. Performance tuning for Veeam Backup & Replication
8. Additional references
Appendix A: Setup an iSCSI share in TrueNAS and mount in Windows
Appendix B: Setup SMB (CIFS) share for your Veeam Repository

1 ABOUT THIS DOCUMENT

TrueNAS Unified Storage appliances are certified Veeam Ready and can be used to handle demanding backup requirements for file and VM backup. These certification tests measure the speed and effectiveness of the data storage repository using a testing methodology defined by Veeam for Full Backups, Full Restores, Synthetic Full Backups, and Instant VM Recovery from within the Veeam Backup & Replication environment. With the ability to seamlessly scale to petabytes of raw capacity, high-performance networking and cache, and all-flash options, TrueNAS appliances are the ideal choice for Veeam Backup & Replication repositories large and small. This document will cover some of the best practices when deploying TrueNAS with Veeam, specific considerations users must be aware of, and some tips to help with performance. The focus will be on capabilities native to TrueNAS, and users are encouraged to also review relevant Veeam documentation, such as their help center and best practices for more information about using

DMFS - A Data Migration File System for NetBSD

DMFS - A Data Migration File System for NetBSD. William Studenmund, Veridian MRJ Technology Solutions, NASA Ames Research Center

Abstract

I have recently developed DMFS, a Data Migration File System, for NetBSD[1]. This file system provides kernel support for the data migration system being developed by my research group at NASA/Ames. The file system utilizes an underlying file store to provide the file backing, and coordinates user and system access to the files. It stores its internal metadata in a flat file, which resides on a separate file system. This paper will first describe our data migration system to provide a context for DMFS, then it will describe DMFS. It also will describe the changes to NetBSD needed to make DMFS work. Then it will give an overview of the file archival and restoration procedures, and describe how some typi-

It was designed to support the mass storage systems deployed here at NAS under the NAStore 2 system. That system supported a total of twenty StorageTek NearLine tape silos at two locations, each with up to four tape drives each. Each silo contained upwards of 5000 tapes, and had robotic pass-throughs to adjoining silos.

The volman system is designed using a client-server model, and consists of three main components: the volman master, possibly multiple volman servers, and volman clients. The volman servers connect to each tape silo, mount and unmount tapes at the direction of the volman master, and provide tape services to clients. The volman master maintains a database of known tapes and locations, and directs the tape servers to move and mount tapes to service client requests.

Filesystems HOWTO

Filesystems HOWTO. Martin Hinner <[email protected]>, http://martin.hinner.info

Table of Contents
1. Introduction
2. Volumes
3. DOS FAT 12/16/32, VFAT
4. High Performance FileSystem (HPFS)
5. New Technology FileSystem (NTFS)
6. Extended filesystems (Ext, Ext2, Ext3)
7. Macintosh Hierarchical Filesystem - HFS
8. ISO 9660 - CD-ROM filesystem
9. Other filesystems