Translators & Virtual Machines



Recommended publications

-

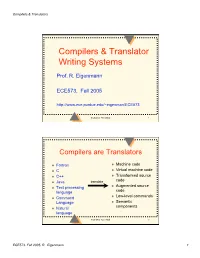

Compilers & Translator Writing Systems

Compilers & Translator Writing Systems. Prof. R. Eigenmann, ECE573, Fall 2005, http://www.ece.purdue.edu/~eigenman/ECE573

Compilers are Translators: source languages (Fortran, C, C++, Java, text processing languages, command languages, natural language) are translated into machine code, virtual machine code, transformed source code, augmented source code, low-level commands, or semantic components.

Compilers are Increasingly Important: Specification languages, high-level languages, and assembly languages form increasingly high-level user interfaces for specifying a computer problem/solution. At the other end are increasingly complex machines: non-pipelined processors, pipelined processors, speculative processors, the worldwide “Grid”. The compiler is the translator between these two diverging ends.

Assembly code and Assemblers: Compiler → assembly code → Assembler → machine code. Assemblers are often used at the compiler back-end. Assemblers are low-level translators. They are machine-specific, and perform mostly 1:1 translation between mnemonics and machine code, except:
– symbolic names for storage locations
• program locations (branch, subroutine calls)
• variable names
– macros

Interpreters: “Execute” the source language directly. Interpreters directly produce the result of a computation, whereas compilers produce executable code that can produce this result. Each language construct executes by invoking a subroutine of the interpreter, rather than a machine instruction. Examples of interpreters?

Properties of Interpreters: “execution” is immediate; elaborate error checking is possible; bookkeeping is possible, e.g., for garbage collection; can change program on-the-fly, e.g., switch libraries, dynamic change of data types; machine independence. -
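The contrast drawn in the slides (an interpreter produces the result directly, a compiler produces code that produces the result) can be sketched in a few lines of Python. This is only an illustration: the nested-tuple mini-language and the names `SUBROUTINES`, `interpret`, and `compile_expr` are hypothetical and do not come from the course material, and Python source text stands in for "executable code".

```python
import operator

# Hypothetical mini-language: nested tuples such as ("add", 2, ("mul", 3, 4)).
# Each construct is handled by one "subroutine of the interpreter".
SUBROUTINES = {"add": operator.add, "mul": operator.mul}
SYMBOLS = {"add": "+", "mul": "*"}


def interpret(expr):
    """Interpreter: walk the program and produce the result directly."""
    if isinstance(expr, int):
        return expr
    op, left, right = expr
    return SUBROUTINES[op](interpret(left), interpret(right))


def compile_expr(expr):
    """Compiler: produce code (here, Python source text) to be run later."""
    if isinstance(expr, int):
        return repr(expr)
    op, left, right = expr
    return f"({compile_expr(left)} {SYMBOLS[op]} {compile_expr(right)})"


program = ("add", 2, ("mul", 3, 4))

result = interpret(program)        # computed immediately
code = compile_expr(program)       # translated text, not yet a result
compiled_result = eval(code)       # running the translated code gives the result

print(result, code, compiled_result)
```

Here `eval` merely plays the role of the machine that later runs the compiler's output; a real compiler would emit assembly or machine code instead.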

Source-To-Source Translation and Software Engineering

Journal of Software Engineering and Applications, 2013, 6, 30-40. http://dx.doi.org/10.4236/jsea.2013.64A005 Published Online April 2013 (http://www.scirp.org/journal/jsea). Source-to-Source Translation and Software Engineering. David A. Plaisted, Department of Computer Science, University of North Carolina at Chapel Hill, Chapel Hill, USA. Received February 5th, 2013; revised March 7th, 2013; accepted March 15th, 2013. Copyright © 2013 David A. Plaisted. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

ABSTRACT Source-to-source translation of programs from one high level language to another has been shown to be an effective aid to programming in many cases. By the use of this approach, it is sometimes possible to produce software more cheaply and reliably. However, the full potential of this technique has not yet been realized. It is proposed to make source-to-source translation more effective by the use of abstract languages, which are imperative languages with a simple syntax and semantics that facilitate their translation into many different languages. By the use of such abstract languages and by translating only often-used fragments of programs rather than whole programs, the need to avoid writing the same program or algorithm over and over again in different languages can be reduced. It is further proposed that programmers be encouraged to write often-used algorithms and program fragments in such abstract languages. Libraries of such abstract programs and program fragments can then be constructed, and programmers can be encouraged to make use of such libraries by translating their abstract programs into application languages and adding code to join things together when coding in various application languages. -

A Case for High Performance Computing with Virtual Machines

A Case for High Performance Computing with Virtual Machines. Wei Huang†, Jiuxing Liu‡, Bulent Abali‡, Dhabaleswar K. Panda†. †Computer Science and Engineering, The Ohio State University, Columbus, OH 43210. ‡IBM T. J. Watson Research Center, 19 Skyline Drive, Hawthorne, NY 10532.

ABSTRACT Virtual machine (VM) technologies are experiencing a resurgence in both industry and research communities. VMs offer many desirable features such as security, ease of management, OS customization, performance isolation, checkpointing, and migration, which can be very beneficial to the performance and the manageability of high performance computing (HPC) applications. However, very few HPC applications are currently running in a virtualized environment due to the performance overhead of virtualization. Further, using VMs for HPC also introduces additional challenges such as management and distribution of OS images. In this paper we present a case for HPC with virtual ma-

[...] in the 1960s [9], but are experiencing a resurgence in both industry and research communities. A VM environment provides virtualized hardware interfaces to VMs through a Virtual Machine Monitor (VMM) (also called hypervisor). VM technologies allow running different guest VMs in a physical box, with each guest VM possibly running a different guest operating system. They can also provide secure and portable environments to meet the demanding requirements of computing resources in modern computing systems. Recently, network interconnects such as InfiniBand [16], Myrinet [24] and Quadrics [31] are emerging, which provide very low latency (less than 5 µs) and very high bandwidth (multiple Gbps). -

A Light-Weight Virtual Machine Monitor for Blue Gene/P

A Light-Weight Virtual Machine Monitor for Blue Gene/P. Jan Stoess (Karlsruhe Institute of Technology; HStreaming LLC), Udo Steinberg (Technische Universität Dresden), Volkmar Uhlig (HStreaming LLC), Jonathan Appavoo (Boston University), Amos Waterland (Harvard School of Engineering and Applied Sciences), Jens Kehne (Karlsruhe Institute of Technology).

ABSTRACT In this paper, we present a light-weight, micro-kernel-based virtual machine monitor (VMM) for the Blue Gene/P Supercomputer. Our VMM comprises a small µ-kernel with virtualization capabilities and, atop, a user-level VMM component that manages virtual BG/P cores, memory, and interconnects; we also support running native applications directly atop the µ-kernel. Our design goal is to enable compatibility to standard OSes such as Linux on BG/P via virtualization, but to also keep the amount of kernel functionality small enough to facilitate shortening the path to applications and lowering OS noise. Our prototype implementation successfully virtualizes a

[...] debugging tools. CNK also supports I/O only via function-shipping to I/O nodes. CNK's lightweight kernel model is a good choice for the current set of BG/P HPC applications, providing low operating system (OS) noise and focusing on performance, scalability, and extensibility. However, today's HPC application space is beginning to scale out towards Exascale systems of truly global dimensions, spanning companies, institutions, and even countries. The restricted support for standardized application interfaces of light-weight kernels in general and CNK in particular renders porting the sprawling diversity of scalable applications to supercomputers more and more a bottleneck in the development path of HPC applications. -

Opportunities for Leveraging OS Virtualization in High-End Supercomputing

Opportunities for Leveraging OS Virtualization in High-End Supercomputing. Kevin T. Pedretti, Sandia National Laboratories∗, Albuquerque, NM. Patrick G. Bridges, University of New Mexico, Albuquerque, NM.

ABSTRACT This paper examines potential motivations for incorporating virtualization support in the system software stacks of high-end capability supercomputers. We advocate that this will increase the flexibility of these platforms significantly and enable new capabilities that are not possible with current fixed software stacks. Our results indicate that compute, virtual memory, and I/O virtualization overheads are low and can be further mitigated by utilizing well-known techniques such as large paging and VMM bypass. Furthermore, since the addition of virtualization support does not affect the performance of applications using the traditional native environment, there is essentially no disadvantage to its addition.

[...]formance, making modest virtualization performance overheads viable. In these cases, the increased flexibility that virtualization provides can be used to support a wider range of applications, to enable exascale co-design research and development, and provide new capabilities that are not possible with the fixed software stacks that high-end capability supercomputers use today.

The remainder of this paper is organized as follows. Section 2 discusses previous work dealing with the use of virtualization in HPC. Section 3 discusses several potential areas where platform virtualization could be useful in high-end supercomputing. Section 4 presents single node native vs. virtual performance results on a modern Intel platform that show that compute, virtual memory, and I/O virtualization -

Tdb: a Source-Level Debugger for Dynamically Translated Programs

Tdb: A Source-level Debugger for Dynamically Translated Programs. Naveen Kumar†, Bruce R. Childers†, and Mary Lou Soffa‡. †Department of Computer Science, University of Pittsburgh, Pittsburgh, Pennsylvania 15260, {naveen, childers}@cs.pitt.edu. ‡Department of Computer Science, University of Virginia, Charlottesville, Virginia 22904.

Abstract Debugging techniques have evolved over the years in response to changes in programming languages, implementation techniques, and user needs. A new type of implementation vehicle for software has emerged that, once again, requires new debugging techniques. Software dynamic translation (SDT) has received much attention due to compelling applications of the technology, including software security checking, binary translation, and dynamic optimization. Using SDT, program code changes dynamically, and thus, debugging techniques developed for statically generated code cannot be used to debug these applications. In this paper, we describe a new debug architecture for applications executing with SDT sys-

[...] single stepping through program execution, watching for particular conditions and requests to add and remove breakpoints. In order to respond, the debugger has to map the values and statements that the user expects using the source program viewpoint, to the actual values and locations of the statements as found in the executable program.

As programming languages have evolved, new debugging techniques have been developed. For example, checkpointing and time stamping techniques have been developed for languages with concurrent constructs [6,19,30]. The pervasive use of code optimizations to improve performance has necessitated techniques that can respond to queries even though the optimization may have changed the number of statement instances and the order of execution [15,25,29]. -

Chapter 1 Introduction to Computers, Programs, and Java

Chapter 1 Introduction to Computers, Programs, and Java. CMPS161 Class Notes (Chap 01), Kuo-pao Yang.

1.1 Introduction
• The central theme of this book is to learn how to solve problems by writing a program.
• This book teaches you how to create programs by using the Java programming language.
• Java is the Internet programming language.
• Why Java? The answer is that Java enables users to deploy applications on the Internet for servers, desktop computers, and small hand-held devices.

1.2 What is a Computer?
• A computer is an electronic device that stores and processes data.
• A computer includes both hardware and software.
o Hardware is the physical aspect of the computer that can be seen.
o Software is the invisible instructions that control the hardware and make it work.
• Computer programming consists of writing instructions for computers to perform.
• A computer consists of the following hardware components:
o CPU (Central Processing Unit)
o Memory (Main memory)
o Storage Devices (hard disk, floppy disk, CDs)
o Input/Output devices (monitor, printer, keyboard, mouse)
o Communication devices (Modem, NIC (Network Interface Card)).

FIGURE 1.1 A computer consists of a CPU, memory, hard disk, floppy disk, monitor, printer, and communication devices. [Diagram: a bus connects the CPU, memory, storage devices (e.g., disk, CD, and tape), communication devices (e.g., modem and NIC), input devices (e.g., keyboard, mouse), and output devices (e.g., monitor, printer).]

1.2.1 Central Processing Unit (CPU)
• The central processing unit (CPU) is the brain of a computer.
• It retrieves instructions from memory and executes them. -

A Virtual Machine Environment for Real Time Systems Laboratories

AC 2007-904: A VIRTUAL MACHINE ENVIRONMENT FOR REAL-TIME SYSTEMS LABORATORIES. © American Society for Engineering Education, 2007.

Mukul Shirvaikar, University of Texas-Tyler. MUKUL SHIRVAIKAR received the Ph.D. degree in Electrical and Computer Engineering from the University of Tennessee in 1993. He is currently an Associate Professor of Electrical Engineering at the University of Texas at Tyler. He has also held positions at Texas Instruments and the University of West Florida. His research interests include real-time imaging, embedded systems, pattern recognition, and dual-core processor architectures. At the University of Texas he has started a new real-time systems lab using dual-core processor technology. He is also the principal investigator for the “Back-To-Basics” project aimed at engineering student retention.

Nikhil Satyala, University of Texas-Tyler. NIKHIL SATYALA received the Bachelor's degree in Electronics and Communication Engineering from the Jawaharlal Nehru Technological University (JNTU), India in 2004. He is currently pursuing his Master's degree at the University of Texas at Tyler, while working as a research assistant. His research interests include embedded systems, dual-core processor architectures and microprocessors.

A Virtual Machine Environment for Real Time Systems Laboratories. Abstract: The goal of this project was to build a superior environment for a real time system laboratory that would allow users to run Windows and Linux embedded application development tools concurrently on a single computer. These requirements were dictated by real-time system applications which are increasingly being implemented on asymmetric dual-core processors running different operating systems.
A real time systems laboratory curriculum based on dual-core architectures has been presented in this forum in the past.² It was designed for a senior elective course in real time systems at the University of Texas at Tyler that combines lectures along with an integrated lab. -

Virtual Machine Part II: Program Control

Virtual Machine Part II: Program Control. Building a Modern Computer From First Principles, www.nand2tetris.org. Elements of Computing Systems, Nisan & Schocken, MIT Press, www.nand2tetris.org, Chapter 8: Virtual Machine, Part II.

Where we are at: [Figure: the software and hardware hierarchies, each level exposing an abstract interface. From the top: human thought and abstract design (Chapters 9, 12); high-level language and operating system, via the compiler (Chapters 10 - 11); virtual machine, via the VM translator (Chapters 7 - 8); assembly language, via the assembler (Chapter 6); machine language, via computer architecture (Chapters 4 - 5); hardware platform, via gate logic (Chapters 1 - 3); chips and logic gates, via electrical engineering; physics.]

The big picture: [Figure: several high-level languages (some language, some other language, the Jack language), each with its own compiler (Chapters 9-13), all target the VM language. VM implementations (Projects 7-8, Chapters 7-8) exist over CISC platforms, over RISC platforms, and over the Hack platform, plus a VM emulator written in a high-level language; a Java-based emulator is included in the course software suite. Below these are the CISC, RISC, and Hack machine languages (Chapters 1-6), running on CISC machines, RISC machines, other digital platforms each equipped with its VM implementation, any computer, and the Hack computer.] -

GT-Virtual-Machines

College of Design Virtual Machine Setup for CP 6581, Fall 2020
1. You will have access to multiple College of Design virtual machines (VMs). CP 6581 will require VMs with ArcGIS installed, and for class sessions we will start the semester using the K2GPU VM. You should open each VM available to you and check to see if ArcGIS is installed.
2. Here are a few general notes on VMs.
a. Because you will be a new user when you first start a VM, you may be prompted to set up Microsoft Explorer, Microsoft Edge, Adobe Creative Suite, or other software. You can close these windows and set up specific software later, if you wish.
b. Different VMs allow different degrees of customization. To customize, right-click on an empty Desktop area and choose “Personalize”. Change your background to a distinctive solid color so you can easily distinguish your virtual desktop from your local computer’s desktop. Some VMs will remember your desktop color; other VMs must be re-set at each login; still others won’t allow you to change the background color but may allow you to change a highlight color from the “Colors” item on the left menu just below “Background”.
c. Your desktop will look exactly the same as a physical computer’s desktop except for the small black rectangle at the middle of the very top of the VM screen. Click on the rectangle and a pop-down horizontal menu of VM options will appear. Here are two useful options.
i. Preferences then File Access will allow you to grant VM access to your local computer’s drives and files. -

A Brief History of Just-In-Time Compilation

A Brief History of Just-In-Time. JOHN AYCOCK, University of Calgary.

Software systems have been using “just-in-time” compilation (JIT) techniques since the 1960s. Broadly, JIT compilation includes any translation performed dynamically, after a program has started execution. We examine the motivation behind JIT compilation and constraints imposed on JIT compilation systems, and present a classification scheme for such systems. This classification emerges as we survey forty years of JIT work, from 1960–2000.

Categories and Subject Descriptors: D.3.4 [Programming Languages]: Processors; K.2 [History of Computing]: Software
General Terms: Languages, Performance
Additional Key Words and Phrases: Just-in-time compilation, dynamic compilation

1. INTRODUCTION
Those who cannot remember the past are condemned to repeat it. George Santayana, 1863–1952 [Bartlett 1992]

This oft-quoted line is all too applicable in computer science. Ideas are generated, explored, set aside, only to be reinvented years later. Such is the case with what is now called “just-in-time” (JIT) or dynamic compilation, which refers to translation that occurs after a program begins execution.

Strictly speaking, JIT compilation systems (“JIT systems” for short) are completely unnecessary.

[...] into a form that is executable on a target platform.

What is translated? The scope and nature of programming languages that require translation into executable form covers a wide spectrum. Traditional programming languages like Ada, C, and Java are included, as well as little languages [Bentley 1988] such as regular expressions.

Traditionally, there are two approaches to translation: compilation and interpretation. Compilation translates one language into another (C to assembly language, for example) with the implication that the translated form will be more amenable -

Language Translators

Student Notes, Theory. K Aquilina.

LANGUAGE TRANSLATORS

A. HIGH AND LOW LEVEL LANGUAGES

Programming languages:
Low-Level Languages. Example: Assembly Language
High-Level Languages. Example: Pascal, Basic, Java

Characteristics of LOW Level Languages:
They are machine oriented: an assembly language program written for one machine will not work on any other type of machine unless they happen to use the same processor chip.
Each assembly language statement generally translates into one machine code instruction, therefore the program becomes long and time-consuming to create.
Example:
10100101 01110001    LDA &71
01101001 00000001    ADD #&01
10000101 01110001    STA &71

Characteristics of HIGH Level Languages:
They are not machine oriented: in theory they are portable, meaning that a program written for one machine will run on any other machine for which the appropriate compiler or interpreter is available.
They are problem oriented: most high level languages have structures and facilities appropriate to a particular use or type of problem. For example, FORTRAN was developed for use in solving mathematical problems. Some languages, such as PASCAL, were developed as general-purpose languages.
Statements in high-level languages usually resemble English sentences or mathematical expressions and these languages tend to be easier to learn and understand than assembly language.
Each statement in a high level language will be translated into several machine code instructions.
Example:
number := number + 1;
10100101 01110001
01101001 00000001
10000101 01110001

B. GENERATIONS OF PROGRAMMING LANGUAGES
4th generation: 4GLs
3rd generation: High Level Languages
2nd generation: Low-level Languages
1st generation: Machine Code

1. MACHINE LANGUAGE – 1ST GENERATION
In the early days of computer programming all programs had to be written in machine code.
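The example in these notes, where the single high-level statement number := number + 1 corresponds to the three instructions LDA &71, ADD #&01, STA &71, can be traced with a toy model. The sketch below is an assumption-laden illustration, not the processor the notes have in mind: it assumes a single accumulator, an 8-bit wrap-around on addition, that & marks a hexadecimal value (the operand byte 01110001 is hexadecimal 71), and that # marks an immediate operand. The names `run` and `PROGRAM` are hypothetical.

```python
def run(program, memory):
    """Execute (mnemonic, mode, operand) triples on a one-accumulator toy machine."""
    acc = 0
    for mnemonic, mode, operand in program:
        if mnemonic == "LDA":        # load accumulator from a memory address
            acc = memory[operand]
        elif mnemonic == "ADD":      # add an immediate value or a memory cell
            value = operand if mode == "immediate" else memory[operand]
            acc = (acc + value) & 0xFF   # assumed 8-bit wrap-around
        elif mnemonic == "STA":      # store accumulator to a memory address
            memory[operand] = acc
        else:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
    return memory


# number := number + 1, with `number` assumed to live at address &71
PROGRAM = [
    ("LDA", "address", 0x71),
    ("ADD", "immediate", 0x01),
    ("STA", "address", 0x71),
]

memory = {0x71: 41}
run(PROGRAM, memory)
print(memory[0x71])
```

One high-level statement maps to three low-level steps here, which is the one-to-several relationship the notes describe for high-level languages.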