Fifty Genome Sequences Reveal Breast Cancer's Complexity

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

June 19 City Council Hearing Submissions

Testimony of Lindsay Greene, Senior Advisor to the Deputy Mayor for Housing & Economic Development, before the New York City Council Committee on Consumer Affairs Hearing on Intro. No. 1648 Establishing a Nightlife Task Force and an Office of Nightlife June 19, 2017 Introduction Good morning, [Speaker], Chairman Espinal, and members of the Committee on Consumer Affairs. I am Lindsay Greene, Senior Advisor to the Deputy Mayor for Housing & Economic Development. I work closely with several agencies that are involved with economic development, public space and business opportunity, including the Department of Consumer Affairs ("DCA"), the Department of Small Business Services ("SBS") and the New York City Economic Development Corporation ("EDC") among others. I am joined today by several colleagues from various city agencies that touch the nightlife and entertainment industries, including Shira Gans (Senior Director of Policy + Programs at the Mayor's Office of Media & Entertainment), Tamala Boyd (General Counsel at the Department of Consumer Affairs), and Kristin Sakoda (Deputy Commissioner and General Counsel at the Department of Cultural Affairs). Shira and I will be giving testimony on the Nightlife bill, and Tamala and Kristin are joining us for Q&A. We are pleased to be representing Mayor Bill de Blasio's administration here today. First, Chairman Espinal, I want to thank you again for the work you have already been doing with us on nightlife issues broadly. Second, let me echo Shira's statements that this Administration feels strongly that nightlife is essential to the New York City economy and culture and we want to help the industry flourish and ensure all New Yorkers are safe and secure while they are enjoying the diversity of the City's entertainment and nightlife offerings. -

Download Transcript

The following oral history memoir is the result of 1 videorecorded session of an interview with Nat Roe by Cynthia Tobar on August 21, 2015 in New York City. This interview is part of "Cities for People, Not for Profit": Gentrification and Housing Activism in Bushwick. Nat Roe has reviewed the transcript and has made minor corrections and emendations. The reader is asked to bear in mind that she or he is reading a verbatim transcript of the spoken word, rather than written prose. Nat Roe [Start of recorded material at 00:00:00] Cynthia: Good afternoon. It is Friday, August 21, 2015, and this is an interview for the Bushwick Fair Housing Collective for history. Would you please state your name and affiliation? Nat: My name is Nat Roe. I am a resident here at Silent Barn. We're in my apartment. I am also a co-founder of this space and I'm the executive director of Flux Factory as well. Cynthia: And Silent Barn is located here on Bushwick Avenue in Bushwick, Brooklyn. Thank you for taking the time to be a part of the project of the interviews. I just want to start with a little general question just like an icebreaker. Maybe you can even tell me a little bit about your early childhood, your early memories, how you got started along your path, and what brought you eventually to Bushwick? You can start anywhere you like. Nat: Okay. Well, I was born and raised in New Jersey, Montclair, New Jersey, so I did come into the city pretty frequently and somehow figured that after college I would end up living here. -

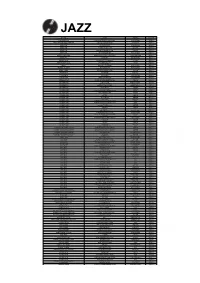

Order Form Full

JAZZ ARTIST TITLE LABEL RETAIL ADDERLEY, CANNONBALL SOMETHIN' ELSE BLUE NOTE RM112.00 ARMSTRONG, LOUIS LOUIS ARMSTRONG PLAYS W.C. HANDY PURE PLEASURE RM188.00 ARMSTRONG, LOUIS & DUKE ELLINGTON THE GREAT REUNION (180 GR) PARLOPHONE RM124.00 AYLER, ALBERT LIVE IN FRANCE JULY 25, 1970 B13 RM136.00 BAKER, CHET DAYBREAK (180 GR) STEEPLECHASE RM139.00 BAKER, CHET IT COULD HAPPEN TO YOU RIVERSIDE RM119.00 BAKER, CHET SINGS & STRINGS VINYL PASSION RM146.00 BAKER, CHET THE LYRICAL TRUMPET OF CHET JAZZ WAX RM134.00 BAKER, CHET WITH STRINGS (180 GR) MUSIC ON VINYL RM155.00 BERRY, OVERTON T.O.B.E. + LIVE AT THE DOUBLET LIGHT 1/T ATTIC RM124.00 BIG BAD VOODOO DADDY BIG BAD VOODOO DADDY (PURPLE VINYL) LONESTAR RECORDS RM115.00 BLAKEY, ART 3 BLIND MICE UNITED ARTISTS RM95.00 BROETZMANN, PETER FULL BLAST JAZZWERKSTATT RM95.00 BRUBECK, DAVE THE ESSENTIAL DAVE BRUBECK COLUMBIA RM146.00 BRUBECK, DAVE - OCTET DAVE BRUBECK OCTET FANTASY RM119.00 BRUBECK, DAVE - QUARTET BRUBECK TIME DOXY RM125.00 BRUUT! MAD PACK (180 GR WHITE) MUSIC ON VINYL RM149.00 BUCKSHOT LEFONQUE MUSIC EVOLUTION MUSIC ON VINYL RM147.00 BURRELL, KENNY MIDNIGHT BLUE (MONO) (200 GR) CLASSIC RECORDS RM147.00 BURRELL, KENNY WEAVER OF DREAMS (180 GR) WAX TIME RM138.00 BYRD, DONALD BLACK BYRD BLUE NOTE RM112.00 CHERRY, DON MU (FIRST PART) (180 GR) BYG ACTUEL RM95.00 CLAYTON, BUCK HOW HI THE FI PURE PLEASURE RM188.00 COLE, NAT KING PENTHOUSE SERENADE PURE PLEASURE RM157.00 COLEMAN, ORNETTE AT THE TOWN HALL, DECEMBER 1962 WAX LOVE RM107.00 COLTRANE, ALICE JOURNEY IN SATCHIDANANDA (180 GR) IMPULSE -

Twitter Data

Twitter Search Rule: #CreateNYC User Details Date Screen Name Full Name Tweet Text Tweet ID App Followers Follows Retweets Favorites Verified User Since Location Bio Profile Image Google Maps 2/14/2017 13:52:47 @NYCulture NYC Cultural Affairs We're discussing cultural heritage & neighborhood preservation at the next #CreateNYC Office Hours. Join us on Fri https://t.co/2jKgL37R8E 831576940779884547 Hootsuite 7205 546 2 2 No 3/2/2012 New York City We're the New York City Department of Cultural Affairs, the largest municipal funder of culture in the US. We believe that arts and culture are for everyone. 2/14/2017 14:09:26 @afinelyne AFineLyne RT @NYCulture: We're discussing cultural heritage & neighborhood preservation at the next #CreateNYC Office Hours. Join us on Fri https://t… 831581131736829952 Twitter for iPad 2059 1855 2 0 No 5/22/2009 Harlem, NYC Artist/Freelance Writer - Untapped Cities. You can follow my paintbrush at https://t.co/ahhx4yavys 2/15/2017 12:53:07 @NPCenter NPC RT @NYCulture: We're discussing cultural heritage & neighborhood preservation at the next #CreateNYC Office Hours. Join us on Fri https://t… 831924312315744256 Twitter for iPhone 532 309 2 0 No 3/20/2011 232 East 11th Street A project of @LandmarkFund, NPC is dedicated to facilitating & encouraging citizen participation in the improvement & protection of NYC's diverse neighborhoods 2/15/2017 14:42:15 @Hester_Street Hester Street Collab NYC DIY Spaces are having conversations with @NYCulture as part of the Cultural Plan for New York City #CreateNYC -

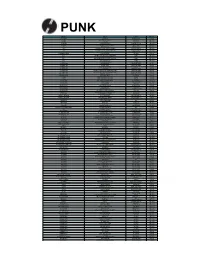

Order Form Full

PUNK ARTIST TITLE LABEL RETAIL 100 DEMONS 100 DEMONS DEATHWISH INC RM90.00 4-SKINS A FISTFUL OF 4-SKINS RADIATION RM125.00 4-SKINS LOW LIFE RADIATION RM114.00 400 BLOWS SICKNESS & HEALTH ORIGINAL RECORD RM117.00 45 GRAVE SLEEP IN SAFETY (GREEN VINYL) REAL GONE RM142.00 999 DEATH IN SOHO PH RECORDS RM125.00 999 THE BIGGEST PRIZE IN SPORT (200 GR) DRASTIC PLASTIC RM121.00 999 THE BIGGEST PRIZE IN SPORT (GREEN) DRASTIC PLASTIC RM121.00 999 YOU US IT! COMBAT ROCK RM120.00 A WILHELM SCREAM PARTYCRASHER NO IDEA RM96.00 A.F.I. ANSWER THAT AND STAY FASHIONABLE NITRO RM119.00 A.F.I. BLACK SAILS IN THE SUNSET NITRO RM119.00 A.F.I. SHUT YOUR MOUTH AND OPEN YOUR EYES NITRO RM119.00 A.F.I. VERY PROUD OF YA NITRO RM119.00 ABEST ASYLUM (WHITE VINYL) THIS CHARMING MAN RM98.00 ACCUSED, THE ARCHIVE TAPES UNREST RECORDS RM108.00 ACCUSED, THE BAKED TAPES UNREST RECORDS RM98.00 ACCUSED, THE NASTY CUTS (1991-1993) UNREST RM98.00 ACCUSED, THE OH MARTHA! UNREST RECORDS RM93.00 ACCUSED, THE RETURN OF MARTHA SPLATTERHEAD (EARA UNREST RECORDS RM98.00 ACCUSED, THE RETURN OF MARTHA SPLATTERHEAD (SUBC UNREST RECORDS RM98.00 ACHTUNGS, THE WELCOME TO HELL GOING UNDEGROUND RM96.00 ACID BABY JESUS ACID BABY JESUS SLOVENLY RM94.00 ACIDEZ BEER DRINKERS SURVIVORS UNREST RM98.00 ACIDEZ DON'T ASK FOR PERMISSION UNREST RM98.00 ADICTS, THE AND IT WAS SO! (WHITE VINYL) NUCLEAR BLAST RM127.00 ADICTS, THE TWENTY SEVEN DAILY RECORDS RM120.00 ADOLESCENTS ADOLESCENTS FRONTIER RM97.00 ADOLESCENTS BRATS IN BATTALIONS NICKEL & DIME RM96.00 ADOLESCENTS LA VENDETTA FRONTIER RM95.00 ADOLESCENTS -

Rock Clubs and Gentrification in New York City: the Case of the Bowery Presents

Rock Clubs and Gentrification in New York City: The Case of The Bowery Presents Fabian Holt University of Roskilde [email protected] Abstract This article offers a new analytical perspective on the relation between rock clubs and gentrification to illuminate broader changes in urbanism and cultural production in New York City. Although gentrification is central to understanding how the urban condition has changed since the 1960s, the long-term implications for popular music and its evolution within new urban populations and cultural industries have received relatively little scholarly attention. Gentrification has often been dismissed as an outside threat to music scenes. This article, in contrast, argues that gentrification needs to be understood as a broader social, economic, and cultural process in which popular music cultures have changed. The argument is developed through a case study of The Bowery Presents, a now dominant concert promoter and venue operator with offices on the Lower East Side. Based on fieldwork conducted over a three-year period and on urban sociological macro-level analysis, this article develops an analytical narrative to account for the evolution of the contemporary concert culture in the mid-size venues of The Bowery Presents on the Lower East Side and Williamsburg, Brooklyn, as a particular instance of more general dynamics of culture and commerce in contemporary cities. The narrative opens up new perspectives for theorizing live music and popular culture within processes of urban social change. The article begins by reviewing conventional approaches to rock music clubs in popular music studies and urban sociology. These approaches are further clarified through the mapping of a deep structure in how music scenes have framed the relationship between clubs and gentrification discursively. -

Crain's New York Business

CRAINS 20160523-NEWS--0001-NAT-CCI-CN_-- 5/20/2016 8:42 PM Page 1 CRAINS ® MAY 23-29, 2016 | PRICE $3.00 NEW YORK BUSINESS VOL. XXXII, NO. 21 WWW.CRAINSNEWYORK.COM 0 71486 01068 5 21 NEWSPAPER WE’RE IN. CBRE is honored to take home a historic 34th win ingenious. in the Real Estate Board of New York’s Most Ingenious Deal of the Year Awards. Lauren Crowley Corrinet, Gregory Tosko and Sacha innovative. Zarba received the 2015 Robert T. Lawrence Memorial Award for helping our client, LinkedIn, expand at The Empire State Building— inspiring. inspiring an icon to innovate for one of tech’s most innovative companies. We’re proud of our creative partnership with LinkedIn— and the advantage we bring to the tech sector in its growth across New York City. 20160523-NEWS--0003-NAT-CCI-CN_-- 5/20/2016 8:33 PM Page 1 MAYCRAINS 23-29, 2016 FROM THE NEWSROOM | JEREMY SMERD Interesting conflicts IN THIS ISSUE 4 AGENDA THE MOST RECENT DUSTUP 5 IN CASE YOU MISSED IT between Gov. Andrew Cuomo and No good subway Mayor Bill de Blasio, this time over the development of two 6 ASKED & ANSWERED improvement goes unpunished residential buildings at Pier 6 in Brooklyn Bridge Park, says 7 TRANSPORTATION a lot about their attitudes toward the incestuous way 8 WHO OWNS THE BLOCK politics and business commingle in New York. 9 INFRASTRUCTURE The mayor wants to push his agenda in the face of 10 questions about quid pro quos for campaign donors, while VIEWPOINTS the governor, facing similar questions, is more than happy to shine a spotlight on the FEATURES mayor as several probes by U.S. -

Type Artist Album Barcode Price 32.95 21.95 20.95 26.95 26.95

Type Artist Album Barcode Price 10" 13th Floor Elevators You`re Gonna Miss Me (pic disc) 803415820412 32.95 10" A Perfect Circle Doomed/Disillusioned 4050538363975 21.95 10" A.F.I. All Hallow's Eve (Orange Vinyl) 888072367173 20.95 10" African Head Charge 2016RSD - Super Mystic Brakes 5060263721505 26.95 10" Allah-Las Covers #1 (Ltd) 184923124217 26.95 10" Andrew Jackson Jihad Only God Can Judge Me (white vinyl) 612851017214 24.95 10" Animals 2016RSD - Animal Tracks 018771849919 21.95 10" Animals The Animals Are Back 018771893417 21.95 10" Animals The Animals Is Here (EP) 018771893516 21.95 10" Beach Boys Surfin' Safari 5099997931119 26.95 10" Belly 2018RSD - Feel 888608668293 21.95 10" Black Flag Jealous Again (EP) 018861090719 26.95 10" Black Flag Six Pack 018861092010 26.95 10" Black Lips This Sick Beat 616892522843 26.95 10" Black Moth Super Rainbow Drippers n/a 20.95 10" Blitzen Trapper 2018RSD - Kids Album! 616948913199 32.95 10" Blossoms 2017RSD - Unplugged At Festival No. 6 602557297607 31.95 (45rpm) 10" Bon Jovi Live 2 (pic disc) 602537994205 26.95 10" Bouncing Souls Complete Control Recording Sessions 603967144314 17.95 10" Brian Jonestown Massacre Dropping Bombs On the Sun (UFO 5055869542852 26.95 Paycheck) 10" Brian Jonestown Massacre Groove Is In the Heart 5055869507837 28.95 10" Brian Jonestown Massacre Mini Album Thingy Wingy (2x10") 5055869507585 47.95 10" Brian Jonestown Massacre The Sun Ship 5055869507783 20.95 10" Bugg, Jake Messed Up Kids 602537784158 22.95 10" Burial Rodent 5055869558495 22.95 10" Burial Subtemple / Beachfires 5055300386793 21.95 10" Butthole Surfers Locust Abortion Technician 868798000332 22.95 10" Butthole Surfers Locust Abortion Technician (Red 868798000325 29.95 Vinyl/Indie-retail-only) 10" Cisneros, Al Ark Procession/Jericho 781484055815 22.95 10" Civil Wars Between The Bars EP 888837937276 19.95 10" Clark, Gary Jr. -

Order Form Full

PUNK ARTIST TITLE LABEL RETAIL 100 DEMONS 100 DEMONS DEATHWISH INC RM87.00 4-SKINS A FISTFUL OF 4-SKINS RADIATION RM120.00 400 BLOWS SICKNESS & HEALTH ORIGINAL RECORD RM113.00 45 GRAVE SLEEP IN SAFETY (GREEN VINYL) REAL GONE RM137.00 999 BAY AREA HOMICIDE (COLOR) CLEOPATRA RM111.00 999 DEATH IN SOHO PH RECORDS RM120.00 999 THE BIGGEST PRIZE IN SPORT (200 GR) DRASTIC PLASTIC RM116.00 999 THE BIGGEST PRIZE IN SPORT (GREEN) DRASTIC PLASTIC RM116.00 999 YOU US IT! COMBAT ROCK RM115.00 A WILHELM SCREAM PARTYCRASHER NO IDEA RM93.00 A.F.I. ANSWER THAT AND STAY FASHIONABLE NITRO RM114.00 A.F.I. BLACK SAILS IN THE SUNSET NITRO RM114.00 A.F.I. THE ART OF DROWNING NITRO RM114.00 A.F.I. VERY PROUD OF YA NITRO RM114.00 ABEST ASYLUM (WHITE VINYL) THIS CHARMING MAN RM95.00 ACCUSED, THE ARCHIVE TAPES UNREST RECORDS RM104.00 ACCUSED, THE BAKED TAPES UNREST RECORDS RM95.00 ACCUSED, THE NASTY CUTS (1991-1993) UNREST RM95.00 ACCUSED, THE OH MARTHA! UNREST RECORDS RM89.00 ACCUSED, THE RETURN OF MARTHA SPLATTERHEAD (EARA UNREST RECORDS RM95.00 ACCUSED, THE RETURN OF MARTHA SPLATTERHEAD (SUBC UNREST RECORDS RM95.00 ACHTUNGS, THE WELCOME TO HELL GOING UNDEGROUND RM93.00 ACID BABY JESUS ACID BABY JESUS SLOVENLY RM90.00 ACIDEZ BEER DRINKERS SURVIVORS UNREST RM95.00 ACIDEZ DON'T ASK FOR PERMISSION UNREST RM95.00 ADICTS, THE AND IT WAS SO! (WHITE VINYL) NUCLEAR BLAST RM122.00 ADOLESCENTS ADOLESCENTS FRONTIER RM94.00 ADOLESCENTS BRATS IN BATTALIONS NICKEL & DIME RM93.00 ADOLESCENTS LA VENDETTA FRONTIER RM94.00 ADOLESCENTS MANIFEST DESTINY CONCRETE JUNGLE RM106.00 -

Black Leaders Rip Ratner's $400M Barclays Arena Deal

THE stoop: Heights Lowdown: Will Smith stood me up, local news, p.3 Brooklyn’s Real Newspaper BrooklynPaper.com • (718) 834–9350 • Brooklyn, NY • ©2007 BROOKLYN HEIGHTS–DOWNTOWN EDITION AWP/18 pages • Vol. 30, No. 4 • Saturday, Jan. 27, 2007 • FREE INCLUDING DUMBO Black leaders rip Ratner’s $400M Barclays arena deal Atlantic Yards supporter says Bruce is taking ‘blood money’ of slaves at Nets site; another ally is demanding reparations By Ariella Cohen “All options should be on the table, including The Brooklyn Paper payment for past wrongs and termination of the MORE INSIDE Two black supporters of Atlantic Yards have agreement,” Jeffries said. joined a growing chorus saying that developer Green, a strong supporter of Atlantic Yards, •Editorial and Letters: p. 6 Bruce Ratner betrayed his black allies when he moved last week to distance himself from the nam- •Ratner socks it to locals: p.15 ing-rights deal. He called on Barclays to pay repa- sold the naming rights to his proposed Nets arena •Anti-Ratner movie debuts: p.15 to Barclays, a global banking firm that was found- rations to American blacks for its role in slavery. ed by slave traders and did business with South “Barclays must step up and respond to our com- •Locals slam Barclays deal: p.15 Africa’s apartheid government. munity the way they responded to Nelson Mandela” •Lawsuit’s new angle: p.15 over the issue of apartheid in South Africa, he said. Both Roger Green — a former state Assembly- As part of the $400-million naming-rights deal, •Ratner foes dial for $: p.15 man — and his successor Hakeem Jeffries came out Barclays has said it will pay $2.5 million to repair this week against the Barclays deal. -

UNIVERSAL MUSIC • Various Artists – Now! Country 9 • Tiesto – a Town

Various Artists – Now! Country 9 Tiesto – A Town Called Paradise Mia Martina – Mia Martina New Releases From Classics And Jazz Inside!!! And more… UNI14-21 UNIVERSAL MUSIC 2450 Victoria Park Ave., Suite 1, Willowdale, Ontario M2J 5H3 Phone: (416) 718.4000 Artwork shown may not be final UNIVERSAL MUSIC CANADA NEW RELEASE Artist/Title: Jennifer Lopez / Jennifer Lopez Cat. #: B001993202 Price Code: SP Order Due: May 22, 2014 Release Date: June 17, 2014 Bar Code: File: Pop Genre Code: 33 Box Lot: 25 Key Tracks: 6 02537 67098 7 First Love I Luh Ya Papi Artist/Title: Jennifer Lopez / Jennifer Lopez [Deluxe] Bar Code: Cat. #: B002082802 Price Code: AV 6 02537 84282 7 DELUXE VERSION WILL CONTAIN 4 ADDITIONAL TRACKS JUST THE FACTS: This will be Jennifer’s 10th album First official single “First Love” premiered on May 1st via the Ryan Seacrest show and immediately added to KISS FM in Toronto and VIRGIN Radio in Toronto. Set‐up track ‘I Luh Ya Papi’ Video has over 27 million views : http://youtu.be/c4oiEhf9M04 Major National TV, Radio, Online, and Lifestyle Advertising Campaign to support album release Jennifer has sold over 57 million albums worldwide with over 1.2 million in Canada!! Jennifer is currently a judge AMERICAN IDOL alongside Keith Urban & Harry Connick Jr., the #1 Show in its Time Slot in Canada! Additional Links: o Same Girl Viral Video (over 8.5 Million Views): http://youtu.be/s3T2A7xJgZs o Girls Viral Audio: http://youtu.be/M2UXYwr3clQ o American Idol performance of “I Luh Ya Papi”: http://youtu.be/RIDmvbJ07XA INTERNAL US Label Name: 2101 Records/Capitol Records Territory: International Release Type: O For additional artist information please contact Nick Nunes at 416‐718‐4045 or [email protected] UNIVERSAL MUSIC 2450 Victoria Park Avenue, Suite 1, Toronto, ON M2J 5H3 Phone: (416) 718‐4000 Fax: (416) 718‐4218 UNIVERSAL MUSIC CANADA NEW RELEASE Artist/Title: Sam Smith / In The Lonely Hour Cat.