2nd Generation Intel Core Processor Family Desktop, Intel Pentium

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Release Notes for X11R6.8.2: The X.Org Foundation, The XFree86 Project, Inc.

Release Notes for X11R6.8.2 The X.Org Foundation The XFree86 Project, Inc. 9 February 2005 Abstract These release notes contain information about features and their status in the X.Org Foundation X11R6.8.2 release. They are based on the XFree86 4.4RC2 RELNOTES document published by The XFree86™ Project, Inc. There are significant updates and differences in the X.Org release as noted below. 1. Introduction to the X11R6.8.2 Release The release numbering is based on the original MIT X numbering system. X11 refers to the version of the network protocol that the X Window system is based on: Version 11 was first released in 1988 and has been stable for 15 years, with only upward compatible additions to the core X protocol, a record of stability envied in computing. Formal releases of X started with X version 9 from MIT; the first commercial X products were based on X version 10. The MIT X Consortium and its successors, the X Consortium, the Open Group X Project Team, and the X.Org Group released versions X11R3 through X11R6.6, before the founding of the X.Org Foundation. There will be future maintenance releases in the X11R6.8.x series. However, efforts are well underway to split the X distribution into its modular components to allow for easier maintenance and independent updates. We expect a transitional period while both X11R6.8 releases are being fielded and the modular release completed and deployed while both will be available as different consumers of X technology have different constraints on deployment. We have not yet decided how the modular X releases will be numbered. We encourage you to submit bug fixes and enhancements to bugzilla.freedesktop.org using the xorg product, and discussions on this server take place on <[email protected]>. -

Intel® Xeon® Processor E3-1200 v2 Product Family and LGA 1155 Socket

Intel® Xeon® Processor E3-1200 v2 Product Family and LGA 1155 Socket Thermal/Mechanical Specifications and Design Guidelines May 2012 Document Number: 324973-001 Legal Lines and Disclaimers INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, life sustaining, critical control or safety systems, or in nuclear facility applications. Intel may make changes to specifications and product descriptions at any time, without notice. This document contains information on products in the design phase of development. The information here is subject to change without notice. Do not finalize a design with this information. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The Intel® Xeon® processor E3-1200 v2 product family and Intel® C200 Series Chipset family may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. -

Inside Intel® Core™ Microarchitecture Setting New Standards for Energy-Efficient Performance

White Paper: Inside Intel® Core™ Microarchitecture: Setting New Standards for Energy-Efficient Performance. Ofri Wechsler, Intel Fellow, Mobility Group; Director, Mobility Microprocessor Architecture, Intel Corporation. Contents: Introduction; Intel® Core™ Microarchitecture Design Goals; Delivering Energy-Efficient Performance; Intel® Core™ Microarchitecture Innovations; Intel® Wide Dynamic Execution; Intel® Intelligent Power Capability; Intel® Advanced Smart Cache; Intel® Smart Memory Access; Intel® Advanced Digital Media Boost; Intel® Core™ Microarchitecture and Software; Summary; Learn More; Author Biographies. Introduction: The Intel® Core™ microarchitecture is a new foundation for Intel® architecture-based desktop, mobile, and mainstream server multi-core processors. This state-of-the-art multi-core optimized and power-efficient microarchitecture is designed to deliver increased performance and performance-per-watt, thus increasing overall energy efficiency. This new microarchitecture extends the energy efficient philosophy first delivered in Intel's mobile microarchitecture found in the Intel® Pentium® M processor, and greatly enhances it with many new and leading edge microarchitectural innovations as well as existing Intel NetBurst® microarchitecture features. What’s more, it incorporates many new and significant innovations designed to optimize the power, performance, and scalability of multi-core processors. The Intel Core microarchitecture shows Intel’s continued innovation by delivering both greater energy efficiency and compute capability required for the new workloads and usage models now making their way across computing. With its higher performance and low power, the new Intel Core microarchitecture will be the basis for many new solutions and form factors. In the home, these include higher performing, ultra-quiet, sleek and low-power computer designs, and new advances in more sophisticated, user-friendly entertainment systems. -

Dual-Core Intel® Xeon® Processor 3100 Series Specification Update

Dual-Core Intel® Xeon® Processor 3100 Series Specification Update — on 45 nm Process in the 775-land LGA Package December 2010 Notice: Dual-Core Intel® Xeon® Processor 3100 Series may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are documented in this Specification Update. Document Number: 319006-009 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. Enabling Execute Disable Bit functionality requires a PC with a processor with Execute Disable Bit capability and a supporting operating system. -

Hyper-V Performance Comparison: Microsoft Windows Server 2008 SP2 and R2 with Intel Xeon Processor X5570

TEST REPORT, JULY 2009. Hyper-V performance comparison: Microsoft Windows Server 2008 SP2 and R2 with Intel Xeon processor X5570- and E5450-based servers. Executive summary: Microsoft® Corporation (Microsoft) and Intel® Corporation (Intel) commissioned Principled Technologies® (PT) to measure Hyper-V R2 virtualization performance improvements using vConsolidate on the following Microsoft operating systems and Intel processors: • Microsoft Windows Server® 2008 Enterprise Edition SP2 with Hyper-V on Intel® Xeon® processor E5450 • Microsoft Windows Server 2008 Enterprise Edition SP2 with Hyper-V on Intel Xeon processor X5570 • Microsoft Windows Server 2008 Enterprise Edition R2 with Hyper-V on Intel Xeon processor X5570. KEY FINDINGS: • The Intel Xeon processor X5570-based server with the optimum number of CSUs delivered 37.1 percent more vConsolidate performance when running Microsoft Windows Server 2008 Enterprise Edition R2 than when running Microsoft Windows Server 2008 Enterprise Edition SP2. (See Figure 1.) • Microsoft Windows Server 2008 Enterprise Edition SP2 running on the Intel Xeon processor X5570-based server with the optimum number of CSUs delivered 98.7 percent more vConsolidate performance than it did running on the Intel Xeon processor E5450-based server with the optimum number of CSUs. (See Figure 1.) Figure 1 shows the vConsolidate results for all three configurations with the optimum number of vConsolidate work units, consolidation stack units (CSUs). The Intel Xeon processor X5570-based server with the optimum number of CSUs (eight) delivered 37.1 percent more vConsolidate performance when running Microsoft Windows Server 2008 Enterprise Edition R2 than when running Microsoft Windows Server 2008 Enterprise Edition SP2. -

Microcode Revision Guidance August 31, 2019 MCU Recommendations

microcode revision guidance August 31, 2019 MCU Recommendations Section 1 – Planned microcode updates • Provides details on Intel microcode updates currently planned or available and corresponding to Intel-SA-00233 published June 18, 2019. • Changes from prior revision(s) will be highlighted in yellow. Section 2 – No planned microcode updates • Products for which Intel does not plan to release microcode updates. This includes products previously identified as such. LEGEND: Production Status: • Planned – Intel is planning on releasing a MCU at a future date. • Beta – Intel has released this production signed MCU under NDA for all customers to validate. • Production – Intel has completed all validation and is authorizing customers to use this MCU in a production environment. -

Single Board Computer

Single Board Computer SBC-C66: SBC with the Intel® 8th generation Core™/Xeon® (formerly Coffee Lake H) and 9th generation Core™ / Xeon® / Pentium® / Celeron® (formerly Coffee Lake Refresh) CPUs. High-performing, flexible solution for intelligence at the edge. HIGHLIGHTS. CPU: Intel® 8th gen. Core™ / Xeon® and 9th gen. Core™ / Xeon® / Pentium® / Celeron® CPUs. CONNECTIVITY: 2x USB 3.1; 4x USB 2.0; NVMe SSD Slot; PCI-e x8 port (PCI-e x16 mechanical slot); VPU High Speed Connector with 4x USB 3.1 + 2x PCI-e x4. GRAPHICS: Intel® UHD Graphics 630/P630 architecture, supports up to 3 independent displays. MEMORY: up to 128GB DDR4 memory on 4x SO-DIMM Slots (ECC supported). Available in Industrial Temperature Range. MAIN FIELDS OF APPLICATION: Biomedical/Medical devices; Gaming; Industrial Automation and Control; Industrial Internet of Things; Surveillance. FEATURES. Intel® 8th generation Core™/Xeon® (formerly Coffee Lake H) CPUs: • Intel® Core™ i7-8850H, Six Core @ 2.6GHz (4.3GHz Max 1 Core Turbo), 9MB Cache, 45W TDP (35W cTDP), with HyperThreading • Intel® Core™ i5-8400H, Quad Core @ 2.5GHz (4.2GHz Max 1 Core Turbo), 8MB Cache, 45W TDP (35W cTDP), with HyperThreading • Intel® Core™ i3-8100H, Quad Core @ 3.0GHz, 6MB Cache, 45W TDP (35W cTDP). Max Cores: 6. Max Thread: 12. Chipset: Intel® QM370, HM370 or CM246 Platform Controller Hub (PCH). Memory: 2x DDR4-2666 or 4x DDR4-2444 ECC SODIMM Slots, up to 128GB total (only with 4 SODIMM modules). ECC DDR4 memory modules supported only with Xeon® Core Information subject to change. Please visit www.seco.com to find the latest version of this datasheet. -

Download/Face- Modules/Documents/Face-Modules-Hw-Specifications.Pdf

Intense PC Hardware Specification. CompuLab Ltd. Revision 1.2 December 2013 Legal Notice © 2013 CompuLab Ltd. All Rights Reserved. No part of this document may be photocopied, reproduced, stored in a retrieval system, or transmitted, in any form or by any means, whether electronic, mechanical, or otherwise, without the prior written permission of CompuLab Ltd. No warranty of accuracy is given concerning the contents of the information contained in this publication. To the extent permitted by law no liability (including liability to any person by reason of negligence) will be accepted by CompuLab Ltd., its subsidiaries or employees for any direct or indirect loss or damage caused by omissions from or inaccuracies in this document. CompuLab Ltd. reserves the right to change details in this publication without notice. Product and company names herein may be the trademarks of their respective owners. CompuLab Ltd. 17 HaYetsira St., Yokneam Elite 20692, P.O.B 687 ISRAEL Tel: +972-4-8290100 http://www.compulab.co.il http://fit-pc.com/web/ Fax: +972-4-8325251 CompuLab Ltd. Intense PC Hardware Specification Page 2 of 74 Revision History (Revision, Engineer, Revision Changes): 1.0, Maxim Birger: Initial public release. 1.1, Maxim Birger: Memory Interface updated; Super-IO Controller peripheral section added; RS232 serial com port info added. 1.2, Maxim Birger: HDMI Block Diagram updated; DP Block Diagram updated. Table of Contents Legal Notice .................................................................................................................................................. -

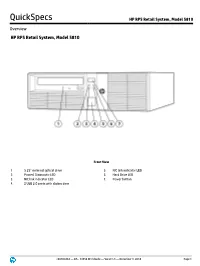

HP RP5 Retail System, Model 5810

QuickSpecs HP RP5 Retail System, Model 5810 Overview. Front View: 1. 5.25” external optical drive 2. Power/Diagnostic LED 3. NIC link indicator LED 4. 2 USB 2.0 ports with sliding door 5. NIC link indicator LED 6. Hard Drive LED 7. Power button. c04304464, DA - 14956, Worldwide, Version 8, December 8, 2014. Rear View: 1. 24 Volt USB + PWR port 2. Two (2) Full-Height Slots* 3. RS232 serial COM3 4. RJ-45 LAN jack 5. RS232 serial (power configurable) COM1 port 6. PS/2 mouse port 7. 240W EPA – Active PFC power supply (no line switching required) 8. Line in audio jack 9. Line out audio jack 10. PS/2 keyboard port 11. VGA port 12. 3 USB 2.0 ports 13. 2 USB 3.0 ports 14. RS232 serial (power configurable) COM2 port 15. DisplayPort 16. RJ12 cash drawer port 17. One (1) PCIe x16 Slot (wired as x16)** – shown is optional three (3) port 12 Volt USB + Power Card 18. One (1) PCIe x16 Slot (wired as x4)** – shown is optional three (3) port 12 Volt USB + Power Card. * Can be configured either as two (2) PCI x1 or two (2) PCIe x1 Full-Height slots. Shown is optional 2 Port RS232 serial (power configurable) Card, COM4 port (left) and COM3 port (right port). ** A variety of cards are available to populate slots, dependent on riser choice and connectors utilized. For full details, please contact your HP sales representative for configuration choices. -

Intel X86 Considered Harmful

Intel x86 considered harmful. Joanna Rutkowska, October 2015. Version: 1.0. Contents: 1 Introduction: Trusted, Trustworthy, Secure? 2 The BIOS and boot security: BIOS as the root of trust. For everything; Bad SMM vs. Tails; How can the BIOS become malicious?; Write-Protecting the flash chip; Measuring the firmware: TPM and Static Root of Trust; A forgotten element: an immutable CRTM; Intel Boot Guard; Problems maintaining long chains of trust; UEFI Secure Boot?; Intel TXT to the rescue!; The broken promise of Intel TXT; Rescuing TXT: SMM sandboxing with STM; The broken promise of an STM?; Intel SGX: a next generation TXT?; Summary of x86 boot (in)security. 3 The peripherals: Networking devices & subsystem as attack vectors; Networking devices as leaking apparatus; Sandboxing the networking devices; Keeping networking devices outside of the TCB; Preventing networking from leaking out data; The USB as an attack vector; The graphics subsystem; The disk controller and storage subsystem; The audio -

2nd Generation Intel Core Processor Family with Intel 6 Series Chipset Development Kit User Guide

2nd Generation Intel® Core™ Processor Family with Intel® 6 Series Chipset Development Kit User Guide March 2011 Document Number: 325208 About This Document INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel may make changes to specifications and product descriptions at any time, without notice. Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. Intel processor numbers are not a measure of performance. -

Data Sampling on MDS-Resistant 10th Generation Intel Core (Ice Lake)

Data Sampling on MDS-resistant 10th Generation Intel Core (Ice Lake). Daniel Moghimi, Worcester Polytechnic Institute, Worcester, MA, USA. Abstract: Microarchitectural Data Sampling (MDS) is a set of hardware vulnerabilities in Intel CPUs that allows an attacker to leak bytes of data from memory loads and stores across various security boundaries. On affected CPUs, some of these vulnerabilities were patched via microcode updates. Additionally, Intel announced that the newest microarchitectures, namely Cascade Lake and Ice Lake, were not affected by MDS. While Cascade Lake turned out to be vulnerable to the ZombieLoad v2 MDS attack (also known as TAA), Ice Lake was not affected by this attack. In this technical report, we show a variant of MSBDS (CVE-2018-12126), an MDS attack, also known as Fallout, that works on Ice Lake CPUs. This variant was automatically [...] the store buffer. MSBDS has been shown in attacks on the kernel space as well as on SGX [1]. In addition to the leakage component, MSBDS can also be turned around easily to transiently inject values into a victim, an attack technique known as Load Value Injection (LVI) [14]. To mitigate these vulnerabilities, Intel released software workarounds and microcode updates for affected CPUs [4]. One of the most recent microarchitectures, Ice Lake, is reported to be unaffected by MDS attacks [6,8]. Intel has also explicitly listed Ice Lake processors as not being vulnerable to LVI-SB [14], which exploits MSBDS for Load Value Injection [7]. To better analyze the MDS vulnerabilities, and potentially find new variants, Moghimi et al.