Data Intelligence for Improved Water Resource Management

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Lecture 7 Network Management and Debugging

SYSTEM ADMINISTRATION MTAT.08.021 LECTURE 7 NETWORK MANAGEMENT AND DEBUGGING Prepared By: Amnir Hadachi and Artjom Lind University of Tartu, Institute of Computer Science [email protected] / [email protected] 1 LECTURE 7: NETWORK MGT AND DEBUGGING OUTLINE 1.Intro 2.Network Troubleshooting 3.Ping 4.SmokePing 5.Trace route 6.Network statistics 7.Inspection of live interface activity 8.Packet sniffers 9.Network management protocols 10.Network mapper 2 1. INTRO 3 LECTURE 7: NETWORK MGT AND DEBUGGING INTRO QUOTE: Networks has tendency to increase the number of interdependencies among machine; therefore, they tend to magnify problems. • Network management tasks: ✴ Fault detection for networks, gateways, and critical servers ✴ Schemes for notifying an administrator of problems ✴ General network monitoring, to balance load and plan expansion ✴ Documentation and visualization of the network ✴ Administration of network devices from a central site 4 LECTURE 7: NETWORK MGT AND DEBUGGING INTRO Network Size 160 120 80 40 Management Procedures 0 AUTOMATION ILLUSTRATION OF NETWORK GROWTH VS MGT PROCEDURES AUTOMATION 5 LECTURE 7: NETWORK MGT AND DEBUGGING INTRO • Network: • Subnets + Routers / switches Time to consider • Automating mgt tasks: • shell scripting source: http://www.eventhelix.com/RealtimeMantra/Networking/ip_routing.htm#.VvjkA2MQhIY • network mgt station 6 2. NETWORK TROUBLES HOOTING 7 LECTURE 7: NETWORK MGT AND DEBUGGING NETWORK TROUBLESHOOTING • Many tools are available for debugging • Debugging: • Low-level (e.g. TCP/IP layer) • high-level (e.g. DNS, NFS, and HTTP) • This section progress: ping trace route GENERAL ESSENTIAL TROUBLESHOOTING netstat TOOLS STRATEGY nmap tcpdump … 8 LECTURE 7: NETWORK MGT AND DEBUGGING NETWORK TROUBLESHOOTING • Before action, principle to consider: ✴ Make one change at a time ✴ Document the situation as it was before you got involved. -

Unix/Linux Command Reference

Unix/Linux Command Reference .com File Commands System Info ls – directory listing date – show the current date and time ls -al – formatted listing with hidden files cal – show this month's calendar cd dir - change directory to dir uptime – show current uptime cd – change to home w – display who is online pwd – show current directory whoami – who you are logged in as mkdir dir – create a directory dir finger user – display information about user rm file – delete file uname -a – show kernel information rm -r dir – delete directory dir cat /proc/cpuinfo – cpu information rm -f file – force remove file cat /proc/meminfo – memory information rm -rf dir – force remove directory dir * man command – show the manual for command cp file1 file2 – copy file1 to file2 df – show disk usage cp -r dir1 dir2 – copy dir1 to dir2; create dir2 if it du – show directory space usage doesn't exist free – show memory and swap usage mv file1 file2 – rename or move file1 to file2 whereis app – show possible locations of app if file2 is an existing directory, moves file1 into which app – show which app will be run by default directory file2 ln -s file link – create symbolic link link to file Compression touch file – create or update file tar cf file.tar files – create a tar named cat > file – places standard input into file file.tar containing files more file – output the contents of file tar xf file.tar – extract the files from file.tar head file – output the first 10 lines of file tar czf file.tar.gz files – create a tar with tail file – output the last 10 lines -

Unix/Linux Command Reference

Unix/Linux Command Reference .com File Commands System Info ls – directory listing date – show the current date and time ls -al – formatted listing with hidden files cal – show this month's calendar cd dir - change directory to dir uptime – show current uptime cd – change to home w – display who is online pwd – show current directory whoami – who you are logged in as mkdir dir – create a directory dir finger user – display information about user rm file – delete file uname -a – show kernel information rm -r dir – delete directory dir cat /proc/cpuinfo – cpu information rm -f file – force remove file cat /proc/meminfo – memory information rm -rf dir – force remove directory dir * man command – show the manual for command cp file1 file2 – copy file1 to file2 df – show disk usage cp -r dir1 dir2 – copy dir1 to dir2; create dir2 if it du – show directory space usage doesn't exist free – show memory and swap usage mv file1 file2 – rename or move file1 to file2 whereis app – show possible locations of app if file2 is an existing directory, moves file1 into which app – show which app will be run by default directory file2 ln -s file link – create symbolic link link to file Compression touch file – create or update file tar cf file.tar files – create a tar named cat > file – places standard input into file file.tar containing files more file – output the contents of file tar xf file.tar – extract the files from file.tar head file – output the first 10 lines of file tar czf file.tar.gz files – create a tar with tail file – output the last 10 lines -

26 Disk Space Management

26 Disk Space Management 26.1 INTRODUCTION It has been said that the only thing all UNIX systems have in common is the login message asking users to clean up their files and use less disk space. No matter how much space you have, it isn’t enough; as soon as a disk is added, files magically appear to fill it up. Both users and the system itself are potential sources of disk bloat. Chapter 12, Syslog and Log Files, discusses various sources of logging information and the techniques used to manage them. This chapter focuses on space problems caused by users and the technical and psy- chological weapons you can deploy against them. If you do decide to Even if you have the option of adding more disk storage to your system, add a disk, refer to it’s a good idea to follow this chapter’s suggestions. Disks are cheap, but Chapter 9 for help. administrative effort is not. Disks have to be dumped, maintained, cross-mounted, and monitored; the fewer you need, the better. 26.2 DEALING WITH DISK HOGS In the absence of external pressure, there is essentially no reason for a user to ever delete anything. It takes time and effort to clean up unwanted files, and there’s always the risk that something thrown away might be wanted again in the future. Even when users have good intentions, it often takes a nudge from the system administrator to goad them into action. 618 Chapter 26 Disk Space Management 619 On a PC, disk space eventually runs out and the machine’s primary user must clean up to get the system working again. -

MTU and MSS Tutorial

MTU and MSS Tutorial Dr. E. Garcia, [email protected] Published: November 16, 2009. Last Update: November 16, 2009. © 2009 E. Garcia Abstract – This tutorial covers maximum transmission unit ( MTU ), maximum segment size ( MSS ), PING, NETSTAT, and fragmentation. Expressions relevant to these concepts are systematically derived and explained. Keywords: maximum transmission unit, MTU , maximum segment size, MSS , PING, NETSTAT 1 MTU and MSS As discussed in the IP Packet Fragmentation Tutorial (http://www.miislita.com/internet-engineering/ip-packet-fragmentation-tutorial.pdf ) and elsewhere (1 - 3), the data payload ( DP ) of an IP packet is defined as the packet length ( PL ) minus the length of its IP header ( IPHL ), (Eq 1) whereܦܲ theൌ maximum ܲܮ െ ܫܲܪܮ PL is defined as the Maximum Transmission Unit (MTU) . This is the largest IP packet that can be transmitted without further fragmentation. Thus, when PL = MTU (Eq 2) However,ܦܲ ൌ an ܯܷܶ IP packet െ ܫܲܪܮ encapsulates a TCP packet such that DP = TCPHL + MSS (Eq 3) where TCPHL is the length of the TCP header and MSS is the data payload of the TCP packet, also known as the Maximum Segment Size (MSS) . Combining Equations 2 and 3 leads to MSS = MTU – IPHL – TCPHL (Eq 4) Figure 1 illustrates the connection between MTU and MSS –for an IP packet decomposed into three fragments. Figure 1. Fragmentation example where MTU = PL = pl 1 = pl 2 > pl 3 and DP = dp 1 + dp 2 + dp 3 = PL – IPHL . © 2009 E. Garcia 1 Typically, IP and TCP headers are 20 bytes long. Thus, MSS = MTU – 40 (Eq 5) If IP or TCP options are specified, the MSS is further reduced by the number of bytes taken up by the options (OP), each of which may be one byte or several bytes in size. -

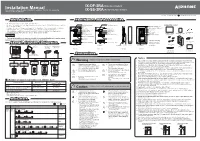

Installation Manual IX-DF-2RA (Video Door Station) This Manual Includes Product Liability Precautions

Installation Manual IX-DF-2RA (Video Door Station) This manual includes Product Liability Precautions. Provide this manual to (Audio Only Door Station) end user after installation. IX-SS-2RA Issue Date : November 2014 FK2122 A P1114 SQ 56142 Printed in Thailand Introduction Part Names and Accessories • Read this manual before installation and connection. Read the "Setting Manual" and "Operation Manual" included on the DVD-ROM that comes with the Call indicator (orange) Call indicator (orange) Accessories included Master Station (IX-MV). Camera Camera angle adjustment lever (Video Door Station only) • Configure the system settings according to the "Setting Manual" after completing the installation Microphone Microphone and connection. The system will not function unless it has been properly configured. Communication Communication Reset button* • After installation, explain to the customer how to use the device, and be sure to provide the indicator (green) indicator (green) CAT5e/6 cable accompanying DVD-ROM that came with the Master Station (IX-MV). Speaker Speaker T20 screwdriver connections Installation Manual Chinese RoHS paper Status indicator (red) Status indicator (red) bit x1 Option connector input (this manual) x1 x1 Important LED for night illumination • Perform the installation and connection only after fully understanding this device and the manual. Call button Call button Illustrations used in this manual may be different from the actual ones. * Press and hold the reset button for longer than 1 Option connector Emergency call -

Unix Quickref.Dvi

Summary of UNIX commands Table of Contents df [dirname] display free disk space. If dirname is omitted, 1. Directory and file commands 1994,1995,1996 Budi Rahardjo ([email protected]) display all available disks. The output maybe This is a summary of UNIX commands available 2. Print-related commands in blocks or in Kbytes. Use df -k in Solaris. on most UNIX systems. Depending on the config- uration, some of the commands may be unavailable 3. Miscellaneous commands du [dirname] on your site. These commands may be a commer- display disk usage. cial program, freeware or public domain program that 4. Process management must be installed separately, or probably just not in less filename your search path. Check your local documentation or 5. File archive and compression display filename one screenful. A pager similar manual pages for more details (e.g. man program- to (better than) more. 6. Text editors name). This reference card, obviously, cannot de- ls [dirname] scribe all UNIX commands in details, but instead I 7. Mail programs picked commands that are useful and interesting from list the content of directory dirname. Options: a user's point of view. 8. Usnet news -a display hidden files, -l display in long format 9. File transfer and remote access mkdir dirname Disclaimer make directory dirname The author makes no warranty of any kind, expressed 10. X window or implied, including the warranties of merchantabil- more filename 11. Graph, Plot, Image processing tools ity or fitness for a particular purpose, with regard to view file filename one screenfull at a time the use of commands contained in this reference card. -

ANSWERS ΤΟ EVEN-Numbered EXERCISES

6 Answers to Even-numbered Exercises 2.1. ListIs each ofname? the commands you can use to perform these operations: a. Make your home directory the working directory b. Identify the working directory a. cd; b. pwd 4.3. TheIf your contents. df utility displays all mounted filesystems along with information about each. Use the df utility with the –h (human-readable) option to answer the following questions. $ df -h Filesystem Size Used Avail Use% Mounted on /dev/hda1 1.4G 242M 1.1G 18% / /dev/hda3 23M 11M 10M 51% /boot /dev/hda4 1.5G 1.2G 222M 85% /home /dev/hda7 564M 17M 518M 4% /tmp /dev/hdc1 984M 92M 842M 10% /gc1 /dev/hdc2 16G 13G 1.9G 87% /gc2 a. How many filesystems are mounted on your Linux system? b. Which filesystem stores your home directory? c. Assuming that your answer to exercise 4a is two or more, attempt to create a hard link to a file on another filesystem. What error message do you get? What happens when you attempt to create a symbolic link to the file instead? Following are sample answers to these questions. Your answers will be dif- ferent because your filesystems are different. a. six; b. /dev/hda4; c. ln: creating hard link '/tmp/xxx' to 'xxx': Invalid cross-device link. No problem creating a cross-device symbolic link. 5. Suppose tho longer shared? 1 2 6. You should have read permission for the /etc/passwd file. To answer the following questions, use cat or less to display /etc/passwd. Look at the fields of information in /etc/passwd for the users on your system. -

The Linux Command Line

The Linux Command Line Second Internet Edition William E. Shotts, Jr. A LinuxCommand.org Book Copyright ©2008-2013, William E. Shotts, Jr. This work is licensed under the Creative Commons Attribution-Noncommercial-No De- rivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, 171 Second Street, Suite 300, San Fran- cisco, California, 94105, USA. Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners. This book is part of the LinuxCommand.org project, a site for Linux education and advo- cacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org. This book is also available in printed form, published by No Starch Press and may be purchased wherever fine books are sold. No Starch Press also offers this book in elec- tronic formats for most popular e-readers: http://nostarch.com/tlcl.htm Release History Version Date Description 13.07 July 6, 2013 Second Internet Edition. 09.12 December 14, 2009 First Internet Edition. 09.11 November 19, 2009 Fourth draft with almost all reviewer feedback incorporated and edited through chapter 37. 09.10 October 3, 2009 Third draft with revised table formatting, partial application of reviewers feedback and edited through chapter 18. 09.08 August 12, 2009 Second draft incorporating the first editing pass. 09.07 July 18, 2009 Completed first draft. Table of Contents Introduction....................................................................................................xvi -

Gnu Coreutils Core GNU Utilities for Version 5.93, 2 November 2005

gnu Coreutils Core GNU utilities for version 5.93, 2 November 2005 David MacKenzie et al. This manual documents version 5.93 of the gnu core utilities, including the standard pro- grams for text and file manipulation. Copyright c 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”. Chapter 1: Introduction 1 1 Introduction This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire gnu community will benefit. The gnu utilities documented here are mostly compatible with the POSIX standard. Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section “Bugs” in Using and Porting GNU CC. This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering. -

Intro to Unix 2018

Introduction to *nix Bill Renaud, OLCF ORNL is managed by UT-Battelle for the US Department of Energy Background • UNIX operating system was developed in 1969 by Ken Thompson and Dennis Ritchie • Many “UNIX-like” OSes developed over the years • UNIX® is now a trademark of The Open Group, which maintains the Single UNIX Specification • Linux developed by Linus Torvalds in 1991 • GNU Project started by Richard Stallman in 1983 w/aim to provide free, UNIX-compatible OS • Many of the world’s most powerful computers use Linux kernel + software from the GNU Project References: 1www.opengroup.org/unix 2https://en.wikipedia.org/wiki/Linux 2 3https://www.gnu.org/gnu/about-gnu.html This Presentation • This presentation will focus on using *nix operating systems as a non-privileged user in an HPC environment – Assumes you’re using a ‘remote’ system – No info on printing, mounting disks, etc. • We’ll focus on systems using the Linux kernel + system software from the GNU project since that’s so prevalent • Two-Part – Basics: general information, commands – Advanced: advanced commands, putting it all together, scripts, etc. 3 This Presentation • May seem a bit disjoint at first – Several basic concepts that don’t flow naturally but later topics build upon – Hopefully everything will come together, but if not… • I’ll cover (what I hope is) some useful info but can’t cover it all – People write thousand-page books on this, after all • Please ask questions! 4 Basics Terminology • User – An entity that interacts with the computer. Typically a person but could also be for an automated task. -

Gnu Coreutils Core GNU Utilities for Version 6.9, 22 March 2007

gnu Coreutils Core GNU utilities for version 6.9, 22 March 2007 David MacKenzie et al. This manual documents version 6.9 of the gnu core utilities, including the standard pro- grams for text and file manipulation. Copyright c 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005, 2006 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled \GNU Free Documentation License". Chapter 1: Introduction 1 1 Introduction This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire gnu community will benefit. The gnu utilities documented here are mostly compatible with the POSIX standard. Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section \Bugs" in Using and Porting GNU CC. This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering.