Correct UTF-8 Handling During Phase 1 of Translation



Recommended publications


Man'yogana.Pdf (574.0Kb)

Bulletin of the School of Oriental and African Studies http://journals.cambridge.org/BSO
The origin of man'yōgana. John R. BENTLEY. Bulletin of the School of Oriental and African Studies / Volume 64 / Issue 01 / February 2001, pp 59-73. DOI: 10.1017/S0041977X01000040, Published online: 18 April 2001
Link to this article: http://journals.cambridge.org/abstract_S0041977X01000040
How to cite this article: John R. BENTLEY (2001). The origin of man'yōgana. Bulletin of the School of Oriental and African Studies, 64, pp 59-73 doi:10.1017/S0041977X01000040
Downloaded from http://journals.cambridge.org/BSO, IP address: 131.156.159.213 on 05 Mar 2013

The origin of man'yōgana¹
Northern Illinois University

1. Introduction²
The origin of man'yōgana, the phonetic writing system used by the Japanese who originally had no script, is shrouded in mystery and myth. There is even a tradition that prior to the importation of Chinese script, the Japanese had a native script of their own, known as jindai moji (神代文字, age of the gods script). Christopher Seeley (1991: 3) suggests that by the late thirteenth century, Shaku nihongi, a compilation of various earlier commentaries on Nihon shoki (Japan's first official historical record, 720), circulated the idea that Yamato³ had written script from the age of the gods, a mythical period when the deity Susanoo was believed by the Japanese court to have composed Japan's first poem, and the Sun goddess declared her son would rule the land below.

Unicode Ate My Brain

UNICODE ATE MY BRAIN
John Cowan, Reuters Health Information
Copyright 2001-04 John Cowan under GNU GPL

Copyright
• Copyright © 2001 John Cowan
• Licensed under the GNU General Public License
• ABSOLUTELY NO WARRANTIES; USE AT YOUR OWN RISK
• Portions written by Tim Bray; used by permission
• Title devised by Smarasderagd; used by permission
• Black and white for readability

Abstract
Unicode, the universal character set, is one of the foundation technologies of XML. However, it is not as widely understood as it should be, because of the unavoidable complexity of handling all of the world's writing systems, even in a fairly uniform way. This tutorial will provide the basics about using Unicode and XML to save lots of money and achieve world domination at the same time.

Roadmap
• Brief introduction (4 slides)
• Before Unicode (16 slides)
• The Unicode Standard (25 slides)
• Encodings (11 slides)
• XML (10 slides)
• The Programmer's View (27 slides)
• Points to Remember (1 slide)

How Many Different Characters?
a A à á â ã ä å ā ă ą a a a a a a a a a a a

How Computers Do Text
• Characters in computer storage are represented by “small” numbers
• The numbers use a small number of bits: from 6 (BCD) to 21 (Unicode) to 32 (wchar_t on some Unix boxes)
• Design choices:
– Which numbers encode which characters
– How to pack the numbers into bytes

Where Does XML Come In?
• XML is a textual data format
• XML software is required to handle all commercially important characters in the world; a promise to “handle XML” implies a promise to be international
• Applications can do what they want; monolingual applications can mostly ignore internationalization

$$$ £££ ¥¥¥
• Extra cost of building-in internationalization to a new computer application: about 20% (assuming XML and Unicode).

Unicode and Code Page Support

Natural for Mainframes
Unicode and Code Page Support
Version 4.2.6 for Mainframes, October 2009

This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners.

Table of Contents
1 Unicode and Code Page Support
2 Introduction
– About Code Pages and Unicode
– About Unicode and Code Page Support in Natural
– ICU on Mainframe Platforms
3 Unicode and Code Page Support in the Natural Programming Language
– Natural Data Format U for Unicode-Based Data
– Statements
– Logical

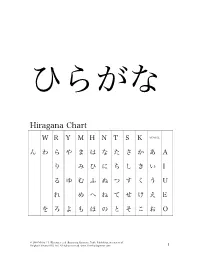

Hiragana Chart

ひらがな Hiragana Chart W R Y M H N T S K VOWEL ん わ ら や ま は な た さ か あ A り み ひ に ち し き い I る ゆ む ふ ぬ つ す く う U れ め へ ね て せ け え E を ろ よ も ほ の と そ こ お O © 2010 Michael L. Kluemper et al. Beginning Japanese, Tuttle Publishing, an imprint of Periplus Editions (HK) Ltd. All rights reserved. www.TimeForJapanese.com. 1 Beginning Japanese 名前: ________________________ 1-1 Hiragana Activity Book 日付: ___月 ___日 一、 Practice: あいうえお かきくけこ がぎぐげご O E U I A お え う い あ あ お え う い あ お う あ え い あ お え う い お う い あ お え あ KO KE KU KI KA こ け く き か か こ け く き か こ け く く き か か こ き き か こ こ け か け く く き き こ け か © 2010 Michael L. Kluemper et al. Beginning Japanese, Tuttle Publishing, an imprint of Periplus Editions (HK) Ltd. All rights reserved. www.TimeForJapanese.com. 2 GO GE GU GI GA ご げ ぐ ぎ が が ご げ ぐ ぎ が ご ご げ ぐ ぐ ぎ ぎ が が ご げ ぎ が ご ご げ が げ ぐ ぐ ぎ ぎ ご げ が 二、 Fill in each blank with the correct HIRAGANA. SE N SE I KI A RA NA MA E 1. -

KANA Response Live Organization Administration Tool Guide

This is the most recent version of this document provided by KANA Software, Inc. to Genesys, for the version of the KANA software products licensed for use with the Genesys eServices (Multimedia) products. Click here to access this document. KANA Response Live Organization Administration KANA Response Live Version 10 R2 February 2008 KANA Response Live Organization Administration All contents of this documentation are the property of KANA Software, Inc. (“KANA”) (and if relevant its third party licensors) and protected by United States and international copyright laws. All Rights Reserved. © 2008 KANA Software, Inc. Terms of Use: This software and documentation are provided solely pursuant to the terms of a license agreement between the user and KANA (the “Agreement”) and any use in violation of, or not pursuant to any such Agreement shall be deemed copyright infringement and a violation of KANA's rights in the software and documentation and the user consents to KANA's obtaining of injunctive relief precluding any further such use. KANA assumes no responsibility for any damage that may occur either directly or indirectly, or any consequential damages that may result from the use of this documentation or any KANA software product except as expressly provided in the Agreement, any use hereunder is on an as-is basis, without warranty of any kind, including without limitation the warranties of merchantability, fitness for a particular purpose, and non-infringement. Use, duplication, or disclosure by licensee of any materials provided by KANA is subject to restrictions as set forth in the Agreement. Information contained in this document is subject to change without notice and does not represent a commitment on the part of KANA. -

Depression What Is Depression? Can People with Depression Be Helped? • Depression Is a Very Common Problem

Depression What is depression? Can people with depression be helped? • Depression is a very common problem. Yes they can! Together, counselling and medication • We all have times in our lives when we feel have proven positive results. sad or down. • Usually sad feelings go away, but someone with depression may find that the sadness stays COUNSELLING Going for longer; they lose interest in things they usually counselling or talking therapy will enjoy and are left feeling low or very down for help. It is important that you attend a long time. all your sessions when referred; • Day to day life becomes difficult to manage. develop healthy coping skills to • Many adults will at some time experience manage your depression. symptoms of depression. How will I know if I have depression? In the last two weeks you have experienced the following: MEDICATION Anti-depressant • feel depressed or down most of the time; medication when prescribed • lose interest or pleasure in things you and taken as advised can usually enjoyed; shift depression. • sleep more or less than usual; • eat more or less than usual; • feel tired and hopeless; • think it will be better if you died or that you want to commit suicide; • feelings of guilt and worthlessness; SELF HELP Make small changes in • reduced self-esteem or self-confidence; or your life by doing things you used • reduced attention and concentration. to enjoy. Spend time with people around you and be more active. Join a support group and learn Causes of depression: self-help skills. • many stressors e.g. trauma, housing problems, unemployment, major change, becoming a parent; • ill-health; Focusing on your emotional, mental and physical Where can I find help? • history of depression in your family; wellbeing to improve your mood and reconnect At your local clinic or hospital • if you have a history of abuse or went through with your community. -

Frank's Do-It-Yourself Kana Cards V

Frank's do-it-yourself kana cards v. 1.0, 2000-08-07 Frank Stajano University of Cambridge and AT&T Laboratories Cambridge http://www.cl.cam.ac.uk/~fms27/ and http://www.uk.research.att.com/~fms/ This set of flash cards is meant to help you familiar cards and insist on the difficult part of き and さ with a separate stroke, become fluent in the use of the Japanese ones. unlike what happens in the fonts used in hiragana and katakana syllabaries. I made this document. I have followed the stroke it because I needed one myself and could The complete set consists of 10 double- counts of Henshall-Takagaki, even when not find it in the local bookshops (kanji sided sheets (20 printable pages) of 50 they seem weird for the shape of the char- cards were available, and I bought those; cards each, but you may choose to print acter as drawn on the card. but kana cards weren't); if it helps you too, smaller subsets as detailed below. Actu- so much the better. ally there are some blanks, so the total The easiest way to turn this document into number of cards is only 428 instead of 500. a set of cards is simply to print it (double The romanisation system chosen for these It would have been possible to fit them on sided of course!) and then cut each page cards is the Hepburn, which is the most 9 sheets instead of 10, but only by com- into cards with a ruler and a sharp blade. -

Introduction to the Special Issue on Japanese Geminate Obstruents

J East Asian Linguist (2013) 22:303-306 DOI 10.1007/s10831-013-9109-z
Introduction to the special issue on Japanese geminate obstruents
Haruo Kubozono
Received: 8 January 2013 / Accepted: 22 January 2013 / Published online: 6 July 2013
© Springer Science+Business Media Dordrecht 2013

Geminate obstruents (GOs) and so-called unaccented words are the two properties most characteristic of Japanese phonology and the two features that are most difficult to learn for foreign learners of Japanese, regardless of their native language. This special issue deals with the first of these features, discussing what makes GOs so difficult to master, what is so special about them, and what makes the research thereon so interesting. GOs are one of the two types of geminate consonant in Japanese¹ which roughly corresponds to what is called ‘sokuon’ (促音). ‘Sokuon’ is defined as a ‘one-mora-long silence’ (Sanseido Daijirin Dictionary), often symbolized as /Q/ in Japanese linguistics, and is transcribed with a small letter corresponding to /tu/ (っ or ッ) in Japanese orthography. Its presence or absence is distinctive in Japanese phonology as exemplified by many pairs of words, including the following (dots /./ indicate syllable boundaries).

(1)
sa.ki ‘point’ vs. sak.ki ‘a short time ago’
ka.ko ‘past’ vs. kak.ko ‘parenthesis’
ba.gu ‘bug (in computer)’ vs. bag.gu ‘bag’
ka.ta ‘type’ vs. kat.ta ‘bought (past tense of ‘buy’)’
to.sa ‘Tosa (place name)’ vs. tos.sa ‘in an instant’

More importantly, ‘sokuon’ is an important characteristic of Japanese speech rhythm known as mora-timing. It is one of the four elements that can form a mora

¹ The other type of geminate consonant is geminate nasals, which phonologically consist of a coda nasal and the nasal onset of the following syllable, e.g., /am.

Bibliography

BIBLIOGRAPHY Akimoto, Kichirō (ed.) 1958. Fudoki [Gazetteers]. Nihon Koten Bungaku Taikei [Series of the Japanese Classical Literature], vol. 2. Tokyo: Iwanami shoten. Aso, Mizue (ed.) 2007. Man’yōshū zenka kōgi. Kan daigo ~ kan dairoku [A commentary on all Man’yōshū poems. Books five and six]. Tokyo: Kasama shoin. Bentley, John R. 1997. MO and PO in Old Japanese. Unpublished MA thesis. University of Hawai’i at Mānoa. —— 1999. ‘The Verb TORU in Old Japanese.’ Journal of East Asian Linguistics 8: 131-46. —— 2001a. ‘The Origin of the Man’yōgana.’ Bulletin of the School of Oriental and African Studies 64.1: 59-73. —— 2001b. A Descriptive Grammar of Early Old Japanese Prose. Leiden: Brill. —— 2002. ‘The spelling of /MO/ in Old Japanese.’ Journal of East Asian Linguistics 11.4: 349-74. Gluskina, Anna E. 1971-73. Man”yosiu. t. 1-3. Moscow: Nauka [reprinted: Moscow: Izdatel’stvo ACT, 2001]. —— 1979. ‘O prefikse sa- v pesniakh Man”yoshu [About the prefix sa- in the Man’yōshū songs]. In: Gluskina A. Zametki o iaponskoi literature i teatre [Notes on Japanese literature and theater], pp. 99-110. Moscow: Nauka, Glavnaia redakciia vostochnoi literatury. Hashimoto, Shinkichi 1917. ‘Kokugo kanazukai kenkyū shi jō no ichi hakken – Ishizuka Tatsumaro no Kanazukai oku no yama michi ni tsuite’ [A Discovery in the Field of Japanese Kana Usage Research Concerning Ishizuka Tatsumaro’s The Mountain Road into the Secrets of Kana Usage]. Teikoku bungaku 23.5 [reprinted in Hashimoto (1949: 123-63). —— 1931. ‘Jōdai no bunken ni sonsuru tokushu no kanazukai to tōji no gohō’ [The Special Kana Usage of Old Japanese Texts and the Grammar of the Period]. -

Fun with Unicode - an Overview About Unicode Dangers

Fun with Unicode - an overview about Unicode dangers
by Thomas Skora

Overview
● Short Introduction to Unicode/UTF-8
● Fooling charset detection
● Ambiguous Encoding
● Ambiguous Characters
● Normalization overflows your buffer
● Casing breaks your XSS filter
● Unicode in domain names – how to short payloads
● Text Direction

Unicode/UTF-8
● Unicode = Character set
● Encodings:
– UTF-8: Common standard in web, …
– UTF-16: Often used as internal representation
– UTF-7: if the 8th bit is not safe
– UTF-32: yes, it exists...

UTF-8
● Often used in Internet communication, e.g. the web.
● Efficient: minimum length 1 byte
● Variable length, up to 7 bytes (theoretical).
● Downwards-compatible: the first 128 characters (U+0000 to U+007F) use ASCII encoding
● 1 Byte: 0xxxxxxx
● 2 Bytes: 110xxxxx 10xxxxxx
● 3 Bytes: 1110xxxx 10xxxxxx 10xxxxxx
● ...got it? ;-)

UTF-16
● Often used for internal representation: Java, .NET, Windows, …
● Inefficient: minimum length per char is 2 bytes.
● Byte Order? Byte Order Mark! → U+FEFF
– BOM at HTML beginning overrides character set definition in IE.
● Y\x00o\x00u\x00 \x00k\x00n\x00o\x00w\x00 \x00t\x00h\x00i\x00s\x00?\x00

UTF-7
● Unicode chars in not 8 bit-safe environments. Used in SMTP, NNTP, …
● Personal opinion: browser support was an inside job of the security industry.
● Why? Because: <script>alert(1)</script> == +ADw-script+AD4-alert(1)+ADw-/script+AD4-
● Fortunately (for the defender) support is dropped by browser vendors.

Byte Order Mark
● U+FEFF
● Appears as: ï»¿ (the UTF-8 bytes EF BB BF read as Latin-1)
● W3C says: BOM has priority over declaration
– IE 10+11 just dropped this insecure behavior, we should expect that it comes back.
– http://www.w3.org/International/tests/html-css/character-encoding/results-basics#precedence
– http://www.w3.org/International/questions/qa-byte-order-mark.en#bomhow
● If you control the first character of a HTML document, then you also control its character set.
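The byte layouts quoted in this excerpt (0xxxxxxx, 110xxxxx 10xxxxxx, 1110xxxx 10xxxxxx 10xxxxxx) can be checked with a few lines of Python. This is only an illustrative sketch, not part of the slides: the helper name `utf8_bit_patterns` and the sample characters are assumptions chosen for the demonstration, and only standard-library `str.encode` / `bytes.decode` calls are used.

```python
def utf8_bit_patterns(text):
    """Return, for each character, its UTF-8 bytes as space-separated 8-bit groups.

    Hypothetical helper for illustration; it relies only on str.encode("utf-8").
    """
    return [
        " ".join(f"{byte:08b}" for byte in ch.encode("utf-8"))
        for ch in text
    ]


# One-, two- and three-byte examples: U+0041, U+00E9, U+20AC.
for ch, bits in zip("Aé€", utf8_bit_patterns("Aé€")):
    print(f"U+{ord(ch):04X}  {bits}")

# The UTF-16 little-endian sample from the slides: every ASCII letter
# is followed by a zero byte.
utf16_sample = "You".encode("utf-16-le")

# The byte order mark U+FEFF is three bytes in UTF-8; decoding those
# bytes as Latin-1 produces the familiar stray characters.
bom_bytes = "\ufeff".encode("utf-8")
bom_misread = bom_bytes.decode("latin-1")
```

The leading bits of the first byte tell a decoder how many continuation bytes (each starting with `10`) follow, which is why a single-byte ASCII character always starts with `0`.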

L2/20-235 (Unihan Ad Hoc Recommendations For

L2/20-235
Title: Unihan Ad Hoc Recommendations for UTC #165 Meeting
Author: Ken Lunde & Unihan Ad Hoc
Date: 2020-09-22

This document provides the Unihan Ad Hoc recommendations for UTC #165 based on a meeting that took place from 6 to 9PM PDT on 2020-09-18, which was attended by Eiso Chan, Lee Collins, John Jenkins, Ken Lunde, William Nelson, Ngô Thanh Nhàn, Stephan Hyeonjun Stiller, and Yifán Wáng via Zoom. John Jenkins and Ken Lunde co-chaired the meeting. The Unihan Ad Hoc reviewed public feedback and documents that were received since UTC #164. Comments are marked in green, and Copy&Paste-ready Recommendations to the UTC are marked in red.

1) UAX #45 / U-Source Public Feedback
The single item of public feedback that is in this section was discussed by the ad hoc, and for convenience, the USource-changes-20200918.txt, Unihan-removals-20200918.txt, and Unihan-additions-20200918.txt (PDF attachments) files include all of the recommended changes based on the changes that were proposed. These recommended changes are also shown inline as part of the Recommendations.

Date/Time: Mon Aug 31 08:29:11 CDT 2020
Name: Ken Lunde
Report Type: Error Report
Opt Subject: Unihan-related feedback

Please consider the following three pieces of Unihan-related feedback:

1) Change 釒 (U+91D2) to 金 (U+91D1) in the IDSes for the following eight U-Source ideographs:
UTC-00102;C;U+2B4B6;167.9;1316.111; ;kMatthews 2051; ⿰釒凾
UTC-00207;X;;167.10;1318.281; ;kSBGY 115.19; ⿰釒冤
UTC-00432;X;;167.11;1321.071; ;kMeyerWempe 3708b; ⿰釒患
UTC-00872;D;U+2B7F0;167.6;1305.211; ;Adobe-Japan1 20240; ⿰釒当
UTC-00889;N;;167.10;1318.281; ;Adobe-CNS1 C+16257; ⿰釒袓
UK-02711;G;U+30F25;167.5;1303.101; ;UTCDoc L2/15-260 1399; ⿰釒卢
UK-02829;UK-2015;UTC-02828;167.7;1308.261; ;UTCDoc L2/15-260 1517; ⿰釒囱
UK-02895;G;U+30F23;167.4;1299.191; ;UTCDoc L2/15-260 1583; ⿰釒㝉

Rationale: 釒 (U+91D2) appears only once in the IDS database, as itself.

Sveučilište Josipa Jurja Strossmayera U Osijeku Filozofski Fakultet U Osijeku Odsjek Za Engleski Jezik I Književnost Uroš Ba

Josip Juraj Strossmayer University of Osijek, Faculty of Humanities and Social Sciences in Osijek, Department of English Language and Literature
Uroš Barjaktarević
Japanese-English Language Contact / Japansko-engleski jezični kontakt
Master's thesis
Course: English in Contact (Engleski jezik u kontaktu)
Mentor: doc. dr. sc. Dubravka Vidaković Erdeljić
Osijek, 2015

Summary
JAPANESE-ENGLISH LANGUAGE CONTACT
The paper examines the language contact between Japanese and English. The first section of the paper defines language contact and the most common contact-induced language phenomena with an emphasis on linguistic borrowing as the dominant contact-induced phenomenon. The classification of linguistic borrowing thereby follows Haugen's distinction between morphemic importation and substitution. The second section of the paper presents the features of the Japanese language in terms of origin, phonology, syntax, morphology, and writing. The third section looks at the history of language contact of the Japanese with the Europeans, starting with the Portuguese and Spaniards, followed by the Dutch, and finally the English. The same section examines three different borrowing routes from English, and contact-induced language phenomena other than linguistic borrowing (bilingualism, code alternation, code-switching, negotiation, and language shift) present in Japanese-English language contact to varying degrees. This section also includes a survey of the motivation and reasons for borrowing from English, as well as the attitudes of native Japanese speakers to these borrowings. The fourth and the central section of the paper looks at the phenomenon of linguistic borrowing, its scope and the various adaptations that occur upon morphemic importation on the phonological, morphological, orthographic, semantic and syntactic levels.