Wikipedia Data Analysis

Total Pages: 16

File Type: PDF, Size: 1020 Kb

Recommended publications

Content Categorization for Contextual Advertising Using Wikipedia

Content Categorization for Contextual Advertising Using Wikipedia. Ingrid Grønlie Guren, August 2, 2015.

Abstract: Automatic categorization of content is an important functionality in online advertising and automated content recommendations, both for ensuring contextual relevancy of placements and for building up behavioral profiles for users that consume the content. Within the advertising domain, the taxonomy tree that content is classified into is defined with some commercial application in mind, so that it reflects the advertising platform’s ad inventory. The nature of the ad inventory and the language of the content might vary across brokers (i.e., the operators of the advertising platforms), so it was of interest to develop a system that can easily bootstrap the development of a well-working classifier.

We developed a dictionary-based classifier based on titles from Wikipedia articles, where the titles represent entries in the dictionary. The idea of the dictionary-based classifier is so simple that it can be understood by users of the program, including those who lack technical experience. Further, it has the advantage that its users can easily expand the dictionary with desirable words for specific advertisement purposes. The process of creating the classifier includes processing all Wikipedia article titles into a form more likely to occur in documents, before each entry is graded to its most descriptive Wikipedia category path. The Wikipedia category paths are further mapped to categories based on the taxonomy of the Interactive Advertising Bureau (IAB), which are categories relevant for advertising.
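The dictionary lookup described in this abstract can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the thesis's implementation: the dictionary entries, the category names, and the helper names `normalize` and `classify` are invented here, and the grading of titles to category paths is reduced to a fixed title-to-category mapping.

```python
import re
from collections import Counter

# Hypothetical dictionary: normalized article title -> advertising category.
# The entries are invented for illustration; the thesis derives them from
# Wikipedia titles, category paths, and the IAB taxonomy.
TITLE_TO_CATEGORY = {
    "electric car": "Automotive",
    "hybrid vehicle": "Automotive",
    "mortgage loan": "Personal Finance",
    "interest rate": "Personal Finance",
}


def normalize(text):
    """Lowercase the text and collapse each run of non-alphanumerics to one space."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def classify(document, dictionary=TITLE_TO_CATEGORY):
    """Return (category, hit_count) for the best-matching category, or None."""
    text = normalize(document)
    hits = Counter()
    for title, category in dictionary.items():
        # Match the title only as a whole phrase, not inside longer words.
        pattern = rf"(?<!\S){re.escape(title)}(?!\S)"
        hits[category] += len(re.findall(pattern, text))
    if not hits or max(hits.values()) == 0:
        return None
    return hits.most_common(1)[0]
```

For example, `classify("The new electric car beats any hybrid vehicle on interest rate deals.")` returns `("Automotive", 2)`. Extending the classifier for a specific advertising purpose amounts to adding entries to the dictionary, which is the property the abstract highlights.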

System Integration in Web 2.0 Environment

Masaryk University, Faculty of Informatics. Ph.D. Thesis: System Integration in Web 2.0 Environment. Pavel Drášil. Supervisor: doc. RNDr. Tomáš Pitner, Ph.D. January 2011, Brno, Czech Republic.

Except where indicated otherwise, this thesis is my own original work. Pavel Drášil, Brno, January 2011.

Acknowledgements: There are many people who have influenced my life and the path I have chosen in it. They have all, in their own way, made this thesis and the work described herein possible. First of all, I have to thank my supervisor, assoc. prof. Tomáš Pitner. Anytime we met, I received not only much valuable advice, but also a great deal of enthusiasm, understanding, appreciation, support and trust. Further, I have to thank my colleague Tomáš Ludík for lots of fruitful discussions and constructive criticism during the last year. Without any doubt, this thesis would not have come into existence without the love, unceasing support and patience of the people I hold deepest in my heart: my parents and my beloved girlfriend Míša. I am more grateful to them than I can express.

Abstract: During the last decade, Service-Oriented Architecture (SOA) concepts have matured into a prominent architectural style for enterprise application development and integration. At the same time, Web 2.0 became a predominant paradigm in the web environment. Even if the ideological, technological and business bases of the two are quite different, significant similarities can still be found, especially in their view of services as basic building blocks and of service composition as a way of creating complex applications. Inspired by this finding, this thesis aims to contribute to the state of the art in designing service-based systems by exploring the possibilities and potential of bridging the SOA and Web 2.0 worlds.

The Culture of Wikipedia

Good Faith Collaboration: The Culture of Wikipedia. Joseph Michael Reagle Jr. Foreword by Lawrence Lessig. The MIT Press, Cambridge, MA. Web edition, Copyright © 2011 by Joseph Michael Reagle Jr. CC-NC-SA 3.0.

Wikipedia's style of collaborative production has been lauded, lambasted, and satirized. Despite unease over its implications for the character (and quality) of knowledge, Wikipedia has brought us closer than ever to a realization of the centuries-old pursuit of a universal encyclopedia. Good Faith Collaboration: The Culture of Wikipedia is a rich ethnographic portrayal of Wikipedia's historical roots, collaborative culture, and much debated legacy.

Contents: Foreword; Preface to the Web Edition; Praise for Good Faith Collaboration; Preface; Extended Table of Contents; 1. Nazis and Norms; 2. The Pursuit of the Universal Encyclopedia; 3. Good Faith Collaboration; 4. The Puzzle of Openness.

Praise for Good Faith Collaboration:

"Reagle offers a compelling case that Wikipedia's most fascinating and unprecedented aspect isn't the encyclopedia itself — rather, it's the collaborative culture that underpins it: brawling, self-reflexive, funny, serious, and full-tilt committed to the project, even if it means setting aside personal differences. Reagle's position as a scholar and a member of the community makes him uniquely situated to describe this culture." (Cory Doctorow, Boing Boing)

"Reagle provides ample data regarding the everyday practices and cultural norms of the community which collaborates to produce Wikipedia. His rich research and nuanced appreciation of the complexities of cultural digital media research are well presented.

An Analysis of Contributions to Wikipedia from Tor

Are anonymity-seekers just like everybody else? An analysis of contributions to Wikipedia from Tor

Chau Tran (Department of Computer Science & Engineering, New York University, New York, USA); Kaylea Champion (Department of Communication, University of Washington, Seattle, USA); Andrea Forte (College of Computing & Informatics, Drexel University, Philadelphia, USA); Benjamin Mako Hill (Department of Communication, University of Washington, Seattle, USA); Rachel Greenstadt (Department of Computer Science & Engineering, New York University, New York, USA)

Abstract: User-generated content sites routinely block contributions from users of privacy-enhancing proxies like Tor because of a perception that proxies are a source of vandalism, spam, and abuse. Although these blocks might be effective, collateral damage in the form of unrealized valuable contributions from anonymity seekers is invisible. One of the largest and most important user-generated content sites, Wikipedia, has attempted to block contributions from Tor users since as early as 2005. We demonstrate that these blocks have been imperfect and that thousands of attempts to edit on Wikipedia through Tor have been successful. We draw upon several data sources and analytical techniques to measure and describe the history of Tor editing on Wikipedia over time and to compare contributions from Tor users to those from other groups of Wikipedia users. Our analysis suggests that although Tor users who slip through Wikipedia’s ban contribute content that is more likely to be reverted and to revert others, their contributions are otherwise similar in quality to those from other unregistered participants and to the initial contributions of registered users.

Fig. 1. Screenshot of the page a user is shown when they attempt to edit the Wikipedia article on “Privacy” while using Tor.

Initiativen Roger Cloes Infobrief

Research Services (Wissenschaftliche Dienste) of the German Bundestag. Infobrief: Development and significance of knowledge resources contributed to the Internet by volunteers, with particular regard to the wiki initiatives. Roger Cloes. WD 10 - 3010 - 074/11.

Authors: Dr. Roger Cloes / Tim Moritz Hector (intern). Reference number: WD 10 - 3010 - 074/11. Completed: 1 July 2011. Department: WD 10: Culture, Media and Sport.

Papers and other information products of the Research Services do not reflect the views of the German Bundestag, any of its bodies, or the Bundestag administration. Rather, they are the professional responsibility of their authors and of the department heads. The German Bundestag reserves the rights of publication and distribution. Both require the consent of the head of Department W, Platz der Republik 1, 11011 Berlin.

Summary: Knowledge contributed to the Internet by volunteers plays an increasingly prominent role in the age of the so-called "participatory web" (Mitmachweb). Wikis above all, and Wikipedia in particular, have become an indispensable element of the Internet. No other comparable knowledge resource on the Internet reaches Wikipedia's access figures, not even resources that are freely accessible and financed by taxes, fees, or advertising revenue, or given away as free samples as part of a business model. This is a phenomenon whose scale receives scarcely any attention against the background of the copyright debate and of the justification of state protection as a precondition for the creation of intellectual goods. Relatively low distribution costs on the Internet and low or no capital investment requirements favor this development.

Reliability of Wikipedia

The reliability of Wikipedia (primarily of the English language version), compared to other encyclopedias and more specialized sources, is assessed in many ways, including statistically, through comparative review, analysis of the historical patterns, and strengths and weaknesses inherent in the editing process unique to Wikipedia. [1] Because Wikipedia is open to anonymous and collaborative editing, assessments of its reliability usually include examinations of how quickly false or misleading information is removed. An early study conducted by IBM researchers in 2003, two years following Wikipedia's establishment, found that "vandalism is usually repaired extremely quickly — so quickly that most users will never see its effects"[2] and concluded that Wikipedia had "surprisingly effective self-healing capabilities".[3] A 2007 peer-reviewed study stated that "42% of damage is repaired almost immediately... Nonetheless, there are still hundreds of millions of damaged views."[4]

[Image caption: Vandalism of a Wikipedia article]

Several studies have been done to assess the reliability of Wikipedia. A notable early study in the journal Nature suggested that in 2005, Wikipedia scientific articles came close to the level of accuracy in Encyclopædia Britannica and had a similar rate of "serious errors".[5] This study was disputed by Encyclopædia Britannica.[6] By 2010, reviewers in medical and scientific fields such as toxicology, cancer research and drug information, reviewing Wikipedia against professional and peer-reviewed sources, found that Wikipedia's depth and coverage were of a very high standard, often comparable in coverage to physician databases and considerably better than well known reputable national media outlets.

Detecting Undisclosed Paid Editing in Wikipedia

Detecting Undisclosed Paid Editing in Wikipedia. Nikesh Joshi, Francesca Spezzano, Mayson Green, and Elijah Hill. Computer Science Department, Boise State University, Boise, Idaho, USA. {nikeshjoshi,maysongreen,elijahhill}@u.boisestate.edu

ABSTRACT: Wikipedia, the free and open-collaboration based online encyclopedia, has millions of pages that are maintained by thousands of volunteer editors. As per Wikipedia’s fundamental principles, pages on Wikipedia are written with a neutral point of view and maintained by volunteer editors for free with well-defined guidelines in order to avoid or disclose any conflict of interest. However, there have been several known incidents where editors intentionally violate such guidelines in order to get paid (or even extort money) for maintaining promotional spam articles without disclosing such. In this paper, we address for the first time the problem of identifying undisclosed paid articles in Wikipedia. We propose a machine learning-based framework using a set of features based on both ...

1 INTRODUCTION: Wikipedia is the free online encyclopedia based on the principle of open collaboration; for the people by the people. Anyone can add and edit almost any article or page. However, volunteers should follow a set of guidelines when editing Wikipedia. The purpose of Wikipedia is “to provide the public with articles that summarize accepted knowledge, written neutrally and sourced reliably” [1] and the encyclopedia should not be considered as a platform for advertising and self-promotion. Wikipedia’s guidelines strongly discourage any form of conflict-of-interest (COI) editing and require editors to disclose any COI contribution. Paid editing is a form of COI editing and refers to editing Wikipedia (in the majority of the cases for promotional purposes) in exchange for compensation.

PHP Credits Configuration

PHP Version 5.0.1 www.entropy.ch Release 1

System: Darwin G4-500.local 7.7.0 Darwin Kernel Version 7.7.0: Sun Nov 7 16:06:51 PST 2004; root:xnu/xnu-517.9.5.obj~1/RELEASE_PPC Power Macintosh

Build Date: Aug 13 2004 15:03:31

Configure Command: './configure' '--prefix=/usr/local/php5' '--with-config-file-path=/usr/local/php5/lib' '--with-apxs' '--with-iconv' '--with-openssl=/usr' '--with-zlib=/usr' '--with-mysql=/Users/marc/cvs/entropy/php-module/src/mysql-standard-*' '--with-mysqli=/usr/local/mysql/bin/mysql_config' '--with-xsl=/usr/local/php5' '--with-pdflib=/usr/local/php5' '--with-pgsql=/Users/marc/cvs/entropy/php-module/build/postgresql-build' '--with-gd' '--with-jpeg-dir=/usr/local/php5' '--with-png-dir=/usr/local/php5' '--with-zlib-dir=/usr' '--with-freetype-dir=/usr/local/php5' '--with-t1lib=/usr/local/php5' '--with-imap=../imap-2002d' '--with-imap-ssl=/usr' '--with-gettext=/usr/local/php5' '--with-ming=/Users/marc/cvs/entropy/php-module/build/ming-build' '--with-ldap' '--with-mime-magic=/usr/local/php5/etc/magic.mime' '--with-iodbc=/usr' '--with-xmlrpc' '--with-expat-dir=/usr/local/php5' '--with-iconv-dir=/usr' '--with-curl=/usr/local/php5' '--enable-exif' '--enable-wddx' '--enable-soap' '--enable-sqlite-utf8' '--enable-ftp' '--enable-sockets' '--enable-dbx' '--enable-dbase' '--enable-mbstring' '--enable-calendar' '--with-bz2=/usr' '--with-mcrypt=/usr/local/php5' '--with-mhash=/usr/local/php5' '--with-mssql=/usr/local/php5' '--with-fbsql=/Users/marc/cvs/entropy/php-module/build/frontbase-build/Library/FrontBase'

Web Application Development with PHP 4.0

Web Application Development with PHP 4.0

Other Books by New Riders Publishing:
- MySQL, Paul DuBois, 0-7357-0921-1
- A UML Pattern Language, Paul Evitts, 1-57870-118-X
- Constructing Superior Software, Paul Clements, 1-57870-147-3
- Python Essential Reference, David Beazley, 0-7357-0901-7
- KDE Application Development, Uwe Thiem, 1-57870-201-1
- Developing Linux Applications with GTK+ and GDK, Eric Harlow, 0-7357-0021-4
- GTK+/Gnome Application Development, Havoc Pennington, 0-7357-0078-8
- DCE/RPC over SMB: Samba and Windows NT Domain Internals, Luke Leighton, 1-57870-150-3
- Linux Firewalls, Robert Ziegler, 0-7357-0900-9
- Linux Essential Reference, Ed Petron, 0-7357-0852-5
- Linux System Administration, Jim Dennis, M. Carling, et al, 1-556205-934-3

Web Application Development with PHP 4.0. Tobias Ratschiller, Till Gerken. With contributions by Zeev Suraski and Andi Gutmans, Zend Technologies, LTD. 201 West 103rd Street, Indianapolis, Indiana 46290.

Web Application Development with PHP 4.0. By: Tobias Ratschiller and Till Gerken. Copyright © 2000 by New Riders Publishing. FIRST EDITION: July, 2000. All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without written permission from the publisher, except for the inclusion of brief quotations in a review.

Publisher: David Dwyer. Executive Editor: Al Valvano. Managing Editor: Gina Brown.

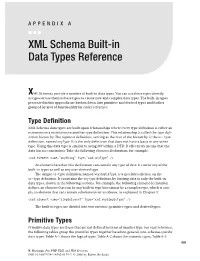

XML Schema Built-In Data Types Reference

APPENDIX A: XML Schema Built-in Data Types Reference

XML Schemas provide a number of built-in data types. You can use these types directly as types or use them as base types to create new and complex data types. The built-in types presented in this appendix are broken down into primitive and derived types and further grouped by area of functionality for easier reference.

Type Definition

XML Schema data types are built upon relationships where every type definition is either an extension or a restriction to another type definition. This relationship is called the type definition hierarchy. The topmost definition, serving as the root of the hierarchy, is the ur-type definition, named anyType. It is the only definition that does not have a basis in any other type. Using this data type is similar to using ANY within a DTD. It effectively means that the data has no constraints. Take the following element declaration, for example: <xsd:element name="anything" type="xsd:anyType" />

An element based on this declaration can contain any type of data. It can be any of the built-in types as well as any user-derived type. The simple ur-type definition, named anySimpleType, is a special restriction on the ur-type definition. It constrains the anyType definition by limiting data to only the built-in data types, shown in the following sections. For example, the following element declaration defines an element that can be any built-in type but cannot be a complex type, which is simply an element that can contain subelements or attributes, as explained in Chapter 3: <xsd:element name="simplelement" type="xsd:anySimpleType" />

The built-in types are divided into two varieties: primitive types and derived types.
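The two declarations quoted in this excerpt only parse when they sit inside a schema element that binds the `xsd` prefix. The Python sketch below wraps them in a minimal schema and reads back the declared types with the standard library. It checks well-formedness only and performs no schema validation; the wrapper document and the helper name `declared_types` are assumptions added for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace URI of XML Schema, bound to the "xsd" prefix below.
XSD_NS = "http://www.w3.org/2001/XMLSchema"

# Minimal wrapper schema around the excerpt's two element declarations.
SCHEMA = f"""<xsd:schema xmlns:xsd="{XSD_NS}">
  <xsd:element name="anything" type="xsd:anyType" />
  <xsd:element name="simplelement" type="xsd:anySimpleType" />
</xsd:schema>"""


def declared_types(schema_text):
    """Map each top-level element name to the type named in its declaration."""
    root = ET.fromstring(schema_text)
    return {
        element.get("name"): element.get("type")
        for element in root.findall(f"{{{XSD_NS}}}element")
    }
```

Calling `declared_types(SCHEMA)` returns `{"anything": "xsd:anyType", "simplelement": "xsd:anySimpleType"}`. Checking instance documents against these declarations would need a schema-aware validator, which the standard library does not provide.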

WMF Advisory Board Beesley Starling

Advisory Board interviews conducted at Wikimania: Angela Beesley Starling, August 24-28, 2009

Key themes:
- Need for experimentation/innovation within Wikimedia
- Need for prioritization and rationalization of Wiki projects
- Need for renewed efforts towards the charitable mission

What is your history with Wikimedia?
- Was on the first Board of Trustees, joined in 2003. Stepped down from that role and became Chair of the Advisory Board when that was set up. Husband is a MediaWiki developer on staff at Wikimedia (Tim Starling). Now working with Jimmy on Wikia.

What are your major concerns in thinking about Wikimedia’s development over the next five years?
- Concern that as the WMF gets bigger, the connection to the community will be weaker.
- Big software challenges; and it is harder to try out new things since the site is so big.
  - Difficulty trying out new things; concerned that the community will object to change.
  - We need new processes, a way for the Foundation to say they need to try something out. For example, with flagged revisions, it was not clear how or whether it would get rolled out, when it might go live, who would be responsible for it, and whether it would be for a trial period only, and if so what metrics would determine success of the trial.
  - Too few technical staff at Wikipedia, and too few people to review the code of volunteers. Major changes, particularly user-facing ones, are less likely to happen. The tech staff is focused on management of volunteers, filling in other bits around the edges.

Towards Content-Driven Reputation for Collaborative Code Repositories

University of Pennsylvania ScholarlyCommons. Departmental Papers (CIS), Department of Computer & Information Science. 8-28-2012.

Towards Content-Driven Reputation for Collaborative Code Repositories. Andrew G. West, University of Pennsylvania; Insup Lee, University of Pennsylvania.

Follow this and additional works at: https://repository.upenn.edu/cis_papers. Part of the Databases and Information Systems Commons, Graphics and Human Computer Interfaces Commons, and the Software Engineering Commons.

Recommended Citation: Andrew G. West and Insup Lee, "Towards Content-Driven Reputation for Collaborative Code Repositories", 8th International Symposium on Wikis and Open Collaboration (WikiSym '12), 13.1-13.4. August 2012. http://dx.doi.org/10.1145/2462932.2462950

Eighth International Symposium on Wikis and Open Collaboration, Linz, Austria, August 2012. This paper is posted at ScholarlyCommons: https://repository.upenn.edu/cis_papers/750

Abstract: As evidenced by SourceForge and GitHub, code repositories now integrate Web 2.0 functionality that enables global participation with minimal barriers-to-entry. To prevent detrimental contributions enabled by crowdsourcing, reputation is one proposed solution. Fortunately, this is an issue that has been addressed in analogous version control systems such as the *wiki* for natural language content. The WikiTrust algorithm ("content-driven reputation"), while developed and evaluated in wiki environments, operates under a possibly shared collaborative assumption: actions that "survive" subsequent edits are reflective of good authorship. In this paper we examine WikiTrust's ability to measure author quality in collaborative code development. We first define a mapping from repositories to wiki environments and use it to evaluate a production SVN repository with 92,000 updates.
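The survival assumption stated in this abstract can be illustrated with a deliberately simplified Python sketch. It is not the WikiTrust algorithm, which the excerpt does not specify: here each line is credited to the first author whose update contains it, and an author's score is the share of those lines still present in the latest revision. The function name `survival_scores` and the revision format are assumptions made for this example.

```python
from collections import defaultdict


def survival_scores(revisions):
    """Score authors by the share of lines they introduced that survive.

    `revisions` is a chronological list of (author, lines) pairs, where
    `lines` is the full file content after that author's update.
    Authors who never introduced a new line are omitted from the result.
    """
    first_author = {}         # line -> author who first introduced it
    added = defaultdict(int)  # author -> number of distinct lines introduced
    for author, lines in revisions:
        for line in set(lines):
            if line not in first_author:
                first_author[line] = author
                added[author] += 1

    final_lines = set(revisions[-1][1]) if revisions else set()
    survived = defaultdict(int)  # author -> introduced lines still present
    for line in final_lines:
        survived[first_author[line]] += 1

    return {author: survived[author] / count for author, count in added.items()}
```

With a history in which alice adds two lines, bob adds two more, and carol rewrites one of alice's lines and deletes one of bob's, alice and bob each score 0.5 and carol scores 1.0. A realistic measure would also have to handle moved or reformatted code and weigh who performed the later edits, which this sketch ignores.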