ORES: Scalable, Community-Driven, Auditable, Machine Learning-as-a-Service. In this presentation, we lay out ideas

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Analysis of Contributions to Wikipedia from Tor

Are anonymity-seekers just like everybody else? An analysis of contributions to Wikipedia from Tor. Chau Tran (Department of Computer Science & Engineering, New York University, New York, USA; [email protected]), Kaylea Champion (Department of Communication, University of Washington, Seattle, USA; [email protected]), Andrea Forte (College of Computing & Informatics, Drexel University, Philadelphia, USA; [email protected]), Benjamin Mako Hill (Department of Communication, University of Washington, Seattle, USA; [email protected]), Rachel Greenstadt (Department of Computer Science & Engineering, New York University, New York, USA; [email protected]). Abstract: User-generated content sites routinely block contributions from users of privacy-enhancing proxies like Tor because of a perception that proxies are a source of vandalism, spam, and abuse. Although these blocks might be effective, collateral damage in the form of unrealized valuable contributions from anonymity seekers is invisible. One of the largest and most important user-generated content sites, Wikipedia, has attempted to block contributions from Tor users since as early as 2005. We demonstrate that these blocks have been imperfect and that thousands of attempts to edit on Wikipedia through Tor have been successful. We draw upon several data sources and analytical techniques to measure and describe the history of Tor editing on Wikipedia over time and to compare contributions from Tor users to those from other groups of Wikipedia users. Our analysis suggests that although Tor users who slip through Wikipedia’s ban contribute content that is more likely to be reverted and to revert others, their contributions are otherwise similar in quality to those from other unregistered participants and to the initial contributions of registered users. Fig. 1. Screenshot of the page a user is shown when they attempt to edit the Wikipedia article on “Privacy” while using Tor.

Detecting Undisclosed Paid Editing in Wikipedia

Detecting Undisclosed Paid Editing in Wikipedia. Nikesh Joshi, Francesca Spezzano, Mayson Green, and Elijah Hill, Computer Science Department, Boise State University, Boise, Idaho, USA. [email protected] {nikeshjoshi,maysongreen,elijahhill}@u.boisestate.edu ABSTRACT: Wikipedia, the free and open-collaboration based online encyclopedia, has millions of pages that are maintained by thousands of volunteer editors. As per Wikipedia’s fundamental principles, pages on Wikipedia are written with a neutral point of view and maintained by volunteer editors for free with well-defined guidelines in order to avoid or disclose any conflict of interest. However, there have been several known incidents where editors intentionally violate such guidelines in order to get paid (or even extort money) for maintaining promotional spam articles without disclosing such. In this paper, we address for the first time the problem of identifying undisclosed paid articles in Wikipedia. We propose a machine learning-based framework using a set of features based on both 1 INTRODUCTION: Wikipedia is the free online encyclopedia based on the principle of open collaboration; for the people by the people. Anyone can add and edit almost any article or page. However, volunteers should follow a set of guidelines when editing Wikipedia. The purpose of Wikipedia is “to provide the public with articles that summarize accepted knowledge, written neutrally and sourced reliably” and the encyclopedia should not be considered as a platform for advertising and self-promotion. Wikipedia’s guidelines strongly discourage any form of conflict-of-interest (COI) editing and require editors to disclose any COI contribution. Paid editing is a form of COI editing and refers to editing Wikipedia (in the majority of the cases for promotional purposes) in exchange for compensation.

Critical Point of View: a Wikipedia Reader

Critical Point of View: A Wikipedia Reader. INC Reader #7. Editors: Geert Lovink and Nathaniel Tkacz. Editorial Assistance: Ivy Roberts, Morgan Currie. Copy-Editing: Cielo Lutino. Design: Katja van Stiphout. Cover Image: Ayumi Higuchi. Printer: Ten Klei Groep, Amsterdam. Publisher: Institute of Network Cultures, Amsterdam 2011. ISBN: 978-90-78146-13-1. Contact: Institute of Network Cultures, phone: +3120 5951866, fax: +3120 5951840, email: [email protected], web: http://www.networkcultures.org. Order a copy of this book by sending an email to: [email protected]. A pdf of this publication can be downloaded freely at: http://www.networkcultures.org/publications. Join the Critical Point of View mailing list at: http://www.listcultures.org. Supported by: The School for Communication and Design at the Amsterdam University of Applied Sciences (Hogeschool van Amsterdam DMCI), the Centre for Internet and Society (CIS) in Bangalore and the Kusuma Trust. Thanks to Johanna Niesyto (University of Siegen), Nishant Shah and Sunil Abraham (CIS Bangalore), Sabine Niederer and Margreet Riphagen (INC Amsterdam) for their valuable input and editorial support. Thanks to Foundation Democracy and Media, Mondriaan Foundation and the Public Library Amsterdam (Openbare Bibliotheek Amsterdam) for supporting the CPOV events in Bangalore, Amsterdam and Leipzig. (http://networkcultures.org/wpmu/cpov/) Special thanks to all the authors for their contributions and to Cielo Lutino, Morgan Currie and Ivy Roberts for their careful copy-editing.

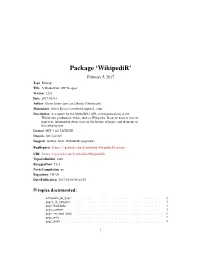

Package 'WikipediR'

Package ‘WikipediR’, February 5, 2017. Type: Package. Title: A MediaWiki API Wrapper. Version: 1.5.0. Date: 2017-02-04. Author: Oliver Keyes [aut, cre], Brock Tilbert [ctb]. Maintainer: Oliver Keyes <[email protected]>. Description: A wrapper for the MediaWiki API, aimed particularly at the Wikimedia 'production' wikis, such as Wikipedia. It can be used to retrieve page text, information about users or the history of pages, and elements of the category tree. License: MIT + file LICENSE. Imports: httr, jsonlite. Suggests: testthat, knitr, WikidataR, pageviews. BugReports: https://github.com/Ironholds/WikipediR/issues. URL: https://github.com/Ironholds/WikipediR/. VignetteBuilder: knitr. RoxygenNote: 5.0.1. NeedsCompilation: no. Repository: CRAN. Date/Publication: 2017-02-05 08:44:55.

R topics documented: categories_in_page, pages_in_category, page_backlinks, page_content, page_external_links, page_info, page_links, query, random_page, recent_changes, revision_content, revision_diff, user_contributions, user_information, WikipediR.

categories_in_page: Retrieves categories associated with a page. Description: Retrieves categories associated with a page (or list of pages) on a MediaWiki instance. Usage: categories_in_page(language = NULL, project = NULL, domain = NULL, pages, properties = c("sortkey", "timestamp", "hidden"), limit = 50, show_hidden = FALSE, clean_response = FALSE, ...). Arguments: language: The language code of the project you wish to query, if appropriate. project: The project you wish to query ("wikiquote"), if appropriate. Should be provided in conjunction with language. domain: As an alternative to a language and project combination, you can also provide a domain ("rationalwiki.org") to the URL constructor, allowing for the querying of non-Wikimedia MediaWiki instances. pages: A vector of page titles, with or without spaces, that you want to retrieve categories for.
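The arguments documented for categories_in_page correspond closely to parameters of a MediaWiki API category query. As a rough illustration only (this is not WikipediR's implementation), the Python sketch below builds the request URL that such a call could plausibly correspond to. The helper name `build_categories_query`, the `/w/api.php` endpoint path, and the mapping of `show_hidden` onto the API's `clshow` parameter are assumptions made for this example. No request is sent.

```python
from urllib.parse import urlencode


def build_categories_query(pages, language=None, project=None, domain=None,
                           properties=("sortkey", "timestamp", "hidden"),
                           limit=50, show_hidden=False):
    """Build (but do not send) a MediaWiki API URL listing categories of pages.

    Hypothetical helper: argument names mirror the documented R signature,
    but the URL layout and parameter mapping are assumptions.
    """
    if domain is None:
        # A language/project pair such as ("en", "wikipedia") names a Wikimedia wiki.
        if language is None or project is None:
            raise ValueError("provide either domain, or both language and project")
        domain = f"{language}.{project}.org"
    params = {
        "action": "query",
        "format": "json",
        "prop": "categories",
        "titles": "|".join(pages),        # the API takes pipe-separated titles
        "clprop": "|".join(properties),   # extra properties to return per category
        "cllimit": limit,                 # maximum number of categories returned
        # Assumed mapping: restrict results to hidden or to non-hidden categories.
        "clshow": "hidden" if show_hidden else "!hidden",
    }
    # Assumption: the wiki serves its API at /w/api.php, as Wikimedia wikis do.
    return f"https://{domain}/w/api.php?" + urlencode(params)


url = build_categories_query(["Barack Obama", "Tor"],
                             language="en", project="wikipedia")
print(url)
```

Passing `domain="rationalwiki.org"` instead of a language and project mirrors the documented alternative for non-Wikimedia MediaWiki instances, although such sites may expose their API under a different path.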

The Production and Consumption of Quality Content in Peer Production Communities

The Production and Consumption of Quality Content in Peer Production Communities. A dissertation submitted to the Faculty of the University of Minnesota by Morten Warncke-Wang in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Loren Terveen and Brent Hecht. December, 2016. Copyright © 2016 M. Warncke-Wang. All rights reserved. Portions copyright © Association for Computing Machinery (ACM) and is used in accordance with the ACM Author Agreement. Portions copyright © Association for the Advancement of Artificial Intelligence (AAAI) and is used in accordance with the AAAI Distribution License. Map tiles used in illustrations are from Stamen Design and published under a CC BY 3.0 license, and from OpenStreetMap and published under the ODbL. Dedicated in memory of John T. Riedl, Ph.D.: professor, advisor, mentor, squash player, friend. Acknowledgements: This work would not be possible without the help and support of a great number of people. I would first like to thank my family, in particular my parents Kari and Hans who have had to see yet another of their offspring fly off to the United States for a prolonged period of time. They have supported me in pursuing my dreams even when it meant I would be away from them. I also thank my brother Hans and his fiancée Anna for helping me get settled in Minnesota, solving everyday problems, and for the good company whenever I stopped by. Likewise, I thank my brother Carl and his wife Bente whom I have only been able to see ever so often, but it has always been moments I have cherished.

Community, Cooperation, and Conflict in Wikipedia

Community, Cooperation, and Conflict in Wikipedia. John Riedl, GroupLens Research, University of Minnesota. UC Irvine 2012. Wikipedia & the “Editor Decline”: Number of Active Editors. Vision: Community Health; Humans + Computers; Synthetic vs. Analytic Research. Readership vs. Creation Date. Search Engine Test vs. Creation Date. Community Health: We can tell when a community has failed. Our goal is to know before it fails, and to know what to do to prevent failure. Reader; Contributor; Collaborator; Leader. Creating, Destroying, and Restoring Value in Wikipedia (Group 2007): Reid Priedhorsky, Jilin Chen, Shyong (Tony) K. Lam, Katherine Panciera, Loren Terveen, John Riedl. Probability of View Damaged. WP:Clubhouse? An Exploration of Wikipedia’s Gender Imbalance (WikiSym 2011): Shyong (Tony) K. Lam (University of Minnesota), Anuradha Uduwage (University of Minnesota), Zhenhua Dong (Nankai University), Shilad Sen (Macalester College), David R. Musicant (Carleton College), Loren Terveen (University of Minnesota), John Riedl (University of Minnesota). Gender Gap – Overall: 2009 editor cohort – females are 16% of known-gender editors [1 in 6] and 9% of known-gender edits [1 in 11]. H1a: Gap-Exists. Gender Gap – Historical: Is Wikipedia’s gender gap closing? Many tech gender gaps are narrowing (Internet, smartphones, online games) and some have reversed (blogging, Facebook, Twitter). Gender Gap Over Time. Movie Gender – Article Length: Female-interest topics = Lower quality articles. © 2001 Lionsgate © 1982 Orion Pictures © 1995 Warner Bros.

Wikipedia Data Analysis

Wikipedia data analysis. Introduction and practical examples. Felipe Ortega. June 29, 2012. Abstract: This document offers a gentle and accessible introduction to conducting quantitative analysis with Wikipedia data. The large size of many Wikipedia communities, the fact that (for many languages) they already account for more than a decade of activity, and the precise level of detail of the records accounting for this activity represent an unparalleled opportunity for researchers to conduct interesting studies for a wide variety of scientific disciplines. The focus of this introductory guide is on data retrieval and data preparation. Many research works have explained in detail numerous examples of Wikipedia data analysis, including numerical and graphical results. However, in many cases very little attention is paid to explaining the data sources that were used in these studies, how these data were prepared before the analysis, and what additional information is available to extend the analysis in future studies. This is rather unfortunate since, in general, data retrieval and data preparation usually consume no less than 75% of the total time devoted to quantitative analysis, especially with very large datasets. As a result, the main goal of this document is to fill this gap, providing detailed descriptions of available data sources in Wikipedia, and practical methods and tools to prepare these data for the analysis. This is the focus of the first part, dealing with data retrieval and preparation. It also presents an overview of useful existing tools and frameworks to facilitate this process. The second part includes a description of a general methodology to undertake quantitative analysis with Wikipedia data, and open source tools that can serve as building blocks for researchers to implement their own analysis process.

Operationalizing Conflict and Cooperation Between Automated Software Agents in Wikipedia: a Replication and Expansion of “Even Good Bots Fight”

Operationalizing Conflict and Cooperation between Automated Software Agents in Wikipedia: A Replication and Expansion of “Even Good Bots Fight”. R. Stuart Geiger, Berkeley Institute for Data Science, University of California, Berkeley; Aaron Halfaker, Wikimedia Research, Wikimedia Foundation. This paper replicates, extends, and refutes conclusions made in a study published in PLoS ONE (“Even Good Bots Fight”), which claimed to identify substantial levels of conflict between automated software agents (or bots) in Wikipedia using purely quantitative methods. By applying an integrative mixed-methods approach drawing on trace ethnography, we place these alleged cases of bot-bot conflict into context and arrive at a better understanding of these interactions. We found that overwhelmingly, the interactions previously characterized as problematic instances of conflict are typically better characterized as routine, productive, even collaborative work. These results challenge past work and show the importance of qualitative/quantitative collaboration. In our paper, we present quantitative metrics and qualitative heuristics for operationalizing bot-bot conflict. We give thick descriptions of kinds of events that present as bot-bot reverts, helping distinguish conflict from non-conflict. We computationally classify these kinds of events through patterns in edit summaries. By interpreting found/trace data in the socio-technical contexts in which people give that data meaning, we gain more from quantitative measurements, drawing deeper understandings about the governance of algorithmic systems in Wikipedia. We have also released our data collection, processing, and analysis pipeline, to facilitate computational reproducibility of our findings and to help other researchers interested in conducting similar mixed-method scholarship in other platforms and contexts.

Language-agnostic Topic Classification for Wikipedia

Language-agnostic Topic Classification for Wikipedia. Isaac Johnson ([email protected]), Martin Gerlach ([email protected]), Diego Sáez-Trumper ([email protected]), Wikimedia Foundation, United States. ABSTRACT: A major challenge for many analyses of Wikipedia dynamics (e.g., imbalances in content quality, geographic differences in what content is popular, what types of articles attract more editor discussion) is grouping the very diverse range of Wikipedia articles into coherent, consistent topics. This problem has been addressed using various approaches based on Wikipedia’s category network, WikiProjects, and external taxonomies. However, these approaches have always been limited in their coverage: typically, only a small subset of articles can be classified, or the method cannot be applied across (the more than 300) languages on Wikipedia. In this paper, we propose a language-agnostic approach based on the links in an ... are attracting the interest of editors or readers? (especially across the different language editions). Wikipedia itself has a number of editor-curated annotation systems that bring some order to all of this content. Most directly perhaps is the category network, but content can also be categorized based on properties stored in Wikidata, tagging by WikiProjects (groups of editors who focus on a specific topic), or inclusion of templates such as infoboxes. While these annotation systems are quite powerful and extensive, they ultimately are human-generated and semi-structured and thus have many edge cases and under-coverage in languages or communities that do not have editors who can maintain these annotations (see [10]).

Wikipedia @ 20

Wikipedia @ 20: Stories of an Incomplete Revolution. Edited by Joseph Reagle and Jackie Koerner. The MIT Press, Cambridge, Massachusetts; London, England. © 2020 Massachusetts Institute of Technology. This work is subject to a Creative Commons CC BY-NC 4.0 license. Subject to such license, all rights are reserved. The open access edition of this book was made possible by generous funding from Knowledge Unlatched, Northeastern University Communication Studies Department, and Wikimedia Foundation. This book was set in Stone Serif and Stone Sans by Westchester Publishing Services. Library of Congress Cataloging-in-Publication Data. Names: Reagle, Joseph, editor. | Koerner, Jackie, editor. Title: Wikipedia @ 20 : stories of an incomplete revolution / edited by Joseph M. Reagle and Jackie Koerner. Other titles: Wikipedia at 20. Description: Cambridge, Massachusetts : The MIT Press, [2020] | Includes bibliographical references and index. Identifiers: LCCN 2020000804 | ISBN 9780262538176 (paperback). Subjects: LCSH: Wikipedia--History. Classification: LCC AE100 .W54 2020 | DDC 030--dc23. LC record available at https://lccn.loc.gov/2020000804. Contents: Preface. Introduction: Connections, by Joseph Reagle and Jackie Koerner. I Hindsight: 1 The Many (Reported) Deaths of Wikipedia, by Joseph Reagle; 2 From Anarchy to Wikiality, Glaring Bias to Good Cop: Press Coverage of Wikipedia’s First Two Decades, by Omer Benjakob and Stephen Harrison; 3 From Utopia to Practice and Back, by Yochai Benkler; 4 An Encyclopedia with Breaking News, by Brian Keegan; 5 Paid with Interest: COI Editing and Its Discontents, by William Beutler. II Connection: 6 Wikipedia and Libraries, by Phoebe Ayers; 7 Three Links: Be Bold, Assume Good Faith, and There Are No Firm Rules, by Rebecca Thorndike-Breeze, Cecelia A.

Criticism of Wikipedia from Wikipidia.Pdf

Criticism of Wikipedia from Wikipidia. For a list of criticisms of Wikipedia, see Wikipedia:Criticisms. See also Wikipedia:Replies to common objections. Two radically different versions of a Wikipedia biography, presented to the public within days of each other: Wikipedia's susceptibility to edit wars and bias is one of the issues raised by Wikipedia critics. http://medicalexposedownloads.com/PDF/Criticism%20of%20Wikipedia%20from%20Wikipidia.pdf http://medicalexposedownloads.com/PDF/Examples%20of%20Bias%20in%20Wikipedia.pdf http://medicalexposedownloads.com/PDF/Wikipedia%20is%20Run%20by%20Latent%20Homosexual%20Homophobics.pdf http://medicalexposedownloads.com/PDF/Bigotry%20and%20Bias%20in%20Wikipedia.pdf http://medicalexposedownloads.com/PDF/Dear%20Wikipedia%20on%20Libelous%20lies%20against%20Desire%20Dubounet.pdf http://medicalexposedownloads.com/PDF/Desir%c3%a9%20Dubounet%20Wikipidia%20text.pdf Criticism of Wikipedia (of the content, procedures, and operations, and of the Wikipedia community) covers many subjects, topics, and themes about the nature of Wikipedia as an open source encyclopedia of subject entries that almost anyone can edit. Wikipedia has been criticized for the uneven handling, acceptance, and retention of articles about controversial subjects. The principal concerns of the critics are the factual reliability of the content; the readability of the prose; the clarity of the article layout; and the existence of systemic bias, gender bias, and racial bias among the editorial community that is Wikipedia. Further concerns are that the organization allows the participation of anonymous editors (leading to editorial vandalism); the existence of social stratification (allowing cliques); and over-complicated rules (allowing editorial quarrels), which conditions permit the misuse of Wikipedia. Wikipedia is described as unreliable at times.

Wikipedia Editor Drop-Off: A Framework to Characterize Editors’ Inactivity

Wikipedia Editor Drop-Off: A Framework to Characterize Editors’ Inactivity. Marc Miquel-Ribé ([email protected]), Cristian Consonni ([email protected]), David Laniado ([email protected]), Eurecat - Centre Tecnològic de Catalunya. ABSTRACT: While there is extensive literature both on the motivations of Wikipedia’s editors and on newcomers’ retention, less is known about the process by which experienced editors leave. In this paper, we present an approach to characterize Wikipedia’s editor drop-off as the transitional states from activity to inactivity. Our approach is based on the data that can be collected or inferred about editors’ activity within the project, namely their contributions to encyclopedic articles, discussions with other editors, and overall participation. Along with the characterization, we want to advance three main ... spread to its adoption even among professionals and academia - it has issues in being able to renew or grow its communities [3, 6]. In many Wikipedia language communities, the decline or stabilization in the number of editors was detected more than a decade ago [3]. Despite dedicated interventions by the Wikimedia Foundation and other organizations, and groups of editors, it has not been possible to reverse this trend. While one can speculate whether the project is not as attractive to newcomers as in the early days, we can observe that there are both language editions and topics with very different