Detecting Undisclosed Paid Editing in Wikipedia

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

An Analysis of Contributions to Wikipedia from Tor

Are anonymity-seekers just like everybody else? An analysis of contributions to Wikipedia from Tor. Chau Tran (Department of Computer Science & Engineering, New York University, New York, USA, [email protected]), Kaylea Champion (Department of Communication, University of Washington, Seattle, USA, [email protected]), Andrea Forte (College of Computing & Informatics, Drexel University, Philadelphia, USA, [email protected]), Benjamin Mako Hill (Department of Communication, University of Washington, Seattle, USA, [email protected]), Rachel Greenstadt (Department of Computer Science & Engineering, New York University, New York, USA, [email protected]). Abstract: User-generated content sites routinely block contributions from users of privacy-enhancing proxies like Tor because of a perception that proxies are a source of vandalism, spam, and abuse. Although these blocks might be effective, collateral damage in the form of unrealized valuable contributions from anonymity seekers is invisible. One of the largest and most important user-generated content sites, Wikipedia, has attempted to block contributions from Tor users since as early as 2005. We demonstrate that these blocks have been imperfect and that thousands of attempts to edit on Wikipedia through Tor have been successful. We draw upon several data sources and analytical techniques to measure and describe the history of Tor editing on Wikipedia over time and to compare contributions from Tor users to those from other groups of Wikipedia users. Our analysis suggests that although Tor users who slip through Wikipedia’s ban contribute content that is more likely to be reverted and to revert others, their contributions are otherwise similar in quality to those from other unregistered participants and to the initial contributions of registered users. Fig. 1. Screenshot of the page a user is shown when they attempt to edit the Wikipedia article on “Privacy” while using Tor. -

Why Medical Schools Should Embrace Wikipedia

Innovation Report. Why Medical Schools Should Embrace Wikipedia: Final-Year Medical Student Contributions to Wikipedia Articles for Academic Credit at One School. Amin Azzam, MD, MA, David Bresler, MD, MA, Armando Leon, MD, Lauren Maggio, PhD, Evans Whitaker, MD, MLIS, James Heilman, MD, Jake Orlowitz, Valerie Swisher, Lane Rasberry, Kingsley Otoide, Fred Trotter, Will Ross, and Jack D. McCue, MD. Abstract. Problem: Most medical students use Wikipedia as an information source, yet medical schools do not train students to improve Wikipedia or use it critically. Approach: Between November 2013 and November 2015, the authors offered fourth-year medical students a credit-bearing course to edit Wikipedia. The course was designed, delivered, and evaluated by faculty, medical librarians, and personnel from WikiProject Medicine, Wikipedia Education Foundation, and Translators Without Borders. … course on student participants, and readership of students’ chosen articles. Outcomes: Forty-three enrolled students made 1,528 edits (average 36/student), contributing 493,994 content bytes (average 11,488/student). They added higher-quality and removed lower-quality sources for a net addition of 274 references (average 6/student). As of July 2016, none of the contributions of the first 28 students (2013, 2014) have been reversed or vandalized. Students discovered a tension between … improved their articles, enjoyed giving back “specifically to Wikipedia,” and broadened their sense of physician responsibilities in the socially networked information era. During only the “active editing months,” Wikipedia traffic statistics indicate that the 43 articles were collectively viewed 1,116,065 times. Subsequent to students’ efforts, these articles have been viewed nearly 22 million times. Next Steps: If other schools replicate and improve on this initiative, future multi-institution -

Wikimania Stockholm Program.Xlsx

Wikimania Stockholm Program. Rooms (name: description, capacity). Aula Magna: Plenary sessions: combined left & right halls, 1200; Murad: left main hall, 700; Arnold: right main hall, 600; Gbowee: classroom on top level, officially Kungstenen, 30; Curie: classroom on top level, officially Bergsmannen, 50; Karman: open space on middle level, 30. Allhuset: Yousafzai: hall, officially the Rotunda, 120. Juristernas hus: Montalcini: downstairs hall, officially the Reinholdssalen, 75; Williams: upstairs classroom, 30. Södra Huset: Szymborska: A wing classroom, Room A5137, 30; Maathai: B wing lecture hall, Room B5, 100; Strickland: B wing classroom, Room B315, 30; Menchú: B wing classroom, Room B487, 30; Tu: B wing classroom, Room B497, 40; Ostrom: D wing classroom, Room D307, 30; Ebadi: D wing classroom, Room D315, 30; Lessing: D wing classroom, Room D499, 70; Alexievich: D wing classroom, Room D416, 100. Friday 16 August until 15:00. All day events: Community Village; Hackathon upstairs in Juristernas; Wikitongues language. Aula Magna: 08:30 – 10:00 Registration; 10:00 – 12:00 Welcome session & keynote, Michael Peter Edson, co-founder and Associate Director of The Museum for the United Nations - UN Live; 12:00 – 13:00 Lunch & meetups. Buildings and rooms: Aula Magna (Murad, Arnold, Curie, Karman), Allhuset (Yousafzai), Juristernas hus (Montalcini), Södra Huset (Szymborska, Room A5137; Maathai, Room B5; Strickland, Room B315; Menchú, Room B487; Tu, Room B497; Ostrom, Room D307; Ebadi, Room D315; Lessing, Room D499). Space: Free Knowledge and the Sustainable RESEARCH STRATEGY EDUCATION GROWTH TECHNOLOGY PARTNERSHIPS TECHNOLOGY STRATEGY HEALTH PARTNERSHIPS -

NNLM's Nationwide Online Wikipedia Edit-A-Thon

Portland State University PDXScholar Online Northwest Online Northwest 2019 Mar 29th, 11:15 AM - 12:00 PM Collaboration and Innovation: NNLM’s Nationwide Online Wikipedia Edit-a-Thon Ann Glusker University of Washington, [email protected] Follow this and additional works at: https://pdxscholar.library.pdx.edu/onlinenorthwest Let us know how access to this document benefits you. Glusker, Ann, "Collaboration and Innovation: NNLM’s Nationwide Online Wikipedia Edit-a-Thon" (2019). Online Northwest. 11. https://pdxscholar.library.pdx.edu/onlinenorthwest/2019/schedule/11 This Presentation is brought to you for free and open access. It has been accepted for inclusion in Online Northwest by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. Collaboration and Innovation: NNLM’s Nationwide Online Wikipedia Edit-a-Thon Program Ann Glusker / [email protected] WITH: Elaina Vitale / [email protected] Franda Liu / [email protected] Stacy Brody / [email protected] NATIONAL NETWORK OF LIBRARIES OF MEDICINE (NNLM.GOV) Eight Health Sciences Libraries each function as the Regional Medical Library (RML) for their respective region. The RMLs coordinate the operation of a Network of Libraries and other organizations to carry out regional and national programs. NNLM offers: funding, training, community outreach, partnerships to increase health awareness and access to NLM resources. EDIT-A-THONS Generally: In-person Focus on a topic area Include an opening talk Include instruction on -

Critical Point of View: A Wikipedia Reader

Critical Point of View: A Wikipedia Reader. Edited by Geert Lovink and Nathaniel Tkacz. INC Reader #7. Editors: Geert Lovink and Nathaniel Tkacz Editorial Assistance: Ivy Roberts, Morgan Currie Copy-Editing: Cielo Lutino Design: Katja van Stiphout Cover Image: Ayumi Higuchi Printer: Ten Klei Groep, Amsterdam Publisher: Institute of Network Cultures, Amsterdam 2011 ISBN: 978-90-78146-13-1 Contact: Institute of Network Cultures phone: +3120 5951866 fax: +3120 5951840 email: [email protected] web: http://www.networkcultures.org Order a copy of this book by sending an email to: [email protected] A pdf of this publication can be downloaded freely at: http://www.networkcultures.org/publications Join the Critical Point of View mailing list at: http://www.listcultures.org Supported by: The School for Communication and Design at the Amsterdam University of Applied Sciences (Hogeschool van Amsterdam DMCI), the Centre for Internet and Society (CIS) in Bangalore and the Kusuma Trust. Thanks to Johanna Niesyto (University of Siegen), Nishant Shah and Sunil Abraham (CIS Bangalore), Sabine Niederer and Margreet Riphagen (INC Amsterdam) for their valuable input and editorial support. Thanks to Foundation Democracy and Media, Mondriaan Foundation and the Public Library Amsterdam (Openbare Bibliotheek Amsterdam) for supporting the CPOV events in Bangalore, Amsterdam and Leipzig. (http://networkcultures.org/wpmu/cpov/) Special thanks to all the authors for their contributions and to Cielo Lutino, Morgan Currie and Ivy Roberts for their careful copy-editing. -

ORES: Scalable, Community-Driven, Auditable, Machine Learning-as-a-Service

ORES: Scalable, community-driven, auditable, machine learning-as-a-service. In this presentation, we lay out ideas... This is a conversation starter, not a conversation ender. We put forward our best ideas and thinking based on our partial perspectives. We look forward to hearing yours. Outline - Opportunities and fears of artificial intelligence - ORES: A break-away success - The proposal & asks We face a significant challenge... There’s been a significant decline in the number of active contributors to the English-language Wikipedia. Contributors have fallen by 40% over the past eight years, to about 30,000. Hamstrung by our own success? “Research indicates that the problem may be rooted in Wikipedians’ complex bureaucracy and their often hard-line responses to newcomers’ mistakes, enabled by semi-automated tools that make deleting new changes easy.” - MIT Technology Review (December 1, 2015) It’s the revert-new-editors’-first-few-edits-and-alienate-them problem. Imagine an algorithm that can... ● Judge whether an edit was made in good faith, so that new editors don’t have their first contributions wiped out. ● Detect bad-faith edits and help fight vandalism. Machine learning and prediction have the power to support important work in open contribution projects like Wikipedia at massive scales. But it’s far from a no-brainer. Quite the opposite. On the bright side, machine learning has the potential to help our projects scale by reducing the workload of editors and enhancing the value of our content. by Luiz Augusto Fornasier Milani under CC BY-SA 4.0, from Wikimedia Commons. On the dark side, AIs have the potential to perpetuate biases and silence voices in novel and insidious ways. -

UCSF Medical Education

Amin Azzam, MD, MA, University of California, San Francisco, United States of America. James Heilman, MD, University of British Columbia, Canada. Editing Wikipedia for Medical School Credit – You Can Too! W Wikipedia Zero Population P D Outreach E Readability O O S Dissemination R R Scare vs. Share Was A. Azzam. J. Heilman Medicine 2.0 2014 Population “Imagine a world in which every single person on the planet is given free access to the sum of all human knowledge. That's what we're doing.” Jimmy Wales Wikipedia Co-Founder W Wikipedia Zero Population P D Outreach Organization E Readability O O S Dissemination R R Scare vs. Share Was Organization Structure of WikiProject Medicine Article quality scale Article importance scale Importance x quality framework Traffic statistics W Wikipedia Zero Population P D Outreach Organization E Readability O O Word meaning(s) S Dissemination R R Scare vs. Share Was Word meaning(s) Simplify then translate “Guess I started by looking at the intro paragraph. -

Editing Wikipedia for Medical School Credit – Analysis of Data from Three Cycles of an Elective for Fourth-Year Students

Editing Wikipedia for Medical School Credit – Analysis of data from three cycles of an elective for fourth-year students. Amin Azzam, Lauren Maggio, Evans Whitaker, Mihir Joshi, James Heilman, Val Swisher, Fred Trotter, Jake Orlowitz, Will Ross, Jack McCue. Nothing to disclose. A. Azzam et al. WGEA 2015 January 21, 2014 Wikipedia is the single leading source of medical information for patients and healthcare professionals. Did you know? The top 100 English Wikipedia pages for healthcare topics were accessed, on average, 1.9 million times during 2013. A recent study Did you know? WikiProject Medicine is a group of all-volunteer individuals committed to improving the quality of health-related content on Wikipedia? WikiProject Medicine has rank-ordered the approximately 26,000 health-related topics on English Wikipedia by importance. The importance scale takes into account both number of eyeballs clicking and global burden of disease. Did you know? All Wikipedia health-related articles are rated/graded? Did you know? Translators Without Borders have translated 581 English Wikipedia health-related articles to 53 other language Wikipedias Did you know? And that recent study again Did you know? Wikipedia Zero is an initiative to provide access to Wikipedia for free? It is currently estimated that 400 million people in 46 developing countries via 54 mobile phone operators have access via their cell phones! -

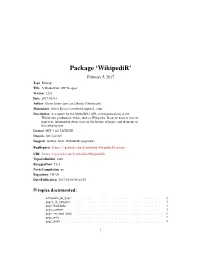

Package 'WikipediR'

Package ‘WikipediR’ February 5, 2017. Type: Package. Title: A MediaWiki API Wrapper. Version: 1.5.0. Date: 2017-02-04. Author: Oliver Keyes [aut, cre], Brock Tilbert [ctb]. Maintainer: Oliver Keyes <[email protected]>. Description: A wrapper for the MediaWiki API, aimed particularly at the Wikimedia 'production' wikis, such as Wikipedia. It can be used to retrieve page text, information about users or the history of pages, and elements of the category tree. License: MIT + file LICENSE. Imports: httr, jsonlite. Suggests: testthat, knitr, WikidataR, pageviews. BugReports: https://github.com/Ironholds/WikipediR/issues URL: https://github.com/Ironholds/WikipediR/ VignetteBuilder: knitr. RoxygenNote: 5.0.1. NeedsCompilation: no. Repository: CRAN. Date/Publication: 2017-02-05 08:44:55. R topics documented: categories_in_page, pages_in_category, page_backlinks, page_content, page_external_links, page_info, page_links, query, random_page, recent_changes, revision_content, revision_diff, user_contributions, user_information, WikipediR. categories_in_page: Retrieves categories associated with a page. Description: Retrieves categories associated with a page (or list of pages) on a MediaWiki instance. Usage: categories_in_page(language = NULL, project = NULL, domain = NULL, pages, properties = c("sortkey", "timestamp", "hidden"), limit = 50, show_hidden = FALSE, clean_response = FALSE, ...) Arguments: language: The language code of the project you wish to query, if appropriate. project: The project you wish to query ("wikiquote"), if appropriate. Should be provided in conjunction with language. domain: as an alternative to a language and project combination, you can also provide a domain ("rationalwiki.org") to the URL constructor, allowing for the querying of non-Wikimedia MediaWiki instances. pages: A vector of page titles, with or without spaces, that you want to retrieve categories for. -
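The kind of request that a wrapper such as categories_in_page plausibly issues can be sketched in Python. This is not WikipediR's code: the function name build_categories_query, the /w/api.php path, and the handling of show_hidden (omitting the filter rather than passing a value) are assumptions made for illustration. The query parameters themselves (prop=categories, clprop, cllimit, clshow) are standard MediaWiki API parameters. The sketch only builds the URL and makes no network call.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def build_categories_query(pages, language=None, project=None, domain=None,
                           properties=("sortkey", "timestamp", "hidden"),
                           limit=50, show_hidden=False):
    """Build a MediaWiki API URL asking for the categories of some pages.

    Hypothetical helper: the argument names mirror the excerpt above,
    but the body is an illustrative sketch, not the package's logic.
    """
    if domain is not None:
        host = domain                       # e.g. "rationalwiki.org"
    elif language and project:
        host = f"{language}.{project}.org"  # e.g. "en.wikipedia.org"
    else:
        raise ValueError("provide either domain, or language and project")

    params = {
        "action": "query",
        "format": "json",
        "prop": "categories",
        "titles": "|".join(pages),          # the API separates titles with "|"
        "clprop": "|".join(properties),
        "cllimit": limit,
    }
    if not show_hidden:
        params["clshow"] = "!hidden"        # assumption: filter out hidden categories

    # Assumption: the API endpoint lives at /w/api.php, as on Wikimedia wikis.
    return f"https://{host}/w/api.php?{urlencode(params)}"


url = build_categories_query(["Privacy", "Tor (network)"],
                             language="en", project="wikipedia")
print(url)
print(parse_qs(urlsplit(url).query))
```

Passing domain instead of language and project targets a non-Wikimedia MediaWiki instance, though the endpoint path on such a site may differ from the one assumed here.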

Towards 'Health Information for All': Medical Content on Wikipedia

Towards ‘Health Information for All’: Medical content on Wikipedia received 6.5 billion page views in 2013. blogs.lse.ac.uk/impactofsocialsciences/2015/05/19/towards-health-information-for-all-wikipedia/ 5/19/2015 The medical content in Wikipedia receives substantial online traffic, links to a great body of academic scholarship and presents a massive opportunity for health care information. James Heilman and Andrew West present their findings on the wider editorial landscape looking to improve the quality and impact of medical content on the web. Data points to the enormous potential of these efforts, and further analysis will help to focus this momentum. Recently we published “Wikipedia and Medicine: Quantifying Readership, Editors, and the Significance of Natural Language” in the Journal of Medical Internet Research (JMIR). Some of the key findings include: 1. Medical content received about 6.5 billion page views in 2013. This was extrapolated from empirical per-article “desktop” traffic data plus project-wide mobile estimates. 2. Wikipedia appears to be the single most used website for health information globally, exceeding traffic observed at the NIH, WebMD, WHO et al. 3. Wikipedia’s health content is more than 1 billion bytes of text across more than 255 natural languages (equal to 127 volumes the size of the Encyclopedia Britannica). 4. Nearly 1 million references support this content and the number of references has more than doubled since 2009, with citation density outpacing raw byte growth. 5. Article access patterns are heavily language-dependent, i.e., most topics show substantially differing popularity ranks across Wikipedia’s different natural language versions. -

The Production and Consumption of Quality Content in Peer Production Communities

The Production and Consumption of Quality Content in Peer Production Communities A Dissertation SUBMITTED TO THE FACULTY OF THE UNIVERSITY OF MINNESOTA BY Morten Warncke-Wang IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY Loren Terveen and Brent Hecht December, 2016 Copyright © 2016 M. Warncke-Wang All rights reserved. Portions copyright © Association for Computing Machinery (ACM) and is used in accordance with the ACM Author Agreement. Portions copyright © Association for the Advancement of Artificial Intelligence (AAAI) and is used in accordance with the AAAI Distribution License. Map tiles used in illustrations are from Stamen Design and published under a CC BY 3.0 license, and from OpenStreetMap and published under the ODbL. Dedicated in memory of John T. Riedl, Ph.D., professor, advisor, mentor, squash player, friend Acknowledgements This work would not be possible without the help and support of a great number of people. I would first like to thank my family, in particular my parents Kari and Hans who have had to see yet another of their offspring fly off to the United States for a prolonged period of time. They have supported me in pursuing my dreams even when it meant I would be away from them. I also thank my brother Hans and his fiancée Anna for helping me get settled in Minnesota, solving everyday problems, and for the good company whenever I stopped by. Likewise, I thank my brother Carl and his wife Bente whom I have only been able to see ever so often, but it has always been moments I have cherished. -

Community, Cooperation, and Conflict in Wikipedia

Community, Cooperation, and Conflict in Wikipedia John Riedl GroupLens Research University of Minnesota Wikipedia & the “Editor Decline” Number of Active Editors UC Irvine 2012 Vision • Community Health • Humans + Computers • Synthetic vs. Analytic Research Readership vs. Creation Date Search Engine Test vs Creation Date Community Health We can tell when a community has failed. Our goal is to know before it fails, and to know what to do to prevent failure. • Reader • Contributor • Collaborator • Leader Creating, Destroying, and Restoring Value in Wikipedia (Group 2007) Reid Priedhorsky Jilin Chen Shyong (Tony) K. Lam Katherine Panciera Loren Terveen John Riedl Probability of View Damaged WP:Clubhouse? An Exploration of Wikipedia’s Gender Imbalance WikiSym 2011 Shyong (Tony) K. Lam University of Minnesota Anuradha Uduwage University of Minnesota Zhenhua Dong Nankai University Shilad Sen Macalester College David R. Musicant Carleton College Loren Terveen University of Minnesota John Riedl University of Minnesota Gender Gap – Overall 2009 editor cohort – females are… 16% of known-gender editors [1 in 6] 9% of known-gender edits [1 in 11] H1a: Gap-Exists Gender Gap – Historical Is Wikipedia’s gender gap closing? Many tech gender gaps are narrowing… Internet, Smartphones, online games …and some have reversed… Blogging, Facebook, Twitter Gender Gap Over Time Movie Gender – Article Length Female-interest topics = Lower quality articles © 2001 Lionsgate © 1982 Orion Pictures © 1995 Warner Bros.