Bots and Cyborgs: Wikipedia's Immune System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Wikipedia and Intermediary Immunity: Supporting Sturdy Crowd Systems for Producing Reliable Information Jacob Rogers Abstract

THE YALE LAW JOURNAL FORUM, OCTOBER 9, 2017. Wikipedia and Intermediary Immunity: Supporting Sturdy Crowd Systems for Producing Reliable Information. Jacob Rogers.

Abstract. The problem of fake news impacts a massive online ecosystem of individuals and organizations creating, sharing, and disseminating content around the world. One effective approach to addressing false information lies in monitoring such information through an active, engaged volunteer community. Wikipedia, as one of the largest online volunteer contributor communities, presents one example of this approach. This Essay argues that the existing legal framework protecting intermediary companies in the United States empowers the Wikipedia community to ensure that information is accurate and well-sourced. The Essay further argues that current legal efforts to weaken these protections, in response to the “fake news” problem, are likely to create perverse incentives that will harm volunteer engagement and confuse the public. Finally, the Essay offers suggestions for other intermediaries beyond Wikipedia to help monitor their content through user community engagement.

Introduction. Wikipedia is well-known as a free online encyclopedia that covers nearly any topic, including both the popular and the incredibly obscure. It is also an encyclopedia that anyone can edit, an example of one of the largest crowdsourced, user-generated content websites in the world. This user-generated model is supported by the Wikimedia Foundation, which relies on the robust intermediary liability immunity framework of U.S. law to allow the volunteer editor community to work independently. Volunteer engagement on Wikipedia provides an effective framework for combating fake news and false information. It is perhaps surprising that a project open to public editing could be highly reliable.

An Analysis of Contributions to Wikipedia from Tor

Are anonymity-seekers just like everybody else? An analysis of contributions to Wikipedia from Tor

Chau Tran (Department of Computer Science & Engineering, New York University, New York, USA, [email protected]); Kaylea Champion (Department of Communication, University of Washington, Seattle, USA, [email protected]); Andrea Forte (College of Computing & Informatics, Drexel University, Philadelphia, USA, [email protected]); Benjamin Mako Hill (Department of Communication, University of Washington, Seattle, USA, [email protected]); Rachel Greenstadt (Department of Computer Science & Engineering, New York University, New York, USA, [email protected])

Abstract. User-generated content sites routinely block contributions from users of privacy-enhancing proxies like Tor because of a perception that proxies are a source of vandalism, spam, and abuse. Although these blocks might be effective, collateral damage in the form of unrealized valuable contributions from anonymity seekers is invisible. One of the largest and most important user-generated content sites, Wikipedia, has attempted to block contributions from Tor users since as early as 2005. We demonstrate that these blocks have been imperfect and that thousands of attempts to edit on Wikipedia through Tor have been successful. We draw upon several data sources and analytical techniques to measure and describe the history of Tor editing on Wikipedia over time and to compare contributions from Tor users to those from other groups of Wikipedia users. Our analysis suggests that although Tor users who slip through Wikipedia’s ban contribute content that is more likely to be reverted and to revert others, their contributions are otherwise similar in quality to those from other unregistered participants and to the initial contributions of registered users.

Fig. 1. Screenshot of the page a user is shown when they attempt to edit the Wikipedia article on “Privacy” while using Tor.

Community Or Social Movement? Piotr Konieczny

Wikipedia: Community or social movement? Piotr Konieczny

To cite this version: Piotr Konieczny. Wikipedia: Community or social movement? Interface: a journal for and about social movements, 2009. hal-01580966

HAL Id: hal-01580966, https://hal.archives-ouvertes.fr/hal-01580966. Submitted on 4 Sep 2017.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Interface: a journal for and about social movements. Article. Volume 1 (2): 212 - 232 (November 2009). Konieczny, Wikipedia.

Wikipedia: community or social movement? Piotr Konieczny

Abstract. In recent years a new realm for study of political and sociological phenomena has appeared, the Internet, contributing to major changes in our societies during its relatively brief existence. Within cyberspace, organizations whose existence is increasingly tied to this virtual world are of interest to social scientists. This study will analyze the community of one of the largest online organizations, Wikipedia, the free encyclopedia with millions of volunteer members. Wikipedia was never meant to be a community, yet it most certainly has become one. This study asks whether it is something even more: whether it is an expression of online activism, and whether it can be seen as a social movement organization, related to one or more of the Internet-centered social movements industries (in particular, the free and open-source software movement industry).

Detecting Undisclosed Paid Editing in Wikipedia

Detecting Undisclosed Paid Editing in Wikipedia. Nikesh Joshi, Francesca Spezzano, Mayson Green, and Elijah Hill. Computer Science Department, Boise State University, Boise, Idaho, USA. [email protected] {nikeshjoshi,maysongreen,elijahhill}@u.boisestate.edu

ABSTRACT. Wikipedia, the free and open-collaboration based online encyclopedia, has millions of pages that are maintained by thousands of volunteer editors. As per Wikipedia’s fundamental principles, pages on Wikipedia are written with a neutral point of view and maintained by volunteer editors for free with well-defined guidelines in order to avoid or disclose any conflict of interest. However, there have been several known incidents where editors intentionally violate such guidelines in order to get paid (or even extort money) for maintaining promotional spam articles without disclosing such. In this paper, we address for the first time the problem of identifying undisclosed paid articles in Wikipedia. We propose a machine learning-based framework using a set of features based on both

1 INTRODUCTION. Wikipedia is the free online encyclopedia based on the principle of open collaboration; for the people by the people. Anyone can add and edit almost any article or page. However, volunteers should follow a set of guidelines when editing Wikipedia. The purpose of Wikipedia is “to provide the public with articles that summarize accepted knowledge, written neutrally and sourced reliably” and the encyclopedia should not be considered as a platform for advertising and self-promotion. Wikipedia’s guidelines strongly discourage any form of conflict-of-interest (COI) editing and require editors to disclose any COI contribution. Paid editing is a form of COI editing and refers to editing Wikipedia (in the majority of the cases for promotional purposes) in exchange for compensation.

Critical Point of View: a Wikipedia Reader

CRITICAL POINT OF VIEW: A Wikipedia Reader. Edited by Geert Lovink and Nathaniel Tkacz. INC Reader #7.

Critical Point of View: A Wikipedia Reader. Editors: Geert Lovink and Nathaniel Tkacz. Editorial Assistance: Ivy Roberts, Morgan Currie. Copy-Editing: Cielo Lutino. Design: Katja van Stiphout. Cover Image: Ayumi Higuchi. Printer: Ten Klei Groep, Amsterdam. Publisher: Institute of Network Cultures, Amsterdam 2011. ISBN: 978-90-78146-13-1.

Contact: Institute of Network Cultures, phone: +3120 5951866, fax: +3120 5951840, email: [email protected], web: http://www.networkcultures.org

Order a copy of this book by sending an email to: [email protected]. A pdf of this publication can be downloaded freely at: http://www.networkcultures.org/publications. Join the Critical Point of View mailing list at: http://www.listcultures.org

Supported by: The School for Communication and Design at the Amsterdam University of Applied Sciences (Hogeschool van Amsterdam DMCI), the Centre for Internet and Society (CIS) in Bangalore and the Kusuma Trust. Thanks to Johanna Niesyto (University of Siegen), Nishant Shah and Sunil Abraham (CIS Bangalore), Sabine Niederer and Margreet Riphagen (INC Amsterdam) for their valuable input and editorial support. Thanks to Foundation Democracy and Media, Mondriaan Foundation and the Public Library Amsterdam (Openbare Bibliotheek Amsterdam) for supporting the CPOV events in Bangalore, Amsterdam and Leipzig. (http://networkcultures.org/wpmu/cpov/) Special thanks to all the authors for their contributions and to Cielo Lutino, Morgan Currie and Ivy Roberts for their careful copy-editing.

ORES Scalable, Community-Driven, Auditable, Machine Learning-As-A-Service in This Presentation, We Lay out Ideas

ORES: Scalable, community-driven, auditable, machine learning-as-a-service

In this presentation, we lay out ideas... This is a conversation starter, not a conversation ender. We put forward our best ideas and thinking based on our partial perspectives. We look forward to hearing yours.

Outline - Opportunities and fears of artificial intelligence - ORES: A break-away success - The proposal & asks

We face a significant challenge... There’s been a significant decline in the number of active contributors to the English-language Wikipedia. Contributors have fallen by 40% over the past eight years, to about 30,000.

Hamstrung by our own success? “Research indicates that the problem may be rooted in Wikipedians’ complex bureaucracy and their often hard-line responses to newcomers’ mistakes, enabled by semi-automated tools that make deleting new changes easy.” - MIT Technology Review (December 1, 2015) It’s the revert-new-editors’-first-few-edits-and-alienate-them problem.

Imagine an algorithm that can...
● Judge whether an edit was made in good faith, so that new editors don’t have their first contributions wiped out.
● Detect bad-faith edits and help fight vandalism.

Machine learning and prediction have the power to support important work in open contribution projects like Wikipedia at massive scales. But it’s far from a no-brainer. Quite the opposite.

On the bright side, machine learning has the potential to help our projects scale by reducing the workload of editors and enhancing the value of our content. (Image by Luiz Augusto Fornasier Milani under CC BY-SA 4.0, from Wikimedia Commons.)

On the dark side, AIs have the potential to perpetuate biases and silence voices in novel and insidious ways.

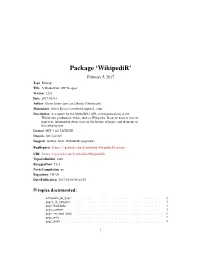

Package 'WikipediR'

Package ‘WikipediR’
February 5, 2017

Type: Package
Title: A MediaWiki API Wrapper
Version: 1.5.0
Date: 2017-02-04
Author: Oliver Keyes [aut, cre], Brock Tilbert [ctb]
Maintainer: Oliver Keyes <[email protected]>
Description: A wrapper for the MediaWiki API, aimed particularly at the Wikimedia 'production' wikis, such as Wikipedia. It can be used to retrieve page text, information about users or the history of pages, and elements of the category tree.
License: MIT + file LICENSE
Imports: httr, jsonlite
Suggests: testthat, knitr, WikidataR, pageviews
BugReports: https://github.com/Ironholds/WikipediR/issues
URL: https://github.com/Ironholds/WikipediR/
VignetteBuilder: knitr
RoxygenNote: 5.0.1
NeedsCompilation: no
Repository: CRAN
Date/Publication: 2017-02-05 08:44:55

R topics documented: categories_in_page, pages_in_category, page_backlinks, page_content, page_external_links, page_info, page_links, query, random_page, recent_changes, revision_content, revision_diff, user_contributions, user_information, WikipediR, Index

categories_in_page: Retrieves categories associated with a page.

Description
Retrieves categories associated with a page (or list of pages) on a MediaWiki instance.

Usage
categories_in_page(language = NULL, project = NULL, domain = NULL, pages, properties = c("sortkey", "timestamp", "hidden"), limit = 50, show_hidden = FALSE, clean_response = FALSE, ...)

Arguments
language: The language code of the project you wish to query, if appropriate.
project: The project you wish to query ("wikiquote"), if appropriate. Should be provided in conjunction with language.
domain: As an alternative to a language and project combination, you can also provide a domain ("rationalwiki.org") to the URL constructor, allowing for the querying of non-Wikimedia MediaWiki instances.
pages: A vector of page titles, with or without spaces, that you want to retrieve categories for.
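The argument descriptions above imply a URL constructor that accepts either a language and project pair or a bare domain. The following Python sketch shows one way such a constructor and the matching category query could look. It is not the package's R implementation: the function names `build_api_url` and `categories_params` are hypothetical, the `/w/api.php` path is an assumption that holds for Wikimedia wikis but may differ elsewhere, and mapping `show_hidden = FALSE` to `clshow=!hidden` is a guess at the intended behaviour.

```python
from typing import Dict, Optional, Sequence


def build_api_url(language: Optional[str] = None,
                  project: Optional[str] = None,
                  domain: Optional[str] = None) -> str:
    """Build a MediaWiki API endpoint from language+project or from a domain."""
    if domain is not None:
        host = domain
    elif language is not None and project is not None:
        host = f"{language}.{project}.org"
    else:
        raise ValueError("provide a domain, or both language and project")
    # Assumption: the API endpoint sits at /w/api.php on the target wiki.
    return f"https://{host}/w/api.php"


def categories_params(pages: Sequence[str],
                      properties: Sequence[str] = ("sortkey", "timestamp", "hidden"),
                      limit: int = 50,
                      show_hidden: bool = False) -> Dict[str, str]:
    """Assemble query parameters for the MediaWiki 'categories' property."""
    params = {
        "action": "query",
        "format": "json",
        "prop": "categories",
        "titles": "|".join(pages),       # multiple titles are pipe-separated
        "clprop": "|".join(properties),
        "cllimit": str(limit),
    }
    if not show_hidden:
        # Assumption: hiding hidden categories corresponds to clshow=!hidden.
        params["clshow"] = "!hidden"
    return params


if __name__ == "__main__":
    print(build_api_url(language="en", project="wikiquote"))
    print(categories_params(["Privacy", "Tor (network)"]))
```

The URL and parameter dictionary could then be handed to any HTTP client; the sketch stops short of the network call so that it runs offline.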

Wikipedia Vandalism Detection: Combining Natural Language, Metadata, and Reputation Features

University of Pennsylvania ScholarlyCommons. Departmental Papers (CIS), Department of Computer & Information Science. 2-2011.

Wikipedia Vandalism Detection: Combining Natural Language, Metadata, and Reputation Features

B. Thomas Adler, University of California, Santa Cruz, [email protected]; Luca de Alfaro, University of California, Santa Cruz -- Google, [email protected]; Santiago M. Mola-Velasco, Universidad Politécnica de Valencia, [email protected]; Paolo Rosso, Universidad Politécnica de Valencia, [email protected]; Andrew G. West, University of Pennsylvania, [email protected]

Part of the Other Computer Sciences Commons.

Recommended Citation: B. Thomas Adler, Luca de Alfaro, Santiago M. Mola-Velasco, Paolo Rosso, and Andrew G. West, "Wikipedia Vandalism Detection: Combining Natural Language, Metadata, and Reputation Features", Lecture Notes in Computer Science: Computational Linguistics and Intelligent Text Processing 6609, 277-288. February 2011. http://dx.doi.org/10.1007/978-3-642-19437-5_23

CICLing '11: Proceedings of the 12th International Conference on Intelligent Text Processing and Computational Linguistics, Tokyo, Japan, February 20-26, 2011. This paper is posted at ScholarlyCommons. https://repository.upenn.edu/cis_papers/457

Abstract. Wikipedia is an online encyclopedia which anyone can edit. While most edits are constructive, about 7% are acts of vandalism. Such behavior is characterized by modifications made in bad faith; introducing spam and other inappropriate content. In this work, we present the results of an effort to integrate three of the leading approaches to Wikipedia vandalism detection: a spatio-temporal analysis of metadata (STiki), a reputation-based system (WikiTrust), and natural language processing features.

Editing Wikipedia: a Guide to Improving Content on the Online Encyclopedia

Editing Wikipedia: A guide to improving content on the online encyclopedia. Wikimedia Foundation.

Imagine a world in which every single human being can freely share in the sum of all knowledge. That’s our commitment. This is the vision for Wikipedia and the other Wikimedia projects, which volunteers from around the world have been building since 2001. Bringing together the sum of all human knowledge requires the knowledge of many humans, including yours!

What you can learn

This guide will walk you through how to contribute to Wikipedia, so the knowledge you have can be freely shared with others. You will find:
• What Wikipedia is and how it works
• How to navigate Wikipedia
• How you can contribute to Wikipedia and why you should
• Important rules that keep Wikipedia reliable
• How to edit Wikipedia with the VisualEditor and using wiki markup
• A step-by-step guide to adding content
• Etiquette for interacting with other contributors

Shortcuts

Want to see up-to-date statistics about Wikipedia? Type WP:STATS into the search bar as pictured here. The text WP:STATS is what’s known on Wikipedia as a shortcut. You can type shortcuts like this into the search bar to pull up specific pages. In this brochure, we designate shortcuts as | shortcut WP:STATS .

What is Wikipedia?

Wikipedia, the free encyclopedia that anyone can edit, is one of the largest collaborative projects in history. With millions of articles and in hundreds of languages, Wikipedia is read by hundreds of millions of people on a regular basis.

Automatic Vandalism Detection in Wikipedia: Towards a Machine Learning Approach

Automatic Vandalism Detection in Wikipedia: Towards a Machine Learning Approach. Koen Smets and Bart Goethals and Brigitte Verdonk. Department of Mathematics and Computer Science, University of Antwerp, Antwerp, Belgium. {koen.smets,bart.goethals,brigitte.verdonk}@ua.ac.be

Abstract. Since the end of 2006 several autonomous bots are, or have been, running on Wikipedia to keep the encyclopedia free from vandalism and other damaging edits. These expert systems, however, are far from optimal and should be improved to relieve the human editors from the burden of manually reverting such edits. We investigate the possibility of using machine learning techniques to build an autonomous system capable to distinguish vandalism from legitimate edits. We highlight the results of a small but important step in this direction by applying commonly known machine learning algorithms using a straightforward feature representation. Despite the promising results, this study reveals that elemen-

by users on a blacklist. Since the end of 2006 some vandal bots, computer programs designed to detect and revert vandalism have seen the light on Wikipedia. Nowadays the most prominent of them are ClueBot and VoABot II. These tools are built around the same primitives that are included in Vandal Fighter. They use lists of regular expressions and consult databases with blocked users or IP addresses to keep legitimate edits apart from vandalism. The major drawback of these approaches is the fact that these bots utilize static lists of obscenities and ‘grammar’ rules which are hard to maintain and easy to deceive. As we will show, they only detect 30% of the committed vandalism. So there is certainly need for improvement.
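The rule-based approach described in this excerpt, static regular-expression lists combined with a lookup of blocked users or IP addresses, can be illustrated with a minimal Python sketch. It is not the code of ClueBot, VoABot II, or Vandal Fighter: the patterns, the blocklist entries, and the names `Edit` and `looks_like_vandalism` are all hypothetical and chosen only to show the mechanism.

```python
import re
from dataclasses import dataclass

# Hypothetical static rule list: an obscenity-style word, a long run of one
# repeated character, and a spam phrase.
BAD_PATTERNS = [
    re.compile(r"\bstupid\b", re.IGNORECASE),
    re.compile(r"(.)\1{9,}"),                  # same character 10+ times in a row
    re.compile(r"\bbuy now\b", re.IGNORECASE),
]

# Hypothetical blocklist of user names and IP addresses.
BLOCKED_EDITORS = {"192.0.2.7", "KnownVandal"}


@dataclass
class Edit:
    editor: str       # user name or IP address of the contributor
    added_text: str   # text the edit inserted into the page


def looks_like_vandalism(edit: Edit) -> bool:
    """Flag an edit if its author is blocklisted or its text matches a rule."""
    if edit.editor in BLOCKED_EDITORS:
        return True
    return any(pattern.search(edit.added_text) for pattern in BAD_PATTERNS)


if __name__ == "__main__":
    print(looks_like_vandalism(Edit("Alice", "This article is stupid")))
    print(looks_like_vandalism(Edit("Alice", "This article is st*pid")))
```

The second call in the usage block shows the weakness the authors point to: a trivially obfuscated word slips past a static list, which is one reason such rules are hard to maintain and easy to deceive.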

Why Wikipedia Is So Successful?

Term Paper, E Business Technologies. Why Wikipedia is so successful? Master in Business Consulting, Winter Semester 2010. Submitted to Prof. Dr. Eduard Heindl. Prepared by Suresh Balajee Madanakannan, Matriculation number: 235416. Fakultät Wirtschaftsinformatik, Hochschule Furtwangen University.

ACKNOWLEDGEMENT. This is to declare that all the content in this article is by the author, Suresh Balajee Madanakannan. The resources can be found in the reference list at the end of each page. All the ideas and statements in the article are the author's own, without plagiarism, and the author owns the copyright of this article. Suresh Balajee Madanakannan

Contents
1. Introduction
1.1 About Wikipedia
1.2 Wikipedia servers and architecture
2. Factors that led Wikipedia to be successful
2.1 User factors
2.2 Knowledge factors

The Production and Consumption of Quality Content in Peer Production Communities

The Production and Consumption of Quality Content in Peer Production Communities. A Dissertation SUBMITTED TO THE FACULTY OF THE UNIVERSITY OF MINNESOTA BY Morten Warncke-Wang IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY. Loren Terveen and Brent Hecht. December, 2016.

Copyright © 2016 M. Warncke-Wang. All rights reserved. Portions copyright © Association for Computing Machinery (ACM) and is used in accordance with the ACM Author Agreement. Portions copyright © Association for the Advancement of Artificial Intelligence (AAAI) and is used in accordance with the AAAI Distribution License. Map tiles used in illustrations are from Stamen Design and published under a CC BY 3.0 license, and from OpenStreetMap and published under the ODbL.

Dedicated in memory of John T. Riedl, Ph.D.: professor, advisor, mentor, squash player, friend.

Acknowledgements. This work would not be possible without the help and support of a great number of people. I would first like to thank my family, in particular my parents Kari and Hans who have had to see yet another of their offspring fly off to the United States for a prolonged period of time. They have supported me in pursuing my dreams even when it meant I would be away from them. I also thank my brother Hans and his fiancée Anna for helping me get settled in Minnesota, solving everyday problems, and for the good company whenever I stopped by. Likewise, I thank my brother Carl and his wife Bente whom I have only been able to see ever so often, but it has always been moments I have cherished.