System Integration in Web 2.0 Environment

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

PHP Credits Configuration

PHP Version 5.0.1 www.entropy.ch Release 1 System Darwin G4-500.local 7.7.0 Darwin Kernel Version 7.7.0: Sun Nov 7 16:06:51 PST 2004; root:xnu/xnu-517.9.5.obj~1/RELEASE_PPC Power Macintosh Build Date Aug 13 2004 15:03:31 Configure Command './configure' '--prefix=/usr/local/php5' '--with-config-file-path=/usr/local/php5/lib' '--with-apxs' '--with-iconv' '--with-openssl=/usr' '--with-zlib=/usr' '--with-mysql=/Users/marc/cvs/entropy/php-module/src/mysql-standard-*' '--with-mysqli=/usr/local/mysql/bin/mysql_config' '--with-xsl=/usr/local/php5' '--with-pdflib=/usr/local/php5' '--with-pgsql=/Users/marc/cvs/entropy/php-module/build/postgresql-build' '--with-gd' '--with-jpeg-dir=/usr/local/php5' '--with-png-dir=/usr/local/php5' '--with-zlib-dir=/usr' '--with-freetype-dir=/usr/local/php5' '--with-t1lib=/usr/local/php5' '--with-imap=../imap-2002d' '--with-imap-ssl=/usr' '--with-gettext=/usr/local/php5' '--with-ming=/Users/marc/cvs/entropy/php-module/build/ming-build' '--with-ldap' '--with-mime-magic=/usr/local/php5/etc/magic.mime' '--with-iodbc=/usr' '--with-xmlrpc' '--with-expat-dir=/usr/local/php5' '--with-iconv-dir=/usr' '--with-curl=/usr/local/php5' '--enable-exif' '--enable-wddx' '--enable-soap' '--enable-sqlite-utf8' '--enable-ftp' '--enable-sockets' '--enable-dbx' '--enable-dbase' '--enable-mbstring' '--enable-calendar' '--with-bz2=/usr' '--with-mcrypt=/usr/local/php5' '--with-mhash=/usr/local/php5' '--with-mssql=/usr/local/php5' '--with-fbsql=/Users/marc/cvs/entropy/php-module/build/frontbase-build/Library/FrontBase' Server

Web Application Development with PHP 4.0

Web Application Development with PHP 4.0. Other Books by New Riders Publishing: MySQL, Paul DuBois, 0-7357-0921-1; GTK+/Gnome Application Development, Havoc Pennington, 0-7357-0078-8; A UML Pattern Language, Paul Evitts, 1-57870-118-X; DCE/RPC over SMB: Samba and Windows NT Domain Internals, Luke Leighton, 1-57870-150-3; Constructing Superior Software, Paul Clements, 1-57870-147-3; Linux Firewalls, Robert Ziegler, 0-7357-0900-9; Python Essential Reference, David Beazley, 0-7357-0901-7; Linux Essential Reference, Ed Petron, 0-7357-0852-5; KDE Application Development, Uwe Thiem, 1-57870-201-1; Linux System Administration, Jim Dennis, M. Carling, et al, 1-556205-934-3; Developing Linux Applications with GTK+ and GDK, Eric Harlow, 0-7357-0021-4. Web Application Development with PHP 4.0, Tobias Ratschiller, Till Gerken. With contributions by Zend Technologies, LTD: Zeev Suraski, Andi Gutmans. 201 West 103rd Street, Indianapolis, Indiana 46290. By: Tobias Ratschiller and Till Gerken. Copyright © 2000 by New Riders Publishing. FIRST EDITION: July, 2000. Publisher: David Dwyer. Executive Editor: Al Valvano. Managing Editor: Gina Brown. All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without written permission from the publisher, except for the inclusion of brief quotations in a review.

XML Schema Built-In Data Types Reference

APPENDIX A: XML Schema Built-in Data Types Reference. XML Schemas provide a number of built-in data types. You can use these types directly as types or use them as base types to create new and complex data types. The built-in types presented in this appendix are broken down into primitive and derived types and further grouped by area of functionality for easier reference. Type Definition: XML Schema data types are built upon relationships where every type definition is either an extension or a restriction to another type definition. This relationship is called the type definition hierarchy. The topmost definition, serving as the root of the hierarchy, is the ur-type definition, named anyType. It is the only definition that does not have a basis in any other type. Using this data type is similar to using ANY within a DTD. It effectively means that the data has no constraints. Take the following element declaration, for example: <xsd:element name="anything" type="xsd:anyType" /> An element based on this declaration can contain any type of data. It can be any of the built-in types as well as any user-derived type. The simple ur-type definition, named anySimpleType, is a special restriction on the ur-type definition. It constrains the anyType definition by limiting data to only the built-in data types, shown in the following sections. For example, the following element declaration defines an element that can be any built-in type but cannot be a complex type, which is simply an element that can contain subelements or attributes, as explained in Chapter 3: <xsd:element name="simplelement" type="xsd:anySimpleType" /> The built-in types are divided into two varieties: primitive types and derived types.

Lecture 38: Concurrent & Distributed Programming Message Passing

Lecture 38: Concurrent & Distributed Programming CSC 131 Fall, 2006 Message Passing • Must provide send and receive operations w/ message and receiver/sender. • Synchronous or asynchronous? • If asynchronous then use "mailbox" to store • If synchronous then sender and receiver must "rendezvous" before either can proceed. Ada Tasks • Synchronous message passing • Tasks have some features of monitors • Exports entry names (w/ parameters) • Entry names have FIFO queues task body Buffer is MaxBufferSize: constant INTEGER := 50; Store: array(1..MaxBufferSize) of CHARACTER; BufferStart: INTEGER := 1; BufferEnd: INTEGER := 0; BufferSize: INTEGER := 0; begin loop select when BufferSize < MaxBufferSize => accept insert(ch: in CHARACTER) do Store(BufferEnd) := ch; end insert; BufferEnd := BufferEnd mod MaxBufferSize + 1; BufferSize := BufferSize + 1; or when BufferSize > 0 => accept delete(ch: out CHARACTER) do ch := Store(BufferStart); end delete; BufferStart := BufferStart mod MaxBufferSize + 1; BufferSize := BufferSize -1; or accept more (notEmpty: out BOOLEAN) do notEmpty := BufferSize > 0; end more; or terminate; end select; end loop end Buffer; Accepting an entry: Caller only blocked in accept. select [when <cond> =>] <select alternative> {or [when <cond> =>] <select alternative>} [else <statements>] end select task type Producer; task body Producer is ch: CHARACTER; begin loop TEXT_IO.GET(ch); Buffer.insert(ch); end loop; end Producer; task type Consumer; task body Consumer is ch: CHARACTER; begin loop Buffer.delete(ch); TEXT_IO.PUT(ch); end loop; end Consumer; Comparing Mechanisms • Shared memory concurrency - Semaphores very low level. - Monitors passive regions encapsulating resources to be shared (mutual exclusion). Cooperation enforced by wait and signal statements. • Distributed Systems - Everything active in Ada tasks (resources and processes) - Monitors and processes can easily be structured as Ada tasks and vice-versa.

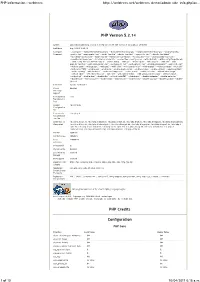

PHP Version 5.2.14

PHP information - webtrees http://webtrees.net/webtrees_demo/admin_site_info.php?ac... PHP Version 5.2.14 System Linux wh3.2qikhosting.co.nz 2.6.18-194.26.1.el5 #1 SMP Tue Nov 9 12:54:40 EST 2010 i686 Build Date Aug 12 2010 15:41:50 Configure Command ' ./configure' ' --build=i686-redhat-linux-gnu' ' --host=i686-redhat-linux-gnu' ' --target=i386-redhat-linux-gnu' ' --program-prefix=' ' --prefix=/usr' ' --exec-prefix=/usr' ' --bindir=/usr/bin' ' --sbindir=/usr/sbin' ' --sysconfdir=/etc' ' --datadir=/usr/share' ' --includedir=/usr/include' ' --libdir=/usr/lib' ' --libexecdir=/usr/libexec' ' --localstatedir=/var' ' --sharedstatedir=/usr/com' ' --mandir=/usr/share/man' ' --infodir=/usr/share/info' ' --cache-file=../config.cache' ' --with-libdir=lib' ' --with-config-file-path=/etc' ' --with-config-file-scan-dir=/etc/php.d' ' --disable-debug' ' --with-pic' ' --disable-rpath' ' --without-pear' ' --with-bz2' ' --with-exec-dir=/usr/bin' ' --with-freetype-dir=/usr' ' --with-png-dir=/usr' ' --with-xpm-dir=/usr' ' --enable-gd-native-ttf' ' --with-t1lib=/usr' ' --without-gdbm' ' --with-gettext' ' --with-gmp' ' --with-iconv' ' --with-jpeg-dir=/usr' ' --with-openssl' ' --with-pcre-regex' ' --with-zlib' ' --with-layout=GNU' ' --enable-exif' ' --enable-ftp' ' --enable-magic-quotes' ' --enable-sockets' ' --enable-sysvsem' ' --enable-sysvshm' ' --enable-sysvmsg' ' --with-kerberos' ' --enable-ucd-snmp-hack' ' --enable-shmop' ' --enable-calendar' ' --without-mime-magic' ' --without-sqlite' ' --with-libxml-dir=/usr' ' --with-xml' ' --with-system-tzdata' ' --with-apxs2=/usr/sbin/apxs'

OpenStep and Solaris

OpenStep and Solaris A White Paper A Sun Microsystems, Inc. Business 2550 Garcia Avenue Mountain View, CA 94043 TM U.S.A. 1994 Sun Microsystems, Inc., NeXT Computer, Inc. Sunsoft 2550 Garcia Avenue, Mountain View, California 94043-1100 U.S.A. NeXT Computer, Inc. 900 Chesapeake Drive, Redwood City, California 94063 U.S.A. NEXTSTEP Release 3 Copyright 1988-1994 NeXT Computer, Inc. All rights reserved. [6453.00] This product and related documentation are protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or related documentation may be reproduced in any form by any means without prior written authorization of NeXT, Sun and their licensors, if any. Portions of this product may be derived from the UNIX® and Berkeley 4.3 BSD systems, licensed from UNIX System Laboratories, Inc. and the University of California, respectively. Third-party font software in this product is protected by copyright and licensed from NeXT’s Font Suppliers. RESTRICTED RIGHTS LEGEND: Use, duplication, or disclosure by the United States Government is subject to the restrictions set forth in DFARS 252.227-7013 (c)(1)(ii) and FAR 52.227-19. The product described in this publication may be protected by one or more U.S. patents, foreign patents, or pending applications. TRADEMARKS Sun, Sun Microsystems, the Sun logo, SMCC, the SMCC logo, SunSoft, the SunSoft logo, Solaris, SunOS, OpenWindows, DeskSet, ONC, NFS, NetISAM, and ToolTalk are trademarks or registered trademarks of Sun Microsystems, Inc. NeXT, the NeXT logo, NEXTSTEP, the NEXTSTEP logo, OpenStep, NEXTSTEP Developer, ObjectWare, Portable Distributed Objects, and PDO are trademarks or registered trademarks of NeXT Computer, Inc. -

RESTful or RESTless – Current State of Today's Top Web APIs

RESTful or RESTless – Current State of Today’s Top Web APIs Frederik Bülthoff, Maria Maleshkova AIFB, Karlsruhe Institute of Technology (KIT), Germany frederik.buelthoff@student.kit.edu, [email protected] Abstract Recent developments in the world of services on the Web show that both the number of available Web APIs as well as the applications built on top is constantly increasing. This trend is commonly attributed to the wide adoption of the REST architectural principles [1]. Still, the development of Web APIs is rather autonomous and it is up to the providers to decide how to implement, expose and describe the Web APIs. The individual implementations are then commonly documented in textual form as part of a webpage, showing a wide variety in terms of content, structure and level of detail. As a result, client application developers are forced to manually process and interpret the documentation. Before we can achieve a higher level of automation and can make any significant improvement to current practices and technologies, we need to reach a deeper understanding of their similarities and differences. Therefore, in this paper we present a thorough analysis of the most popular Web APIs through the examination of their documentation. We provide conclusions about common description forms, output types, usage of API parameters, invocation support, level of reusability, API granularity and authentication details. The collected data builds a solid foundation for identifying deficiencies and can be used as a basis for devising common standards and guidelines for Web API development. arXiv:1902.10514v1 [cs.SE] 20 Feb 2019 1 Introduction Recent developments in the world of services on the Web show that both the number of available Web APIs as well as the applications built on top is constantly increasing.

Cyber Hygiene Sample Report

ASSESSMENT SUMMARY Cyber Hygiene Assessment. Number: CYHYNONE. Date: April 2, 2018. Cyber Hygiene Assessment, Sample Organization. Contents: 1 How To Use This Report; 1.1 SAMPLE Points of Contact; 2 Report Card; 3 Executive Summary; 4 Sub-Organization Summary; 5 Methodology; 5.1 Background; 5.2 Process; 6 Approximate Host Locations; 7 Vulnerability Scan Results; 8 Results Trending; 9 Conclusion. Appendices: Appendix A Vulnerability Summary; Appendix B Vulnerability Changes Since Last Report; B.1 Mitigated Vulnerabilities; B.2 New Vulnerabilities Detected; B.3 Re-Detected (Previously-Mitigated) Vulnerabilities; B.4 Recently-Detected Vulnerabilities; Appendix C Detailed Findings and Recommended Mitigations by Vulnerability; Appendix D Critical and High Vulnerability Mitigations by IP Address; Appendix E False Positive Findings; E.1 Expiring Soon False Positive Findings; E.2 All False Positive Findings; Appendix F Frequently Asked Questions; Appendix G Attachments; Appendix H Glossary and Acronyms. List of Figures: 1 Top Vulnerabilities by Occurrence

Adobe ColdFusion: Review the ColdFusion Product Editions, System Requirements, and Other High-Level Considerations

Installing ADOBE® COLDFUSION RELEASE. Legal notices: For legal notices, see http://help.adobe.com/en_US/legalnotices/index.html. Last updated 2/17/2016. Contents: Understanding ColdFusion Server Profiles; Preparing to Install ColdFusion; Installing the Server Configuration; Installing the JEE Configuration; Installing ColdFusion Express; Installing Integrated Technologies; Configuring your System; Troubleshooting installation issues. Understanding ColdFusion Server Profiles: To develop and deploy ColdFusion applications, you can use one of the multiple server profiles available when you install ColdFusion. The ColdFusion installer allows you to install the ColdFusion Server in the following profiles (modes): 1 Development profile 2 Production profile 3 Production profile (secure). Depending on the type of profile selected during the installation, the server will be automatically configured with the appropriate settings. Choose the right profile in the installer: Once you install the Server using a particular profile, you can't change the profile later. The following table shows the difference between the 3 supported Server profiles:

| Feature | Development Profile | Production Profile | Production Profile (Secure) |
| --- | --- | --- | --- |
| Support for all types of debugging | Yes | No | No |
| Support for remote inspection | Yes | No | No |
| Support for strict enforcement of complex passwords | No | Yes | Yes |
| Support for remote start/stop | Yes | No | No |
| Support for Weinre and other bundled servers (For instance, Node.js) | Yes | No | No |
| Support for enabling unused servlets | Yes | No | No |
| Support for Secure Profile | No | No | Yes |

Depending upon the option selected, a few settings will be pre-configured in the Administrator.

Using Perl with WDDX Table of Contents

By icarus. This article copyright Melonfire 2000−2002. All rights reserved. Using Perl With WDDX. Table of Contents: Speaking In Tongues; The Big Picture; Packet Sniffer; Boyz 'N The Hood; All Mixed Up; Flying Toasters And Dancing Knives; Different Strokes; This Way Out. Speaking In Tongues: As a Web developer, you probably already know how frustrating it is to get applications written in different languages to talk nice to

PHP Optimization Using Hip Hop Virtual Machine Chaitali Tambe, Pramod Pawar, Dashrath Mane Vivekanand Education Society Institute of Technology, Chembur

International Journal of Advanced Research in Computer Engineering & Technology (IJARCET) Volume 4 Issue 6, June 2015 PHP Optimization Using Hip Hop Virtual Machine Chaitali Tambe, Pramod Pawar, Dashrath Mane Vivekanand education society institute of technology, Chembur Abstract— HipHop compiler is PHP based compiler created by facebook in order to optimize the php code and save resources on the facebook server. HPHPc translates the php code into executable c++ code. Later facebook realized that HPHPc had some snags which included resource consumption and parallel maintenance of HPHP and HPHPi. In order to overcome the drawbacks of HPHPc HipHop virtual machine was developed in 2011 which is a process virtual machine based on just-in-time (JIT) compilation, serving as an execution engine for PHP and Hack programming languages. HHVM provides complete support for the entire PHP language. This paper shows how HHVM is superior to other compilers like PHP, PHP 7 and how various framework uses HHVM to optimize php. In addition the lock-down approach shows the behavior of each framework with hhvm and show how performance optimization can be obtained for heavy websites. [...] facebook.com. PHP applications that were developed using HipHop terminology resulted in increased speed of application. Several drawbacks of standalone php applications were eliminated by using HPHPc, one of which was performance. A. Limitation of HPHPc: HPHPc did not fully support the PHP language, including the create_function() and eval() constructs. It involved a specific time and resource consuming deployment process that required a bigger than 1 GB binary to be compiled and distributed to many servers in short orders. In addition, maintaining HPHPc and HPHPi in

Distributed Object Computing (DOC) Security: Paradigms and Strategies

MP 98B MITRE PRODUCT. Distributed Object Computing (DOC) Security: Paradigms and Strategies. November 1998. Deborah J. Bodeau, Charles M. Schmidt, Vipin Swarup, F. Javier Thayer. © 1998 The MITRE Corporation. MITRE Center for Integrated Intelligence Systems, Bedford, Massachusetts. Table of Contents: 1 Introduction; 1.1 Object-Related Concepts and Terminology; 1.2 Security Concerns; 1.3 Interoperability Concerns; 1.4 Frameworks for Understanding Distributed Object Computing and Security; 2 Characterization and Comparison Strategies; 2.1 Characterization Framework; 2.2 Object-Related Concepts in Different DOC Paradigms; 2.3 High-Level Comparison; 3 CORBA; 3.1 CORBA Motivation and Background; 3.1.1 CORBA Object Model; 3.1.2 Architectural Overview; 3.1.3 Security Services in the Architecture; 3.2 Technical Perspective on CORBA at the Conceptual Stratum; 3.2.1 CORBA Object Model; 3.3 Technical Perspective on CORBA at the Architectural Stratum; 3.3.1 Security Domains in CORBA; 3.3.2 The CORBA Threat-Mitigation Model; 3.4 Characterizing CORBA-Compliant Architectures, Systems, and Products; 3.4.1 Conceptual Stratum; 3.4.2 Architectural Stratum; 3.4.3 Implementation Stratum; 4 COM, DCOM, and COM+; 4.1 COM Motivation and Background; 4.1.1 Architectural Overview; 4.1.2 Security Services in the Architecture; 4.2 Technical Perspective on COM at the Conceptual Stratum; 4.3 Technical Perspective on COM at the Architectural Stratum; 4.3.1 Security Services; 4.3.2 Processing Overview