Field of View Podcast with Joseph Artuso

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Hakuin on Kensho: The Four Ways of Knowing / Edited with Commentary by Albert Low. 1st ed.

ABOUT THE BOOK Kensho is the Zen experience of waking up to one’s own true nature, of understanding oneself to be not different from the Buddha-nature that pervades all existence. The Japanese Zen Master Hakuin (1689–1769) considered the experience to be essential. In his autobiography he says: “Anyone who would call himself a member of the Zen family must first achieve kensho-realization of the Buddha’s way. If a person who has not achieved kensho says he is a follower of Zen, he is an outrageous fraud. A swindler pure and simple.” Hakuin’s short text on kensho, “Four Ways of Knowing of an Awakened Person,” is a little-known Zen classic. The “four ways” he describes include the way of knowing of the Great Perfect Mirror, the way of knowing equality, the way of knowing by differentiation, and the way of the perfection of action. Rather than simply being methods for “checking” for enlightenment in oneself, these ways ultimately exemplify Zen practice. Albert Low has provided careful, line-by-line commentary for the text that illuminates its profound wisdom and makes it an inspiration for deeper spiritual practice. ALBERT LOW holds degrees in philosophy and psychology, and was for many years a management consultant, lecturing widely on organizational dynamics. He studied Zen under Roshi Philip Kapleau, author of The Three Pillars of Zen, receiving transmission as a teacher in 1986. He is currently director and guiding teacher of the Montreal Zen Centre. He is the author of several books, including Zen and Creative Management and The Iron Cow of Zen. -

Whittaker, Jim RFK #1

Jim Whittaker Oral History Interview – RFK #1, 4/25/1969 Administrative Information Creator: Jim Whittaker Interviewer: Roberta W. Greene Date of Interview: April 25, 1969 Place of Interview: Hickory Hill, McLean, Virginia Length: 21 pp. Biographical Note Whittaker, Jim; Friend, associate, Robert F. Kennedy, 1965-1968; expedition leader, National Geographic climb, Mt. Kennedy, Yukon, Canada, 1965; campaign worker, Robert F. Kennedy for President, 1968. Whittaker discusses the climb he led up Mount Kennedy which included Senator Robert F. Kennedy [RFK], the formation of their relationship due to this trip, and his thoughts on RFK’s personality, among other issues. Access Restrictions No restrictions. Usage Restrictions According to the deed of gift signed January 8, 1991, copyright of these materials has been assigned to the United States Government. Users of these materials are advised to determine the copyright status of any document from which they wish to publish. Copyright The copyright law of the United States (Title 17, United States Code) governs the making of photocopies or other reproductions of copyrighted material. Under certain conditions specified in the law, libraries and archives are authorized to furnish a photocopy or other reproduction. One of these specified conditions is that the photocopy or reproduction is not to be “used for any purpose other than private study, scholarship, or research.” If a user makes a request for, or later uses, a photocopy or reproduction for purposes in excess of “fair use,” that user may be liable for copyright infringement. This institution reserves the right to refuse to accept a copying order if, in its judgment, fulfillment of the order would involve violation of copyright law. -

The Wonders of the Invisible World. Observations As Well Historical As Theological, Upon the Nature, the Number, and the Operations of the Devils (1693)

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln Electronic Texts in American Studies Libraries at University of Nebraska-Lincoln 1693 The Wonders of the Invisible World. Observations as Well Historical as Theological, upon the Nature, the Number, and the Operations of the Devils (1693) Cotton Mather Second Church (Congregational), Boston Reiner Smolinski, Editor Georgia State University, [email protected] Follow this and additional works at: https://digitalcommons.unl.edu/etas Part of the American Studies Commons Mather, Cotton and Smolinski, Reiner, Editor, "The Wonders of the Invisible World. Observations as Well Historical as Theological, upon the Nature, the Number, and the Operations of the Devils (1693)" (1693). Electronic Texts in American Studies. 19. https://digitalcommons.unl.edu/etas/19 This Article is brought to you for free and open access by the Libraries at University of Nebraska-Lincoln at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Electronic Texts in American Studies by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. The Wonders of the Invisible World: Observations as Well Historical as Theological, upon the Nature, the Number, and the Operations of the Devils [1693]. COTTON MATHER (1662/3–1727/8). The eldest son of New England’s leading divine, Increase Mather and grandson of the colony’s spiritual founders Richard Mather and John Cotton, Mather was born in Boston, educated at Harvard (B. A. 1678; M. A. 1681), and received an honorary Doctor of Divinity degree from Glasgow University (1710). As pastor of Boston’s Second Church (Congregational), he came into the political limelight during America’s version of the Glorious Revolution, when Bostonians deposed their royal governor, Sir Edmund Andros (April 1689). -

Idioms-And-Expressions.Pdf

Idioms and Expressions by David Holmes A method for learning and remembering idioms and expressions I wrote this model as a teaching device during the time I was working in Bangkok, Thailand, as a legal editor and language consultant, with one of the Big Four Legal and Tax companies, KPMG (during my afternoon job) after teaching at the university. When I had no legal documents to edit and no individual advising to do (which was quite frequently) I would sit at my desk (like some old character out of a Charles Dickens novel) and prepare language materials to be used for helping professionals who had learned English as a second language, for even up to fifteen years in school, but who were still unable to follow a movie in English, understand the World News on TV, or converse in a colloquial style, because they’d never had a chance to hear and learn common, everyday expressions such as, “It’s a done deal!” or “Drop whatever you’re doing.” Because misunderstandings of such idioms and expressions frequently caused miscommunication between our management teams and foreign clients, I was asked to try to assist. I am happy to be able to share the materials that follow, such as they are, in the hope that they may be of some use and benefit to others. The simple teaching device I used was three-fold: 1. Make a note of an idiom/expression 2. Define and explain it in understandable words (including synonyms). 3. Give at least three sample sentences to illustrate how the expression is used in context. -

About Autism Script

Slide 1. What will I learn in this Autism Navigator tool? Welcome to Autism Navigator, a unique collection of web-based tools that uses extensive video footage to bridge the gap between science and community practice. You will have the opportunity to explore three topics about autism. You will learn about: • the core diagnostic features of autism, • the critical importance of early detection and early intervention, and • current information on prevalence and causes of autism. You will also have the opportunity to access some of the innovative features of Autism Navigator. A slide index is located on the left side of the bottom toolbar. A slide viewer is located on the right side of the bottom toolbar. These can be used to navigate easily to specific slides. For a self-guided tour to learn how to navigate Autism Navigator and for tech support, go to Help in the top bar. What are the diagnostic features of autism? Slide 2. Video: How is autism impacting us now? Child: Gingerbread houses…Cornucopia… Autism can be obvious or subtle. A child who can read by three, but can’t play peek-a-boo… Child: Macadamia pineapple tart… One who knows everything about trains and dinosaurs and gets upset if you ask about anything else. One who may never utter a spoken word, but rather use pictures or signing to be understood. The signs are as varied as the number of children affected. It affects all children. Boys 4 times more often than girls. There is no known biological marker or medical test to help diagnose it. -

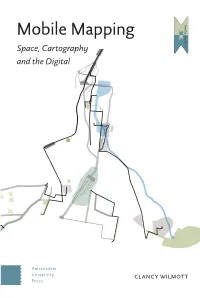

Mobile Mapping (MediaMatters)

MediaMatters. Mobile Mapping: Space, Cartography and the Digital. Clancy Wilmott. Amsterdam University Press. MediaMatters is an international book series published by Amsterdam University Press on current debates about media technology and its extended practices (cultural, social, political, spatial, aesthetic, artistic). The series focuses on critical analysis and theory, exploring the entanglements of materiality and performativity in ‘old’ and ‘new’ media and seeks contributions that engage with today’s (digital) media culture. For more information about the series see: www.aup.nl The publication of this book is made possible by a grant from the European Research Council (ERC) under the European Community’s 7th Framework program (FP7/2007-2013)/ ERC Grant Number: 283464 Cover illustration: Clancy Wilmott Cover design: Suzan Beijer Lay-out: Crius Group, Hulshout ISBN 978 94 6298 453 0 e-ISBN 978 90 4853 521 7 DOI 10.5117/9789462984530 NUR 670 © C. Wilmott / Amsterdam University Press B.V., Amsterdam 2020 All rights reserved. Without limiting the rights under copyright reserved above, no part of this book may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise) without the written permission of both the copyright owner and the author of the book. Every effort has been made to obtain permission to use all copyrighted illustrations reproduced in this book. Nonetheless, whosoever believes to have rights to this material is advised to contact the publisher. Table of Contents Acknowledgements Part 1 – Maps, Mappers, Mapping 1. -

Cestoni, Angelo

Interview with Angelo Cestoni April 13th, 2004 Pittsburgh, PA Interviewer: James Zanella Transcriber: Daniele Talone [Begin Tape 1, Side A] AC: My dad lived there. And he brought me here; they put me in the army in 1939. He made the proper papers and I came over in 1939. I came over like a package; I had a tag on me. JZ: Really? AC: So I came to New York, and there were two people that took care of me, took me off the ship. But I didn’t have a visa, so I had to go through the regular check-out line. I didn’t have any American money, and my dad didn’t come up to get me. He lived up in Ford City. There were two people, a man and a lady that took me, and took me to a hotel. They wired my dad for the money, the train money to come to Pittsburgh. So that took about three days for the money to get there. This was 1939 now. Finally the money came and these two people come and put me on the train to Pittsburgh. And you should have seen me, trying to ask some of those people I was on the coach with, to tell me to get off in Pittsburgh, but I could not understand. I never spoke English, I never heard a word. JZ: So what year was this? AC: 1939. I got off the ship up in New York. So we pulled in to Greensburg, two o’clock in the morning. -

An Introductory Wisdom School with Cynthia Bourgeault Course Transcript & Companion Guide

An Introductory Wisdom School with Cynthia Bourgeault Course Transcript & Companion Guide DAY 2 OF WISDOM SCHOOL Day 2.1 Morning Reading, Chant, Meditation & Body Prayer Day 2.2 Morning Teaching The Great Benedictine Monasticism* Day 2.3 Morning Conscious Practical Work Day 2.4 Afternoon Reading, Chant & Meditation (offered by co-leaders) Day 2.5 Afternoon Teaching The Gospel of Thomas: Logion 2 Day 2.6 Evening Questions & Response, Chant & Closing Meditation* © WisdomWayofKnowing.org An Introductory Wisdom School with Cynthia Bourgeault—Course Transcript & Companion Guide Notes: © WisdomWayofKnowing.org Day 2.1 Morning Reading, Chant, Meditation & Body Prayer [00:19] From The Rule of Saint Benedict, early 6th century: If we wish to reach eternal life, … then—while there is still time, while we are in this body and have time to accomplish all these things by the light of life—we must run and do now what will profit us forever. Therefore we intend to establish a school for the Lord’s service. In drawing up its regulations, we hope to set down nothing harsh, nothing burdensome. The good of all concerned, however, may prompt us to a little strictness in order to amend faults and to safeguard love. Do not be daunted immediately by fear and run away from the road that leads to salvation. It is bound to be narrow at the outset. But as we progress in this way of life and in faith, we shall run on the path of God’s commandments, our hearts overflowing with the inexpressible delight of love. —Prologue 42-49, from The Rule of St. -

NASA Chat: Stay 'Up All Night' to Watch the Perseids! Experts Dr. Bill Cooke, Danielle Moser and Rhiannon Blaauw August

NASA Chat: Stay ‘Up All Night’ to Watch the Perseids! Experts Dr. Bill Cooke, Danielle Moser and Rhiannon Blaauw August 11, 2012 _____________________________________________________________________________________ Moderator Brooke: Good evening, everyone, and thanks for joining us tonight to watch the 2012 Perseid meteor shower. Your chat experts tonight are Bill Cooke, Danielle Moser, and Rhiannon Blaauw from NASA's Marshall Space Flight Center in Huntsville, Ala. This is a moderated chat, and we expect a lot of questions, so please be patient -- it may take a few minutes for the experts to get to your question. So here we go -- let's talk Perseids! Boady_N_Oklahoma: Good evening!! Rhiannon: Welcome! We look forward to answering your questions tonight! StephenAbner: I live in Berea, Kentucky. Can you please tell me which part of the sky I should focus on for best viewing? Bill: Lie on your back and look straight up. Avoid looking at the Moon. Victoria_C.: Because we pass through the cloud every year, does the amount of meteors decline in time, too? Rhiannon: The meteoroid stream is replenished as the particles are travelling around the orbit of the stream. We will have Perseids for a long time still. Jerrte: When will the Perseids end? What year will be the last to view? Bill: The Perseids will be around for the next few centuries. Victoria_C.: Why do meteors fall more on one night than different nights? Bill: On certain nights, the Earth passes closest to the debris left behind by the comet. When this happens, we get a meteor shower with higher rates. Boady_N_Oklahoma: I'm in central rural oklahoma with crystal clear skies. -

Democratic Culture That Applauds Moves That Attack Republicans and Defend Democratic Values, but Which Come Back to Haunt You in Ratings

DAVID LENEFSKY, ATTORNEY & COUNSELOR AT LAW, 277 PARK AVENUE - 47TH FL., NEW YORK, N.Y. 10172 SBtlC LENEFSKY (1907-1981) TEL (212) 829-0090 CEORGBANNE O'KEEWC FAX (212) 829-4010 February 8, 1999 Joel J. Roessner, Esq. Federal Election Commission 999 E Street, N.W. Washington, D.C. 20463 Dear Mr. Roessner: Please find enclosed the complete set of Presidential Aada$ prepared by Richard Morris. DL/j mc Enclosures UPSTATE NEW YORK OFFICE WEST SHOKAN, N.Y. 12494 TEL (914) 657-8690 FAX (914) 657-2995 [email protected]

I. TRACKING - WE CONTINUE TO WIN JULY ... LEAD UP 1 TO 16 PTS A. Lead has grown to 52-36 (up from 52-37 last week) 1. In three way race lead is also 16 (48-32-15) B. Clinton Ratings not changed 1. Job Rating at 60 (down 1) 2. Favorability steady at 59 3. Re-elect at 51% (up 2) C. Dole favorability down to 49-47 (from 50-46) 1. Continued decline: 5% July 2 -- 53%; July 8 -- 50%; July 17 -- 49% 2. Negatives are working 3. Dole’s favorability is much less intense than ours a. In three way: Dole gets 60% of his favorables; Clinton gets 75% of his favorables b. In a two way: Dole gets 67% of his favorables; Clinton gets 81% of his favorables 4. Dole’s unfavorability has same intensity as our unfavorability: Dole loses 95% of his unfavorable in a 2 way; Clinton loses 91% of his unfavorable in a 2 way 5. -

Kukasch, Shields Clash at BRSA Meeting

Executive director's performance scored. Kukasch, Shields clash at BRSA meeting. By Audrey Kratz. UNION BEACH Bayshore Regional Sewerage Authority Commissioner Herbert Kukasch released a 71-page document Monday night which accused the utility’s executive director, Francis X. Shields, of failing to do his job properly. “Mr. Shields has not performed adequately,” Kukasch said in a letter to the authority and its member municipalities. “Despite the additional personnel and expenditures, the plant was in poorer shape after two years of

Democratic assemblymen or senators to help solve the plant’s problems. “He prefers to use International Flavors and Fragrances and Keansburg as the scapegoats to divert attention from his own poor performance,” Kukasch said. “Not all the problems at the BRSA relate directly to the occasional heavy solids from IFF.” “One thing is certain,” he added. “Mr. Shields never will require someone to make excuses for him; he is doing a consummate job by himself.”

maintenance at the plant. BRSA Engineer Frank Williamson credited the reorganization with helping to improve the plant’s efficiency. For the first time in several months, he said, the plant fulfilled its federal environmental requirements for sewage treatment. The plant met its full requirement to remove 85 percent of suspended solids from the effluent and 91 percent of biochemical oxygen demand bacteria.

obscure his lack of satisfactory performance in operating the BRSA and to prevent further scrutiny of his performance.” In the statement he released Monday, Kukasch answered Shields with excerpts from newspaper articles, utility progress reports, memos to former director Paul Smith, letters from the DEP, and letters from the authority’s engineering consultants. He refuted Shields’ charge of political motivation by saying that he had worked well -

Abraham Akau Kualoa Ranch, O`ahu

Abraham Akau Kualoa Ranch, O`ahu As Kualoa Ranch hand and foreman for over 45 years, Uncle Abraham can still ride, rope, herd, brand, and handle horses and cattle better than anyone on the place. Even though he claims to be retired, everyone knows it isn’t true because a true Paniolo never stops doing what he does best. Uncle Manny was first hired at age 15 by Parker Ranch to train wild horses. “I got into that breaking pen and I just loved it. The wilder they came, the better,” he says. The rough and tumble cowboy later broke his back in five places when he fell from his horse while going after a runaway steer. The late Francis Morgan, who established Kualoa Ranch, saw Abe’s special qualities when he arrived there in 1953. Mr. Morgan said, “Of all the things I’ve ever done for Kualoa Ranch, the best thing was to hire Abraham Akau.” His skill and knowledge, decades of dedicated service, love for and patience with horses as well as people, and the respect people hold for him as a cowboy and a teacher make him a true Paniolo. Series 1, Tape 6 ORAL HISTORY INTERVIEW with Abraham Akau, Sr. (AA) on October 31, 2000 at Kualoa Ranch BY: Anna Loomis (AL) AL: This interview is with Abraham Akau on October 31, 2000 at Kualoa Ranch. The interviewer is Anna Loomis. AL: Maybe you can just start by telling me when and where you were born. AA: I was born on Waimea, Big Island. Went to school over there.