Praise for Science on the Verge



Recommended publications


Abstracts-Significant Digits

SIGNIFICANT DIGITS: Responsible Use of Quantitative Information. Brussels, Fondation Universitaire, 9-10 June 2015. Joint Research Centre. Preamble: We live in an age when good policies are assumed to be evidence-based. And that evidential base is assumed to be at its best when expressed in numbers. The digital information may be derived from quantitative data organised in statistics, or from qualitative data organised in indicators. Either way, evidence in digital form provides the accepted foundation of policy arguments over a very broad range of issues. In the policy realm there are frequent debates over particular policy issues and their associated evidence. But only rarely is the nature of the evidence called into question. Such a faith in numbers can be dangerous. Policies in economic and financial policy, based on numbers whose significance was less than assumed, recently turned out to be quite disastrously wrong. Other examples can easily be cited. The decades-long period of blaming dietary fats for heart disease, rather than sugar, is a notable recent case. We are concerned here with the systemic problem: whether we are regularly placing too much of an evidentiary burden on quantitative sciences whose strength and maturity are inherently inadequate. The harm that has been done to those sciences, as well as to the policy process, should be recognised. Only in that way can future errors be avoided. In this workshop we will review a seminal essay by Andrea Saltelli and Mario Giampietro, ‘The Fallacy of Evidence Based Policy’. That paper contains positive recommendations for the development of a responsible quantification. -

Week & Date Syllabus for ASTR 1200

Week & Date Syllabus for ASTR 1200 Doug Duncan, Fall 2018 *** be sure to read "About this Course" on the class Desire 2 Learn website*** The Mastering Astronomy Course Code is there, under "Mastering Astronomy" Do assigned readings BEFORE TUESDAYS - there will be clicker questions on the reading. Reading assignments are pink on this syllabus. Homework will usually be assigned on Tuesday, due the following Tuesday Read the textbook Strategically! Focus on answering the "Learning Goals." Week 1 Introductions. The amazing age of discovery in which we live. Aug. 28 The sky and its motions. My goals for the course. What would you like to learn about? What do I expect from you? Good news/bad news. Science vs. stories about science. This is a "student centered" class. I'm used to talking (Public Radio career). Here you talk too. Whitewater river analogy: If you don't want to paddle, don't get in the boat. Grading. Cheating. Clickers and why we use them. Homework out/in Fridays. Tutorials. Challenges. How to do well in the class. [reading clicker quiz] Why employers love to hire graduates of this class! Read bottom syllabus; "Statement of Expectations" for Arts & Sciences Classes. Policy on laptops and cell phones. Registrations: Clickers, Mastering Astronomy. Highlights of what the term will cover -- a bargain tour of the universe Bloom's Taxonomy of Learning What can we observe about the stars? Magnitude and color. Visualizing the Celestial Sphere - extend Earth's poles and equator to infinity! Visualizing the local sky: altitude and azimuth Homework #1, How to Use Mastering Astronomy, due Sept. -

Supersizing Daubert Science for Litigation and Its Implications for Legal Practice and Scientific Research

Volume 52 Issue 4 Article 6 2007 Supersizing Daubert Science for Litigation and Its Implications for Legal Practice and Scientific Research Gary Edmond Follow this and additional works at: https://digitalcommons.law.villanova.edu/vlr Part of the Evidence Commons Recommended Citation Gary Edmond, Supersizing Daubert Science for Litigation and Its Implications for Legal Practice and Scientific Research, 52 Vill. L. Rev. 857 (2007). Available at: https://digitalcommons.law.villanova.edu/vlr/vol52/iss4/6 This Symposia is brought to you for free and open access by Villanova University Charles Widger School of Law Digital Repository. It has been accepted for inclusion in Villanova Law Review by an authorized editor of Villanova University Charles Widger School of Law Digital Repository. SUPERSIZING DAUBERT SCIENCE FOR LITIGATION AND ITS IMPLICATIONS FOR LEGAL PRACTICE AND SCIENTIFIC RESEARCH GARY EDMOND* I. INTRODUCTION: SCIENCE FOR LITIGATION AND THE EXCLUSIONARY ETHOS THIS essay is about science for litigation, its implications and limitations.1 "Science for litigation" is expert evidence specifically developed for litigation-extant, pending or anticipated.2 Although, as we shall see, federal judges have demonstrated a curious tendency to restrict the concept to expert evidence associated with litigation which is underway or pending. Ordinarily, expert evidence developed for, or tailored to, litigation carries an epistemic stigma. Conventionally, the fact that expert evidence is oriented toward a specific goal is thought to impair the independence of the experts and the reliability of their evidence. This often manifests in concerns that evidence developed for litigation is inconsistent with scientific knowledge and the opinions of experts not embroiled in litigation. -

Tripadvisor Appoints Lindsay Nelson As President of the Company's Core Experience Business Unit

TripAdvisor Appoints Lindsay Nelson as President of the Company's Core Experience Business Unit October 25, 2018 A recognized leader in digital media, new CoreX president will oversee the TripAdvisor brand, lead content development and scale revenue generating consumer products and features that enhance the travel journey NEEDHAM, Mass., Oct. 25, 2018 /PRNewswire/ -- TripAdvisor, the world's largest travel site*, today announced that Lindsay Nelson will join the company as president of TripAdvisor's Core Experience (CoreX) business unit, effective October 30, 2018. In this role, Nelson will oversee the global TripAdvisor platform and brand, helping the nearly half a billion customers that visit TripAdvisor monthly have a better and more inspired travel planning experience. "I'm really excited to have Lindsay join TripAdvisor as the president of Core Experience and welcome her to the TripAdvisor management team," said Stephen Kaufer, CEO and president, TripAdvisor, Inc. "Lindsay is an accomplished executive with an incredible track record of scaling media businesses without sacrificing the integrity of the content important to users, and her skill set will be invaluable as we continue to maintain and grow TripAdvisor's position as a global leader in travel." "When we created the CoreX business unit earlier this year, we were searching for a leader to be the guardian of the traveler's journey across all our offerings – accommodations, air, restaurants and experiences. After a thorough search, we are confident that Lindsay is the right executive with the experience and know-how to enhance the TripAdvisor brand as we evolve to become a more social and personalized offering for our community," added Kaufer. -

The Rise and Fall of the Wessely School

THE RISE AND FALL OF THE WESSELY SCHOOL David F Marks* Independent Researcher Arles, Bouches-du-Rhône, Provence-Alpes-Côte d'Azur, 13200, France *Address for correspondence: [email protected] ABSTRACT The Wessely School’s (WS) approach to medically unexplained symptoms, myalgic encephalomyelitis and chronic fatigue syndrome (MUS/MECFS) is critically reviewed using scientific criteria. Based on the ‘Biopsychosocial Model’, the WS proposes that patients’ dysfunctional beliefs, deconditioning and attentional biases cause illness, disrupt therapies, and lead to preventable deaths. The evidence reviewed here suggests that none of the WS hypotheses is empirically supported. The lack of robust supportive evidence, fallacious causal assumptions, inappropriate and harmful therapies, broken scientific principles, repeated methodological flaws and unwillingness to share data all give the appearance of cargo cult science. The WS approach needs to be replaced by an evidence-based, biologically-grounded, scientific approach to MUS/MECFS. Sickness doesn’t terrify me and death doesn’t terrify me. What terrifies me is that you can disappear because someone is telling the wrong story about you. I feel like that’s what happened to all of us who are living this. And I remember thinking that nobody’s coming to look for me because no one even knows that I went missing. Jennifer Brea, Unrest, 2017.1 1. INTRODUCTION This review concerns a story filled with drama, pathos and tragedy. It is relevant to millions of seriously ill people with conditions that have no known cause or cure. -

A Day in the Life of Your Data

A Day in the Life of Your Data A Father-Daughter Day at the Playground April, 2021 “I believe people are smart and some people want to share more data than other people do. Ask them. Ask them every time. Make them tell you to stop asking them if they get tired of your asking them. Let them know precisely what you’re going to do with their data.” Steve Jobs All Things Digital Conference, 2010 Over the past decade, a large and opaque industry has been amassing increasing amounts of personal data.1,2 A complex ecosystem of websites, apps, social media companies, data brokers, and ad tech firms track users online and offline, harvesting their personal data. This data is pieced together, shared, aggregated, and used in real-time auctions, fueling a $227 billion-a-year industry.1 This occurs every day, as people go about their daily lives, often without their knowledge or permission.3,4 Let’s take a look at what this industry is able to learn about a father and daughter during an otherwise pleasant day at the park. Did you know? Trackers are embedded in apps you use every day: the average app has 6 trackers.3 The majority of popular Android and iOS apps have embedded trackers.5,6,7 Trackers are often embedded in third-party code that helps developers build their apps. By including trackers, developers also allow third parties to collect and link data you have shared with them across different apps and with other data that has been collected about you. Data brokers collect and sell, license, or otherwise disclose to third parties the personal information of particular individuals with whom they do not have a direct relationship.3 -

Netflix and the Development of the Internet Television Network

Syracuse University SURFACE Dissertations - ALL SURFACE May 2016 Netflix and the Development of the Internet Television Network Laura Osur Syracuse University Follow this and additional works at: https://surface.syr.edu/etd Part of the Social and Behavioral Sciences Commons Recommended Citation Osur, Laura, "Netflix and the Development of the Internet Television Network" (2016). Dissertations - ALL. 448. https://surface.syr.edu/etd/448 This Dissertation is brought to you for free and open access by the SURFACE at SURFACE. It has been accepted for inclusion in Dissertations - ALL by an authorized administrator of SURFACE. For more information, please contact [email protected]. Abstract When Netflix launched in April 1998, Internet video was in its infancy. Eighteen years later, Netflix has developed into the first truly global Internet TV network. Many books have been written about the five broadcast networks – NBC, CBS, ABC, Fox, and the CW – and many about the major cable networks – HBO, CNN, MTV, Nickelodeon, just to name a few – and this is the fitting time to undertake a detailed analysis of how Netflix, as the preeminent Internet TV network, has come to be. This book, then, combines historical, industrial, and textual analysis to investigate, contextualize, and historicize Netflix's development as an Internet TV network. The book is split into four chapters. The first explores the ways in which Netflix's development during its early years as a DVD-by-mail company – 1998-2007, a period I am calling "Netflix as Rental Company" – laid the foundations for the company's future iterations and successes. During this period, Netflix adapted DVD distribution to the Internet, revolutionizing the way viewers receive, watch, and choose content, and built a brand reputation on consumer-centric innovation. -

How to Get Started with Zoom - the Verge

8/3/2020 How to get started with Zoom - The Verge (https://www.theverge.com/2020/3/31/21197215/how-to-zoom-free-account-get-started-register-sign-up-log-in-invite) HOW-TO REVIEWS TECH How to get started with Zoom You can make a free account right now By Jay Peters @jaypeters Mar 31, 2020, 2:51pm EDT If you buy something from a Verge link, Vox Media may earn a commission. See our ethics statement. Illustration by Alex Castro / The Verge Part of The Verge Guide to staying connected Before the pandemic, many companies were already using the videoconferencing app Zoom for business meetings, interviews, and other purposes. More recently, many individuals facing long days without contact with friends and family have moved to Zoom for face-to-face and group get-togethers. This is a quick guide for those who haven’t tried Zoom yet, featuring tips on how to get started using its free version. One thing to keep in mind: while one-to-one video calls can go as long as you want, any group calls on Zoom are limited to 40 minutes. If you want to have longer talks without interruption, you can either pay for Zoom’s Pro plan ($14.99 a month) or try an alternative videoconferencing app. (Note: there have been reports that the 40 minutes is sometimes extended; at least one staffer from The Verge found that an evening meeting with five friends was sent an extension when time started running out. However, there has been no official word of any change from Zoom.) HOW TO REGISTER FOR ZOOM The first thing to do, of course, is to register for the service. -

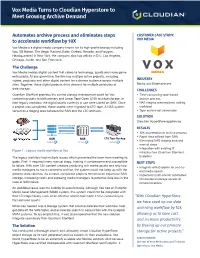

Vox Media Turns to Cloudian Hyperstore to Meet Growing Archive Demand

Vox Media Turns to Cloudian Hyperstore to Meet Growing Archive Demand: Automates archive process and eliminates steps to accelerate workflow by 10X. CUSTOMER CASE STUDY: VOX MEDIA. Vox Media is a digital media company known for its high-profile brands including Vox, SB Nation, The Verge, Racked, Eater, Curbed, Recode, and Polygon. Headquartered in New York, the company also has offices in D.C, Los Angeles, Chicago, Austin, and San Francisco. INDUSTRY: Media and Entertainment. CHALLENGES: • Time-consuming tape-based archive process • NAS staging area required, adding workload • Tape archive not searchable. SOLUTION: Cloudian HyperStore appliances. RESULTS: • 10X acceleration of archive process • Rapid data offload from SAN • Eliminated NAS staging area and manual steps • Integration with existing IT infrastructure (Quantum StorNext, Evolphin). The Challenge: Vox Media creates digital content that caters to technology, sports and video game enthusiasts. At any given time, the firm has multiple active projects, including videos, podcasts and other digital content for a diverse audience across multiple sites. Together, these digital projects drive demand for multiple petabytes of data storage. Quantum StorNext provides the central storage management point for Vox, connecting users to both primary and Linear Tape Open (LTO) archival storage. In their legacy workflow, the digital assets currently in use were stored on SAN. Once a project was completed, those assets were migrated to LTO tape. A NAS system served as a staging area between the SAN and the LTO archives. [Figure 1: Legacy media workflow at Vox. Diagram labels: PROJECT COMPLETE, PROJECT RETRIEVAL, SAN, NAS, LTO Tape Backup.] The legacy workflow had multiple issues which prevented the team from meeting its goals. -

Abstract Papers

Workshop on Philosophy & Engineering WPE2008 The Royal Academy of Engineering London November 10th-12th 2008 Supported by the Royal Academy of Engineering, Illinois Foundry for Innovation in Engineering Education (iFoundry), the British Academy, ASEE Ethics Division, the International Network for Engineering Studies, and the Society for Philosophy & Technology Co-Chairs: David E. Goldberg and Natasha McCarthy Deme Chairs: Igor Aleksander, W Richard Bowen, Joseph C. Pitt, Caroline Whitbeck Contents 1. Workshop Schedule p.2 2. Abstracts – plenary sessions p.5 3. Abstracts – contributed papers p.7 4. Abstracts – poster session p.110 1 Workshop Schedule Monday 10 November 2008 All Plenary sessions take place in F4, the main lecture room 9.00 – 9.30 Registration 9.30 – 9.45 Welcome and introduction of day’s theme(s) Taft Broome and Natasha McCarthy 9.45 – 10.45 Billy V. Koen: Toward a Philosophy of Engineering: An Engineer’s Perspective 10.45 – 11.15 Coffee break 11.15 – 12.45 Parallel session – submitted papers A. F1 Mikko Martela Esa Saarinen, Raimo P. Hämäläinen, Mikko Martela and Jukka Luoma: Systems Intelligence Thinking as Engineering Philosophy David Blockley: Integrating Hard and Soft Systems Maarten Frannsen and Bjørn Jespersen: From Nutcracking to Assisted Driving: Stratified Instrumental Systems and the Modelling of Complexity B. F4 Ton Monasso: Value-sensitive design methodology for information systems Ibo van de Poel: Conflicting values in engineering design and satisficing Rose Sturm and Albrecht Fritzsche: The dynamics of practical wisdom in IT-professions C. G1 Ed Harris: Engineering Ethics: From Preventative Ethics to Aspirational Ethics Bocong Li: The Structure and Bonds of Engineering Communities Priyan Dias: The Engineer’s Identity Crisis: Homo Faber vs. -

Keeping Faith with the Student-Athlete, a Solid Start and a New Beginning for a New Century

August 1999 In light of recent events in intercollegiate athletics, it seems particularly timely to offer this Internet version of the combined reports of the Knight Commission on Intercollegiate Athletics. Together with an Introduction, the combined reports detail the work and recommendations of a blue-ribbon panel convened in 1989 to recommend reforms in the governance of intercollegiate athletics. Three reports, published in 1991, 1992 and 1993, were bound in a print volume summarizing the recommendations as of September 1993. The reports were titled Keeping Faith with the Student-Athlete, A Solid Start and A New Beginning for a New Century. Knight Foundation dissolved the Commission in 1996, but not before the National Collegiate Athletic Association drastically overhauled its governance based on a structure “lifted chapter and verse,” according to a New York Times editorial, from the Commission's recommendations. 1 Introduction By Creed C. Black, President; CEO (1988-1998) In 1989, as a decade of highly visible scandals in college sports drew to a close, the trustees of the John S. and James L. Knight Foundation (then known as Knight Foundation) were concerned that athletics abuses threatened the very integrity of higher education. In October of that year, they created a commission on Intercollegiate Athletics and directed it to propose a reform agenda for college sports. As the trustees debated the wisdom of establishing such a commission and the many reasons advanced for doing so, one of them asked me, “What’s the down side of this?” “Worst case,” I responded, “is that we could spend two years and $2 million and wind up with nothing to show of it.” As it turned out, the time ultimately became more than three years and the cost $3 million. -

The Problem of Context in Quality Improvement

Perspectives on context The problem of context in quality improvement Professor Mary Dixon-Woods About the authors Mary Dixon-Woods is Professor of Medical Sociology and Wellcome Trust Senior Investigator in the SAPPHIRE group, Department of Health Sciences, University of Leicester, UK and Deputy Editor-in-Chief of BMJ Quality and Safety. She leads a large programme of research focused on patient safety and healthcare improvement, healthcare ethics, and methodological innovation in studying healthcare. Contents 1. Introduction 89 2. Context and causality 90 Realist evaluation 90 Theory building in the clinical sciences 91 What QI can learn from the clinical sciences? 92 3. Why the clinical science approach is not enough 93 Cargo cult quality improvement 94 The problem of describing QI interventions 94 The role of practical wisdom in getting QI to work 95 The role of practical wisdom in studying and understanding QI activities 97 4. The principal research questions relating to context 99 References 100 88 THE HEALTH FOUNDATION: PERSPECTIVES ON CONTEXT The problem of context in quality improvement 1. Introduction Though (formal) quality improvement in healthcare has only a brief history, it is history littered with examples of showpiece programmes that do not consistently manage to export their success once transplanted beyond the home soil of early iterations,1 or that demonstrate startling variability in their impact [...] disciplines, including politics,8,9 only latterly has the context sensitivity of many QI initiatives in healthcare become properly recognised.10,11 The challenge now is twofold: how to study interactions between contexts and interventions to develop a more credible science of quality improvement, and how to deal with contextual effects in implementing quality improvement interventions.