Beyond Trivial Counterfactual Generations with Diverse Valuable Explanations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

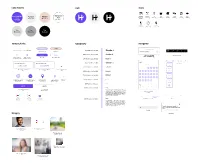

Color Palette Typography Header 1 Navigation Imagery Logo

Color Palette Logo Icons Background Brand/Highlight Highlight Highlight Dates Page/ Restaurant Bar Coffee Movies The Arts Music Sports /Text Date on date (location tag) (location tag) (location tag) (location tag) (location tag) (location tag) (location tag) /Text #EDEDED #F1D7CA plan page #6A42ED #FFFFFF Outdoors Time Location (location tag) (Date plan page) (Date plan page) Text Text Text #DDDDDD #AAAAAA #000000 Buttons/Links Typography Navigation Button Button Ready to go on a date? Open drop down menu Close drop down menu Source Serif Pro, s: 36, Bold Header 1 4 1 Inactive primary CTA Secondary CTA Enable “Set Up a Date” Feature Private until you both have enabled Button Button IBM Plex Sans, s: 30, Semi-Bold Header 2 Popup with the Enable a date Bottom navigation menu feature on the match chat page Inactive toggle state Active toggle state Primary CTA Tertiary CTA and Filters (enable setup feature) (enable setup feature) IBM Plex Sans, s: 21, Semi-Bold Header 3 Activity Price Rating April Source Serif Pro, s: 24, Bold Subheader 1 Who do you want to date? Who do you want to date? 
Recommended Filters (expanded) S M T W T F S Select a Match Erin Matthews New Spots IBM Plex Sans, s: 18, Semi-Bold Subheader 2 1 2 3 4 Upcoming Events Setup a Date Buttons (nothing Setup a Date Buttons (selection Close button (used for popout selected made) screens) Get a Drink IBM Plex Sans, s: 15, Semi-Bold Subheader 3 5 6 7 8 9 10 11 Get some Food 12 13 14 15 16 17 18 IBM Plex Sans, s: 24, Semi-Bold Button 1 See a Movie Add Add 19 20 21 22 23 24 25 Get Outside Add button for set up a date Add button for set up a date Location selector on Location selector on IBM Plex Sans, s: 13, Semi-Bold Button 2 Back button 26 27 28 29 30 Sports options (inactive) options (active) map view (current) map view (not-current) Listen to Music IBM Plex Sans, s: 9, Medium Button 3 May Add 6:00 PM 6:00 PM S M T W T F S IBM Plex Sans, s: 14, Medium Body 1 Setup a date button (selected Setup a date button This is an example of a body text paragraph. -

The Black Arts Enterprise and the Production of African American Poetry

An electronic version of this book is freely available, thanks to the support of libraries working with Knowledge Unlatched. KU is a collaborative initiative designed to make high quality books Open Access for the public good. The Black Arts Enterprise and the Production of African American Poetry, Howard Rambsy II. The University of Michigan Press, Ann Arbor. First paperback edition 2013. Copyright © by the University of Michigan 2011. All rights reserved. Published in the United States of America by The University of Michigan Press. Manufactured in the United States of America. Printed on acid-free paper. 2016 2015 2014 2013 5 4 3 2. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, or otherwise, without the written permission of the publisher. A CIP catalog record for this book is available from the British Library. Library of Congress Cataloging-in-Publication Data: Rambsy, Howard. The black arts enterprise and the production of African American poetry / Howard Rambsy, II. p. cm. Includes bibliographical references and index. ISBN 978-0-472-11733-8 (cloth : acid-free paper) 1. American poetry—African American authors—History and criticism. 2. Poetry—Publishing—United States—History—20th century. 3. African Americans—Intellectual life—20th century. 4. African Americans in literature. I. Title. PS310.N4R35 2011 811'.509896073—dc22 2010043190 ISBN 978-0-472-03568-7 (pbk. : alk. paper) ISBN 978-0-472-12005-5 (e-book) Cover illustrations: photos of writers (1) Haki Madhubuti and (2) Askia M. Touré, Mari Evans, and Kalamu ya Salaam by Eugene B. Redmond; other images from Shutterstock.com: jazz player by Ian Tragen; African mask by Michael Wesemann; fist by Brad Collett. -

Dynamic and Interactive R Graphics for the Web: The gridSVG Package

JSS Journal of Statistical Software MMMMMM YYYY, Volume VV, Issue II. http://www.jstatsoft.org/ Dynamic and Interactive R Graphics for the Web: The gridSVG Package. Paul Murrell (The University of Auckland), Simon Potter (The University of Auckland). Abstract: This article describes the gridSVG package, which provides functions to convert grid-based R graphics to an SVG format. The package also provides a function to associate hyperlinks with components of a plot, a function to animate components of a plot, a function to associate any SVG attribute with a component of a plot, and a function to add JavaScript code to a plot. The last two of these provide a basis for adding interactivity to the SVG version of the plot. Together these tools provide a way to generate dynamic and interactive R graphics for use in web pages. Keywords: world-wide web, graphics, R, SVG. 1. Introduction: Interactive and dynamic plots within web pages are becoming increasingly popular, as part of a general trend towards making data sets more open and accessible on the web, for example, GapMinder (Rosling 2008) and ManyEyes (Viegas, Wattenberg, van Ham, Kriss, and McKeon 2007). The R language and environment for statistical computing and graphics (R Development Core Team 2011) has many facilities for producing plots, and it can produce graphics formats that are suitable for including in web pages, but the core graphics facilities in R are largely focused on static plots. This article describes an R extension package, gridSVG, that is designed to embellish and transform a standard, static R plot and turn it into a dynamic and interactive plot that can be embedded in a web page. -

Fira Code: Monospaced Font with Programming Ligatures

Fira Code: monospaced font with programming ligatures. Problem: Programmers use a lot of symbols, often encoded with several characters. For the human brain, sequences like ->, <= or := are single logical tokens, even if they take two or three characters on the screen. Your eye spends a non-zero amount of energy to scan, parse and join multiple characters into a single logical one. Ideally, all programming languages should be designed with full-fledged Unicode symbols for operators, but that’s not the case yet. Solution: Fira Code is an extension of the Fira Mono font containing a set of ligatures for common programming multi-character combinations. -

Katowice 2015

Price: 28 PLN (+ VAT). ISSN 0208-6336. ISBN 978-83-8012-387-8. Katowice 2015. No. 3469. Series editor (Językoznawstwo Polonistyczne [Polish Linguistics]): Bożena Witosz. Reviewer: Piotra Łobacz. Introduction: The International Phonetic Alphabet (hereafter: IPA) is a widely known transcription system used in various fields of speech research, yet it is very rarely used within Polish linguistics, not only in research on Polish but also on other Slavic languages. Moreover, with one exception (Żurawski, ed., 2011), there is no Polish publication containing the currently valid symbols of the international alphabet. The symbol sets published in the works of Wiktor Jassem (see the bibliography), in Słownik wymowy polskiej PWN (SWP, 1977) and Encyklopedia językoznawstwa ogólnego (EJO, 1999), as well as in many textbooks, became outdated after 1989. Indeed, even Język polski. Nauka o języku (Żurawski, ed., 2011) does not contain all the IPA symbols, nor the much-needed guidance on this alphabet (how to write the symbols and a description of their graphic structure). Describing the IPA is a very complicated task, because it is not enough to give the values of the symbols themselves (and there are a great many of them, more than 250); one must also explain how they are used (this is especially important for diacritics), how they are constructed (which in turn matters for atypical letters such as <ʒ ɟ>), the meaning of the English phonetic terms used to describe IPA symbols (they do not correspond unambiguously to the Polish terms), and the ways of constructing phonetic symbols in text editors (from computer typefaces, i.e. fonts, that contain IPA symbols to methods of positioning diacritical marks).
A monograph designed this way would, however, be impractical: it would contain an enormous amount of information that the average user of the phonetic alphabet would never use, and would certainly not study merely to insert a few phonetic symbols into a text. -

FONT GUIDE Workbook to Help You Find the Right One for Your Brand

FONT GUIDE: Workbook to help you find the right one for your brand. www.ottocreative.com.au Choosing the right font for your brand. YOUR BRAND VALUES: How different font styles can be used to make up your brand. Logo typeface: Is usually a bit more special and packed with your brand's personality. This font should be used sparingly and kept for special occasions. Headings font: This font will reflect the same brand values as your logo font, e.g. in this example both fonts are feminine and elegant. Unlike your logo typeface, this font should be easier to read and look good at a number of different sizes and thicknesses. Body font: The main rule here is that this font MUST be easy to read, both digitally and for print. If there is already a lot going on in your logo and heading font, keep this style simple. Typefaces, common associations & popular font styles. Sans Serif: Clean, modern, neutral. Try these: Roboto, Open Sans, Lato, Montserrat, Raleway. Serif: Classic, traditional, reliable. Try these: Playfair Display, Lora, Source Serif Pro, Prata, Gentium Basic. Slab Serif: Youthful, modern, approachable. Try these: Roboto Slab, Merriweather, Slabo 27px, Bitter, Arvo. Script: Feminine, romantic, elegant. Try these: Dancing Script, Pacifico, Satisfy, Courgette, Great Vibes. Monospace: Simple, technical, futuristic. Try these: Source Code Pro, Nanum Gothic Coding, Fira Mono, Cutive Mono. Handwritten: Authentic, casual, creative. Try these: Indie Flower, Shadows Into Light, Amatic SC, Caveat, Kalam. Display: Playful, fun, personality galore. Try these: Lobster, Abril Fatface, Luckiest Guy, Bangers, Monoton. NOTE: Be careful when using handwritten and display fonts, as they can be hard to read. -

New Features in mPDF v6.0

New Features in mPDF v6.0. Advanced Typography: Many TrueType fonts contain OpenType Layout (OTL) tables. These Advanced Typographic tables contain additional information that extends the capabilities of the fonts to support high-quality international typography: ● OTL fonts support ligatures, positional forms, alternates, and other substitutions. ● OTL fonts include information to support features for two-dimensional positioning and glyph attachment. ● OTL fonts contain explicit script and language information, so a text-processing application can adjust its behavior accordingly. mPDF 6 introduces the power and flexibility of the OpenType Layout font model into PDF. mPDF 6 supports GSUB, GPOS and GDEF tables for now. mPDF 6 does not support BASE and JSTF at present. Other mPDF 6 features to enhance complex scripts: ● improved Bidirectional (Bidi) algorithm for right-to-left (RTL) text ● support for Kashida for justification of Arabic scripts ● partial support for CSS3 optional font features, e.g. font-feature-settings, font-variant ● improved "autofont" capability to select fonts automatically for any script ● support for CSS :lang selector ● dictionary-based line-breaking for Lao, Thai and Khmer (U+200B is also supported) ● separate algorithm for Tibetan line-breaking. Note: There are other smart-font technologies around to deal with complex scripts, namely Graphite fonts (SIL International) and Apple Advanced Typography (AAT by Apple/Mac). mPDF 6 does not support these. What can OTL Fonts do?
Support for OTL fonts allows the faithful display of almost all complex scripts: ● Arabic (اﻟﺴﻼم ﻋﻠﻴﻢ), Hebrew (שלום), Syriac (ܐ) ● Indic - Bengali (ামািলকুম), Devanagari (नमते), Gujarati (નમતે), Punjabi (ਸਿਤ ਸੀ ਅਕਾਲ), Kannada (ನಮ), Malayalam (നമെ), Oriya (ନମସ୍କର), Tamil (வணக்கம்), Telugu (నమరం) ● Sinhala (ආයුෙඛා්වන්), Thai (สวัสดี), Lao (ສະບາຍດີ), Khmer (ជបសួរំ), Myanmar (မဂလပၝ), Tibetan (བ་ས་བ་གས།) Joining and Reordering: র + ◌্ + খ + ◌্ + ম + ◌্ + ক + ◌্ + ষ + ◌্ + র + ি◌ + ◌ু = িু cf. -

264 TUGboat, Volume 37 (2016), No. 3: Typographers' Inn, Peter Flynn

264 TUGboat, Volume 37 (2016), No. 3. Typographers’ Inn, Peter Flynn. Dashing it off: I recently put up a new version of Formatting Information (http://latex.silmaril.ie), and in the section on punctuation I described the difference between hyphens, en rules, em rules, and minus signs. In particular I explained how to type a spaced dash — like that, using ‘dash~---␣like’ to put a tie before the dash and a normal space afterwards, so that if the dash occurred near a line-break, it would never end up at the start of a line, only at the end. I somehow managed to imply that a spaced dash was preferable to an unspaced one (probably because it’s my personal preference, but certainly

XeLaTeX: Back at the ranch, we have been experimenting with XeLaTeX in our workflow, spurred on by two recent requests to use a specific set of OpenType fonts for some GNU/Linux documentation. XeLaTeX offers two major improvements on pdfLaTeX: the use of OpenType and TrueType fonts, and the handling of UTF-8 multibyte characters. Font packages: You can’t easily use the font packages you use with pdfLaTeX because the default font encoding is EU1 in the fontspec package which is key to using OTF/TTF fonts, rather than the T1 or OT1 conventionally used in pdfLaTeX. But late last year Herbert Voß kindly posted a list of the OTF/TTF fonts distributed with TeX Live which have packages of their own for use with XeLaTeX [6]. -

Dell™ GigaOS 6.5 Release Notes July 2015

Dell™ GigaOS 6.5 Release Notes, July 2015. These release notes provide information about the Dell™ GigaOS release. • About Dell GigaOS 6.5 • System requirements • Product licensing • Third-party contributions • About Dell. About Dell GigaOS 6.5: For complete product documentation, visit http://software.dell.com/support/. System requirements: Not applicable. Product licensing: Not applicable. Third-party contributions: Source code is available for this component on http://opensource.dell.com/releases/Dell_Software. Dell will ship the source code to this component for a modest fee in response to a request emailed to [email protected]. This product contains the following third-party components. For third-party license information, go to http://software.dell.com/legal/license-agreements.aspx. Source code for components marked with an asterisk (*) is available at http://opensource.dell.com.

Table 1. List of third-party contributions

| Component | License or acknowledgment |
| --- | --- |
| abyssinica-fonts 1.0 | SIL Open Font License 1.1 ©2003-2013 SIL International, all rights reserved |
| acl 2.2.49 | GPL (GNU General Public License) 2.0 |
| acpid 1.0.10 | GPL (GNU General Public License) 2.0 |
| alsa-lib 1.0.22 | GNU Lesser General Public License 2.1 |
| alsa-plugins 1.0.21 | GNU Lesser General Public License 2.1 |
| alsa-utils 1.0.22 | GNU Lesser General Public License 2.1 |
| at 3.1.10 | GPL (GNU General Public License) 2.0 |
| atk 1.30.0 | LGPL (GNU Lesser General Public License) 2.1 |
| attr 2.4.44 | GPL (GNU General Public License) 2.0 |
| audit 2.2 | GPL (GNU General Public License) 2.0 |
| authconfig 6.1.12 | GPL (GNU General Public License) 2.0 |
| avahi 0.6.25 | GNU Lesser General Public License 2.1 |
| b43-fwcutter 012 | GNU General Public License 2.0 |
| basesystem 10.0 | GPL (GNU General Public License) 3 |
| bash 4.1.2-15 | GPL (GNU General Public License) 3 |
| bc 1.06.95 | GPL (GNU General Public License) 2.0 |
| bind 9.8.2 | ISC 1995-2011. |

The fontspec Package: Font Selection for XeLaTeX and LuaLaTeX

The fontspec package: Font selection for XeLaTeX and LuaLaTeX. Will Robertson and Khaled Hosny, [email protected], 2013/05/12, v2.3b. Contents: 1 History. 2 Introduction: 2.1 About this manual; 2.2 Acknowledgements. 3 Package loading and options: 3.1 Maths fonts adjustments; 3.2 Configuration; 3.3 Warnings. Part I, General font selection. 4 Font selection: 4.1 By font name; 4.2 By file name. 5 Default font families. 6 New commands to select font families: 6.1 More control over font shape selection; 6.2 Math(s) fonts; 6.3 Miscellaneous font selecting details. 7 Selecting font features: 7.1 Default settings; 7.2 Changing the currently selected features; 7.5 Different features for different font sizes. 8 Font independent options: 8.1 Colour; 8.2 Scale; 8.3 Interword space; 8.4 Post-punctuation space; 8.5 The hyphenation character; 8.6 Optical font sizes. Part II, OpenType. 9 Introduction: 9.1 How to select font features. 10 Complete listing of OpenType font features: 10.1 Ligatures; 10.2 Letters; 10.3 Numbers; 10.4 Contextuals; 10.5 Vertical Position; 10.6 Fractions; 10.7 Stylistic Set variations; 10.8 Character Variants; 10.9 Alternates; 10.10 Style; 10.11 Diacritics; 10.12 Kerning; 10.13 Font transformations. -

CyberArts 2021: Since Its Inception in 1987, the Prix Ars Electronica Has Been Honoring Creativity and Innovativeness in the Use of Digital Media

CyberArts 2021: Prix Ars Electronica, S+T+ARTS Prize ’21. Documentation of the Prix Ars Electronica 2021: Lavishly illustrated and containing texts by the prize-winning artists and statements by the juries that singled them out for recognition, this catalog showcases the works honored by the Prix Ars Electronica 2021. The Prix Ars Electronica is the world’s most time-honored media arts competition. Winners are awarded the coveted Golden Nica statuette. Ever since its inception in 1987, the Prix Ars Electronica has been honoring creativity and innovativeness in the use of digital media. This year, experts from all over the world evaluated 3,158 submissions from 86 countries in four categories: Computer Animation, Artificial Intelligence & Life Art, Digital Musics & Sound Art, and the u19–create your world competition for young people. The volume also provides insights into the achievements of the winners of the Isao Tomita Special Prize and the Ars Electronica Award for Digital Humanity. ars.electronica.art/prix STARTS Prize ’21: STARTS (= Science + Technology + Arts) is an initiative of the European Commission to foster alliances of technology and artistic practice. As part of this initiative, the STARTS Prize awards the most pioneering collaborations and results in the field of creativity and innovation at the intersection of science and technology with the arts. The STARTS Prize ’21 of the European Commission was launched by Ars Electronica, BOZAR, Waag, INOVA+, T6 Ecosystems, French Tech Grande Provence, and the Frankfurt Book Fair. This Prize catalog presents the winners of the European Commission’s two Grand Prizes, which honor Innovation in Technology, Industry and Society stimulated by the Arts, and more of the STARTS Prize ’21 highlights. -

The Selnolig Package: Selective Suppression of Typographic Ligatures*

The selnolig package: Selective suppression of typographic ligatures. Mico Loretan, 2015/10/26. Abstract: The selnolig package suppresses typographic ligatures selectively, i.e., based on predefined search patterns. The search patterns focus on ligatures deemed inappropriate because they span morpheme boundaries. For example, the word shelfful, which is mentioned in the TeXbook as a word for which the ff ligature might be inappropriate, is automatically typeset as shelfful (with the ff ligature broken up) rather than as shelﬀul (with the ligature). For English and German language documents, the selnolig package provides extensive rules for the selective suppression of so-called “common” ligatures. These comprise the ff, fi, fl, ffi, and ffl ligatures as well as the ft and fft ligatures. Other f-ligatures, such as fb, fh, fj and fk, are suppressed globally, while making exceptions for names and words of non-English/German origin, such as Kafka and fjord. For English language documents, the package further provides ligature suppression rules for a number of so-called “discretionary” or “rare” ligatures, such as ct, st, and sp. The selnolig package requires use of the LuaLaTeX format provided by a recent TeX distribution, e.g., TeX Live 2013 and MiKTeX 2.9. Contents: 1 Introduction. 2 I’m in a hurry! How do I start using this package? 2.1 How do I load the selnolig package? 2.2 Any hints on how to get started with LuaLaTeX? 2.3 Anything else I need to do or know? 3 The selnolig package’s approach to breaking up ligatures. 3.1 Free, derivational, and inflectional morphemes