Deep Video Color Propagation 1

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Color Theory for Painting Video: Color Perception

• http://www.ted.com/talks/lang/eng/beau_lotto_optical_illusions_show_how_we_see.html • Experiment • http://www.youtube.com/watch?v=y8U0YPHxiFQ Intro to color theory • http://www.youtube.com/watch?v=059-0wrJpAU&feature=relmfu Color Theory Principles • The Color Wheel • Color context • Color Schemes • Color Applications and Effects The Color Wheel • A circular diagram displaying the spectrum of visible colors. The Color Wheel: Primary Colors • Primary Colors: Red, yellow and blue • In traditional color theory, primary colors cannot be mixed or formed by any combination of other colors. • All other colors are derived from these 3 hues. The Color Wheel: Secondary Colors • Secondary Colors: Green, orange and purple • These are the colors formed by mixing the primary colors. The Color Wheel: Tertiary Colors • Tertiary Colors: Yellow-orange, red-orange, red-purple, blue-purple, blue-green and yellow-green • These are the colors formed by mixing a primary and a secondary color. • They often have a two-word name, such as blue-green, red-violet, and yellow-orange. Color Context • How color behaves in relation to other colors and shapes is a complex area of color theory. Compare the contrast effects of different color backgrounds for the same red square. • Does your impression of the center square change based on the surround? Additive Colors • Additive: Mixing colored light Subtractive Colors • Subtractive: Mixing colored pigments Color Schemes • Formulas for creating visual unity [often called color harmony] using colors on the color wheel Basic Schemes • Analogous • Complementary • Triadic • Split complement Analogous • Color formula used to create color harmony through the selection of three related colors which are next to one another on the color wheel.

Studio Art 9: Value, Intensity, and Types of Color April 6–9 Time Allotment: 20 Minutes Per Day

Hello Great Hearts Northern Oaks 9th grade families! My name is Ms. Hoelscher and I will be one of the founding art teachers at Great Hearts Live Oak in August. I am excited to start my journey a little earlier with you here at Great Hearts Northern Oaks! I studied art and biopsychology at the University of Dallas, graduating in May 2019. Studying the liberal arts in a classical setting changed how I view the world and how I interact with it. My love of classical education and creating has guided me to Great Hearts. I look forward to sharing that passion and love with the students as we delve into Truth, Goodness, and Beauty. Please feel free to reach me at [email protected] if you have any questions or concerns. I will be having office hours starting this week using Zoom. My hours will be on Tuesday and Thursday. Period 4 will be 10:00AM-10:50AM and period 6 will be 1:00PM-1:50PM. I look forward to meeting you all!

Packet Overview (date, objective, page numbers)
Monday, April 6: Define and describe value and intensity of color (pages 2-4).
Tuesday, April 7: Compare and contrast local color and optical color (pages 5-6).
Wednesday, April 8: Compare and contrast arbitrary and exaggerated/heightened color (pages 7-8).
Thursday, April 9: Demonstrate Impressionistic use of color, value, and intensity (pages 9-10).
Friday, April 10: April Break, no class!

Additional Notes: Use a separate piece of paper, sketchbooks or the spaces provided in this packet to create your designs and images.

Marianne Times Column

Portrait of the Artist: Marianne F. Buckley Curran By Theresa Brown “Portrait of the Artist” is a bi-weekly series introducing Hull Artists to the community by asking each artist to answer 10 questions that will give you a glimpse into their world. Marianne Buckley-Curran’s connection with Hull Artists goes back to its early founding years and the seasonal “Studio at the Beach” in the old MDC garage in the late ‘90s. Like many other Hull Artists, some of her earliest memories are of creative activities and a connection to an artistic family member. In her case a grandfather, who was a Lighthouse Keeper, sign painter, and artist, taught Marianne how to properly use an oil paint brush as a small child. Although art making continued to be an important part of Marianne’s life, practicality and natural athleticism led her to pursue a college degree and career in Physical Education. While taking a break from teaching to raise her family, Marianne was able to link her knowledge and love of art to a part time entrepreneurial venture as an artist agent. She arranged exhibition of works by other artists in area businesses as well as organized and operated local art shows. (“My strategy was to learn the business of art while I was developing my own artistic vision.”) Always surrounded by the artistry of others, Buckley-Curran began to question why she wasn’t exhibiting her own work and started entering her paintings in juried shows. Success in these shows encouraged her to refocus on making art and to study with local painter John Kilroy.

Regionalism and Local Color Fiction in Nineteenth-Century U.S. Literature

Filología y Lingüística XXVII(2): 141-153, 2001 THE POLITICS OF PLACE: REGIONALISM AND LOCAL COLOR FICTION IN NINETEENTH-CENTURY U.S. LITERATURE Kari Meyers Skredsvig RESUMEN Este artículo es el primero de una serie dedicada a las relaciones entre autoría femenina y la noción de lugar/espacio en la literatura estadounidense. Se presenta un panorama general a fin de contextualizar el regionalismo y el localismo como movimientos literarios y como subgéneros literarios en el desarrollo de la literatura estadounidense del siglo diecinueve, mediante el análisis de los contextos históricos, sociales, políticos y literarios que inicialmente propiciaron estas dos tendencias literarias y posteriormente influyeron en su desaparición. También se examina el contenido cultural y aporte literario de estas etiquetas, así como la posibilidad de intercambiarlas. ABSTRACT This is the first in a series of articles dealing with the interrelationships of female authorship and space/place in U.S. literature. This article provides an overview for contextualizing regionalism and local color both as literary movements and as literary subgenres in the development of nineteenth-century U.S. literature by exploring the historical, social, political, and literary environments which initially propitiated and later influenced the demise of these two literary tendencies. It also examines the cultural and literary import of these labels, as well as their possible interchangeability. Place and space are components of human reality at its most fundamental level. In The Poetics of Space, Gaston Bachelard affirms that our home is "our first universe, a real cosmos in every sense of the word" (1994:4). We construct a personal identity not only for and within ourselves, but inevitably grounded in our context, at the same time that the environment constructs us.

Phototherapy: from Ancient Egypt to the New Millennium

Original Article. Phototherapy: From Ancient Egypt to the New Millennium. Antony F. McDonagh, PhD. Phototherapy with ultraviolet light was widely and successfully used in the past for treatment of a variety of diseases. Phototherapy with visible light alone has no benefit except in the therapy and prophylaxis of unconjugated hyperbilirubinemia. For this purpose, radiation in the region of 480 to 500 nm is most effective and radiation above 550 nm is useless. The principal effect of the treatment is not photodegradation of bilirubin, but conversion of the pigment to structural isomers that are more polar and more readily excreted than the normal, more toxic "dark" form of the pigment. This, coupled with some photooxidation of bilirubin, diminishes the overall pool of bilirubin in the body and lowers plasma levels. In the future, phototherapy may be supplanted by pharmacologic treatment, but in the near future, the most likely advance will be the introduction of novel forms of light production and delivery.

... induce significant phototherapeutic responses, heliotherapy was the only possible form of phototherapy up until the end of the 19th century. From the time of the Pharaohs, most ancient civilizations and cultures have worshipped the sun or sun gods and appear to have made some connection between sunlight and health.1,2 The use of sunbaths by the ancient Romans and Greeks for maintaining general health and for therapeutic purposes is particularly well documented. Whether connections between specific disorders and sunlight exposure were ever made by these ancient cultures is difficult to know, but certainly sunlight can be used successfully to treat many skin disorders.3 If nothing else, heliotherapy would have had an antirachitic and bactericidal action. In ancient China, what has been termed heliotherapy was one of the immortalizing techniques of early Daoism, introduced by Lingyan Tzu-Ming in the first century AD during the Han dynasty.4 One technique, described about four centuries later during the Tang

White Light & Shade

Match the colored strips on the left (1, 2, 3, 4) with the strip on the right (a, b, c, d) which is the same color seen in a room without any light. Answer: All four colors on the left would appear as "a", for without light there would be no color. LIGHT IS ADDITIVE Light may be white or colored. Light is additive. When two or more colored lights are mixed, a lighter mixture results. White light results from combining all three primary colors of light at high intensity. Light primaries are the secondary colors of pigment. A rainbow is white light refracted by rain drops. COLOR PERCEPTION We perceive color in objects because those objects have a pigment which absorbs some light rays and reflects those we see. A yellow ball, for example, is perceived as yellow because its pigmentation reflects that color. Darker colors will absorb more light, because they have more pigmentation with which to absorb light. PIGMENT IS SUBTRACTIVE Mixing the primary pigments in equal amounts produces black. Pigment is considered subtractive, for as more pigments are mixed together (excluding white), darker hues result. Black is the presence of all colors in pigment. LIGHT PRIMARIES: RED ORANGE, GREEN, BLUE/VIOLET. PIGMENT PRIMARIES: CYAN, MAGENTA, YELLOW. CREATING THE ILLUSION OF LIGHT AND SHADE In order to create the illusion of light falling across different colors and values, artists must recognize how light modifies color. When we say that an apple is red, we refer to its local color of redness. This local color red exists only in our minds, for this red will change with every change in lighting. Refer to the illustration below which shows what happens when a white light illuminates a portion
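The additive and subtractive rules in the excerpt above can be illustrated with a small sketch. It assumes idealized RGB triples in the range 0 to 1 and treats each pigment as a perfect filter whose reflectances multiply. Real paints do not behave this cleanly, and the function and constant names are hypothetical, chosen only for this illustration.

```python
# Idealized color mixing on RGB triples in [0, 1].
# Assumption: pigments act as perfect filters (reflectances multiply).

RED, GREEN, BLUE = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
CYAN, MAGENTA, YELLOW = (0.0, 1.0, 1.0), (1.0, 0.0, 1.0), (1.0, 1.0, 0.0)


def mix_light(*colors):
    """Additive mixing: channel intensities add up, clamped at full intensity."""
    return tuple(min(1.0, sum(c[i] for c in colors)) for i in range(3))


def mix_pigment(*colors):
    """Subtractive mixing: each pigment removes light, so reflectances multiply."""
    result = [1.0, 1.0, 1.0]  # start from white paper reflecting everything
    for color in colors:
        result = [r * v for r, v in zip(result, color)]
    return tuple(result)


if __name__ == "__main__":
    print(mix_light(RED, GREEN, BLUE))         # all three light primaries
    print(mix_pigment(CYAN, MAGENTA, YELLOW))  # all three pigment primaries
    print(mix_pigment(CYAN, MAGENTA))          # a pigment secondary
```

In this model the three light primaries sum to white, the three pigment primaries multiply to black, and cyan mixed with magenta leaves only blue, which matches the statement that the light primaries are the secondary colors of pigment.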


Greek Color Theory and the Four Elements [Full Text, Not Including Figures] J.L

University of Massachusetts Amherst ScholarWorks@UMass Amherst Greek Color Theory and the Four Elements Art July 2000 Greek Color Theory and the Four Elements [full text, not including figures] J.L. Benson University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/art_jbgc Benson, J.L., "Greek Color Theory and the Four Elements [full text, not including figures]" (2000). Greek Color Theory and the Four Elements. 1. Retrieved from https://scholarworks.umass.edu/art_jbgc/1 This Article is brought to you for free and open access by the Art at ScholarWorks@UMass Amherst. It has been accepted for inclusion in Greek Color Theory and the Four Elements by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. Cover design by Jeff Belizaire ABOUT THIS BOOK Why does earlier Greek painting (Archaic/Classical) seem so clear and—deceptively— simple while the latest painting (Hellenistic/Graeco-Roman) is so much more complex but also familiar to us? Is there a single, coherent explanation that will cover this remarkable range? What can we recover from ancient documents and practices that can objectively be called “Greek color theory”? Present day historians of ancient art consistently conceive of color in terms of triads: red, yellow, blue or, less often, red, green, blue. This habitude derives ultimately from the color wheel invented by J.W. Goethe some two centuries ago. So familiar and useful is his system that it is only natural to judge the color orientation of the Greeks on its basis. To do so, however, assumes, consciously or not, that the color understanding of our age is the definitive paradigm for that subject. -

203-02 Developing Local Color

JOURNEYMAN LESSON 203-02. Every effort was made to ensure the accuracy of the information contained in this lesson. © JANIE GILDOW Journeyman. WELCOME TO R.T. PENCILS ACADEMY! The curriculum is designed to give you a good solid understanding of all aspects of the colored pencil. The Journeyman Level lessons are offered for the more advanced student. For the complete list of class descriptions: http://www.janiegildow.com/html/catalog.html Skills and techniques are planned to build on one another in a sequential manner, so by starting with the lower numbered lessons and completing prerequisites, you'll continue in your comfort zone as you work your way to the higher level lessons. Each lesson contains information and illustrations to explain and clarify all aspects of the subject being covered. Each lesson contains exercises with step-by-step instructions for you to follow. And each lesson will provide you with line drawings, definitions, materials list, and color palette. Read through the lesson for a general overview and understanding, provide suitable paper, some supplemental materials, and the colored pencils listed for you in the Color Palette. The red barn photos in this lesson were graciously provided by my sister, Carolyn Christy, and my good friend (and former student), Bruce Hudkins. I owe them both a debt of gratitude, since I now live in Arizona, where red barns are as scarce as hen’s teeth! Thank you, Bruce and Carolyn! In this lesson, you’ll put your color mixing skills to work as you analyze color and value.

Interaction of Color Interaction of Color Handouts and Exercises Table of Contents

Josef Albers, Interaction of Color: Handouts and Exercises. Table of Contents. Interaction of Color, Phase I: Pre-course inventory; Albers quotes; Some color term definitions; Assignment list; Tips on approaching assignments; Critique guide; Find dad; Interaction of value; Value, hue, tinting, shading, toning; Halation: What is it? How can we create it? Transparency (Film, Veil, Volume Color & Atmosphere): Transparency illusions; Creating the illusion of film: Steps to consider; Creating the illusion of film: Some things to consider. Light: Light & color; Colored light; We see form, values and color because of light; Ideas on creating the illusion of light and shade; Light & shadows: Creating the illusion; Plotting shadows. Translucent

Color Terms (PDF)

Educational Material: Glossary of Color Terms

achromatic: Having no color or hue; without identifiable hue. Most blacks, whites, grays, and browns are achromatic.
advancing colors: Colors that appear to come towards you (warm colors).
analogous colors: Closely related hues, especially those in which we can see a common hue; hues that are neighbors on the color wheel, such as blue, blue-green, and green.
chroma: The purity or degree of saturation of a color; relative absence of white or gray in a color. See intensity.
color field painting: A movement that grew out of Abstract Expressionism, in which large stained or painted areas or “fields of color” evoke aesthetic and emotional responses.
color wheel: A circular arrangement of contiguous spectral hues used in some color systems. Also called a color circle.
complementary colors: Two hues directly opposite one another on a color wheel (for example, red and green, yellow and purple) which, when mixed together in proper proportions, produce a neutral gray. These color combinations create the strongest possible contrast of color, and when placed close together, intensify the appearance of the other. The true complement of a color can be seen in its afterimage.
cool colors: Colors whose relative visual temperatures make them seem cool. Cool colors generally include green, blue-green, blue, blue-violet, and violet. The quality of warmness or coolness is relative to adjacent hues. See also warm colors.
hue: The pure state of any color or a pure pigment that has not had white or black added to it.
intensity: The relative purity or saturation of a hue (color), on a scale from bright (pure) to dull (mixed with another hue or a neutral). Also called chroma.

Color Constancy Using Local Color Shifts

Color Constancy Using Local Color Shifts. Marc Ebner, Universität Würzburg, Lehrstuhl für Informatik II, Am Hubland, 97074 Würzburg, Germany, [email protected], http://www2.informatik.uni-wuerzburg.de/staff/ebner/welcome.html

Abstract. The human visual system is able to correctly determine the color of objects in view irrespective of the illuminant. This ability to compute color constant descriptors is known as color constancy. We have developed a parallel algorithm for color constancy. This algorithm is based on the computation of local space average color using a grid of processing elements. We have one processing element per image pixel. Each processing element has access to the data stored in neighboring elements. Local space average color is used to shift the color of the input pixel in the direction of the gray vector. The computations are executed inside the unit color cube. The color of the input pixel as well as local space average color is simply a vector inside this Euclidean space. We compute the component of local space average color which is orthogonal to the gray vector. This component is subtracted from the color of the input pixel to compute a color corrected image. Before performing the color correction step we can also normalize both colors. In this case, the resulting color is rescaled to the original intensity of the input color such that the image brightness remains unchanged.

1 Motivation

The human visual system is able to correctly determine the color of objects irrespective of the light which illuminates the objects. For instance, if we are in a room illuminated with yellow lights, we are nevertheless able to determine the correct color of the objects inside the room.
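The correction step described in the abstract above can be sketched in a few lines. This is only an illustration under stated assumptions, not the author's implementation. The local space average color is approximated here with a plain box average over a small window, whereas the paper computes it on a grid of processing elements. The optional normalization step is omitted, and results are clamped to the unit cube. All function names and the `radius` parameter are hypothetical.

```python
# Sketch of a local color shift on an image stored as nested lists of
# [r, g, b] values in [0, 1]. Assumptions: box-window average instead of
# the paper's grid of processing elements; no normalization step.


def local_space_average(image, radius=1):
    """Mean color of the window around each pixel, cropped at the borders."""
    height, width = len(image), len(image[0])
    averages = []
    for y in range(height):
        row = []
        for x in range(width):
            sums, count = [0.0, 0.0, 0.0], 0
            for yy in range(max(0, y - radius), min(height, y + radius + 1)):
                for xx in range(max(0, x - radius), min(width, x + radius + 1)):
                    for c in range(3):
                        sums[c] += image[yy][xx][c]
                    count += 1
            row.append([s / count for s in sums])
        averages.append(row)
    return averages


def shift_toward_gray(color, average):
    """Subtract the part of `average` that is orthogonal to the gray vector.

    Projecting onto the gray direction (1, 1, 1) gives a vector whose three
    components all equal the channel mean, so the orthogonal part is
    `average` minus that mean.
    """
    mean = sum(average) / 3.0
    orthogonal = [a - mean for a in average]
    # Clamp so the result stays inside the unit color cube (an assumption).
    return [min(1.0, max(0.0, c - o)) for c, o in zip(color, orthogonal)]


def color_correct(image, radius=1):
    """Apply the local shift to every pixel of the image."""
    averages = local_space_average(image, radius)
    return [
        [shift_toward_gray(image[y][x], averages[y][x]) for x in range(len(image[0]))]
        for y in range(len(image))
    ]
```

With these definitions, a uniformly yellowish image such as a 3 by 3 grid of `[0.8, 0.8, 0.4]` comes out as neutral gray, since the whole color cast lies in the component orthogonal to the gray vector.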

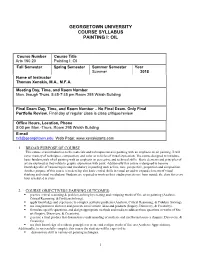

Georgetown University Course Syllabus Painting I: Oil

GEORGETOWN UNIVERSITY COURSE SYLLABUS PAINTING I: OIL. Course Number: Arts 150 20. Course Title: Painting I: Oil. Semester and Year: Summer 2018. Name of Instructor: Thomas Xenakis, M.A., M.F.A. Meeting Day, Time, and Room Number: Mon. through Thurs. 5:45-7:45 pm, Room 295 Walsh Building. Final Exam Day, Time, and Room Number: No Final Exam, only a Final Portfolio Review. The final day of regular class is the class critique/review. Office Hours, Location, Phone: 5:00 pm Mon.-Thurs., Room 295 Walsh Building. E-mail: [email protected] Web Page: www.xenakisarts.com 1. BROAD PURPOSE OF COURSE This course is an introduction to the materials and techniques used in painting with an emphasis on oil painting. It will cover mastery of techniques, composition, and color as vehicles of visual expression. The course is designed to introduce the basic fundamentals of oil painting with an emphasis on perceptive and technical skills. Basic elements and principles of art are explored as they relate to graphic expression with paint. Additionally, this course is designed to make students knowledgeable about various topics and vocabulary in painting such as line, tone, perspective, proportion and composition. Another purpose of this course is to develop basic critical skills in visual art and to expand elements of visual thinking and visual vocabulary. Students are required to work on their studio projects one hour outside the class for every hour scheduled in class. 2. COURSE OBJECTIVES/LEARNING OUTCOMES: • Practice critical reasoning and problem-solving by creating and critiquing works of fine art in painting (Analysis, Critical Reasoning, & Problem-Solving).