How Familiarity with Different Domains Influences Cognitive Reflection

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Table of Contents for Strategic Planning Managers Implementation Tools Handbook

STRATEGIC PLANNING HANDBOOK AND MANAGERS IMPLEMENTATION TOOLS SOUTHERN UNIVERSITY AT NEW ORLEANS Academic Years 2006-2011 Strategic Implementation Plan Academic Years 2006 to 2011 Southern University at New Orleans Revised December 2, 2010 FOREWORD The State of Louisiana requires higher education institutions to provide a strategic plan for intended operations. Southern University at New Orleans (SUNO) has prepared and implemented strategic initiatives for academic years 2006 to 2011. The SUNO strategic plan includes the Louisiana Board of Regents and the Southern University System strategic goals for higher education institutions including student access and success, academic and operational quality and accountability, and service and research. These goals are complemented by an institutional vision of community linkages, academic excellence, illiteracy and poverty reduction, transparency, and technological excellence. These SUNO strategic directions are paramount for higher education eminence and for rebuilding our community and the Gulf Coast after the Hurricanes of 2005. The strategic plan was facilitated by a strategic planning committee made up of representatives of every campus unit and was developed with input from the entire University family including community representatives. The implementation of the strategic plan will ensure that SUNO will continue to provide quality education, service to our communities, and contributions to the economic development of the State of Louisiana. Victor Ukpolo Chancellor Southern University at New Orleans TABLE OF CONTENTS CHANCELLOR’S LETTER; UNIVERSITY STRATEGIC PLANNING COMMITTEE; I. STRATEGIC PLANNING PROCESS BACKGROUND INFORMATION; A. A Definition of Strategic Planning; B. Purpose of Strategic Planning; C. Steps in a Strategic Planning Process (Example 1) -

Strategic Planning and Management Guidelines for Transportation Agencies

NATIONAL COOPERATIVE HIGHWAY RESEARCH PROGRAM REPORT 331 STRATEGIC PLANNING AND MANAGEMENT GUIDELINES FOR TRANSPORTATION AGENCIES TRANSPORTATION RESEARCH BOARD NATIONAL RESEARCH COUNCIL TRANSPORTATION RESEARCH BOARD EXECUTIVE COMMITTEE 1990 OFFICERS Chairman: Wayne Muri, Chief Engineer, Missouri Highway & Transportation Department Vice Chairman: C. Michael Walton, Bess Harris Jones Centennial Professor and Chairman, College of Engineering, The University of Texas at Austin Executive Director: Thomas B. Deen, Transportation Research Board MEMBERS JAMES B. BUSEY IV, Federal Aviation Administrator, U.S. Department of Transportation (ex officio) GILBERT E. CARMICHAEL, Federal Railroad Administrator, U.S. Department of Transportation (ex officio) BRIAN W. CLYMER, Urban Mass Transportation Administrator, U.S. Department of Transportation (ex officio) JERRY R. CURRY, National Highway Traffic Safety Administrator, U.S. Department of Transportation (ex officio) FRANCIS B. FRANCOIS, Executive Director, American Association of State Highway and Transportation Officials (ex officio) JOHN GRAY, President, National Asphalt Pavement Association (ex officio) THOMAS H. HANNA, President and Chief Executive Officer, Motor Vehicle Manufacturers Association of the United States, Inc. (ex officio) HENRY J. HATCH, Chief of Engineers and Commander, U.S. Army Corps of Engineers (ex officio) THOMAS D. LARSON, Federal Highway Administrator, U.S. Department of Transportation (ex officio) GEORGE H. WAY, JR., Vice President for Research and Test Departments, Association -

Military Planning Process

Module 3: Operational Framework, Lesson 1: Military Planning Process. Why is this important for me? • All military staff officers in the FHQ of a UN mission need to understand the UN Military Planning Process for peacekeeping operations in order to fully participate in the process • Successful military operations rely on commanders and staff understanding and employing a common and comprehensive planning and decision-making process. Learning Outcomes: • Identify the phases of the Military Planning Process • Explain the basic methods to analyze the Operational Environment (OE) • Undertake the Analysis of the OE • Develop Courses Of Action (COA) • Evaluate different COAs. Lesson Content: Overview of the Military Planning Process; Analysis of the Operational Environment; Mission Analysis; Course of Action Development. Definition of the Military Planning Process: A methodical process that relies on joint efforts of commanders and staff to seek optimal solutions and to make decisions to achieve an objective in a dynamic environment. Principles of Planning: • Comprehensive • Efficient • Inclusive • Informative • Integrated (with long term goals) • Logical • Transparent. Themes of Planning: • Identify problems and objectives • Gather information • Generate options to achieve those goals • Decide on the way ahead and then execute it – Who, What, Where, When, How, Why? Likely Consequences of Hasty or Incomplete Planning: • Inefficient use of resources • Potential loss of life • Ultimately mission failure. Successful military operations rely on commanders and staff understanding and employing a common and comprehensive process. Phases of Military Planning Process in UN Peacekeeping: 1. Analysis of the Operational Environment. 2. Mission Analysis. 3. Course of Action Development. 4. Course of Action Analysis and Decision. -

Strategic Planning Basics for Managers

STRATEGIC PLANNING Guide for Managers 1 Strategic Planning Basics for Managers In all UN offices, departments and missions, it is critical that managers utilize the most effective approach toward developing a strategy for their existing programmes and when creating new programmes. Managers use the strategy to communicate the direction to staff members and guide the larger department or office work. Here you will find practical techniques based on global management best practices. Strategic planning defined Strategic planning is a process of looking into the future and identifying trends and issues against which to align organizational priorities of the Department or Office. Within the Departments and Offices, it means aligning a division, section, unit or team to a higher-level strategy. In the UN, strategy is often about achieving a goal in the most effective and efficient manner possible. For a few UN offices (and many organizations outside the UN), strategy is about achieving a mission comparatively better than another organization (i.e. competition). For everyone, strategic planning is about understanding the challenges, trends and issues; understanding who are the key beneficiaries or clients and what they need; and determining the most effective and efficient way possible to achieve the mandate. A good strategy drives focus, accountability, and results. How and where to apply strategic planning UN departments, offices, missions and programmes develop strategic plans to guide the delivery of an overall mandate and direct multiple streams of work. Sub-entities create compatible strategies depending on their size and operational focus. Smaller teams within a department/office or mission may not need to create strategies; there are, however, situations in which small and medium teams may need to think strategically, in which case the following best practices can help structure the thinking. -

Section 4 How to Plan Communication Strategically

SECTION 4 How to plan communication strategically “Failing to plan is planning to fail” What is in this section? Biodiversity conservation depends on the actions of many people and organisations. CEPA is a means to gain people’s support and assistance. CEPA fails when the activities are not properly planned and prepared. Planning and preparation for CEPA identifies pitfalls, ensures efficient use of resources and maximises effect. Section 1 dealt with importance of CEPA to achieve biodiversity objectives. It explained the role of CEPA in NBSAP formulation, updating and implementing. Section 2 provides CEPA tools to make use of networks. Much can be done with networks and networking to implement the NBSAP with limited resources. Section 3 provides CEPA tools to involve stakeholders in implementing the NBSAP. Section 4 provides tools to strategically plan communication, assisting to develop a communication plan step by step. In all sections the toolkit is comprised of: CEPA Fact Sheet: Providing theory and practice pointers on how and why to use CEPA; Example: Providing a small case study of how CEPA has been used to illustrate the fact sheets; Checklist: Providing a handy reference list to check your CEPA planning against. Contents: Introduction: Why plan communication strategically?; Fact Sheet: What is strategic communication?; Example: Communication Plan for a Biodiversity Day Campaign; Checklist: communication planning; Step 1: Analysis of the issue and role of communication; Fact Sheet: How to analyse the problem; Fact Sheet: How to identify the role of communication. -

INTRODUCTION to the COMPREHENSIVE PLAN Alan M

INTRODUCTION TO THE COMPREHENSIVE PLAN Alan M. Efrussy, AICP "Planning is the triumph of logic over dumb luck" -Anonymous as quoted by David L. Pugh, AICP "The best offense is a good defense" -Anonymous as quoted by Alan M. Efrussy, AICP The purpose of this chapter is to describe the importance, purposes and elements of the comprehensive plan. This discussion represents the author's perspective and recognizes that there are a number of ways to prepare a comprehensive plan and that different elements may be included in plans, reflecting the particular orientation or emphasis of the community. What is important is that a community has a comprehensive plan. This author and many cities’ planning commission members in Texas are indebted to the authors of chapters regarding the comprehensive plan published by the Educational Foundation, Inc. of the Texas Chapter of the American Planning Association, as part of earlier editions of the Guide to Urban Planning in Texas Communities. The earlier authors were Robert L. Lehr, AICP, planner and former Professor of Urban and Regional Planning at the University of Oklahoma, and Robert L. Wegner, Sr., AICP, Professor, School of Urban and Public Affairs, at the University of Texas at Arlington. Definition of a Comprehensive Plan A comprehensive plan can be defined as a long-range plan intended to direct the growth and physical development of a community for a 20 to 30 year or longer period. Ideally, and if feasible, it is appropriate to try to prepare a comprehensive plan for the ultimate development of a community. This will allow for ultimate utility, transportation, and community facilities planning, and therefore can aid in a more time and cost-effective planning and budgeting program. -

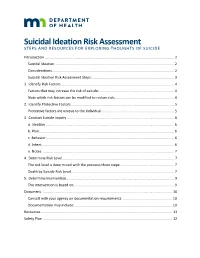

Suicidal Ideation Risk Assessment STEPS and RESOURCES for EXPLORING THOUGHTS of SUICIDE Introduction

Suicidal Ideation Risk Assessment STEPS AND RESOURCES FOR EXPLORING THOUGHTS OF SUICIDE Introduction; Suicidal Ideation; Considerations; Suicidal Ideation Risk Assessment Steps; 1. Identify Risk Factors; Factors that may increase the risk of suicide; Note which risk factors can be modified to reduce risk; 2. Identify Protective Factors; Protective factors are unique to the individual; 3. Conduct Suicide Inquiry; a. Ideation -

Dwight D. Eisenhower National Fish Hatchery Draft Recreational Fishing Plan March 2020

U.S. Fish & Wildlife Service Dwight D. Eisenhower National Fish Hatchery Draft Recreational Fishing Plan March 2020 Dwight D. Eisenhower National Fish Hatchery Draft Recreational Fishing Plan March 2020 United States Department of the Interior Fish and Wildlife Service Dwight D. Eisenhower National Fish Hatchery 4 Holden Road North Chittenden, VT 05763 Submitted: Project Leader Date Concurrence: Complex Manager Date Approved: Assistant Regional Director, Fish and Aquatic Conservation Date Dwight D. Eisenhower National Fish Hatchery Draft Recreational Fishing Plan 2 Recreational Fishing Table of Contents I. Introduction 4 II. Statement of Objectives 5 III. Description of Fishing Program 5 A. Areas to be Opened to Fishing 5 B. Species to be Taken, Fishing Seasons, Fishing Access 6 C. Fishing Permit Requirements 6 D. Consultation and Coordination with the State 6 E. Law Enforcement 7 F. Funding and Staff Requirements 7 IV. Conduct of the Fishing Program 7 A. Angler Permit Application, Selection, and/or Registration Procedures 7 B. Hatchery-Specific Fishing Regulations 7 C. Relevant State Regulations 7 D. Other Hatchery Rules and Regulations for Fishing 8 V. Public Engagement 8 A. Outreach for Announcing and Publicizing the Fishing Program 8 B. Anticipated Public Reaction to the Fishing Program 8 C. How Anglers Will Be Informed of Relevant Rules and Regulations 8 VI. Compatibility Determination 8 VII. References 9 VIII. Figures 10 Environmental Assessment 11 Dwight D. Eisenhower National Fish Hatchery Draft Recreational Fishing Plan 3 I. Introduction Dwight D. Eisenhower (DDE) National Fish Hatchery (NFH) is part of the U.S. Fish and Wildlife Service’s (Service) Fish and Aquatic Conservation (FAC) program. -

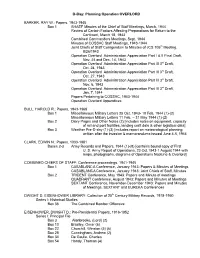

D-Day: Planning Operation OVERLORD

D-Day: Planning Operation OVERLORD BARKER, RAY W.: Papers, 1943-1945 Box 1 SHAEF Minutes of the Chief of Staff Meetings, March, 1944 Review of Certain Factors Affecting Preparations for Return to the Continent, March 18, 1943 Combined Commanders Meetings, Sept. 1944 Minutes of COSSAC Staff Meetings, 1943-1944 Joint Chiefs of Staff Corrigendum to Minutes of JCS 105th Meeting, 8/26/1943 Operation Overlord Administration Appreciation Part I & II Final Draft, Nov. 24 and Dec. 14, 1943 Operation Overlord Administration Appreciation Part III 3rd Draft, Oct. 24, 1943 Operation Overlord Administration Appreciation Part III 3rd Draft, Oct. 27, 1943 Operation Overlord Administration Appreciation Part III 3rd Draft, Nov. 6, 1943 Operation Overlord Administration Appreciation Part III 3rd Draft, Jan. 7, 1944 Papers Pertaining to COSSAC, 1943-1944 Operation Overlord Appendices BULL, HAROLD R.: Papers, 1943-1968 Box 1 Miscellaneous Military Letters 25 Oct. 1943 - 10 Feb. 1944 (1)-(2) Miscellaneous Military Letters 11 Feb. – 31 May 1944 (1)-(2) Box 2 Diary Pages and Other Notes (2) [includes notes on equipment, capacity of rail and port facilities, landing craft data & other logistical data] Box 3 Weather Pre-D-day (1)-(3) [includes report on meteorological planning written after the invasion & memorandums issued June 4-5, 1944] CLARK, EDWIN N.: Papers, 1933-1981 Boxes 2-3 Army Records and Papers, 1944 (1)-(8) [contains bound copy of First U.S. Army Report of Operations, 23 Oct. 1943 - 1 August 1944 with maps, photographs, diagrams of Operations Neptune & Overlord] COMBINED CHIEFS OF STAFF: Conference proceedings, 1941-1945 Box 1 CASABLANCA Conference, January 1943: Papers & Minutes of Meetings CASABLANCA Conference, January 1943: Joint Chiefs of Staff, Minutes Box 2 TRIDENT Conference, May 1943: Papers and Minutes of Meetings QUADRANT Conference, August 1943: Papers and Minutes of Meetings SEXTANT Conference, November-December 1943: Papers and Minutes of Meetings, SEXTANT and EUREKA Conferences DWIGHT D. -

Marathon County Strategic Plan 2018–2022 MARATHON COUNTY

Marathon County Strategic Plan 2018–2022 MARATHON COUNTY WISCONSIN MARATHON COUNTY BOARD Kurt Gibbs, County Board Chair Lee Peek, County Board Vice-Chair OFFICE OF COUNTY ADMINISTRATION Brad Karger, County Administrator Lance Leonhard, Deputy Administrator 2018–2022 STRATEGIC PLANNING TEAM Brad Karger, County Administrator Lance Leonhard, Deputy Administrator Kurt Gibbs, County Board Chair Lee Peek, County Board Vice-Chair Rebecca Frisch, Conservation, Planning & Zoning Director Joan Theurer, Health Officer Jeffrey M. Pritchard, Planning Analyst Amanda Ostrowski, Public Health Educator Ben Krombholz, IT Technician Jonathan Schmunk, Administrative Coordinator STRATEGIC PLAN FACILITATORS Eric Giordano, Wisconsin Institute of Public Policy and Service Amanda Ostrowski, Marathon County Health Department Information about the 2018–2022 Strategic Plan is available online at www.co.marathon.wi.us/StrategicPlan. You also may contact the Marathon County Conservation, Planning & Zoning Department at 715-261-6000 from 8:00 a.m. to 4:30 p.m. Monday–Friday, or visit the office at 210 River Drive, Wausau, WI 54403. CONTENTS • FOREWORD • STRATEGIC PLAN FRAMEWORK (Figure 1) • INTRODUCTION • Marathon County’s Overarching Goal • 2016 Comprehensive Plan Topics (Figure 2) • History -

Preparing a Forest Plan

Foundations of Forest Planning Volume 1 (Version 3.1) Preparing a Forest Plan USDA Forest Service October 2008 Preparing a Forest Plan This is a concept book and a work in progress. This document provides a tool for forest planning. The ideas contained in this document are based on the experiences of folks who have created forest plans under the National Forest Management Act (NFMA). It reflects what has worked in the past and may work well in the future. In this document, “Forest” also refers to National Grasslands and Prairies. For more information contact: Ecosystem Management Coordination Staff Forest Service U.S. Department of Agriculture 1400 Independence Avenue SW Mailstop 1104 Washington, DC 20250-1104 202-205-0895 http://www.fs.fed.us/emc/nfma/index.htm Contents: Forest Planning; Stages of Planning; Why Three Parts?; How is a Forest Plan Used?; A Business Model for Planning; Part One—Vision; A Description of Forest Roles and -

The 8-Step Communication Planning Model

Communication Planning for Sustainability and The 8-Step Communication Planning Model Prepared by the Communication & Social Marketing Center http://www.sshs.samhsa.gov/communications • [email protected] • 800–790–2647 Introduction No matter where you are in your grant cycle, creating and relying on a communication plan developed specifically for your grant site in collaboration with your partners and stakeholders can be a critical component of your initiative’s long-term success. A well-considered plan can provide a strategic roadmap for your communication activities. This can be particularly true for Safe Schools/Healthy Students (SS/HS) initiatives embarking on a plan for sustainability. Creating a communication plan for sustainability presents you and your partners with an opportunity to recommit as a team to sustaining success. Your plan will help uncover ways to deepen existing partnerships and develop new ones that hold the potential to help sustain—or even expand—critical functions of your initiative. Your plan will allow you to make the most of your coalition’s limited time and resources. Having a plan in place can help alleviate the stress many grantees feel near the end of Federal funding—stress that’s often accompanied by the question, “What on earth do we do now?” Maybe you and your partners created a communication plan early in your grant cycle. We certainly hope so! If you did, now is the perfect time to revisit that process, since you’ll be reaching out to new and different audiences to tell your story and generate support for the future. Or, perhaps you and your partners have been communicating here and there to promote your initiative—a press release submitted to your local paper announcing your grant award way back when, a brochure that describes your programs and services, a Web site that’s updated every once in a while.