On SZK and PP

Recommended publications

Information Theory Methods in Communication Complexity

Information Theory Methods in Communication Complexity, by Nikolaos Leonardos. A dissertation submitted to the Graduate School—New Brunswick, Rutgers, The State University of New Jersey, in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Graduate Program in Computer Science. Written under the direction of Michael Saks. New Brunswick, New Jersey, January 2012. Abstract of the dissertation. Dissertation Director: Michael Saks. This dissertation is concerned with the application of notions and methods from the field of information theory to the field of communication complexity. It consists of two main parts. In the first part of the dissertation, we prove lower bounds on the randomized two-party communication complexity of functions that arise from read-once boolean formulae. A read-once boolean formula is a formula in propositional logic with the property that every variable appears exactly once. Such a formula can be represented by a tree, where the leaves correspond to variables, and the internal nodes are labeled by binary connectives. Under certain assumptions, this representation is unique. Thus, one can define the depth of a formula as the depth of the tree that represents it. The complexity of the evaluation of general read-once formulae has attracted interest mainly in the decision tree model. In the communication complexity model many interesting results deal with specific read-once formulae, such as disjointness and tribes. In this dissertation we use information theory methods to prove lower bounds that hold for any read-once formula.

A Revisionist History of Algorithmic Game Theory

A Revisionist History of Algorithmic Game Theory. Moshe Y. Vardi, Rice University. Theoretical Computer Science: Vols. A and B. van Leeuwen, 1990: Handbook of Theoretical Computer Science. Volume A: algorithms and complexity; Volume B: formal models and semantics (“logic”). E.W. Dijkstra, EWD Note 611: “On the fact that the Atlantic Ocean has two sides”. North-American TCS (FOCS & STOC): Volume A; European TCS (ICALP): Volumes A & B. A Key Theme in FOCS/STOC: Algorithmic Game Theory, algorithm design for strategic environments. Birth of AGT: The “Official” Version. NEW YORK, May 16, 2012 – ACM’s Special Interest Group on Algorithms and Computation Theory (SIGACT) together with the European Association for Theoretical Computer Science (EATCS) will recognize three groups of researchers for their contributions to understanding how selfish behavior by users and service providers impacts the behavior of the Internet and other complex computational systems. The papers were presented by Elias Koutsoupias and Christos Papadimitriou, Tim Roughgarden and Eva Tardos, and Noam Nisan and Amir Ronen. They will receive the 2012 Gödel Prize, sponsored jointly by SIGACT and EATCS for outstanding papers in theoretical computer science at the International Colloquium on Automata, Languages and Programming (ICALP), July 9–13, in Warwick, UK. Three seminal papers. Koutsoupias & Papadimitriou, STACS 1999: Worst-case Equilibria – introduced the “price of anarchy” concept, a measure of the extent to which competition approximates cooperation, quantifying how much utility is lost due to selfish behaviors on the Internet, which operates without a system designer or monitor striving to achieve the “social optimum.” Roughgarden & Tardos, FOCS 2000: How Bad is Selfish Routing? – studied the power and depth of the “price of anarchy” concept as it applies to routing traffic in large-scale communications networks to optimize the performance of a congested network.

On the Utility of Fine-Grained Complexity Theory

On The Utility of Fine-Grained Complexity Theory. Manuel Sabin. Electrical Engineering and Computer Sciences, University of California at Berkeley. Technical Report No. UCB/EECS-2020-165, http://www2.eecs.berkeley.edu/Pubs/TechRpts/2020/EECS-2020-165.html, August 14, 2020. Copyright © 2020, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. On The Utility of Fine-Grained Complexity Theory, by Manuel Sabin (they/them). A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor Shafi Goldwasser, Chair; Associate Professor Prasad Raghavendra; Assistant Professor Nikhil Srivastava. Summer 2020. Abstract. The nascent field of Fine-Grained Complexity Theory has emerged and grown rapidly in the past decade. By studying “Hardness within P” and the connections of problems computable in, say, n^2 time versus n^3 time, this field addresses the practical efficiency of problems.

A Satisfiability Algorithm for AC0, by Russell Impagliazzo, William Matthews and Ramamohan Paturi

A Satisfiability Algorithm for AC0. Russell Impagliazzo, William Matthews, Ramamohan Paturi. Department of Computer Science and Engineering, University of California, San Diego, La Jolla, CA 92093-0404, USA. January 2011.

Abstract. We consider the problem of efficiently enumerating the satisfying assignments to AC0 circuits. We give a zero-error randomized algorithm which takes an AC0 circuit as input and constructs a set of restrictions which partitions $\{0,1\}^n$ so that under each restriction the value of the circuit is constant. Let $d$ denote the depth of the circuit and $cn$ denote the number of gates. This algorithm runs in time $|C|\,2^{n(1-\mu_{c,d})}$, where $|C|$ is the size of the circuit, for $\mu_{c,d} \ge 1/O[\lg c + d \lg d]^{d-1}$, with probability at least $1 - 2^{-n}$. As a result, we get improved exponential time algorithms for AC0 circuit satisfiability and for counting solutions.

[Fragment from the introduction:] ...ing the size of the search space. In contrast, reducing problems such as planning or model-checking to the special cases of cnf Satisfiability or 3-sat can increase the number of variables dramatically. On the other hand, cnf-sat algorithms (especially for small width clauses such as k-cnfs) have both been analyzed theoretically and implemented empirically with astounding success, even when this overhead is taken into account. For some domains of structured cnf formulas that arise in many applications, state-of-the-art SAT-solvers can handle up to hundreds of thousands of variables and millions of clauses [11]. Meanwhile, very few results are known either theoretically or empirically about the difficulty of

From Online Algorithms to Search Games and Interruptible Systems Spyros Angelopoulos

Computation under Uncertainty: From Online Algorithms to Search Games and Interruptible Systems. Spyros Angelopoulos. To cite this version: Spyros Angelopoulos. Computation under Uncertainty: From Online Algorithms to Search Games and Interruptible Systems. Data Structures and Algorithms [cs.DS]. Sorbonne University, 2020. tel-03094902. HAL Id: tel-03094902, https://hal.archives-ouvertes.fr/tel-03094902. Submitted on 15 Jan 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Habilitation Thesis. Defended on: December 14, 2020. Committee members: Steve Alpern, Warwick Business School; Nikhil Bansal, TU Eindhoven; Christoph Dürr, CNRS and Sorbonne Université; Pierre Fraigniaud, CNRS and Université de Paris; Lisa Hellerstein, New York University; Patrick Jaillet, MIT; Claire Mathieu, CNRS and Université de Paris. Laboratoire d’Informatique de Paris 6, Sorbonne Université, France. Computation under uncertainty: From Online Problems to Search Games and Interruptible Systems. Spyros Angelopoulos. Abstract. This Habilitation thesis explores aspects of computation in a status of uncertainty. First, we study online problems, in which the input is not known in advance, and the algorithm must make decisions for each input item without knowledge of future input items.

EATCS General Assembly 2013

EATCS General Assembly 2013. Luca Aceto, School of Computer Science, Reykjavik University. 10 July 2013. Agenda for the general assembly: 1. Reports on ICALP 2013; 2. Award of the best student papers; 3. Report on the organization of ICALP 2014 (Philip Bille); 4. Proposal for ICALP 2015 for approval by the General Assembly (Kazuo Iwama); 5. Orna Kupferman, The Gender Challenge at TCS; 6. Report from the president; 7. Any other business. In memoriam: Kohei Honda; Philippe Darondeau (ICALP 2012 invited speaker). Reports on ICALP 2013: 1. Organizing committee (Agnis Skuskovniks); 2. Marta Kwiatkowska on behalf of the PC chairs. Festive occasion: This is the 40th ICALP! Award of the best student papers. Best student paper for Track A: Radu Curticapean, Counting matchings of size k is #W[1]-hard. Best student paper for Track B: Nicolas Basset, A maximal entropy stochastic process for a timed automaton. There was no eligible accepted paper for Track C this year. Upcoming ICALP conferences. Report on the organization of ICALP 2014 in Copenhagen (Philip Bille). Novelty: Four-day ICALP! PC chairs: Track A: Elias Koutsoupias (Oxford, UK); Track B: Javier Esparza (TU Munich, Germany); Track C: Pierre Fraigniaud (Paris Diderot, FR). Invited speakers: Sanjeev Arora (Princeton University, USA), Maurice Herlihy (Brown University, USA), Viktor Kuncak (EPFL, CH) and Claire Mathieu (ENS Paris, France). Proposal for ICALP 2015 in Kyoto for approval by the General Assembly (Kazuo Iwama). Co-located with LICS 2015. First ICALP ever outside Europe!

SIGACT Viability

SIGACT Name and Mission: SIGACT: SIGACT Algorithms & Computation Theory. The primary mission of ACM SIGACT (Association for Computing Machinery Special Interest Group on Algorithms and Computation Theory) is to foster and promote the discovery and dissemination of high quality research in the domain of theoretical computer science. The field of theoretical computer science is interpreted broadly so as to include algorithms, data structures, complexity theory, distributed computation, parallel computation, VLSI, machine learning, computational biology, computational geometry, information theory, cryptography, quantum computation, computational number theory and algebra, program semantics and verification, automata theory, and the study of randomness. Work in this field is often distinguished by its emphasis on mathematical technique and rigor.

Newsletters:

| Year | Volume | Issue | Issue Date | Number of Pages | Actually Mailed |
|------|--------|-------|----------------|-----------------|-----------------|
| 2014 | 45 | 01 | March 2014 | N/A | |
| 2013 | 44 | 04 | December 2013 | 104 | 27-Dec-13 |
| 2013 | 44 | 03 | September 2013 | 96 | 30-Sep-13 |
| 2013 | 44 | 02 | June 2013 | 148 | 13-Jun-13 |
| 2013 | 44 | 01 | March 2013 | 116 | 18-Mar-13 |
| 2012 | 43 | 04 | December 2012 | 140 | 29-Jan-13 |
| 2012 | 43 | 03 | September 2012 | 120 | 06-Sep-12 |
| 2012 | 43 | 02 | June 2012 | 144 | 25-Jun-12 |
| 2012 | 43 | 01 | March 2012 | 100 | 20-Mar-12 |
| 2011 | 42 | 04 | December 2011 | 104 | 29-Dec-11 |
| 2011 | 42 | 03 | September 2011 | 108 | 29-Sep-11 |
| 2011 | 42 | 02 | June 2011 | 104 | N/A |
| 2011 | 42 | 01 | March 2011 | 140 | 23-Mar-11 |
| 2010 | 41 | 04 | December 2010 | 128 | 15-Dec-10 |
| 2010 | 41 | 03 | September 2010 | 128 | 13-Sep-10 |
| 2010 | 41 | 02 | June 2010 | 92 | 17-Jun-10 |
| 2010 | 41 | 01 | March 2010 | 132 | 17-Mar-10 |

Membership: based on fiscal year dates 1st Year 2 + Years Total Year Professional

A New Lower Bound for Deterministic Truthful Scheduling

A New Lower Bound for Deterministic Truthful Scheduling. Yiannis Giannakopoulos, Alexander Hammerl, Diogo Poças. July 7, 2020. Abstract. We study the problem of truthfully scheduling m tasks to n selfish unrelated machines, under the objective of makespan minimization, as was introduced in the seminal work of Nisan and Ronen [STOC’99]. Closing the current gap of [2.618, n] on the approximation ratio of deterministic truthful mechanisms is a notorious open problem in the field of algorithmic mechanism design. We provide the first such improvement in more than a decade, since the lower bounds of 2.414 (for n = 3) and 2.618 (for n → ∞) by Christodoulou et al. [SODA’07] and Koutsoupias and Vidali [MFCS’07], respectively. More specifically, we show that the currently best lower bound of 2.618 can be achieved even for just n = 4 machines; for n = 5 we already get the first improvement, namely 2.711; and allowing the number of machines to grow arbitrarily large we can get a lower bound of 2.755. 1 Introduction. Truthful scheduling of unrelated parallel machines is a prototypical problem in algorithmic mechanism design, introduced in the seminal paper of Nisan and Ronen [NR99] that essentially initiated this field of research. It is an extension of the classical combinatorial problem for the makespan minimization objective (see, e.g., [Vaz03, Ch. 17] or [Hal97, Sec. 1.4]), with the added twist that now machines are rational, strategic agents that would not hesitate to lie about their actual processing times for each job, if this can reduce their personal cost, i.e., their own completion time.

Game Theory, Alive Anna R

Game Theory, Alive. Anna R. Karlin, Yuval Peres. 10.1090/mbk/101. American Mathematical Society, Providence, Rhode Island. 2010 Mathematics Subject Classification. Primary 91A10, 91A12, 91A18, 91B12, 91A24, 91A43, 91A26, 91A28, 91A46, 91B26. For additional information and updates on this book, visit www.ams.org/bookpages/mbk-101. Library of Congress Cataloging-in-Publication Data. Names: Karlin, Anna R. | Peres, Y. (Yuval). Title: Game theory, alive / Anna Karlin, Yuval Peres. Description: Providence, Rhode Island : American Mathematical Society, [2016] | Includes bibliographical references and index. Identifiers: LCCN 2016038151 | ISBN 9781470419820 (alk. paper). Subjects: LCSH: Game theory. | AMS: Game theory, economics, social and behavioral sciences – Game theory – Noncooperative games. msc | Game theory, economics, social and behavioral sciences – Game theory – Cooperative games. msc | Game theory, economics, social and behavioral sciences – Game theory – Games in extensive form. msc | Game theory, economics, social and behavioral sciences – Mathematical economics – Voting theory. msc | Game theory, economics, social and behavioral sciences – Game theory – Positional games (pursuit and evasion, etc.). msc | Game theory, economics, social and behavioral sciences – Game theory – Games involving graphs. msc | Game theory, economics, social and behavioral sciences – Game theory – Rationality, learning. msc | Game theory, economics, social and behavioral sciences – Game theory – Signaling,

Download This PDF File

Gödel Prize 2014, Call for Nominations. Deadline: January 17, 2014. The Gödel Prize for outstanding papers in the area of theoretical computer science is sponsored jointly by the European Association for Theoretical Computer Science (EATCS) and the Association for Computing Machinery, Special Interest Group on Algorithms and Computation Theory (ACM-SIGACT). The award is presented annually, with the presentation taking place alternately at the International Colloquium on Automata, Languages, and Programming (ICALP) and the ACM Symposium on Theory of Computing (STOC). The 22nd Gödel Prize will be awarded at the 41st ICALP in Copenhagen in July 2014. The Prize is named in honor of Kurt Gödel in recognition of his major contributions to mathematical logic and of his interest, discovered in a letter he wrote to John von Neumann shortly before von Neumann’s death, in what has become the famous “P versus NP” question. The Prize includes an award of USD 5000. Award Committee: The winner of the Prize is selected by a committee of six members. The EATCS President and the SIGACT Chair each appoint three members to the committee, to serve staggered three-year terms. The committee is chaired alternately by representatives of EATCS and SIGACT. The 2014 Award Committee consists of Krzysztof Apt (CWI Amsterdam), Giuseppe F. Italiano (Università di Roma Tor Vergata), Joseph Mitchell (State University of New York at Stony Brook), Andrew Pitts (University of Cambridge), Daniel Spielman (Yale University), and Éva Tardos (Cornell University). Eligibility: The rules for the 2014 Prize are given below and they supersede any different interpretation of the generic rule to be found on websites of both SIGACT and EATCS.

Dynamic Selfish Routing

DYNAMIC SELFISH ROUTING. Dissertation approved by the Faculty of Mathematics, Computer Science and Natural Sciences of the Rheinisch-Westfälische Technische Hochschule Aachen for the academic degree of Doctor of Natural Sciences, submitted by Diplom-Informatiker SIMON FISCHER from Hagen. Reviewers: Universitätsprofessor Dr. Berthold Vöcking, Universitätsprofessor Dr. Petri Mähönen. Date of the oral examination: 13 June 2007. This dissertation is available online on the website of the university library. Dynamic Selfish Routing, Simon Fischer, Aachen, June 2007. Abstract. This thesis deals with dynamic, load-adaptive rerouting policies in game theoretic settings. In the Wardrop model, which forms the basis of our dynamic population model, each of an infinite number of agents injects an infinitesimal amount of flow into a network, which in turn induces latency on the edges. Each agent may choose from a set of paths and strives to minimise its sustained latency selfishly. Population states which are stable in that no agent can improve its latency by switching to another path are referred to as Wardrop equilibria. A variety of results in this model have been obtained recently. Most of these revolve around the price of anarchy, which measures the degradation of performance due to the selfish behaviour of the agents as compared to a centrally optimised solution. Most of these analyses consider the notion of Wardrop equilibria as a solution concept of selfish routing games, but disregard the question of how such an equilibrium can be attained in the first place. In fact, common game theoretic assumptions needed for the motivation of equilibria in general strategic games are not satisfied for routing games in large networks, the Internet being the prime example.

Sponsored by IEEE Computer Society Technical Committee On

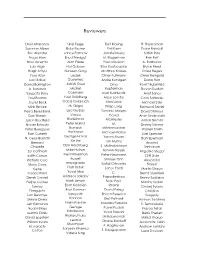

Reviewers: Dorit Aharonov, Uriel Feige, Ralf Klasing, R. Rajaraman, Susanne Albers, Eldar Fischer, Phil Klein, Dana Randall, Eric Allender, Lance Fortnow, Jon Kleinberg, Satish Rao, Noga Alon, Ehud Friedgut, M. Klugerman, Ran Raz, Nina Amenta, Alan Frieze, Pascal Koiran, A. Razborov, Lars Arge, Hal Gabow, Elias Koutsoupias, Bruce Reed, Hagit Attiya, Naveen Garg, Matthias Krause, Oded Regev, Yossi Azar, Leszek Gasieniec, Oliver Kullmann, Omer Reingold, Laci Babai, Andre Kundgen, Dana Ron, David Barrington, Ashish Goel, Orna Kupferman, Ronitt Rubinfeld, A. Barvinok, Michel Goemans, Steven Rudich, Saugata Basu, Eyal Kushilevitz, Amit Sahai, Paul Beame, Paul Goldberg, Arjen Lenstra, Cenk Sahinalp, Jozsef Beck, Oded Goldreich, Nati Linial, Michael Saks, Mihir Bellare, M. Grigni, Philip Long, Raimund Seidel, Petra Berenbrink, Leo Guibas, Dominic Mayers, David Shmoys, Dan Boneh, Vassos Hadzilacos, David McAllester, Amin Shokrollahi, Julian Bradfield, Alistair Sinclair, Nader Bshouty, Peter Hajnal, M. Mitzenmacher, Danny Sleator, Peter Buergisser, Ramesh Hariharan, Warren Smith, Ran Canetti, Michael Molloy, Joel Spencer, N. Cesa-Bianchi, George Havas, Yoram Moses, Dan Spielman, Bernard Chazelle, Xin He, Ian Munro, Aravind Srinivasan, Dan Hirschberg, S. Muthukrishnan, Ed Coffman, Mike Hutton, Ashwin Nayak, Angelika Steger, Edith Cohen, Neil Immerman, Peter Neumann, Cliff Stein, Richard Cole, Russell Impagliazzo, Shmuel Onn, Alexander Stolyar, Steve Cook, Rafail Ostrovsky, Gene Cooperman, Piotr Indyk, Janos Pach, Martin Strauss, Yuval Ishai, C. Papadimitriou, Bernd Sturmfels, Derek Corneil, Andreas Jakoby, Benny Sudakov, Felipe Cucker, Mark Jerrum, Boaz Patt-Shamir, Madhu Sudan, Sanjoy Dasgupta, Erich Kaltofen, Ondrej Sykora, Ravi Kannan, David Peleg, Christian Szegedy, Luc Devroye, Sampath Kannan, Enoch Peserico, Shlomi Dolev, Erez Petrank, Amnon Ta-Shma, Jeff Edmonds, Haim Kaplan, Maurizio Pizzonia, E.